A performance profile mystery: The cost of Stopwatch

Measuring the length of time that a particular piece of code takes is a surprising challenging task. There are two aspects to this, the first is how do you ensure that the cost of getting the start and end times won’t interfere with the work you are doing. The second is how to actually get the time (potentially many times a second) in as efficient way as possible.

To give some context, Andrey Akinshin does a great overview of how the Stopwatch class works in C#. On Linux, that is basically calling to the clock_gettime system call, except that this is not a system call. That is actually a piece of code that the Kernel sticks inside your process that will then integrate with other aspects of the Kernel to optimize this. The idea is that this system call is so frequent that you cannot pay the cost of the Kernel mode transition. There is a good coverage of this here.

In short, that is a very well-known problem and quite a lot of brainpower has been dedicated to solving it. And then we reached this situation:

What you are seeing here is us testing the indexing process of RavenDB under the profiler. This is indexing roughly 100M documents, and according to the profiler, we are spending 15% of our time gathering metrics?

The StatsScope.Start() method simply calls Stopwatch.Start(), so we are basically looking at a profiler output that says that Stopwatch is accounting for 15% of our runtime?

Sorry, I don’t believe that. I mean, it is possible, but it seems far-fetched.

In order to test this, I wrote a very simple program, which will generate 100K integers and test whether they are prime or not. I’m doing that to test compute-bound work, basically, and testing calling Start() and Stop() either across the whole loop or in each iteration.

I run that a few times and I’m getting:

- Windows: 311 ms with Stopwatch per iteration and 312 ms without

- Linux: 450 ms with Stopwatch per iteration and 455 ms without

On Linux, there is about 5ms overhead if we use a per iteration stopwatch, on Windows, it is either the same cost or slightly cheaper with per iteration stopwatch.

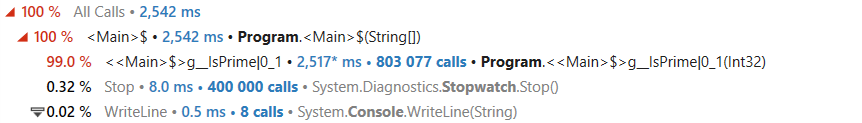

Here is the profiler output on Windows:

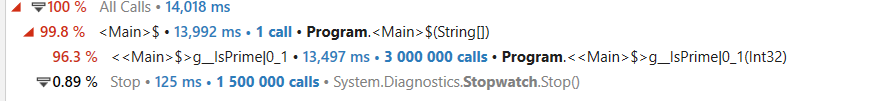

And on Linux:

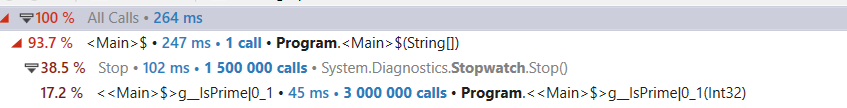

Now, that is what happens when we are doing a significant amount of work, what happens if the amount of work is negligible? I made the IsPrime() method very cheap, and I got:

So that is a good indication that this isn’t free, but still…

Comparing the costs, it is utterly ridiculous that the profiler says that so much time is spent in those methods.

Another aspect here may be the issue of the profiler impact itself. There are differences between using Tracing and Sampling methods, for example.

I don’t have an answer, just a lot of very curious questions.

Comments

No clue if this is it, but two possible guesses: * possibly TieredPGO moved code around so that it looks like it's in Stopwatch methods when it's actually other code * I've noticed that some profilers will assign prologue code of a function to the first line of the function, so it looks like the first line (which is starting the

StatsScope) is doing a lot, when it's actually the prologue that's doing a lot, at least relatively. Similarly, epilogue code can end up as being counted as the last line.Possibly one or both together is confusing the profiler into assigning blame to the wrong method.

Is it tracing?

Stuart,

I agree, there is absolutely something funky going on here.

The fact that we aren't seeing that in

Tracingmode, only inSamplingalso lends credence to this being a Profiler issue rather than a real problem.Mario,

We observed this with

Sampling, but not withTracing.Isn't it because the sampling MUST do the sampling on a safe location? And a pseudo syscall is one such thing, so you end up with an overrepresentation for that reason.

Dennis,

I guess that is possible. We are using a lot of unsafe call, etc. Which may have an impact.

Did you try a different profiler like perf, or did you run strace to count the number of system calls? Perhaps some things are disabled when running under the profiler like the mentioned kernel optimization?

This might be due to clock/timer interrupt interval being 15ms by default to save power, and this clock interrupt is source of all time inside OS

Adam,

I think you are confusing the timer interval vs. the high frequency timer. Those are actually different from one another.

Comment preview