The subtleties of proper B+Tree implementation

I mentioned earlier that B+Trees are a gnarly beast to implement properly. On the face of it, this is a really strange statement, because they are a pretty simple data structure. What is so complex about the implementation? You have a fixed size page, you add to it until it is full, then you split the page, and you are done. What’s the hassle?

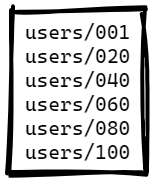

Here is a simple scenario for page splits, the following page is completely full. We cannot fit another entry there:

Now, if we try to add another item to the tree, we’ll need to split the page, and the result will be something like this (we add an entry with a key: users/050):

How did we split the page? The code for that is really simple:

As you can see, since the data is sorted, we can simply take the last half of the entries from the source, copy them to the new page and call it a day. This is simple, effective, and will usually work just fine. The key word here is usually.

Given a B+Tree that uses variable size keys, with a page size of 4KB and a maximum size of 1 KB for the keys. On the face of it, this looks like a pretty good setup. If we split the page, we can be sure that we’ll have enough space to accommodate any valid key, right? Well, just as long as the data distribution makes sense. It often does not. Let’s talk about a concrete scenario, shall we? We store in the B+Tree a list of public keys.

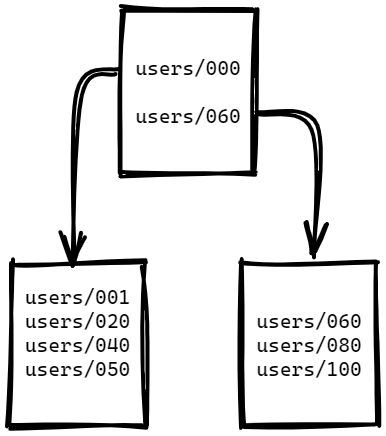

This looks like the image below, where we have a single page with 16 entries and 3,938 bytes in use, and 158 bytes that are free. Take a look at the data for a moment, and you’ll notice some interesting patterns.

The data is divided into two distinct types, EdDSA keys and RSA keys. Because they are prefixed with their type, all the EdDSA keys are first on the page, and the RSA keys are last. There is a big size difference between the two types of keys. And that turns out to be a real problem for us.

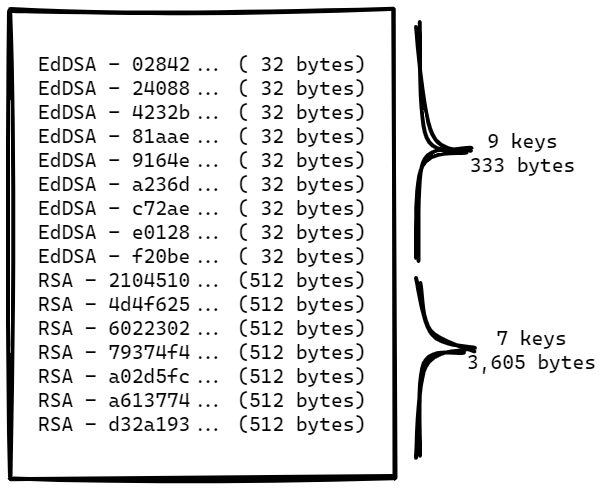

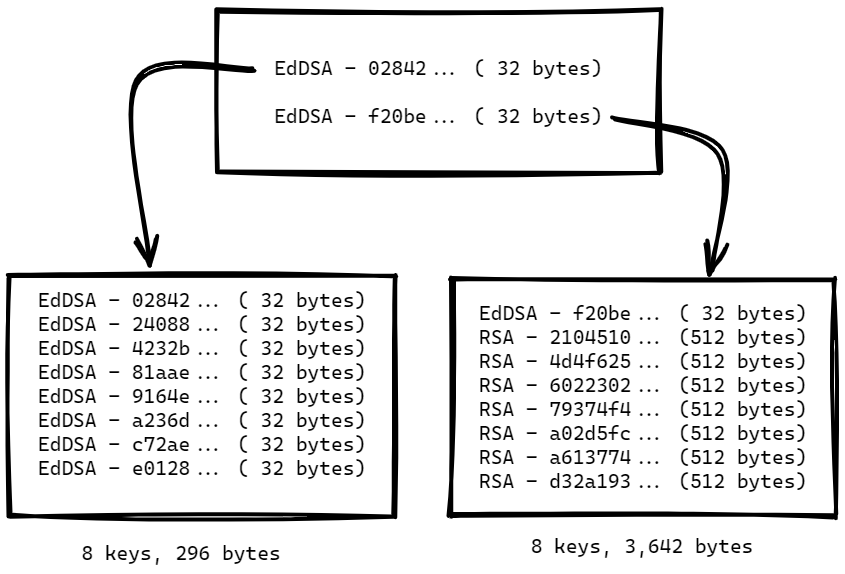

Consider what will happen when we want to insert a new key to this page. We still have room to a few more EdDSA keys, so that isn’t really that interesting, but what happens when we want to insert a new RSA key? There is not enough room here, so we split the page. Using the algorithm above, we get the following tree structure post split:

Remember, we need to add an RSA key, so we are now going to go to the bottom right page and try to add the value. But there is not enough room to add a bit more than 512 bytes to the page, is there?

What happens next depends on the exact implementation. It is possible that you’ll get an error, or another split, or the tree will attempt to proceed and do something completely bizarre.

The key here (pun intended) is that even though the situation looks relatively simple, a perfectly reasonable choice can hide a pretty subtle bug for a very long time. It is only when you hit the exact problematic circumstances that you’ll run into problems.

This has been a fairly simple problem, but there are many such edge cases that may be hiding in the weeds of B+Tree implementations. that is one of the reasons that working with production data is such a big issue. Real world data is messy, it has unpredictable patterns and stuff that you’ll likely never think of. It is also the best way I have found to smoke out those details.

Comments

What solution did you pick? Split nodes so that new nodes have roughly equal amount of used space instead of an equal number of keys? Or split by number of keys, and do it again if necessary until you have enough room to do the insert?

Dathan,

Instead of splitting the page by key blindly (which led to this unbalanced size), I split the key by size. Read the data from the page until we had half of the size moved

What if you know ahead that the size of the next key(s) to be saved are greater than the split page, even splitting it by key size? In such situation splitting it in different sizes would avoid a new recursive split. Could that happen?

Cassion,

That cannot happen.

Let's see why not.

Why a fixed size page? There are databases and b-trees with a variable node size. Nothing particularly wrong with fixed sizes, this is how it's usually done. And it works the way it does. Fixing the size commits you to a lot of things you don't really want to commit to, and gets you in trouble with long keys, or, as you show here, size mismatched keys. Fixing the size has many merits, and many demerits.

Ryan,

Separate page & node terminology - they aren't the same. You need fixed size pages because you cannot insert data into the middle of a file. It also makes buffer management really hard.

In almost all cases, you have a fixed size page for the whole database. Note that a node is typically a value inside a page, so can be variable size.

I don't know what you mean by page and node, you haven't said. I assume the node is a B-tree node and the page is... something pertaining the file / space manager. Which may or may not match the page of the OS / file system / hardware, usually 4K.

You are correct, the space management becomes difficult and possibly suboptimal without a fixed page size. But it's just that, more difficult, not impossible.

And yes, there are databases that don't fix a page size. For various reasons. Like compression.

The B-tree node size is itself variable with variable size items. Usually it's variable in items count, but it can be variable in byte size.

The LMDB which you know very well started with code from an "append only" B-tree. A B-tree that only writes at the end of the file (and never rewrites anything). Until a compaction occurs -- case in which a new file is written.

You can see how in this case the buffer management has no need for a fixed page size and it is in fact very simple.

Ryan, Page is a fixed size section of the file. Typically 4K, as you noted.A node is typically a particular value inside a B+Tree. (composed of key & value).A B+Tree is composed of fixed size pages, and is stored multiple nodes in a single page. The structure of a B+Tree is very closely tied to the page, not something related, tied to it. Note that compression, for example, doesn't mean that you don't have fixed page size. You absolutely have that. For example, RavenDB has compressed B+Tree, with fixed size pages. MySQL implements compression using 16KB pages (IIRC) and punch holes in the file to release the free space. That is still fixed size pages. Compression doesn't change the page size, only how many items you can pack per page. I'm not aware of any B+Tree implementation that isn't based on fixed page sizes.

Ryan,

LMDB uses fixed size pages, reference: https://github.com/LMDB/lmdb/blob/mdb.master/libraries/liblmdb/lmdb.h#L491

LMDB also doesn't do compaction at all.

And it doesn't do buffer management since it uses a memory map file, the code for that is in the OS

You've misread me.

I haven't said anything about LMDB not using fixed page sizes. Nor about LMDB and compaction.

You did not pay attention.

What I've said is LMDB took the code (that is, it started with the code) of an "append only" B-tree. One that was doing compaction.

LMDB does not do compaction.

As per their description, LMDB is: "Derived From: This code is derived from btree.c written by Martin Hedenfalk."

See this for more mentions of the append only B-tree:

http://www.lmdb.tech/media/20141120-BuildStuff-Lightning.pdf

And this for b-trees with variable node size:

https://dsxxi.home.blog/2023/01/24/database-b-trees/ https://dsxxi.home.blog/2023/01/25/database-b-trees-ii/

Comment preview