My new interview task: Stop the flow

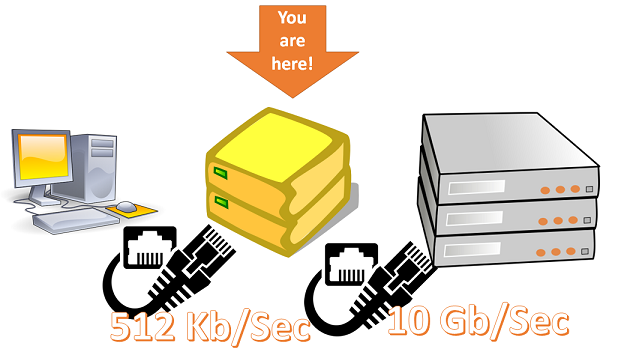

We run into an interesting scenario at work that I thought would make for a pretty good interview task. Consider a server that needs to proxy a request from the outside world to an internal service, something like this:

That isn’t that interesting. The interesting bit is that the network between the internal server and the proxy is running at 10Gb/sec and the external network is limited to 512Kb/sec.

Furthermore, the internal server expects the other side to just… take the load. It will blast the other side with as much data as it can, and if you can’t handle that, will cut the connection. What this means is that for small requests, the proxy can successfully pass the data to the external server, but for larger ones, it is unable to read the data quickly enough to do so and the internal server will disconnect from it.

It is the responsibility of the proxy to manage that scenario. That is the background for this task, practically speaking, this means that you have the following code, which works if the size is 8MB but fails if it is 64MB.

We have the SlowClientStream and the FastServerStream – which means that we are able to focus completely on the task at hand (ignoring networks, etc).

The requirement is to pass a 64 MB of data between those two streams (which have conflicting requirements)

- The FastServerStream requires that you’ll read from it in a rate of about 31Kb / sec.

- The SlowClientStream, on the other hand, will accept data at a maximum rate of about 30Kb/sec (but is variable across time).

You may not change the implementation of either stream (but may add behavior in separate classes).

You may not read the entire response from the server before sending to the client.

There is a memory limit of 32 MB on the maximum amount of memory you may use in the program.

How would you go about solving this?

Comments

While I assume not the intent, it seems that just upping the buffer to 9MB results in just shy of the 32MB memory limit and is large enough to deliver the 64MB payload in the necessary time.

David,

If you do that, I'll just double the size to 128MB. :-) You need a generic solution, not just for that

You could do this with a non-persistent memory-mapped file, which would allow the proxy to run within the memory constraints. This only requires changing the proxy implementation and allows you to buffer the difference in read/write speed up until however much disk space you have/specified.

If you're not allowed to use any extra storage and the proxy is meant to run purely from RAM, that should be specified in the requirements.

The actual requirements are a bit lacking. It is very easy to make a solution that works in the specific scenario. Just read up to the memory limit and write it out as needed. I would argue that any decent programmer SHOULD implement this first. If however the requirements are specified as specific bandwidth requirements for short amounts of time it requires a totally different solution. And this difference in stated requirements vs actual requirements is what leads a great many solutions to be over engineered or over featured because you think "what if".

After spending two hours on this I come to the conclusion that trying to store anything but a tiny read buffer / write buffer in memory is a fool's game :-) Just write the incoming to a temp file and read from the file at the same time in a separate thread and send it to client. Upstream server can finish your request fast and do something else. Basically what nginx does above a certain threshold.

The closest I got with a memory only approach was slowing down the read from upstream to just above the failure point to minimize buffers needed to keep, but this proved to be unreliable and more so in real world I think.

Laurance,

Non persistent memory mapped file would be complex, you need to grow the buffer on the fly.

Dennis,

You cannot actually just read to memory and then stop. The sender side will timeout if you do that.

Gerdus,

Yes, a temporary file would work. But then the next wrinkle is that you need to deal with:

In that case I would store read segments in separate files and put the filenames in a bounded queue/channel sized for maximum speed delta we allow. For very large deltas this is basically an attack (flood,Slow Loris). After each segment file is written to destination, it can be deleted. It would still be the same file I/O but better spread out over time.

https://gist.github.com/gerdus/90c83d8162235a64f093d5dbbb7a7348

This feels a bit hacky, but can we make the request to the backend more than once? If that's possible, then we can have something like a ring buffer where the server reader fills it up, then re-makes the request to the server, throwing everything away until it has reached the point it ended at before, then filling the buffer starting with the first location the client writer has already sent, and filling until it catches up with the client writer. The client writer just goes around and around the buffer, reading until the whole thing has been sent. There's probably an optimization where we throttle the read by some amount to reduce the number of times we need to do the restart/throwaway trick, as well as waiting until there's some maximum amount of buffer available for us to fill before reading from the server again, while still ensuring that the client writer is always writing. Sounds like a linear algebra problem maybe.

Heh, if we can assume the server rate is hugely greater than the client rate, we can just chain some readers together, starting each one at an interval such that it will be able to read-and-throwaway up to the point where the current reader got killed for being too slow, then hand the newly caught-up reader off to the client writer and starting a new reader some time after that, and so on. It's pretty similar to my other answer, but you don't need the buffer, just some math. But if you predict wrongly, you're stuck with nothing to write until you catch up another reader, so it's less resilient with a variable downstream rate.

Gerdus,

Initially when I read your code, I thought that it won't work if the destination is faster. You'll reach the end of the file and then assume no more data. But it is handled at the expense of having separate file for each read.

This works given that you are reading from an in memory source here. From the network, that would be a scenario where you have a LOT of very small files.

But it is a great solution in general, I liked how simple it turned out to be.

Steve,

That is a solution, but it isn't valid here. Consider the answer for an expensive query, you already got the result, and now you have to recompute it each time

Steve,

You want to keep a single TCP connection. Otherwise you'll need to re-run the query

Comment preview