High performance .NET: Building a Redis Clone–Architecture

My previous attempts to write a Redis clone were done in about as straightforward a way as possible. Open a socket to listen on, have a separate Task for each client that reads from the network, parse the command and execute it. There are some smarts around supporting pipelining, but that is pretty much it.

Let’s take a step back and build ourselves a Redis clone that matches the actual Redis architecture more closely. In order to do that, I’ll need to do everything in a single thread. That is… surprisingly hard to do in C#. There are no high-level APIs for doing the kind of work that Redis is doing. To be rather more exact, there is the Socket.Select() method, but using it means building everything else on top of it (meaning that we have to handle buffering, string handling, etc.).

Given that this is a way station to the final proposed architecture, I decided to skip this entirely. Instead, I’m going to focus first on removing the major bottleneck in the system, the ConcurrentDictionary.

The profiler results show that the biggest cost we have here is the scalability of the concurrent dictionary. Even when we tried to shard it across 1024 locks, it still took almost 50% of our runtime. The question is, can we do better? One good option that we can try is to shard things directly. Instead of using a single concurrent dictionary, we will split it into separate dictionaries, each of which is accessed by a single thread only, so no synchronization is needed.

The idea goes like this: we’ll have the usual read & write for the clients. But instead of processing the command inline, we’ll route it to a dedicated thread (with its own dictionary) to do the work. I set it up so we’ll have 10 such threads (assuming they will reside on individual cores and that I’ll be able to process all I/O on the other 6 cores).
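To make the routing idea concrete, here is a minimal sketch of it. This is not the code from the post: `ShardWorker` and `ShardedStore` are hypothetical names, and using a `BlockingCollection` as the queue is my assumption. It also routes by key hash rather than by client, which is what the sharding was meant to do (see the comments at the end of this post).

```csharp
#nullable enable
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical sketch: one dedicated thread per shard, each owning a plain Dictionary.
public sealed class ShardWorker
{
    // Only the worker thread ever touches this dictionary, so no locking is needed.
    private readonly Dictionary<string, string> _data = new();

    // Assumption: a BlockingCollection serves as the work queue for this shard.
    private readonly BlockingCollection<Action<Dictionary<string, string>>> _queue = new();
    private readonly Thread _thread;

    public ShardWorker(int id)
    {
        _thread = new Thread(Run) { IsBackground = true, Name = $"shard-{id}" };
        _thread.Start();
    }

    private void Run()
    {
        // Blocks while the queue is empty; the loop ends after CompleteAdding().
        foreach (var work in _queue.GetConsumingEnumerable())
            work(_data);
    }

    public Task<string?> Get(string key)
    {
        var tcs = new TaskCompletionSource<string?>(TaskCreationOptions.RunContinuationsAsynchronously);
        _queue.Add(d => tcs.SetResult(d.TryGetValue(key, out var value) ? value : null));
        return tcs.Task;
    }

    public Task Set(string key, string value)
    {
        var tcs = new TaskCompletionSource<bool>(TaskCreationOptions.RunContinuationsAsynchronously);
        _queue.Add(d => { d[key] = value; tcs.SetResult(true); });
        return tcs.Task;
    }

    public void Stop()
    {
        _queue.CompleteAdding();
        _thread.Join();
    }
}

public sealed class ShardedStore
{
    private readonly ShardWorker[] _workers;

    public ShardedStore(int shardCount)
    {
        _workers = new ShardWorker[shardCount];
        for (var i = 0; i < shardCount; i++)
            _workers[i] = new ShardWorker(i);
    }

    // Route by key, so every client reaches the same dictionary for a given key.
    private ShardWorker For(string key) =>
        _workers[(uint)key.GetHashCode() % (uint)_workers.Length];

    public Task<string?> Get(string key) => For(key).Get(key);
    public Task Set(string key, string value) => For(key).Set(key, value);

    public void Stop()
    {
        foreach (var worker in _workers)
            worker.Stop();
    }
}
```

The network side would then `await store.Set(key, value)` or `await store.Get(key)` after parsing a command, so the I/O code never touches a dictionary directly. The price is a queue hop and a `TaskCompletionSource` allocation per command.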

Here are the results after the change:

| Type | Ops/sec | Hits/sec | Misses/sec | Avg. Latency | p50 Latency | p99 Latency | p99.9 Latency | KB/sec |
|---|---|---|---|---|---|---|---|---|
| Sets | 113703.56 | --- | --- | 3.06261 | 0.95900 | 25.59900 | 39.93500 | 33743.38 |
| Gets | 1137015.79 | 19211.78 | 1117804.01 | 3.06109 | 0.95900 | 25.59900 | 39.93500 | 49150.52 |
| Waits | 0.00 | --- | --- | --- | --- | --- | --- | --- |
| Totals | 1250719.35 | 19211.78 | 1117804.01 | 3.06122 | 0.95900 | 25.59900 | 39.93500 | 82893.90 |

Note that we are now at 1.25 million ops/sec, almost 25% better than the previous run.

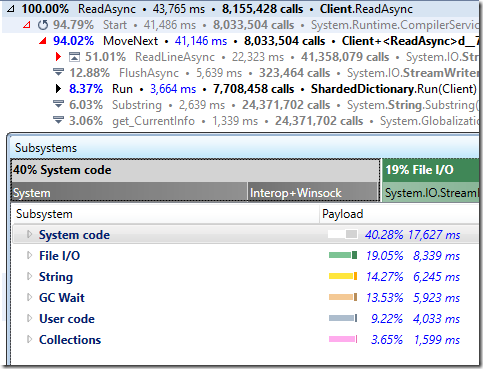

Here are some profiler results of running this code:

So in this case, we are spending a lot of time doing string processing of various kinds, waiting for GC (almost 30%). The costs for collections went down a lot (but we’ll see that it shifted somewhat).

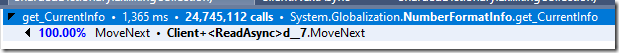

There are some other things that pop to mind, take a look here:

That is a surprising cost for a “simple” property lookup. The substrings calls are also expensive, over 6% of the overall runtime.

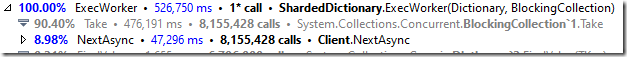

When looking at other parts of the system, we have:

This is really interesting, because we spend a lot of time just waiting for items in the queue. We could probably do more things in there rather than just wait.

I also tried various other concurrency values. With a single ExecWorker running, we have 404,187 ops/sec and with two of them we are at 715,157 ops/sec. When running with four threads dedicated to processing the requests, we are at 1,060,622.24 ops/sec.

So it is obvious that we need to rethink this approach for concurrency. We aren’t able to properly scale to bigger values.
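To put numbers on that, here is a small sketch that computes the speedup and per-thread efficiency from the throughput figures quoted in this post. The `Scaling` class is purely illustrative, and it assumes the four runs are directly comparable (same machine, same benchmark settings).

```csharp
using System;

public static class Scaling
{
    // Throughput figures (ops/sec) quoted in this post, by number of worker threads.
    public static readonly (int Workers, double OpsPerSec)[] Runs =
    {
        (1, 404_187),
        (2, 715_157),
        (4, 1_060_622.24),
        (10, 1_250_719.35),
    };

    // Speedup relative to the single-worker run.
    public static double Speedup(double opsPerSec) => opsPerSec / Runs[0].OpsPerSec;

    // Fraction of ideal linear scaling actually achieved.
    public static double Efficiency(int workers, double opsPerSec) =>
        Speedup(opsPerSec) / workers;

    public static void Print()
    {
        foreach (var (workers, ops) in Runs)
        {
            Console.WriteLine(
                $"{workers,2} workers: {Speedup(ops):F2}x speedup, " +
                $"{Efficiency(workers, ops):P0} of linear scaling");
        }
    }
}
```

Two workers give roughly a 1.77x speedup, four give about 2.62x, and ten give only about 3.09x. That is around 31% of what linear scaling would deliver, so most of the added threads are not pulling their weight.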

Note that this approach also does not take advantage of pipelining. We process each command separately from all else. My next move is to add support for pipelining with this approach and measure that impact.

On the one hand, we are still at around the million mark. On the other hand, I spent very little time on this (and added very little complexity), so getting an extra 250,000 ops/second from that level of change is encouraging. The profiler is also telling us that there are more things that we can do, but I want to focus on fixing the approach we take first.

Here is the current state of the code, so you can compare it to the original one.

More posts in "High performance .NET" series:

- (19 Jul 2022) Building a Redis Clone–Analysis II

- (27 Jun 2022) Building a Redis Clone – skipping strings

- (20 Jun 2022) Building a Redis Clone– the wrong optimization path

- (13 Jun 2022) Building a Redis Clone–separation of computation & I/O

- (10 Jun 2022) Building a Redis Clone–Architecture

- (09 Jun 2022) Building a Redis Clone–Analysis

- (08 Jun 2022) Building a Redis Clone–naively

Comments

By sharding the dictionaries, are you not separating the clients from each other? They might not see the inserted values of the other clients?

// Ryan

Ryan, Yeah, there is an issue in the code, the sharding was meant to be on a per key basis, not per client basis :-(
