Criticizing Hare language approach for generic data structures

I run into this blog post about the Hare language and its approach to generic data structures. From the blog post, we have this quote:

…it’s likely that the application of a higher-level data structure will provide a meaningful impact to your program. Instead of providing such data structures in the standard library (or even, through generics, in third-party libraries), Hare leaves this work to you.

And this one, at the end:

Hare doesn’t provide us with a generic hash map, but we were able to build one ourselves in just a few lines of code. A hash map is one of the simpler data structures we could have shown here, but even for more complex ones, you’ll generally find that it’s not too difficult to implement them yourself in Hare.

I… don’t really know where to begin. The relevant code is here, by the way, and you can see how this works.

A hash table is not a simple data structure, let’s start with that. It is the subject of much research and a ton of effort was spent on optimizing them. They are not the sort of things that you roll out yourself. To give some context, here are some talks from CppCon that talks about this:

-

Abseil's Open Source Hashtables: 2 Years In - Matt Kulukundis - CppCon 2019

-

C++Now 2018: You Can Do Better than std::unordered_map: New Improvements to Hash Table Performance

-

CppCon 2017: Matt Kulukundis “Designing a Fast, Efficient, Cache-friendly Hash Table, Step by Step”

-

CppCon 2017 Designing a Fast, Efficient, Cache friendly Hash Table, Step by Step

So in a quick search, we can see that there is a lot to discuss here. For that matter, here are some benchmark results, which compare:

Why are there so many of those?

Well, because that matters. Each of those implementations is optimizing for something specific in different ways. There isn’t just a hash table algorithm, the details matter. A lot.

The fact that Hare believes that a Hashtable or a map does not have to have a solution is pure insanity in my opinion. Let’s look at the example that is provided in the post, shall we? You can see the raw code here.

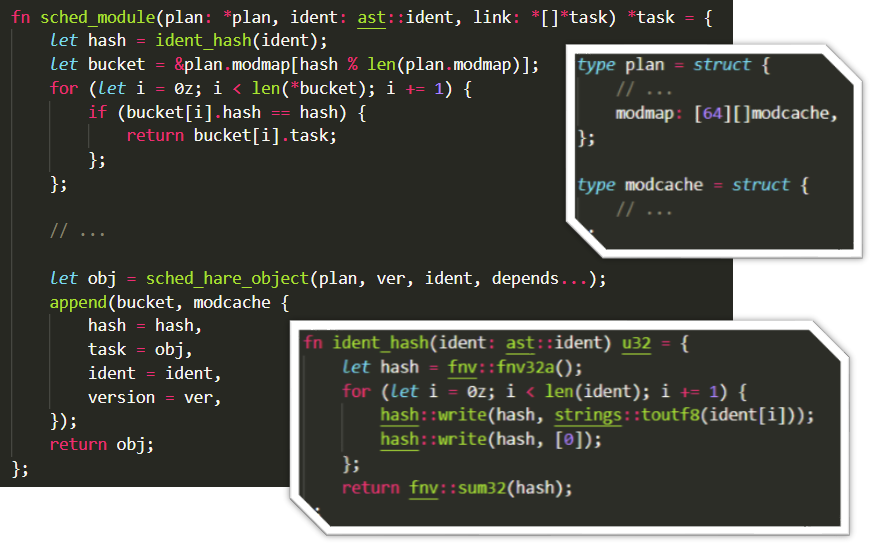

Let’s take a look to understand what is going on here. There is a static array with 64 buckets that are used as the module cache. In each one of those buckets, you have an array of entries that match that bucket. The hash key here is the FNV32 of the AST node in question.

Let’s see how many things just pop to mind immediately in here as issues. Let’s start with the fact that this is a statically sized hash table, which may be appropriate for this scenario, but won’t fit many others. If we need to handle growing the underlying array, the level of complexity will shoot up significantly.

The code is also not handling deletes (another complicated topic), and the hash collision mode is chaining (via growing the array). In other words, for many other scenarios, you’ll need to roll your own hash table (and see above about the complexities involved).

But let’s take it a bit further. The code is using FNV to compute the hash key. It is also making an assumption here, that the keys will never collide. Let’s see how well that holds up, shall we?

In other words, it took me a few minutes and under 130 ms to find a hash collision for this scenario. The code above does not handle it. For example, here are a couple of collisions:

- “intoxicated” and “tonsillectomy's”

- “Helvetius2” and “Rochester0”

Those are going to be counted as the same value by the Hare code above. Fixing this requires non trivial amount of code changes.

For that matter, let’s talk for a second about the manner in which I found it. If I were trying to write the same code in Hare, what would I have to do?

Well, the answer to that is to write a lot of code, of course. Because I would have to re-implement a hash table from scratch.

And the design of the Hare language doesn’t even allow me to provide that as a library. I have to fall down to code generation at best.

These sorts of things matter. In C, you don’t have a hash table, and the most natural data structure is some form of a linked list. So that gets used a lot. You can bring in a hash table, of course, but adapting it for use is non trivial, so they are used a lot less often. Try writing the same in Hare, and then compare the cost in time to run (and time to execute).

In modern languages, the inclusion of a hash table in the base language is a basic requirement. Languages like C++, Rust or Zig have that in the base class library and have the facilities to allow you to write your own generic data structure. That means that good data structures exist. That it make sense to spend the time writing them because they’ll be broadly applicable. Languages like C# or Java took this further and make sure that all objects have GetHashCode() and Equals() methods, specifically to support the hash table scenario. It is that important.

Even Go, before it had generics, had a dedicated syntax carved out in the language to allow you to use maps natively. And now that Go has generics, that is actually far faster.

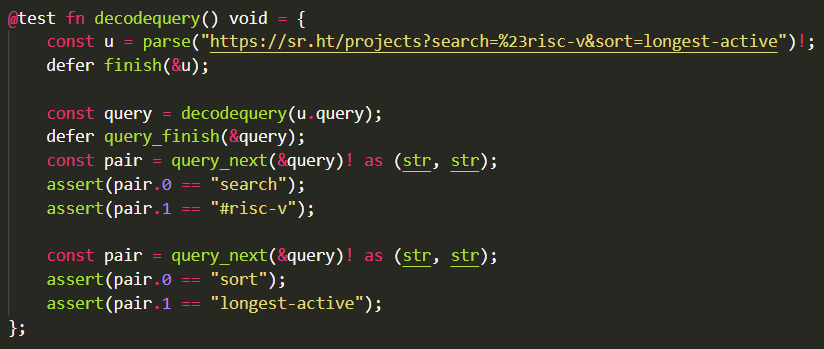

In many systems, a hash table is one of the core data structures. It is used everywhere, and it lack make the ecosystem a lot more painful. Take a look at how Hare handles query string parameters:

I mean, I guess it would be nice to have a way to do streaming on query strings? But the most natural way to do that is to use a hash table directly. The same applies for things like headers in web requests, how would you even model that in Hare?

I couldn’t disagree more with the premise of the original post. A hashtable is not something that you should punt, the consequences for your users are dire.

Comments

It is made clear that Hare is not a general purpose language. The finer points of a hash table aren't lost on a systems programmer.

David,

What makes you think that a systems programming language isn't a general purpose one? C has the same issues as Hare, but has the benefit of being far older.

Seems really strange to eschew hashmaps, but then have this in the roadmap: "Graphics (image support, pixel format conversions, vector drawing)"

Oren, In my view a feature rich language can be useful for many purposes whereas a language with low level control in a constrained environment is mostly useful for building those features.

Yesterday was checking Hare (not impressive) and Today's your article. I was playing last week with some langs to build my map data structure (very first steps) in Rust, Zig, Odin. So far, Odin is something I want to proceed with a bit further. Good balance of low‐level control, sensible defaults and great ergonomics (Imho). Would be interesting to hear your thoughts on the language.

Disregard my comment, found your blog post on Odin https://ayende.com/blog/194466-A/looking-into-odin-and-zig-my-notes

Kind of funny to me how you're linking to cppcon videos, I bet Drew would never in a million years watch those as this is his take on c++:

C++

Pros: none

Cons: ill-defined, far too big, Object Oriented Programming, loads of baggage, ecosystem that buys into its crap, enjoyed by bad programmers.

https://drewdevault.com/2019/09/08/Enough-to-decide.html

Language designers love languages, not libraries. C++ initially also came without the STL which was considered a big mistake. I assume it is too much work to come up with everything in advance when too few people are working on it. Peoples expectations on a new production grade language are much higher, because so many good languages already exist.

lmao,

Well... that is the easiest place to show the research done on hash tables, where it is actually practical usages.

Maybe Hare need time to be purified.

Comment preview