Data ownership in a distributed system

When you have a distributed system, one of the key issues that you have to deal with is the notion of data ownership. The problem is that it can be a pretty hard issue to explain properly, given the required background material. I recently came up with an explanation for the issue that I think is both clear and shows the problem in a way that doesn’t require a lot of prerequisites.

First, let’s consider two types of distributed systems:

- A distributed system that is strongly consistent – such a system requires coordination between at least a majority of the nodes in the system to do anything meaningful. Such systems are inherently limited in their ability to scale out, since the number of nodes that you need for a consensus will become unrealistic quite quickly.

- A distributed system that is eventually consistent – such a system allows individual components to perform operations on their own, which will be replicated to the other nodes in due time. Such systems are easier to scale, but there is no true global state here.

A strongly consistent system with ten nodes requires each operation to reach at least 6 members before it can proceed. With 100 nodes, you’ll need 51 members to act, and so on. There is a limit to how many nodes you can add to such a system before it becomes untenable. The advantage here, of course, is that you have a globally consistent state.

An eventually consistent system has no such limit and can grow without bound. The downside is that different parts of the system can make decisions that each make sense individually but conflict when taken together. The classic example is the notion of a unique username: the same new username can be added in two distinct portions of the system and stored in both, but we’ll later find out that we have a duplicate.
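The quorum sizes quoted above follow from the usual strict-majority rule. Here is a minimal sketch of that arithmetic; the `majority` helper is a name made up for illustration and is not tied to any particular consensus library.

```python
def majority(nodes: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    return nodes // 2 + 1


for n in (3, 5, 10, 100):
    print(f"{n} nodes -> {majority(n)} must take part in each operation")
```

The required count grows linearly with the cluster, which is why adding nodes to a single consensus group makes each operation more expensive rather than cheaper.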

A strongly consistent system will prevent that, of course, but has its limitations. A common way to handle that is to split the strongly consistent system in some manner. For example, we may have 100 servers, but we split them into 20 groups of 5 servers each. Now each username belongs to one of those groups. We can now have our cake and eat it too: we have 100 servers in the system, but we can make strongly consistent operations with a majority of 3 nodes out of 5 for each username. That is great, unless you need to do a strongly consistent operation on two usernames that belong to different groups.
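One possible way to decide which group a username belongs to is to hash it. The sketch below assumes hash-based placement, which the text above does not prescribe; `group_for`, `GROUP_COUNT` and `GROUP_SIZE` are hypothetical names chosen for the example.

```python
import hashlib

GROUP_COUNT = 20  # 100 servers split into 20 groups
GROUP_SIZE = 5    # servers per group


def group_for(username: str) -> int:
    """Map a username to one of the groups, the same way on every node."""
    digest = hashlib.sha256(username.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % GROUP_COUNT


def quorum(group_size: int = GROUP_SIZE) -> int:
    """Nodes inside a single group that must agree on an operation."""
    return group_size // 2 + 1


print(quorum())  # 3
# Two usernames may well land in different groups, which is exactly the
# case where a single strongly consistent operation is no longer enough.
print(group_for("alice"), group_for("bob"))
```

A stable hash is used here instead of Python's built-in `hash()` because the latter is randomized per process for strings, so two nodes would disagree on the owner.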

I mentioned that distributed systems can be tough, right? And that you may need some background to understand how to solve that.

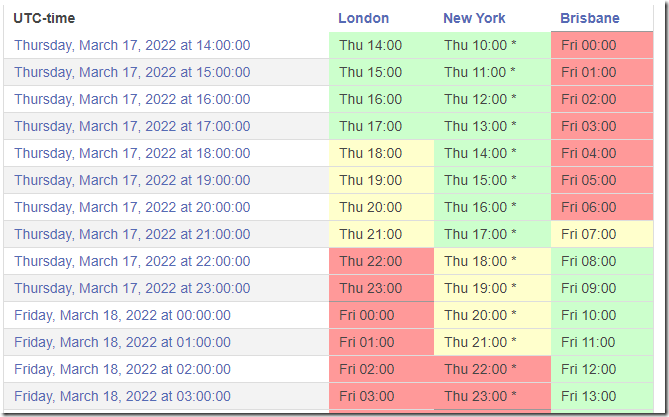

Instead of trying to treat all the data in the same manner, we can define data ownership rules. Let’s consider a real world example, we have a company that has three branches, in London, New York City and Brisbane. The company needs to issue invoices to customers and it has a requirement that the invoice numbers will be consecutive numbers. I used World Clock Planner to pull the intersection of availability of those offices, which you can see below:

Given the requirement for consecutive numbers, what do we know?

Each time that we need to generate a new invoice number, each office will need to coordinate with at least one other office (a 2 out of 3 majority). For London, that is easy: there are swaths of time where London and New York business hours overlap.

For Brisbane, not so much. Maybe it would work if someone is staying late in the New York office, but Brisbane will not be able to issue any invoices on Friday past 11 AM. I think you’ll agree that being able to issue an invoice at noon on a Friday is not an unreasonable requirement.

The problem here is that we are dealing with a requirement that we cannot fulfill. We cannot issue globally consecutive numbers for invoices with this sort of setup.

I’m using business hours for availability here, but the exact same problem occurs if we are using servers located around the world. If we have to have a consensus, then the cost of getting it will escalate significantly as the system becomes more distributed.

What can we do, then? We can change the requirement. There are two ways to do so. The first is to assign a range of numbers to each office, which they are able to allocate without needing to coordinate with anyone else. The second is to declare that the invoice numbers are local to their office and use the following scheme:

- LDN-2921

- NYC-1023

- BNE-3483

This is making the notion of data ownership explicit. Each office owns its set of invoice numbers and can generate them completely independently. Branch offices may get an invoice from another office, but it is clear that it is not something that they can generate.
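Both options can be sketched in a few lines. This is only an illustration under assumptions: the class names are made up, the starting counters reuse the sample numbers above, and the range given to New York is invented for the example.

```python
from dataclasses import dataclass


@dataclass
class PrefixedInvoices:
    """Numbers are local to one office and carry its prefix."""
    prefix: str
    next_number: int

    def issue(self) -> str:
        invoice_id = f"{self.prefix}-{self.next_number}"
        self.next_number += 1
        return invoice_id


@dataclass
class RangedInvoices:
    """The office owns a pre-assigned block from next_number to last."""
    next_number: int
    last: int

    def issue(self) -> int:
        if self.next_number > self.last:
            # The block is used up. Getting a new one needs coordination,
            # but once per block rather than once per invoice.
            raise RuntimeError("range exhausted, request a new block")
        number = self.next_number
        self.next_number += 1
        return number


london = PrefixedInvoices("LDN", 2921)
brisbane = PrefixedInvoices("BNE", 3483)
print(london.issue(), brisbane.issue())  # LDN-2921 BNE-3483

nyc_block = RangedInvoices(next_number=10_000, last=19_999)  # made-up range
print(nyc_block.issue())  # 10000
```

Neither class ever talks to another office while issuing a number, which is the whole point: the owner of the counter is the only one who changes it.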

In a distributed system, defining the data ownership rules can drastically simplify the overall architecture and complexity that you have to deal with.

As a simple example, assume that I need a particular shirt from a store. The branch that I’m currently at doesn’t have the particular shirt I need. They are able to look up inventory in other stores and direct me to them. However, they aren’t able to reserve that shirt for me.

The ownership of the shirt is in another branch, so changing the data in the local database (even if it is quickly reflected in the other store) isn’t sufficient. Consider the following sequence of events:

- Branch A is “reserving” the shirt for me on Branch B’s inventory

- At Branch B, the shirt is being sold at the same time

What do you think will be the outcome of that? And how much time and headache do you think you’ll need to resolve this sort of distributed race condition?

On the other hand, a phone call to the other store and a request to hold the shirt until I arrive is a perfect solution to the issue, isn’t it? If we are talking in terms of data ownership, we aren’t trying to modify the other store’s inventory directly. Instead we are calling them and asking them to hold that. The data ownership is respected (and if I can’t get a hold of them, it is clear that there was no reservation).
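The phone call can be modeled as a request that only the owning branch is allowed to act on. The following is a rough sketch, not a real inventory API: `Branch`, `request_hold` and `reserve_elsewhere` are hypothetical names, and `ConnectionError` merely stands in for being unable to reach the other store.

```python
class Branch:
    """A store branch that is the sole owner of its own inventory."""

    def __init__(self, name: str, stock: dict[str, int]):
        self.name = name
        self._stock = dict(stock)                # item -> units on the shelf
        self._holds: dict[str, list[str]] = {}   # item -> customers with a hold

    def available(self, item: str) -> int:
        """Read-only lookup; other branches may call this freely."""
        return self._stock.get(item, 0)

    def sell(self, item: str) -> bool:
        return self._take(item)

    def request_hold(self, item: str, customer: str) -> bool:
        """The 'phone call': the owner decides whether the hold succeeds."""
        if not self._take(item):
            return False
        self._holds.setdefault(item, []).append(customer)
        return True

    def _take(self, item: str) -> bool:
        if self._stock.get(item, 0) < 1:
            return False
        self._stock[item] -= 1
        return True


def reserve_elsewhere(owner: Branch, item: str, customer: str) -> bool:
    """Ask the owning branch for a hold; no answer means no reservation."""
    try:
        return owner.request_hold(item, customer)
    except ConnectionError:  # stands in for an unreachable branch
        return False


branch_b = Branch("B", {"shirt": 1})
print(branch_b.sell("shirt"))                      # True
print(reserve_elsewhere(branch_b, "shirt", "me"))  # False
```

Because both the sale and the hold go through Branch B, there is a single place where the two are ordered, and the caller gets a definite yes or no instead of discovering a conflict later.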

Note that in the real world it is often easier to just ignore such race conditions since they are rare and “sorry” is usually good enough, but if we are talking about building a distributed system architecture, race conditions are something that happens yesterday, today and tomorrow, but not necessarily in that order.

Dealing with them properly can be a huge hassle or a negligible cost, depending on how you set up your system. I find that proper data ownership rules can be a huge help here.
