The performance regression odyssey

_thumb_1.png) We care a lot about the performance of RavenDB.

We care a lot about the performance of RavenDB.

Aside from putting a lot of time an effort into ensuring that RavenDB uses optimal code, we also have a monitoring system in place to alert us if we can observe a performance degradation. Usually those are fairly subtle issues, but we got an alert on the following scenario. As you can see, we are seeing a big degradation of this test.

The actual test in question is doing a low level manipulation of Voron (RavenDB’s storage engine), and as such, stand at the core of our performance hotspots to watch for.

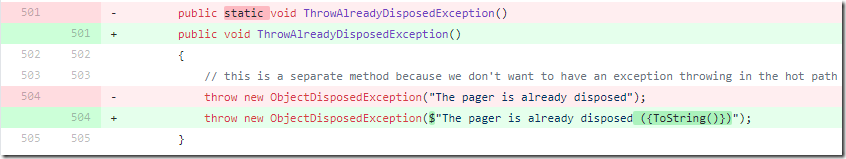

Looking at the commits around that time frame, we quickly narrow the fault down to the following changes:

A really important observation here, however, is that this method is not called in the test. So we were looking at whatever this change caused a regression in code generation. I couldn’t believe that this is the case, to be honest.

Indeed, looking at the generated assembly, there was no difference between the two versions. But something cause the performance to degrade significantly enough for this test that it raised all sorts of alarm bells.

We started looking into a lot of details about the system, the usual things like checking for thermal throttling, etc.

We struck gold on this command: sudo smartctl --all /dev/nvme0n1

Take a look at the results:

SMART overall-health self-assessment test result: FAILED! - NVM subsystem reliability has been degradedSMART/Health Information (NVMe Log 0x02, NSID 0x1) Critical Warning: 0x04 Temperature: 35 Celsius Available Spare: 100% Available Spare Threshold: 10% Percentage Used: 115% Data Units Read: 462,613,897 [236 TB] Data Units Written: 2,100,668,468 [1.07 PB] Host Read Commands: 10,355,495,581 Host Write Commands: 9,695,954,131 Controller Busy Time: 70,777

In other words, the disk is literally crying at us. This tells us that the drive has been in action for ~50 days of actual activity and that it has gone beyond is design specs.

In particular, you can see that we wrote over a petabyte of data to the disk as part of our test case executions. This is a 500GB drive, which means that we fill it to capacity over 2,000 times before we hit this issue.

Once we hit this capacity (Percentage Used is > 100%), the drive needs to do a lot more work, so we are seeing longer test times.

First time that I closed a bug by sending a PO to get new hardware, I got to admit.

Comments

Not the first time I see a ticket closed by blaming hardware. First time to see it actually being justified and not just a lazy developer ;-)

now that is really interesting. I assume the disk IOs decreased, but doesn't ravendb monitor raw IO for slow writes already?

Would it have helped to modify the value that triggers the slow write warning?

I had also recently deal with slow SSDs which caused me to write: https://github.com/Alois-xx/FileStress. This is the first tool which would allow you to check the real sustained write performance while testing over hours. The link to Seagate about the many lies of SSD performance testing is true. My tester is not trying to only measure the disk performance but also NTFS file system performance where we might run into NTFS performance issues. I had the case to write sensor data with 110 MB/s for > 30 minutes to a SSD. It turned out the vender delivered a SSD which did go from 450 MB/s write performance after 3 minutes down to 90 MB/s which was ruining the system because the data was buffered and we were running of memory. The usual disk benchmarks do not cover this and SSD Garbage collection characteristics is poorly understood as well as the effects of data entropy where the SSD controller will compress data leading to much better numbers which low entropy data.

Peter, RavenDB will alert of slow I/O if a single write takes over a second to run. That is because we need something really bad to avoid spurious warnings. In this case, the cost went by a LOT, but that went from 1 ms to 5 ms. Huge change, but not enough to trigger this.

Alois, Yes, doing sustained work like that is a PITA if you need to understand what exactly is going on in the system. Great tool, will be useful if we need smoking gun.We actually built something similar to RavenDB at one point. In the cloud, mind you, this is actually worse, you have I/O credits and the speed can go from 3K IOPS to 100 IOPS effectively instantly.

gradual performance degradation is certain like death and taxes.

Comment preview