Benchmarking RavenDB on the Raspberry PI

I recently got my hands on a Raspberry PI 400 (the one that comes in a keyboard form factor). That is an amazing idea, and it makes the Raspberry a lot more approachable for consumer use cases.

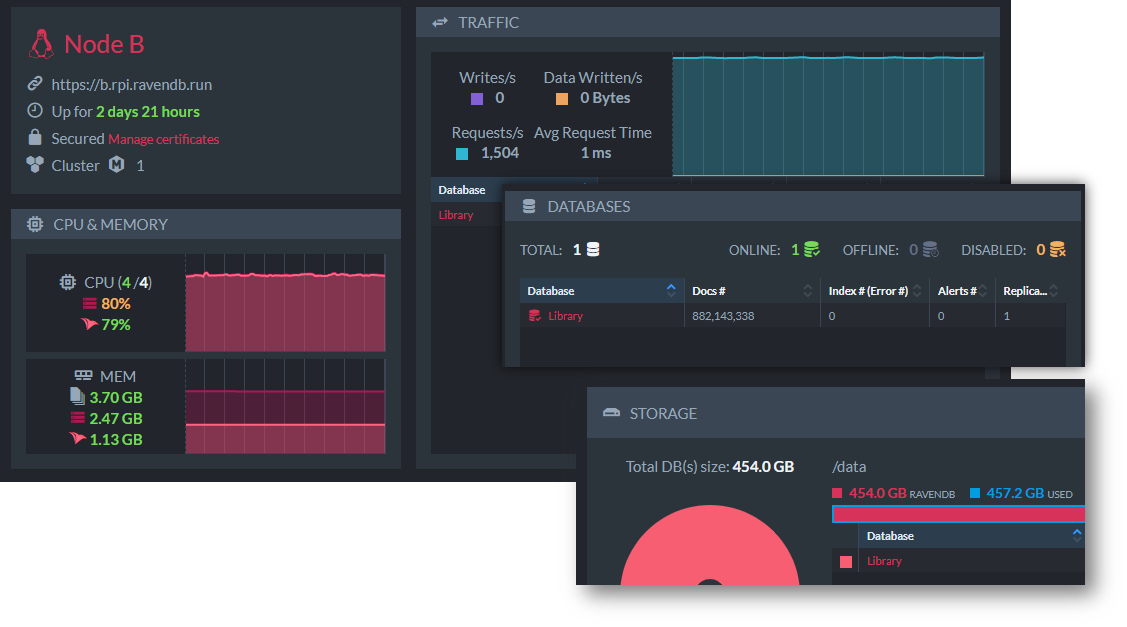

At any rate, one of my first actions was to put RavenDB on it and see how well it performs. You can see the results in the image below.

In this case, we are running 1,500 queries per second on the system. It has 4 GB of RAM and the database we are using has 450 GB (!) worth of data. I actually just took the nearest external disk I had available and plugged that into the PI. This is a generic hard disk and I can get a maximum of about 30 MB / sec from it.

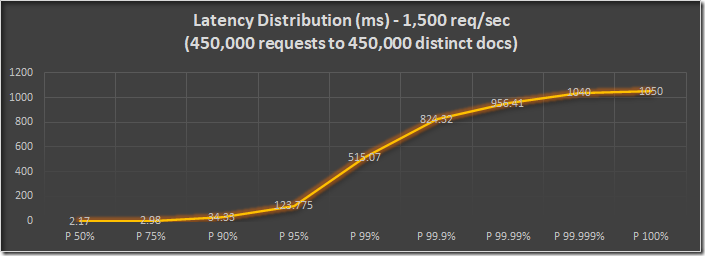

This is important because my queries are covering more data than can fit in memory. Each query asks for a random (different) document, so there is little chance for optimizations by having a hot working set. We are going to see some I/O to the (pretty poor) disk impacting the outcome. Here are the results:

You can see that for 95% of the queries, we got a result in under 125 milliseconds, and that for 99% of the requests, RavenDB on a Raspberry PI is able to answer in about half a second. And even with some of the requests having to hit the disk, the maximum time to wait for a request is just above a second. All of that when we are facing 1,500 queries per second, which is respectable even for big applications running on much more massive hardware.
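For readers less familiar with percentile latencies, here is a minimal sketch of how such numbers can be derived from raw timings. It uses the nearest-rank method, which is an assumption on my part and not necessarily what the benchmark tool reports. The `percentile` helper and the sample latencies are made up for illustration; they are not measurements from this run.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical latencies in milliseconds (NOT data from the benchmark).
latencies_ms = [12, 15, 18, 20, 22, 25, 30, 45, 120, 480]

print(percentile(latencies_ms, 50))  # median of the made-up sample
print(percentile(latencies_ms, 95))  # tail latency of the made-up sample
```

A single slow request dominates the high percentiles in a small sample like this one, which is why a tail that stays close to the median over hundreds of thousands of requests is a meaningful result.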

Of particular interest to me is the state of the server when we are running this benchmark. You can see that both in terms of CPU utilization and in the number of queries processed, we are almost perfectly flat. There aren’t any hiccups in the load, there hasn’t been a GC pause that stopped the world, and the system just runs at top speed for as long as we’ll let it. In this case, the benchmark lasted over 5 minutes, so more than enough time to run through all the usual suspects.

Note also the number of documents involved here. We are looking at 882 million documents, and we are requesting close to half a million of them. I ran the benchmark long enough to ensure that we cover more documents than can fit into memory, so we are seeing I/O work here (on a fairly poor disk, I might add, but that is what I had available at the moment).
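As a quick sanity check, the "close to half a million" figure lines up with the request rate and the run length mentioned earlier. The sketch below assumes a steady 1,500 queries per second for exactly 5 minutes (the run was a bit longer than that), and the `total_requests` helper is only for illustration.

```python
def total_requests(queries_per_second, duration_seconds):
    """Requests issued at a constant rate over the given duration."""
    return queries_per_second * duration_seconds


requests = total_requests(1_500, 5 * 60)  # assumes exactly 5 minutes
share_of_dataset = requests / 882_000_000  # 882 million documents in total

print(requests)                    # 450000
print(f"{share_of_dataset:.4%}")   # 0.0510%
```

So even at this rate, the run touches only a tiny slice of the full data set, yet still more than can fit into memory.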

The actual size on disk is a bit of a cheat: I’m using documents compression here to pack the data more tightly. The actual data size, without RavenDB’s data compression, is around 750 GB. That also helps a lot with the amount of I/O we have to deal with, but it increases the CPU consumption. Given the difference in relative costs, that is a trade-off that is paying dividends in spades.
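To put a number on the compression, here is a small sketch using the two sizes quoted in this post (both are approximate, so treat the result as a rough figure). The `compression_savings` helper is a name I made up for the example.

```python
def compression_savings(raw_gb, compressed_gb):
    """Return (compressed/raw ratio, fraction of space saved)."""
    ratio = compressed_gb / raw_gb
    return ratio, 1 - ratio


# ~750 GB uncompressed vs ~450 GB on disk, as quoted in the post.
ratio, saved = compression_savings(750, 450)

print(f"on-disk size is {ratio:.0%} of the raw data")  # 60%
print(f"space (and bytes to read) saved: {saved:.0%}")  # 40%
```

On a disk that tops out at about 30 MB / sec, reading roughly 40% fewer bytes per document matters a lot more than the extra CPU spent on decompression.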

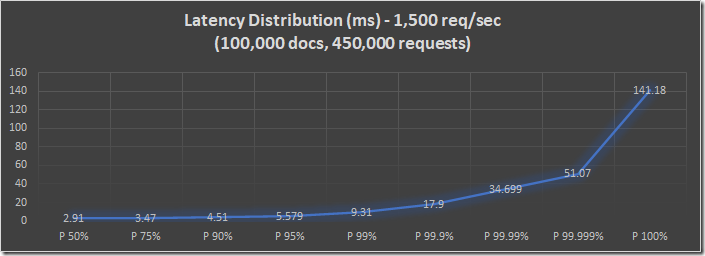

I also decided to see what happens when we are running a query that touches just a small part of the documents. Instead of working through nearly half a million, I chose to run it on about 100,000 documents. That is small enough that it should mostly fit in memory. It also represents a far more likely scenario, to be frank.

And here we can see that, under a load of 1,500 queries per second on a Raspberry PI, all requests complete in under 150 ms, with the 99.999th (!!) percentile at about 50 milliseconds.

And that makes me very happy, because it shows the result of all the work we put into optimizing RavenDB.

Comments

I just had to post something to this... Wow! Pretty incredible performance on any hardware I think. You guys have spent a fair amount of time on improving performance. Impressive!

Thanks for all the interesting blog posts, much appreciated!
