How efficient is RavenDB?

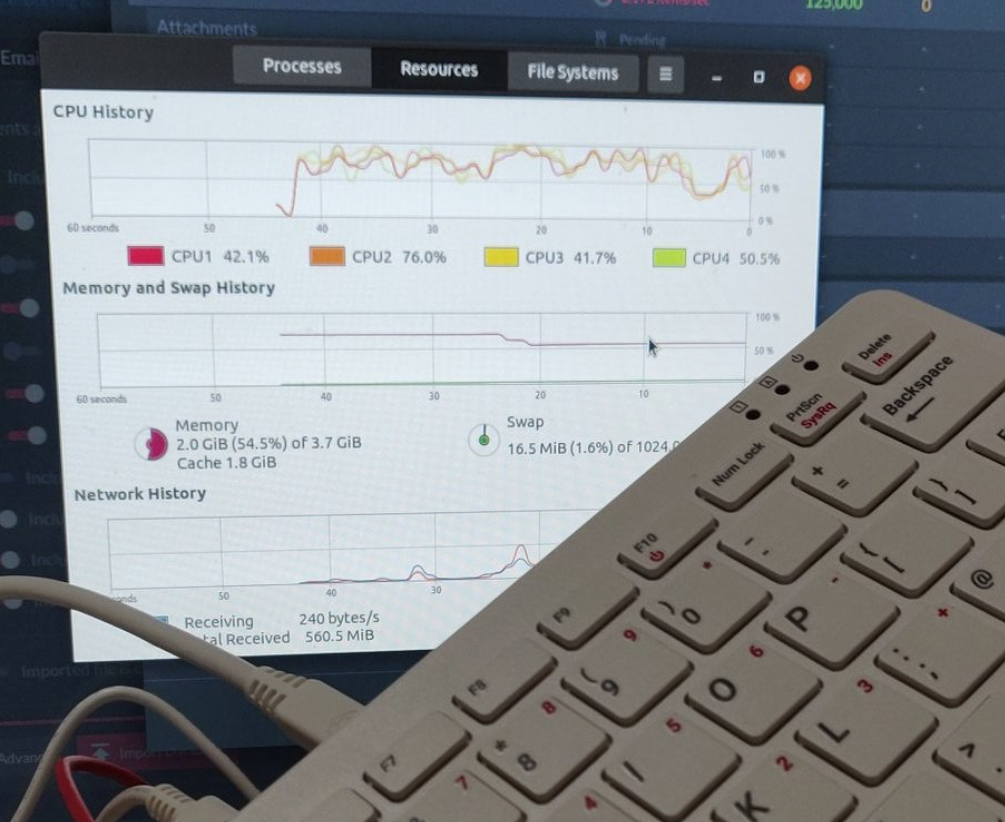

We have been working on a big benchmark of RavenDB recently. The data size that we are working on is beyond the TB range and we are dealing with over a billion documents. Working with such data sizes can be frustrating, because it takes quite a bit of time for certain things to complete. Since I had downtime while I was waiting for the data to load, I reached for a new toy I just got, a Raspberry Pi 400. Basically, it is a Raspberry Pi 4 embedded inside a keyboard. It is a pretty cool machine and an awesome thing to play around with.

Naturally, I had to try it out with RavenDB. We have had support for running on ARM devices for a long while now, and we have done some performance work on the Raspberry Pi 3. There are actually a whole bunch of customers that are using RavenDB in production on the Pi. These range from embedding RavenDB in industrial robots, to storing traffic analysis data on vehicles, to deploying Raspberry Pi servers that manage fleets of IoT sensors in remote locations.

The Raspberry Pi 4 is supposedly much more powerful, and I got the model with 4GB of RAM to play around with. And since I already had a data set in the TB range lying around, I decided to see what we could do with both of those.

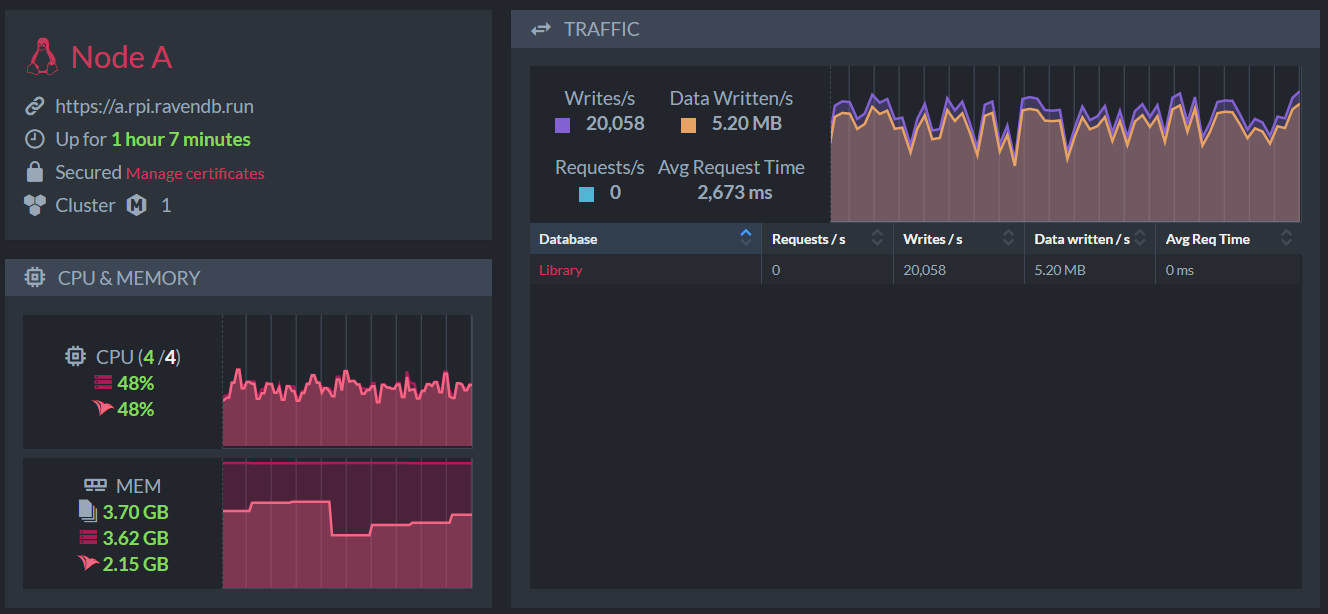

I scrounged an external hard disk that we had lying around that had sufficient capacity and started the import process. This is where we are after a few minutes:

A couple of things to notice about this. At this point the import process has been running for about two and a half minutes and has imported about 4 million documents. I want to emphasize that this is running on an HDD (and a fairly old one at that). Currently I can feel its vibrations on the table, so we are definitely I/O limited there.
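As a back-of-the-envelope check on those numbers, here is a small sketch in Python. It only uses the figures quoted above (about 4 million documents in about two and a half minutes) and assumes, purely for illustration, a target of exactly one billion documents and a constant import rate. The function names are made up for this example. A constant rate is an optimistic assumption, so the projection is best read as a lower bound on the load time.

```python
def import_rate(docs_imported: int, elapsed_seconds: float) -> float:
    """Average documents per second over the elapsed window."""
    return docs_imported / elapsed_seconds


def projected_hours(total_docs: int, docs_per_second: float) -> float:
    """Hours needed to load total_docs if the rate never changed (an assumption)."""
    return total_docs / docs_per_second / 3600


# Figures from the post: ~4 million documents in ~2.5 minutes (150 seconds).
rate = import_rate(4_000_000, 150)

# Assumed target for illustration: one billion documents.
hours = projected_hours(1_000_000_000, rate)

print(f"{rate:,.0f} docs/sec")
print(f"{hours:.1f} hours at a constant rate")
```

That works out to roughly 26,000 to 27,000 documents per second in the first few minutes.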

Once I’m done with the data load (which I expect to take a couple of days), we’ll be testing this with queries. It should be quite fun to compare the costs of this to a cloud instance. Given typical cloud machines, we can probably cover the costs of the Pi in a few days.

Comments

Is this in your opinion a normal load for a document database?

Because it looks to me like you are inserting documents of 260 bytes which I would say is more a key-value than a document type of load... Or maybe a time-series load.

And btw, what does the Data written/s represent? Is that data on disk or is that the json that the client generates (including/excluding compression)?

Because in our instances of Raven our average document size is about 100 KB, with some documents in the extreme going up to 20 MB. And we are nowhere near getting these kinds of numbers; usually we are happy if we get 2000 writes/s.

Christiaan,

The documents in question are actually not that small. What you are seeing in the MB / sec is the cost of writing to the write-ahead journal, which is compressed.

The typical document in the system at this stage is a user document, which has about 7 or so properties.

And the major factor in write speed is usually the kind of disk you have, to be honest. This is also without indexing, though, so there isn't any competition on the drive.
