Building a social media platform without going bankrupt: Part VI–Dealing with edits and deletions

In the series so far, we talked about reading and writing posts, updating the timeline and distributing it, etc. I talked briefly about the challenges of caching data when we have to deal with updates in the background, but I haven’t really gone into the details. Edits and updates are a pain to handle, because they invalidate the cache, which is one of the primary ways we can scale cheaply.

We need to consider a few different aspects of the problem. What sort of updates do we have in the context of a social media platform? We can easily disable editing of posts, of course, but we have to be able to support deletes. A user may post about Broccoli, which is verboten, and we have to be able to remove that. And of course, users will want to be able to delete their own posts, lest their salad tendencies come back to haunt them in the future. Another reason we need to handle updates is this:

We keep track of the interactions on a post and we need to update them as they change. In fact, in many cases we want to update them “live”. How are we going to handle this? I discussed the caching aspects of this earlier, but the general idea is that we have two caching layers in place.

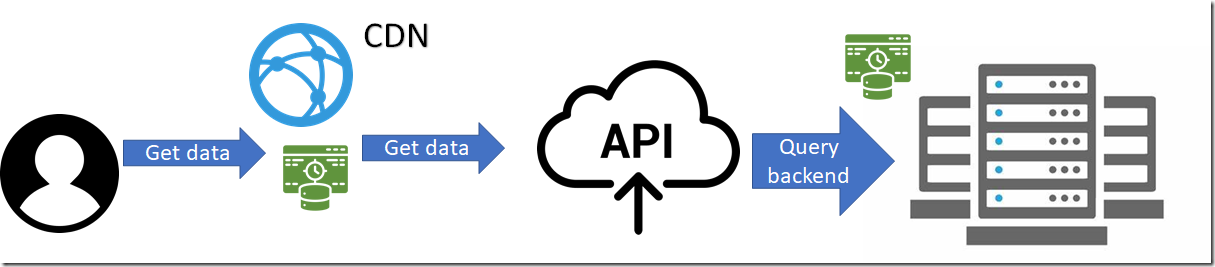

A user getting data will first hit the CDN, which may cache the data (green icon), and then the API, which will get the data from the backend. The API endpoint will query its own local state, and other pieces of the puzzle are responsible for the data distribution.

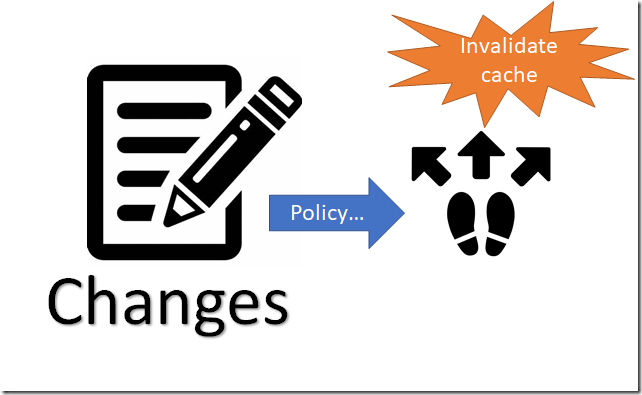

When changes happen, we need to deal with them, like so:

Each change means that we have to deal with a policy decision. For example, deleting a post means that we need to go and push an update to all the data centers to update the data. The same is true for updates to the post itself. In general, updating the content of the post or updating its view counts aren’t really that different. We’ll usually want to avoid editing the post content for non-technical reasons, not for lack of ability to do so.

Another important aspect to take into account is latency and updates. Depending on the interaction model with the CDN, we likely have it set up to cache data based on duration, so API requests are cached for a period of a few seconds to a minute or two. That is usually good enough to reduce the load on our servers and still keep the data reasonably up to date.
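To make the duration based caching concrete, here is a minimal sketch of the response headers the API might send so the CDN holds on to a response for a short while. The function name `cache_headers` and the 30 second value in the usage note are my own assumptions for illustration; the `Cache-Control` directives and the `ETag` header themselves are standard HTTP.

```python
from typing import Dict


def cache_headers(max_age_seconds: int, etag: str) -> Dict[str, str]:
    """Build response headers that let a CDN cache an API response briefly.

    Hypothetical helper: the name and parameters are illustrative only.
    """
    if max_age_seconds < 0:
        raise ValueError("max_age_seconds must be non-negative")
    return {
        # "public" allows shared caches (the CDN) to store the response.
        # "s-maxage" applies to shared caches, "max-age" to the client.
        "Cache-Control": (
            f"public, max-age={max_age_seconds}, s-maxage={max_age_seconds}"
        ),
        # The ETag lets a client revalidate with If-None-Match and
        # receive a 304 Not Modified when nothing has changed.
        "ETag": f'"{etag}"',
    }
```

Calling `cache_headers(30, "v7")` would ask the CDN to serve the same response for up to 30 seconds, which is the "few seconds to a minute or two" range discussed above.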

Another advantage that we can use is the fact that when we get to high numbers, we can reduce the update rate. Consider:

We now need to update the post only once every 100,000 likes or shares or once for 10,000 replies. Depending on the rate of change, we can skip that if this happened recently enough. That is the kind of thing that can reduce the load curve significantly.
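A rough sketch of that throttling logic might look like the following. The thresholds in `step_for`, the `min_interval_seconds` default and both function names are assumptions I made up for the example. I am also reading "skip that if this happened recently enough" as "don't publish again if we published a moment ago", which is one possible interpretation.

```python
from typing import Optional


def step_for(count: int) -> int:
    """How many interactions must accumulate before we republish.

    The thresholds are illustrative assumptions, not measured values.
    """
    if count < 1_000:
        return 1          # small posts: every change is visible
    if count < 1_000_000:
        return 1_000      # displayed as "12K", so update per thousand
    return 100_000        # displayed as "1.2M", so update per 100,000


def should_publish(
    old_count: int,
    new_count: int,
    last_published_at: Optional[float],
    now: float,
    min_interval_seconds: float = 60.0,
) -> bool:
    """Decide whether a counter change is worth pushing to the caches."""
    step = step_for(new_count)
    # Only publish when the counter crosses a step boundary, i.e. when
    # the value the user actually sees would change.
    if old_count // step == new_count // step:
        return False
    # Skip the update if we already published one recently enough.
    if (
        last_published_at is not None
        and now - last_published_at < min_interval_seconds
    ):
        return False
    return True
```

With these numbers, a post going from 1,199,999 to 1,200,000 likes triggers an update, while the next 99,999 likes do not.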

There is also the need to consider live updates. Typically, that means that we’ll have the client connected via web socket to a server and we need to be able to tell it that a post has been updated. We can do that using the same cache update mechanism. The update cache command is placed on a queue and the web socket servers process messages from there. A client will indicate what post ids it is interested in and the web socket server will notify it about such changes.
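Here is a minimal in-memory sketch of that flow, assuming a standard library `Queue` stands in for the real message queue and a plain callback stands in for the web socket connection. The class name `LiveUpdateHub`, its methods and the message shape (`post_id`, `kind`) are all hypothetical.

```python
from collections import defaultdict
from queue import Empty, Queue
from typing import Callable, DefaultDict, Dict, Set

Send = Callable[[dict], None]


class LiveUpdateHub:
    """Tracks which client cares about which post and fans out updates."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, Set[str]] = defaultdict(set)
        self._senders: Dict[str, Send] = {}

    def connect(self, client_id: str, send: Send) -> None:
        # "send" stands in for writing to the client's web socket.
        self._senders[client_id] = send

    def subscribe(self, client_id: str, post_id: str) -> None:
        self._subscribers[post_id].add(client_id)

    def disconnect(self, client_id: str) -> None:
        self._senders.pop(client_id, None)
        for clients in self._subscribers.values():
            clients.discard(client_id)

    def drain(self, updates: Queue) -> int:
        """Process all pending cache update messages; return sends made."""
        notified = 0
        while True:
            try:
                message = updates.get_nowait()
            except Empty:
                return notified
            # Copy the set so a callback may unsubscribe while we iterate.
            for client_id in list(self._subscribers.get(message["post_id"], ())):
                send = self._senders.get(client_id)
                if send is not None:
                    send(message)
                    notified += 1
```

The hub never touches the post storage. It only tells interested clients that something changed, and the clients fetch the new data through the normal (cached) API path.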

The idea is that we can completely separate the different pieces in the system. We have the posts storage and the timeline as one system and the live updates as a separate system. There is some complexity here about cache usage, but it is actually better to assume that stuff will not work than to try for cache coherency.

For example, a client may get a notification that a particular post was updated. When it queries for the new post details, it gets a response saying that the post wasn’t modified. This is a classic race condition, which can cause a lot of trouble for the backend people to eradicate. If we don’t try, we can simply state that on the client side, getting a not modified response after an update notification is not an error. Instead, we need to schedule (on the client) another query for the post after the cache period has elapsed.
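On the client, that rule can be as small as the sketch below. The `FetchResult` type, the function name `retry_delay` and the idea of passing the cache period in as a parameter are assumptions for illustration; the only thing taken from the design above is that a 304 after an update notification means "try again later", not "error".

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FetchResult:
    status: int                  # HTTP status: 200 or 304 in this sketch
    body: Optional[dict] = None  # post details when status is 200


def retry_delay(
    result: FetchResult,
    notified_of_update: bool,
    cache_period_seconds: float,
) -> Optional[float]:
    """Return how long to wait before querying again, or None if done.

    A 304 right after an update notification means a cache layer still
    holds the old version. That is expected, so we schedule another
    query for when the cached entry should have expired.
    """
    if result.status == 304 and notified_of_update:
        return cache_period_seconds
    return None
```

The caller would hand the returned delay to whatever timer the client platform offers and clear the "notified" flag once a fresh 200 response arrives.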

A core design tenet in the system is to assume failure and timing issues, to avoid having to force a unified view of the system, because that is hard. Punting the problem even just a little bit gives us a much better architecture.

More posts in "Building a social media platform without going bankrupt" series:

- (05 Feb 2021) Part X–Optimizing for whales

- (04 Feb 2021) Part IX–Dealing with the past

- (03 Feb 2021) Part VIII–Tagging and searching

- (02 Feb 2021) Part VII–Counting views, replies and likes

- (01 Feb 2021) Part VI–Dealing with edits and deletions

- (29 Jan 2021) Part V–Handling the timeline

- (28 Jan 2021) Part IV–Caching and distribution

- (27 Jan 2021) Part III–Reading posts

- (26 Jan 2021) Part II–Accepting posts

- (25 Jan 2021) Part I–Laying the numbers

Comments

Why the hate on Broccoli? I really love my Broccoli once a week! ;)

// Ryan

Ryan,

I'm trying to use a metaphor for hot button issues. I don't think that there are people who feel strongly about Broccoli in a positive manner.

Just joking ... partly ... 😬

Really enjoying this series of posts. Particularly interesting to me since I was involved with a heavy traffic social site in the 00s. A few things listed here we did too. It was really about giving the user the feeling everything was real-time. But in reality it was different for each of them.

// Ryan
