Surviving Black Friday: Or designing for failure

It’s the end of November, so like almost every year around this time, we have the AWS outage impacting a lot of people. If past experience is any indication, we’re likely to see higher failure rates in many instances, even if it doesn’t qualify as an “outage”, per se.

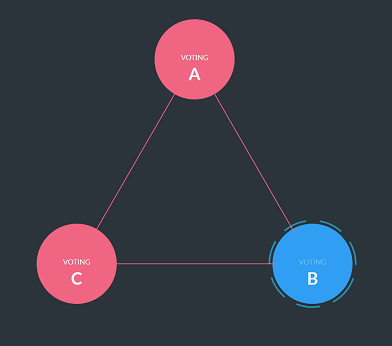

The image above shows the status of a RavenDB cluster running in us-east-1. The additional load is sufficient to disrupt the connection between the members of the cluster. Note that this isn’t load on this specific RavenDB cluster; it is load on the environment. We are seeing busy neighbors and higher network latency in general, enough to disrupt the connections between the nodes in the cluster.

And yet, while the cluster connection is unstable, the individual nodes continue to operate normally and keep working with no issues. This is part of the multi-layer design of RavenDB. The cluster runs a consensus protocol, which is sensitive to network issues and requires a quorum to make progress. The databases, on the other hand, use a separate, gossip-based protocol to allow for multi-master distributed work.

What this means is that even in the presence of increased network disruption, we are able to run without needing to consult other nodes. As long as the client is able to reach any node in the database, we are able to serve reads and writes successfully.
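To make the difference between the two layers concrete, here is a minimal sketch. It is not RavenDB code: the function names and the `"cluster"` / `"database"` labels are made up for illustration, and it assumes a plain majority quorum for the consensus layer.

```python
def has_quorum(reachable_nodes: int, cluster_size: int) -> bool:
    """A majority-based consensus protocol needs more than half the nodes."""
    return reachable_nodes > cluster_size // 2


def can_accept(operation: str, reachable_nodes: int, cluster_size: int) -> bool:
    """Decide whether a node can accept an operation right now.

    `reachable_nodes` counts this node itself plus the peers it can talk to.
    The operation labels are hypothetical, used only for this sketch.
    """
    if operation == "cluster":
        # Cluster-level operations go through consensus and need a majority.
        return has_quorum(reachable_nodes, cluster_size)
    if operation == "database":
        # Database reads and writes are handled by the local node and
        # shared with the other nodes later, so one live node is enough.
        return reachable_nodes >= 1
    raise ValueError(f"unknown operation kind: {operation}")
```

With a three-node cluster where a node can only see itself, `can_accept("cluster", 1, 3)` is false while `can_accept("database", 1, 3)` is true, which is the situation described above: the cluster layer stalls, and the databases keep serving requests.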

In RavenDB, both clients and servers understand the topology of the system and can independently fail over between nodes without any coordination. A client that can’t reach a server will be able to consult the cached topology to know what is the next server in line. That server will be able to process the request (be it read or write) without consulting any other machine.
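The client-side half of this can be sketched roughly as follows. This is an illustration of the idea, not the RavenDB client API: `send_with_failover`, `AllNodesFailed`, and the node URLs are hypothetical names, and the cached topology is assumed to be a simple ordered list of node URLs.

```python
from typing import Callable, Sequence


class AllNodesFailed(Exception):
    """Raised when no node in the cached topology could be reached."""


def send_with_failover(topology: Sequence[str], send: Callable[[str], str]) -> str:
    """Try each node from the cached topology, in order, until one answers.

    `send` is a caller-supplied function that performs the request against
    a single node URL and raises ConnectionError if the node is unreachable.
    """
    errors = []
    for node_url in topology:
        try:
            # The first node that answers handles the request on its own,
            # whether it is a read or a write.
            return send(node_url)
        except ConnectionError as exc:
            # No coordination needed: remember the error and move on
            # to the next server in line.
            errors.append((node_url, exc))
    raise AllNodesFailed(errors)
```

The point of the design is that the loop consults nothing but local state. The client never has to ask the cluster which node to use next, so it keeps working even when the cluster itself cannot reach a quorum.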

The servers will gossip with one another about misplaced writes and set everything in order.

RavenDB gives you a lot of knobs to control exactly how this process works, but we have worked hard to ensure that by default, everything should Just Work.

Since we released the 4.0 version of RavenDB, we have had multiple Black Fridays, Cyber Mondays and Crazy Tuesdays go by peacefully. Planning, explicitly, for failures has proven to be a major advantage. When they happen, it isn’t a big deal and the system knows how to deal with them without scrambling the ready team. Just another (quiet) day, with a 1000% increase in load.

Comments

1000% would mean 10x the load (right?). This is not unusual for a Cyber Monday type of event.

But as far as a network outage, what would be an example that would cause a 10x increase - would it simply mean a 10-node cluster is left with one node?

I guess you also need to avoid any cluster wide transactions if you want your system to stay up during such an outage? 😀

Ant,

If you are using cluster wide transactions, you are explicitly choosing to want to fail if there is no cluster.

That is usually reserved for rare operations. The common scenario I like to talk about is the difference between buying a lottery ticket (you always want to record the sale) vs. declaring the winner of the lottery (you want to fail if you can't do that consistently).

Peter,

10X the load, yes. But the question is in what capacity? For example, you may have 10 times more requests, but you may also have 10 times more bandwidth being used.

Remember that at the same time, on the same networks, you have everyone else also showing the same behavior, which means that you are now also being affected by other neighbors.