Always profile! The hidden cost of serializing large object graphs to JSON

We were looking at the cost of writing 10,000 documents to RavenDB and found out something truly interesting. The documents in question are complex, this is an object graph that includes over 120 objects belonging to 20 different classes. The serialized JSON is over 16KB in size. It is expected that something like that would take a lot of time.

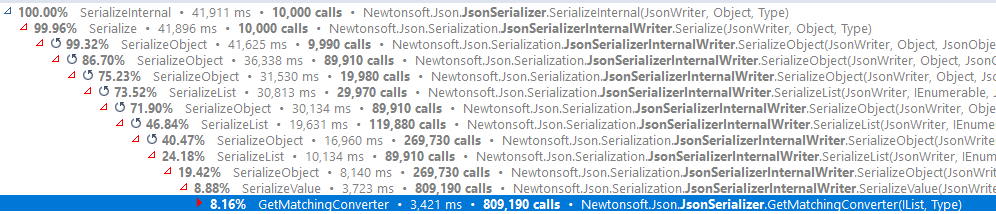

Here is the results under the profiler:

Given the size involved, taking (under the profiler), just under 4.2 ms per document isn’t bad, I thought. In general, we consider the cost of JSON serialization as pretty fixed and ignore it. This time, I looked little bit deeper and found this:

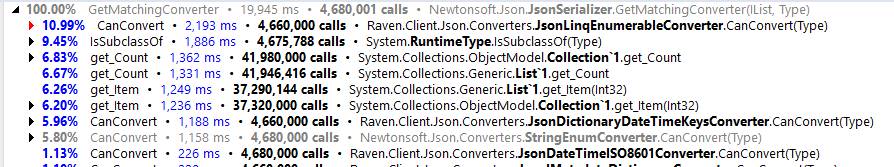

Note that this is deep in the call stack. But it is a pretty expensive call, I decided to look at all the calls that we had for this method, which gave me:

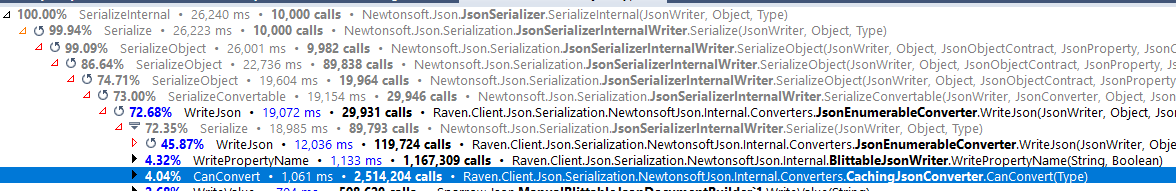

A few things that I would like to note here. The time this single method takes is roughly 50% of the total time it takes to serialize the documents. In fact, I checked what would happen if I could remove this cost:

You can insert your favorite profanity here, I usually go with Pasta!

Looking at the stack trace, it is clear what is going on. RavenDB has a bunch of customizations for the default way documents are serialized. And JSON.Net will call the CanConvet() method on the converters on each new object that it is about to covert.

Given the level of complexity in these documents (over 450 values), that means that we would call this a lot. And the cost of finding out if we need to check convert the value or not dominated this kind of operation completely.

I wrote the following converter, instead:

As you can see, the core of the fix is in the CanConvertInternal() method, there I’m checking the internal converters and caching the results. Note that I’m intentionally not using any thread safe operations here, even though this is meant to be a shared instance. The idea is that on each new item, we’ll create a new copy of the cache, and we can afford to waste the memory / work to compute the cache if we can reduce the common cost of verifying the types on each call.

We are actually expecting to lose the race conditions here, which is fine. After a very short while, we are going to be stabilized and not have to pay any additional costs.

Here is the result, you can see that we are taking about half of the previous run time.

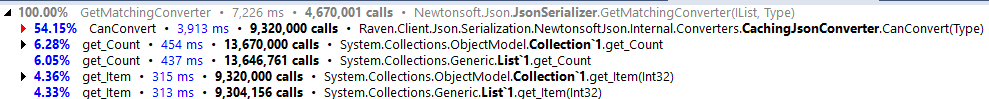

And if we look at just the GetMatchingConverter() call:

I actually had to end up with two such calls, because I have some converters that are only for writes. Given the huge performance improvement, we just created two converters to handle the caching.

Note that without a profiler, I would have never been able to figure something like this out, and even with the profiler, I had to dig deep and it took a while to narrow down where the costs actually were. I’m very surprised.

Comments

I usually profile the machine as a whole during internal stress tests for hours with ETW and 10ms sample profiling. This gives very interesting insights how the tests went and which methods were the most expensive ones. The best thing about ETW I do not need to attach to anything I can just start recording on any Windows 10 machine. No addtional installs are needed if you stick to wpr.exe which is part of Windows. With some automated data extraction tooling I can query each method or the most expensive methods either programatically or get the data on the command line which is much easier and faster to work with. Creating metrics about what are the top 100 most expensive methods and such things are easy.

Comment preview