Using machine learning with RavenDB

In this post, I'm going to walk you through the process of setting up a machine learning pipeline within RavenDB. The first thing to ask, of course, is what am I talking about?

RavenDB is a database; it is right there in the name. What does this have to do with machine learning? And no, I'm not talking about pushing exported data from RavenDB into your model. I'm talking about actual integration.

Consider the following scenario. We have users with emails, and we want to add additional information about them, so we assign their Gravatar image as their default profile picture. Here is mine:

On the other hand, we have this one:

In addition to simply using the Gravatar to personalize the profile, we can actually analyze the picture to derive some information about the user. For example, in a non-professional context, I like to use my dog's picture as my profile picture.

Let's see what use we can make of this with RavenDB, shall we?

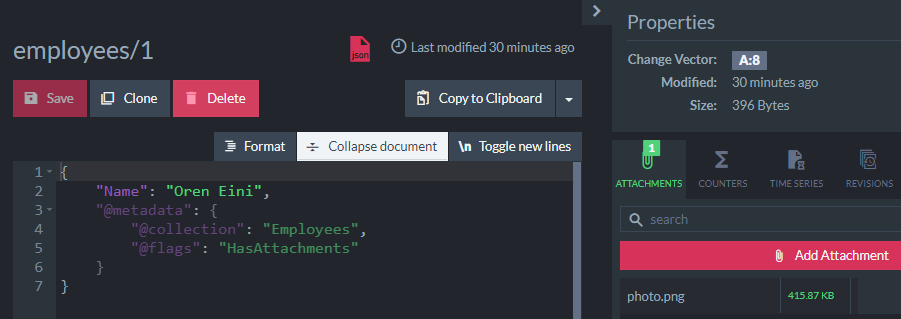

Here you can see a simple document, with the profile picture stored in an attachment. So far, this is fairly standard fare for RavenDB. Where do we get to use machine learning? The answer is very quickly. I'm going to define the Employees/Tags index, like this:

This requires us to use a nightly build of RavenDB 5.1, where we have support for indexing attachments. The idea here is that we are going to make use of that to apply machine learning to classify the profile photo.

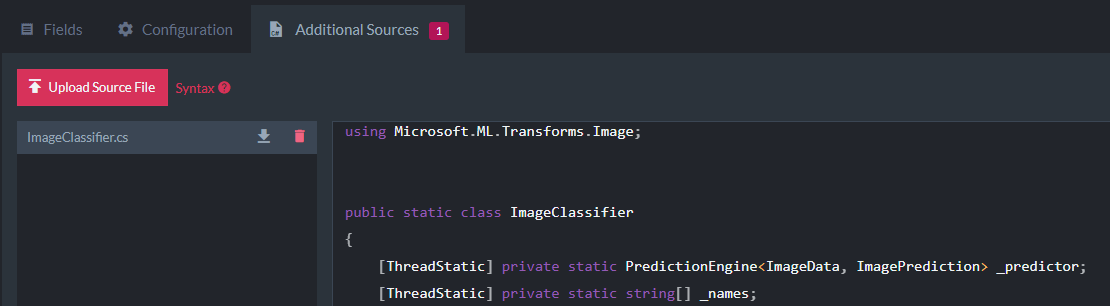

You'll note that we pass the photo's stream to ImageClassifier.Classify(), but what is that? The answer is that RavenDB itself has no idea about image classification and other things of this nature. What it does have is an easy way for you to extend RavenDB. We are going to use Additional Sources to make this happen:

The actual code is as simple as I could make it and is mostly concerned with setting up the prediction engine and outputting the results:

In order to make it work, we have to copy the following files to RavenDB's directory. This allows the ImageClassifier to compile against the ML.Net code. The usual recommendations about making sure that the ML.Net version you deploy best matches the CPU you are running on apply, of course.

If you look closely at the code in ImageClassifier, you'll note that we are actually loading the model from a file via:

mlContext.Model.Load("model.zip", out _);

This model is meant to be trained offline by whatever system works for you. The idea is that, in the end, you just deploy the trained model as part of your assets and you can start applying machine learning as part of your indexing.

That brings us to the final piece of the puzzle: the output of this index. We output the classification results as indexed fields. The Tag field in the index is going to contain all the matches that are above 75%, and we are using dynamic fields to record all the other viable matches.
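To make that split concrete, here is a minimal, self-contained sketch of the logic in plain C#. It is not the index code from this post: the `TagSplitter` class, the `Split` method, and the choice to emit one field per label with its score are assumptions made for illustration. Only the 75% cutoff comes from the text above.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper, not part of RavenDB or ML.NET.
public static class TagSplitter
{
    // Splits classifier scores (label -> confidence in [0, 1]) into:
    //  - Tags:   labels whose confidence is above the threshold
    //  - Fields: every label with its score, i.e. what a dynamic field
    //            per label would hold (an assumption about the index output)
    public static (string[] Tags, Dictionary<string, float> Fields) Split(
        IReadOnlyDictionary<string, float> scores,
        float tagThreshold = 0.75f)
    {
        var tags = scores
            .Where(kvp => kvp.Value > tagThreshold)
            .Select(kvp => kvp.Key)
            .OrderBy(label => label, StringComparer.Ordinal) // stable output order
            .ToArray();

        var fields = scores.ToDictionary(kvp => kvp.Key, kvp => kvp.Value);

        return (tags, fields);
    }
}
```

With scores of dog 0.91, happy 0.80 and cat 0.10, `Split` returns the tags dog and happy, and three fields. Keeping the raw scores next to the thresholded tags is what would let a query apply its own cutoff per label later on.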

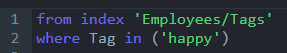

That means that we can run queries such as:

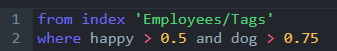

Insert your own dystopian queries here. You can also do a better drill down using something like:

The idea in this case is that we are able to filter the image by multiple tags and search for pictures of happy people with dogs. The capabilities that you get from this are enormous.

The really interesting thing here is that there isn't much to it. We run the machine learning process once, at indexing time. Then we have the full query abilities at our hands, including some pretty sophisticated ones. Want to find dog owners in a particular region? Combine this with a spatial query. And whenever a user modifies their profile picture, we'll automatically re-index the data and recompute the relevant tags.

I'm using what are probably the simplest possible options here, given that I consider myself very much a neophyte in this area. That also means that I've focused mostly on integrating RavenDB and ML.Net; it is possible (likely, even) that the ML.Net code isn't optimal or idiomatic. The beautiful part is that it doesn't matter. You can easily change this by modifying the ImageClassifier's implementation, which is an extension, not part of RavenDB itself.

I would be very happy to hear from you about any additional scenarios you have in mind. Given the growing use of machine learning in the world right now, we are considering ways to allow you to utilize machine learning on your data with RavenDB.

This post required no code changes to RavenDB, which is really gratifying. I'm looking to see what features this would enable and what kind of support we should be bringing to the mainline product. Your feedback would be very welcome.

Comments

Interesting. I'd never come up with an idea to use RavenDb like this.

Btw where should the model.zip file be uploaded to?

What I find a bit failure risky with this approach is managing the dependant DLLs and model files. Ie if at some later stage additional RavenDB instances are brought to the cluster we must not forget to add the additional DLLs and model files, and also managing files in the RavenDb directory seems a bit risky. Some sort of plugin architecture where we upload a self-contained plugin folder would be great, like what the Prise plugin framework does. This is just a thought based on reading your article. I have not used the Additional sources feature yet with RavenDb. (what would be nice is a RavenDb marketplace that would allow installing plugins from the studio) ;D ;D ;D

Nik,

The model.zip is located in this case in the RavenDB directory. You can of course place it anywhere you want (including doing things like remote resources, an attachment, etc).

I agree that the DLL model isn't ideal, but it is the simplest option available. A better option might be to allow users to upload the binaries as part of the index definition, but that leads to other issues. The ML.NET binaries are > 250MB, which isn't something that you want to do on a per index basis.

Is there a way to upload these files to RavenDB Cloud?

Hi oren, so how many happy dogs work for the happy rhino? 😂

Quick question, I see you use Memory Stream and copying here without buffering. I guess the reason is demo code. Still curious what Raven does and how those things impact GC as well as Perf given that this code runs as part of the server app domain (or is there some clever isolation)?

Cheers from the happy lazy dog

Dejan,

In the cloud, you'll likely pull the model.zip from a cloud resource, instead. Something like S3 endpoint, for example.

Given that we'll need to add the ML binaries anyway, we can do that as well, but it is usually easier if the customer control the model.zip without needing to contact support.

Daniel,

Sadly, I have to report that a large majority is actually cat owners :-(

The reason I'm using MemoryStream here is that this is a png image, which requires a seekable stream.

You are correct that this is not ideal, a better option would be to use something like this:

Microsoft.IO.RecyclableMemoryStream

Have you considered using NuGet? That way instead of end-user having to manage uploading files, they can simply specify package IDs and versions and let NuGet manage the dependencies. You can specify which NuGet repositories to use like internal feeds or the public NuGet feed.

Anyway, when calling your own code, does it support async?

Kiliman,

Yes, I have, see: https://ayende.com/blog/192385-A/ravendb-5-1-features-searching-in-office-documents

For async, the code is running inside the indexing function, which is non async
