RavenDB 5.0 Features: Smart document compression

Documents in RavenDB can be of arbitrary size. Technically, they are limited to 2 GB, but if you get anywhere near that, you have other issues. The worst case I have seen is a 700+ MB file, but RavenDB will issue warnings if you have documents that exceed the 5 MB range. This is mostly because of the cost of sending those multi-megabyte documents back and forth; RavenDB itself does quite fine with them. However, most documents tend to be much smaller. The typical document size is on the order of a few KB to a few dozen KB.

Starting with RavenDB 2.5, we had a document compression feature, which allowed you to trade CPU cycles for disk space. That was used quite successfully in a number of client projects. The way it worked was simple: all you needed to do was enable compression, and the documents would be compressed on the fly for you. That approach worked, and we could see some interesting space savings, but it didn’t make the cut when we rebuilt RavenDB in 4.0.

Why is that?

There are a number of reasons for that. To start with, document compression in previous versions of RavenDB was applied at the individual document level. This meant that we were able to compress away redundancies only inside a single document. There is still quite a lot that you can compress using this method, but it isn’t as efficient as compressing multiple documents all at once, in which case we can benefit from the cross-document redundancies.

For example, the JSON data for all the documents that were created today contains “2020-04-13T”. When we compress many of them together, we can take advantage of this. When they are compressed independently, there are far fewer redundancies to exploit.
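To make the difference concrete, here is a minimal sketch that compresses a batch of similar JSON documents once independently and once as a single stream. It uses Python's built-in `zlib` purely for illustration; the document shape and field names are made up and this is not how RavenDB itself stores or compresses data.

```python
import json
import zlib

# Hypothetical documents: same shape, same date prefix, different values.
docs = [
    json.dumps({
        "Id": f"orders/{i}",
        "Company": f"companies/{i % 7}",
        "OrderedAt": f"2020-04-13T10:{i % 60:02d}:00.0000000Z",
        "Status": "Pending",
    }).encode("utf-8")
    for i in range(100)
]

raw = sum(len(d) for d in docs)

# Each document compressed on its own: only redundancy inside one document is removed.
independent = sum(len(zlib.compress(d)) for d in docs)

# All documents compressed as one stream: repeated keys and the shared date
# prefix can be referenced from earlier documents instead of being stored again.
together = len(zlib.compress(b"".join(docs)))

print(f"raw={raw} independent={independent} together={together}")
```

The combined stream comes out far smaller, but it can only be read back as a whole, which is exactly the tradeoff discussed here.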

The other problem was that RavenDB 4.x was designed with performance in mind. Having to decompress the document data meant that we would have to do some additional processing on the documents as they came from disk. That was considered expensive and against our policy of zero copies. Given that the compression ratios we saw weren’t that attractive, we left that feature on the cutting room floor.

What we did instead was address a far more common scenario for document compression: the compression of values. In RavenDB, a large string value is going to be automatically (and transparently) compressed for you. By large, I’m talking about values larger than 127 characters.

In the last couple of years, we have run into numerous cases where people are storing stupendous amounts of data inside of RavenDB. And we started to get complaints about two distinct (but related) issues:

- RavenDB not having a schema means that we repeat the JSON structure in each document.

- They have a very large number of documents that are very rarely touched (live archive) and are seeing storage issues at that scale.

When we set out to build document compression, we had a simple goal. We need to be efficient: there is some tradeoff of computation power for disk utilization, but it needs to be reasonable. We also need to be sure that our work pays off in terms of the space savings we get. That effectively ruled out the option of compressing documents independently. We needed a way to do that across documents.

I actually took a deep dive into compression almost 6 years ago and learned a lot about how compression algorithms work, what is possible, and how it can be made to work in different scenarios. I’m not an expert, I want to emphasize that, and some of the things that are going on in the compression world are flat out opaque to me. But I learned enough to figure out how the parts come together.

Data compression is not something new. Other databases offer value compression similar to RavenDB’s large string compression. This is usually done on a per-value basis. MySQL has support for compressed tables, where each page is compressed using zlib. This allows compressing data that is shared between rows, but it only covers the values in a particular (1 KB – 8 KB) page. We actually have this feature, compression of pages, inside RavenDB. It is used to store map/reduce values and can really help, because many map/reduce results are inherently very similar to one another. They are also likely to change independently, and we rely on the B+Tree structure of the data to process the map/reduce indexes.

Out of the available libraries and algorithms, the Zstd library is the one I selected. This is because it is one of the fastest options while giving great compression ratios. LZ4, which is something that we already use quite extensively, is faster, but it has a worse compression ratio and it is lacking a crucial feature: the ability to train the compression algorithm. Why is that important? It is important because our scenario is compressing a lot of small (usually similar) documents, not a massive corpus all at once.

If we can train the algorithm on the documents, we can get great benefits from removing redundancies across documents. The question is how exactly to manage this. The key problem is that we need to handle data changing over time and sharing of resources. Here is how it works:

- You can mark certain collections (and revisions) as compressed. This is a setting that you can toggle on and off at will.

- When RavenDB is writing a document to a compressed collection, it will look up the current dictionary for this collection and compress the document using that dictionary.

- The first few documents will be compressed with no dictionary, independently.

- We measure the compression ratio of the values, and once the compression ratio exceeds the appropriate tolerances we will evaluate the last 256 documents in that collection and train a new dictionary.

- If the new dictionary compression ratio is better than the existing one (for the new document), we’ll use that from now on.

What ends up happening is that as you write documents into a compressed collection, RavenDB watches your data and learns how to best compress it. The more you write, the more information RavenDB has to find the optimal dictionary to compress your data. This way, we are able to individually compress and decompress documents, while still retaining great compression rates.
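The flow described above can be sketched in a few lines. This is a toy model under loud assumptions, not RavenDB's implementation: it uses `zlib` preset dictionaries (`zdict`) as a stand-in for Zstd training, "trains" by simply concatenating recent documents, and retrains on a fixed cadence instead of watching the compression ratio against a tolerance. `CollectionCompressor`, `retrain_every`, and the document shape are all hypothetical names.

```python
import json
import zlib
from collections import deque


class CollectionCompressor:
    """Toy per-collection compressor with a shared, retrainable dictionary."""

    def __init__(self, window=256, retrain_every=64):
        self.recent = deque(maxlen=window)  # raw bytes of the last `window` documents
        self.dictionaries = {0: b""}        # dictionary id -> bytes; id 0 means "no dictionary"
        self.current_id = 0
        self.retrain_every = retrain_every  # hypothetical trigger, not RavenDB's tolerance check
        self.writes = 0

    @staticmethod
    def _compress(doc, zdict):
        comp = zlib.compressobj(zdict=zdict) if zdict else zlib.compressobj()
        return comp.compress(doc) + comp.flush()

    def _train(self):
        # Naive stand-in for real training: the most recent documents,
        # capped at deflate's 32 KB window.
        return b"".join(self.recent)[-32 * 1024:]

    def write(self, doc):
        self.writes += 1
        if self.recent and self.writes % self.retrain_every == 0:
            candidate = self._train()
            current = self.dictionaries[self.current_id]
            # Switch only if the candidate does better on the new document.
            if len(self._compress(doc, candidate)) < len(self._compress(doc, current)):
                self.current_id = len(self.dictionaries)
                self.dictionaries[self.current_id] = candidate
        payload = self._compress(doc, self.dictionaries[self.current_id])
        self.recent.append(doc)
        # The dictionary id travels with the payload so older documents stay readable.
        return self.current_id, payload

    def read(self, dict_id, payload):
        zdict = self.dictionaries[dict_id]
        decomp = zlib.decompressobj(zdict=zdict) if zdict else zlib.decompressobj()
        return decomp.decompress(payload) + decomp.flush()


store = CollectionCompressor()
docs = [
    json.dumps({"Id": f"orders/{i}", "OrderedAt": f"2020-04-13T10:{i % 60:02d}:00Z",
                "Status": "Pending", "Company": f"companies/{i % 7}"}).encode("utf-8")
    for i in range(200)
]
stored = [store.write(doc) for doc in docs]
last_id, last_payload = stored[-1]
print(len(docs[-1]), len(zlib.compress(docs[-1])), len(last_payload))
```

The point of the sketch is the shape of the design: every document remains individually readable, because each payload records which dictionary it was written with, and old dictionaries are kept around after a new one takes over.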

There are a lot of details that I’m not getting into, because they aren’t that interesting from the outside. I might do a webinar to explain them in detail: things like how RavenDB decides to re-train, how we handle recovery of data in the case of hardware failure, etc. But the end result is really nice.

Here are the results from some of the tests we ran.

My blog, with 25,725 docs and 30,232 revisions, takes 380.24 MB without compression, and it takes 4 seconds to load it into an empty database.

With compression, the time taken soars to 29 seconds (7.25 times as long!), but the disk space drops to 208.85 MB (about 55% of the original). Note that my blog is a very small dataset, so it is usually not a good candidate for compression. The reason that we see such a major slowdown is probably that we are paying the cost to train on the data (which is expensive) but we don’t have enough writes to amortize these costs.

Let’s see some of the results with larger datasets.

Production dataset, composed of 2,654,072 documents and then 2,803,947 writes to those documents. This took 9 minutes and 23 seconds and 5.98 GB.

This is an interesting test, because it measures something totally different. Before, I was testing raw write speed. Here, most of the time is actually spent in handling updates.

With compression, the time it took was 9 minutes and 58 seconds. So now we have a 6% increase in the write time. Note that here we are talking about a speed difference that isn’t truly meaningful. I have seen it swing the other way in these benchmarks. It is possible that other things that happened on the machine impacted this. At any rate, this is really close.

What about the compressed size? Well, we now have 3.79 GB, so we got it down to 63% of the original. This is a bit more impressive than the numbers actually tell. This particular dataset contains 1.73 GB of data that we don’t compress (it isn’t documents or revisions).
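A quick back-of-the-envelope calculation shows why the result is better than the headline figure. It assumes the 1.73 GB of non-compressible data is included, unchanged, in both the 5.98 GB and 3.79 GB totals; that is my reading of the numbers, not a separately measured value.

```python
total_before_gb = 5.98
total_after_gb = 3.79
not_compressed_gb = 1.73  # assumed to be present, unchanged, in both totals

# Ratio over the whole database, as quoted in the post.
overall = total_after_gb / total_before_gb

# Ratio over just the part that compression can touch (documents and revisions).
docs_only = (total_after_gb - not_compressed_gb) / (total_before_gb - not_compressed_gb)

print(f"overall: {overall:.0%}, documents and revisions only: {docs_only:.0%}")
```

Under that assumption, the documents and revisions themselves shrink to a bit under half of their original size.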

The largest collection had the following changes:

| Before | After | % Original |


This is good, but I think that there is still some room for tuning here. We’ll look into that after the feature is stabilized.

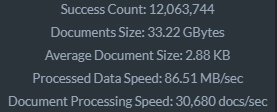

The last test that I ran was to check the Stack Overflow dataset. This is a dump of the Stack Overflow data, which provides both significant data size and real world data. Loading the dataset to RavenDB without compression yields the following numbers:

- A total of 18,338,102 documents loaded in 7 minutes and 58 seconds.

- The size on disk is: 51.46 GB

As for the compressed version?

- Loading time was 12 minutes, 40 seconds – 59% slower

- The size on disk, however, was: 21.39 GB – 41% of the original.

Those are good numbers, given the fact that we have these ingest rates:

- Uncompressed: 38,364 docs / sec

- Compressed: 24,129 docs / sec

In most cases, such high insert rates are going to be rare. And there is a lot of capacity here for normal workloads.
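For reference, the ingest rates and the relative slowdown follow directly from the document count and the two load times. The snippet below just redoes that arithmetic; the variable names are mine.

```python
docs = 18_338_102

uncompressed_seconds = 7 * 60 + 58   # 7 minutes, 58 seconds
compressed_seconds = 12 * 60 + 40    # 12 minutes, 40 seconds

uncompressed_rate = docs / uncompressed_seconds
compressed_rate = docs / compressed_seconds

# Extra load time relative to the uncompressed run.
slowdown = compressed_seconds / uncompressed_seconds - 1

print(f"uncompressed: {uncompressed_rate:,.0f} docs/sec")
print(f"compressed:   {compressed_rate:,.0f} docs/sec")
print(f"slowdown:     {slowdown:.0%}")
```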

So far I talked only about write times, but what are the costs on reads? A simple way to look into the cost is to index the data. This forces RavenDB to access all the data in the relevant collection, so that gives us a good test.

On my production dataset, without compression, a simple index was clocked at:

Total indexing time: 1 minute, 50 seconds.

With compression, the same index was clocked at:

Total indexing time: 1 minute, 36 seconds.

This is not a mistake. We are actually seeing the compressed version run faster. The reason for this is simple: the cost of I/O. In this case, the uncompressed indexing had to read 3.32 GB from disk while the compressed version had to read a third of that. It turns out that this matters, a lot, and that it has a huge impact on the overall time.

Doing the same testing on the Stack Overflow dataset, I created an index covering 12 million of those documents, and I clocked the uncompressed version at:

Total time to index the data (this particular collection stands at 47.32 GB) was: 7 minutes, 2 seconds.

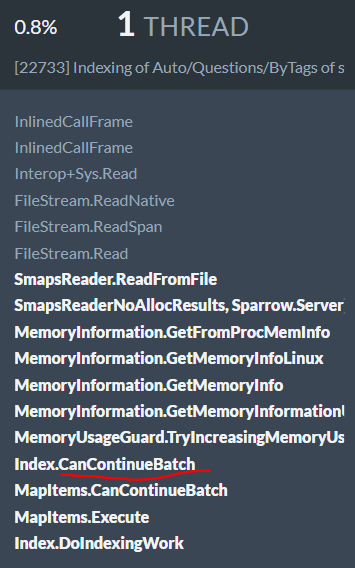

The compressed database, on the other hand, had to deal with just 18.76 GB of data, but proceeded at a much slower rate, about 7,000 docs/sec. I’m pretty sure that the underlying reason is that we have enough data, and we are indexing it quickly enough and in big enough batches, to start seeing memory allocation issues. In fact, I was able to confirm that. Here is the output from RavenDB:

What we can see here is that we are blowing the memory budget for the index. Whenever we do that, we have to take into account the state of the system, to figure out if we need to stop the current batch and release resources. In this case, we are paying for that by checking every 16 MB of allocated memory. Given an average document size of 2.88 KB, that means that we need to run this (not inexpensive) check once every 6,000 documents or so. Once we fix that, I hope to see much better numbers.
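The "every 6,000 documents or so" figure is a rough estimate; here is the arithmetic behind it. It assumes that the memory allocated per indexed document is about equal to the average document size, which is a simplification on my part.

```python
check_interval_bytes = 16 * 1024 * 1024   # budget check every 16 MB allocated
avg_doc_bytes = 2.88 * 1024               # average document size of 2.88 KB

# Assumes allocations per document are roughly the size of the document itself.
docs_per_check = check_interval_bytes / avg_doc_bytes

print(f"one budget check roughly every {docs_per_check:,.0f} documents")
```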

However, remember that the whole point here is to trade CPU time for disk space. We certainly see the space savings here, so it isn’t surprising that this has an impact on performance.

A quick test of random reads throughout the Stack Overflow database also shows the impact, but in the other direction. I’m running these tests on a dedicated machine with 32 GB of RAM.

When using the compressed database, I can reach 95,000 reads / sec. When using uncompressed data, I can only reach a maximum of 85,000 reads / sec. The size of the collection I’m querying exceeds the size of the RAM when it isn’t compressed, so I think that at least part of that is related to the difference in I/O costs.

When running queries for both compressed and uncompressed in parallel (so they have to fight for the memory), I can see that the compressed database has a 1,500 – 2,500 reads / sec advantage over the uncompressed database.

In short, all of this leads to a good set of rules of thumb. Compression makes writes somewhat slower and currently makes indexing somewhat slower (we’ll be fixing that, probably by the time you read this post), but queries and reads tend to be faster due to reduced I/O.

The space savings can range from one half to two thirds of your data. If you care about disk space, especially if you have a dataset where many of your documents are actively used only for a while and rarely touched afterward, this is a good fit for you.

We intend to make this an experimental feature in RavenDB 5.0, get some field testing in the real world, and graduate it to on-by-default in future releases.

As usual, your feedback is critical and I would greatly appreciate it.

Comments

could you give an idea of the sizes (eg approx GB s) the clients were using that were causing issues, and were the issues financial or technical?

Your saving column is showing the wrong percentage, it shows after*100/before(%) where it should show (1 - after/before) * 100 (%)

Peter,

One of them was mostly concerned about a few GB of data in relational database exploding to 15+ GB in JSON format. The other had to deal with 800 GB+ in one instance and 1.3 TB in another.

Tal,

Thanks, I updated the post header

LZ4 can also use dictionaries created by zstd, and benefit accordingly, with the restriction that dictionaries should not be larger than 64 KB.

Tollen,

I did not know that. I think that the last time I did serious work on LZ4, it didn't have this capability. Given that we need zstd for the training capabilities, I don't think it would matter.
