Design exercise: Arbitrary range aggregations in RavenDB

RavenDB is really great at aggregations. Even if you have a stupendous amount of information, it will do really well at crunching through the data and summarizing it for you. This is due to the way RavenDB implements map/reduce operations: it allows us to instantly give you aggregation results, regardless of data size. However, this approach requires that you tell RavenDB up front how you want to do the aggregation. This allows RavenDB to do the work ahead of time, as you modify the data, instead of each time you query for it.

A question was raised on the mailing list: how can you use RavenDB to do arbitrary ranges during aggregation? For example, let’s say that we want to be able to look at the total charges for a customer, but slice them so we’ll have:

- Active – 7 days back

- Recent – 7 – 21 days back

- History – 21 – 60 days back

And each user can define their own time periods for the Active / Recent / History ranges. That complicates things somewhat, but luckily, RavenDB has multiple ways to solve this issue. First, let’s create the appropriate index for this.

This isn’t really anything special. It simply aggregates the data by company and by date. That isn’t enough for what we want to query, though. For that, we need to reach for another tool in our belt: facets.
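To make the "aggregate by company and by date" step concrete, here is a minimal sketch in plain Python. It is not RavenDB index syntax and it is not the index from this post; the record shape (`company`, `date`, `amount`) and the function names are assumptions made up for illustration. It only shows the kind of grouping such a map/reduce index computes.

```python
from collections import defaultdict
from datetime import date

# Hypothetical charge records; the field names are assumptions for illustration.
charges = [
    {"company": "companies/1", "date": date(2019, 7, 30), "amount": 40.0},
    {"company": "companies/1", "date": date(2019, 7, 30), "amount": 10.0},
    {"company": "companies/1", "date": date(2019, 7, 12), "amount": 25.0},
    {"company": "companies/2", "date": date(2019, 7, 30), "amount": 5.0},
]

def map_charge(charge):
    # Map step: emit one entry per charge, keyed by (company, day).
    return (charge["company"], charge["date"]), charge["amount"]

def reduce_daily(entries):
    # Reduce step: sum all amounts that share the same (company, day) key.
    totals = defaultdict(float)
    for key, amount in entries:
        totals[key] += amount
    return dict(totals)

daily_totals = reduce_daily(map_charge(c) for c in charges)
```

The result has one entry per company per day, which is much smaller than the raw data when there are many charges per day.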

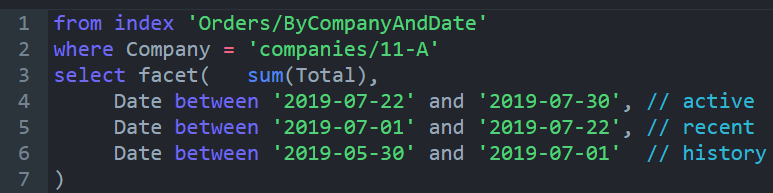

Here is the relevant query:

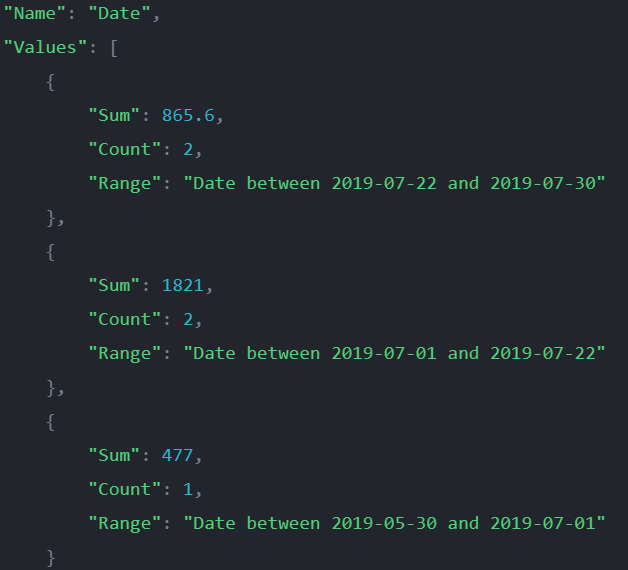

And here is what the output looks like:

The idea is that we have two stages for the process. First, we use the map/reduce index to pre-aggregate the data at a daily level. Then we use facets to roll up the information to the level we desire. Instead of having to go through the raw data, we can operate on the partially aggregated data and dramatically reduce the overall cost.
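The second stage can be sketched the same way. This is a plain Python illustration of rolling daily totals up into user-defined ranges, not RavenDB's facets API; the `roll_up` function, the sample values, and the choice of half-open boundaries (a day exactly 7 days back counts as Recent, not Active) are all assumptions for the sake of the example.

```python
from datetime import date

# Pre-aggregated daily totals for one company (the output of stage one).
# The values are hypothetical.
daily_totals = {
    date(2019, 7, 30): 50.0,   # 1 day back
    date(2019, 7, 21): 25.0,   # 10 days back
    date(2019, 7, 1): 100.0,   # 30 days back
    date(2019, 4, 1): 7.0,     # more than 60 days back, falls in no range
}

def roll_up(daily_totals, today, ranges):
    """Sum daily totals into named ranges of (min_days_back, max_days_back)."""
    result = {name: 0.0 for name in ranges}
    for day, total in daily_totals.items():
        days_back = (today - day).days
        for name, (start, end) in ranges.items():
            # Half-open interval (an assumption), so a boundary day
            # lands in exactly one range.
            if start <= days_back < end:
                result[name] += total
    return result

# Each user can supply their own boundaries here.
ranges = {"Active": (0, 7), "Recent": (7, 21), "History": (21, 60)}
summary = roll_up(daily_totals, date(2019, 7, 31), ranges)
```

Because the roll-up only touches one entry per day, changing the range boundaries per user is cheap: at most 60 entries per company are read for the ranges above, no matter how many raw charges there were.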

More posts in "Design exercise" series:

- (01 Aug 2019) Complex data aggregation with RavenDB

- (31 Jul 2019) Arbitrary range aggregations in RavenDB

- (30 Jul 2019) File system abstraction in RavenDB

- (19 Dec 2018) Distributing (consistent) data at scale, answer

- (18 Dec 2018) Distributing (consistent) data at scale

- (26 Nov 2018) A generic network protocol
