TimeSeries in RavenDB: Exploring the requirements

Last week I posted about some timeseries work that we have been doing with RavenDB. But I haven’t actually talked about the feature in this space before, so I thought that this would be a good time to present what we want to build.

The basic idea with timeseries is that this is a set of data points taken over time. We usually don’t care that much about an individual data point but care a lot about their aggregation. Common usages for time series include:

- Heart beats per minute

- CPU utilization

- Central bank interest rate

- Disk I/O rate

- Height of ocean tide

- Location tracking for a vehicle

- USD / Bitcoin closing price

As you can see, the list of things that you might want to apply this to is quite diverse. In a world that keeps getting more and more IoT devices, timeseries storing sensor data are becoming increasingly common. We looked into quite a few timeseries databases to figure out what needs they serve when we set out to design and build timeseries support for RavenDB.

RavenDB is a document database, and we envision timeseries support as something that you use at the document boundary. A good example of that would be the heartrate example. Each person has their own timeseries that records their own heartrate over time. In RavenDB, you would model this as a document for each person, and a heartrate timeseries on each document.

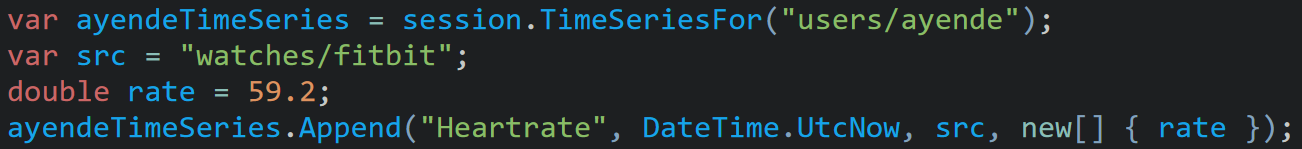

Here is how you would add a data point to my Heartrate’s timeseries:

I intentionally start from the Client API, because it allows me to show off several things at once.

- Appending a value to a timeseries doesn’t require us to create it upfront. It will be created automatically on first use.

- We use UTC date times for consistency and the timestamps have millisecond precision.

- We are able to record a tag (the source for this measurement) on a particular timestamp.

- The timeseries will accept an array of values for a single timestamp.

Each one of those items is quite important to the design of RavenDB timeseries, so let’s address them in order.

The first thing to address is that we don’t need to create timeseries ahead of time. Doing so would introduce a level of schema to the database, which is something that we want to avoid. We want to allow the user complete freedom and a minimum of fuss when they are building features on top of timeseries. That does lead to some complications on our end. We need to be able to support timeseries merging, allowing you to append values on multiple machines and merge them together into a coherent whole.

Given the nature of timeseries, we don’t expect to see conflicting values. While you might see the same values come in multiple times, we assume that in that case you’ll likely just get the same values for the same timestamps (duplicate writes). In the case of different writes on different machines with different values for the same timestamp, we’ll arbitrarily select the largest of those values and proceed.
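To make the merge rule concrete, here is a minimal sketch in Python of two nodes' copies of the same timeseries being merged. It is only an illustration of the rule described above, not RavenDB's implementation: the `merge_series` function, the dictionary layout, and the sample readings are all hypothetical, and comparing multi-value entries as tuples (element by element) is my own assumption about what "largest" means.

```python
from datetime import datetime, timezone


def merge_series(local, remote):
    """Merge two copies of one timeseries, each a dict of timestamp -> tuple of values."""
    merged = dict(local)
    for timestamp, values in remote.items():
        if timestamp in merged:
            # Duplicate writes carry identical values, so max() changes nothing.
            # For a real conflict we keep the larger entry (tuple comparison is
            # an assumption made for this sketch).
            merged[timestamp] = max(merged[timestamp], values)
        else:
            merged[timestamp] = values
    # Return the entries ordered by timestamp.
    return dict(sorted(merged.items()))


t1 = datetime(2019, 1, 1, 8, 0, 0, tzinfo=timezone.utc)
t2 = datetime(2019, 1, 1, 8, 0, 1, tzinfo=timezone.utc)
t3 = datetime(2019, 1, 1, 8, 0, 2, tzinfo=timezone.utc)

node_a = {t1: (72.0,), t2: (75.0,)}
node_b = {t2: (78.0,), t3: (80.0,)}  # conflicts with node_a at t2

merged = merge_series(node_a, node_b)
```

Because the rule always picks the larger entry, the result is the same regardless of which node's data is applied first, which is what lets every machine converge on the same timeseries.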

Another implication of this behavior is that we need to handle out-of-order updates. Typically in timeseries, you’ll record values in increasing date order, but we need to be able to accept values out of order. This turns out to be pretty useful in general, not just for being able to handle values from multiple sources, but also because it is possible that you’ll need to load archived data into an already existing timeseries. The rule that guided us here was that we wanted to allow the user as much flexibility as possible, and we’ll handle any resulting complexity.
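As a rough sketch of what accepting values in any order means, the Python snippet below keeps entries sorted by timestamp no matter when they arrive. The `Series` class is hypothetical and says nothing about how RavenDB stores data internally; overwriting an existing timestamp on a local append is also an assumption made only to keep the example short.

```python
import bisect
from datetime import datetime, timezone


class Series:
    """In-memory timeseries that stays sorted even when appends arrive out of order."""

    def __init__(self):
        self._timestamps = []  # always kept in ascending order
        self._values = {}      # timestamp -> value

    def append(self, timestamp, value):
        if timestamp not in self._values:
            # insort places the timestamp at its sorted position.
            bisect.insort(self._timestamps, timestamp)
        # Assumption for this sketch: a later local write replaces the earlier one.
        self._values[timestamp] = value

    def entries(self):
        return [(ts, self._values[ts]) for ts in self._timestamps]


t1 = datetime(2019, 1, 1, 8, 0, tzinfo=timezone.utc)
t2 = datetime(2019, 1, 1, 9, 0, tzinfo=timezone.utc)
t3 = datetime(2019, 1, 1, 10, 0, tzinfo=timezone.utc)

series = Series()
series.append(t3, 80.0)  # newest value arrives first
series.append(t1, 72.0)  # archived data loaded later
series.append(t2, 75.0)

ordered = [ts for ts, _ in series.entries()]
```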

The second topic to deal with is the time zone and precision. Given the overall complexity of time zones in general, we decided that we don’t want to deal with any of that and want to store the times in UTC only. That allows you to work properly with timestamps taken from different locations, for example. Given the expected usage scenarios for this feature, we also decided to support millisecond precision. We looked at supporting only second-level precision, but that was far too limiting. At the same time, supporting a finer resolution than a millisecond would result in much lower storage density for most situations and is very rarely useful.

Using DateTime.UtcNow, for example, we get a resolution of 0.5 – 15 ms, so trying to represent time at a finer resolution isn’t really going to give us anything. Other platforms have similar constraints, which added to the consideration of only capturing the time to millisecond granularity.
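On the client side, the UTC-only and millisecond-precision rules amount to a small normalization step. The Python sketch below shows one way to do it; the `to_utc_millis` helper is a hypothetical name of my own, not part of any RavenDB API.

```python
from datetime import datetime, timedelta, timezone


def to_utc_millis(moment):
    """Convert an aware datetime to UTC and drop anything finer than a millisecond."""
    if moment.tzinfo is None:
        # A naive datetime carries no offset, so we cannot convert it safely.
        raise ValueError("expected a timezone-aware datetime")
    utc = moment.astimezone(timezone.utc)
    # Keep whole milliseconds only: 123456 microseconds becomes 123000.
    return utc.replace(microsecond=utc.microsecond // 1000 * 1000)


# A reading taken at 10:30:15.123456 in a UTC+2 location.
local = datetime(2019, 1, 1, 10, 30, 15, 123456, tzinfo=timezone(timedelta(hours=2)))
normalized = to_utc_millis(local)
```

Here `normalized` is 08:30:15.123 UTC, so readings taken in different time zones line up on a single timeline.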

The third item on the list may be the most surprising one. RavenDB allows you to tag individual timestamps in the timeseries with a value. This gives you the ability to record metadata about the value. For example, you may want to use this to record the type of instrument that supplied the value. In the code above, you can see that this is a value that I got from a FitBit watch. I’m going to assign it a lower confidence value than a value that I got from an actual medical device, even if both of those values are going to go on the same timeseries.

We expect that the number of unique tags for values in a given time period is going to be small, and optimize accordingly. Because of the number of weasel words in the last sentence, I feel that I must clarify. A given time period is usually in the order of an hour to a few days, depending on the number of values and their frequencies. And what matters isn’t so much the number of values with a tag, but the number of unique tags. We can very efficiently store tags that we have already seen, but having each value tagged with a different tag is not something that we designed the system for.
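One common way to make a small set of repeated tags cheap to store is dictionary encoding: each distinct tag is written once, and every value only carries a small integer id. The Python sketch below illustrates that general idea under my own assumptions; it is not a description of RavenDB's storage format, and the `TagTable` class and the tag names are hypothetical.

```python
class TagTable:
    """Maps each distinct tag to a small integer id, storing the tag text only once."""

    def __init__(self):
        self._ids = {}   # tag -> id
        self._tags = []  # id -> tag

    def encode(self, tag):
        if tag not in self._ids:
            # First time we see this tag: give it the next free id.
            self._ids[tag] = len(self._tags)
            self._tags.append(tag)
        return self._ids[tag]

    def decode(self, tag_id):
        return self._tags[tag_id]

    def __len__(self):
        return len(self._tags)


table = TagTable()
readings = [
    (72.0, "watches/fitbit"),
    (74.0, "watches/fitbit"),
    (71.0, "devices/ecg-1"),
]
# Each reading keeps its value plus a compact tag id.
encoded = [(value, table.encode(tag)) for value, tag in readings]
```

With only a few unique tags, the table stays tiny no matter how many values are recorded. If every value had its own tag, the table would grow as fast as the data, which is the case the design does not optimize for.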

You can also see that the tag that we have provided looks like a document id. This is not accidental. We expect you to store a document id there, and use the document itself to store details about the value, for example, whether the device that captured the value is medical grade or just a hobbyist one. You’ll be able to filter by the tag as well as by the related tag document’s properties. But I’ll show that when I post about queries, in a different post.
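Until the post about queries, here is a small in-memory Python sketch of the two kinds of filtering, just to show the idea of following a tag to its document. Everything in it is hypothetical: the document ids, the `grade` property, and the helper functions are stand-ins, not RavenDB query syntax.

```python
# Hypothetical tag documents, keyed by document id.
documents = {
    "watches/fitbit": {"grade": "hobbyist"},
    "devices/ecg-1": {"grade": "medical"},
}

# Entries as (milliseconds since start, value, tag).
entries = [
    (0, 72.0, "watches/fitbit"),
    (1000, 71.0, "devices/ecg-1"),
    (2000, 74.0, "watches/fitbit"),
]


def filter_by_tag(entries, tag):
    """Keep only the entries recorded with the given tag."""
    return [entry for entry in entries if entry[2] == tag]


def filter_by_tag_property(entries, documents, key, expected):
    """Keep the entries whose tag document has the expected property value."""
    return [
        entry
        for entry in entries
        if documents.get(entry[2], {}).get(key) == expected
    ]


fitbit_only = filter_by_tag(entries, "watches/fitbit")
medical_only = filter_by_tag_property(entries, documents, "grade", "medical")
```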

The final item on the list that I want to discuss in this post is the fact that a timestamp may contain multiple values. There are actually quite a few use cases for recording multiple values for a single timestamp:

- Longitude and latitude GPS coordinates

- Bitcoin value against USD, EUR, YEN

- Systolic and diastolic reading for blood pressure

In each case, we have multiple values to store for a single measurement. You can make the case that Bitcoin vs. currencies may be stored as standalone timeseries, but GPS coordinates and blood pressure both produce two values that are not meaningful on their own. RavenDB handles this scenario by allowing you to store multiple values per timestamp, including support for each timestamp coming with a different number of values. Again, we are trying to make it as easy as possible to use this feature.

The number of values per timestamp is going to be limited to 16 or 32; we haven’t made a final decision here. Regardless of the actual maximum size, we don’t expect to have more than a few of those values per timestamp in a single timeseries.
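A short Python sketch of what multiple values per timestamp with an upper bound could look like follows. The limit of 32 is a placeholder, since the final number is undecided, and `validate_values` as well as the sample readings are hypothetical.

```python
from datetime import datetime, timezone

# Placeholder: the post says the limit will be 16 or 32, not yet decided.
MAX_VALUES = 32


def validate_values(values):
    """Return the values as a tuple of floats, rejecting empty or oversized entries."""
    values = tuple(float(v) for v in values)
    if not 1 <= len(values) <= MAX_VALUES:
        raise ValueError(f"expected 1 to {MAX_VALUES} values, got {len(values)}")
    return values


t1 = datetime(2019, 1, 1, 8, 0, tzinfo=timezone.utc)
t2 = datetime(2019, 1, 1, 8, 5, tzinfo=timezone.utc)

# Systolic and diastolic readings belong together on one timestamp.
blood_pressure = {t1: validate_values([120, 80])}

# Entries in the same timeseries may carry a different number of values.
mixed = {
    t1: validate_values([34.78, 32.08]),
    t2: validate_values([34.79, 32.09, 12.5]),
}
```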

Then again, the point of this post is to get you to consider this feature in your own scenarios and provide feedback about the kind of usage you want to have for this feature. So please, let us know what you think.

Comments

Are the values limited to double/numeric? That would be a reasonable limitation, but other timeseries (with discrete values) might be useful too. Examples: the color of a traffic light over time, whether a host was online at a given time, type of weather (clear sky, cloudy, rain, hail, thunderstorm, snow).

A use case I have in mind would be to track solar panel output (see pvoutput.org for example). Useful use cases would be to compare today 8:00 with yesterday 8:00, to find a jump in value (select timestamp where value > interpolated_value(timestamp - 1 day) * 1.5) to figure out when shade kicks in etc.

kvleeuwen, I expect that if you want to store such values, you'll use an enum and set the value in that manner. Otherwise, you actually got 8 bytes to work with, so that would work as well by just turning the ASCII to numeric values. :-)

If I understand correctly your scenario with the solar panels, you want to issue a query that would look roughly like this?

What sort of timescale are you looking at for this feature? I'd be interested in playing with it to see how we might make use of it... and feedback of course.

Hi Piers, This is going to be in 5.0, we plan to have the bits available for testing toward the end of the year.
