Memory management as the operating system sees it

About 15 years ago I got a few operating systems books and started reading them cover to cover. They were quite interesting to someone who was just starting to learn that there is something under the covers. I remember thinking that this was a pretty complex topic and that the operating system had to do a lot to keep everything running.

The discussion of memory was especially enlightening, since the details of what goes on behind the scenes of the flat memory model we usually take for granted are fascinating. In this post, I’m going to lay out a few terms and try to explain how the operating system sees memory and how that impacts your application.

The operating system needs to manage the RAM, and typically there is also some swap space available to spill things to. There are also memory mapped files, which come with their own backing store, but I’m jumping ahead a bit.

Physical memory – The amount of RAM on the device. This is probably the simplest to grasp here.

Virtual memory – The amount of virtual memory each process can access. This differs from process to process and is quite different from how much memory is actually in use.

- Reserved virtual memory – a section of virtual memory that was reserved by the process. The only thing that the operating system needs to do is not allocate anything within this range of memory. It comes with no other costs. Trying to access this memory without first committing it will cause a fault.

- Committed virtual memory – a section of virtual memory that the process has told the operating system it intends to use. The operating system commits to having this memory available when the process actually uses it. The system can also refuse to commit memory if it chooses to do so (for example, because it doesn’t have enough memory for that).

- Used virtual memory – a memory section that was previously committed and is actually in use. Committing memory doesn’t, by itself, assign any physical memory. Only when you access the memory will the OS actually assign a physical memory page to the address you just accessed. The distinction between the last two is quite important. It is very common to commit far more memory than is actually in use. By not taking any physical space until the memory is used, the OS can save a lot of work.
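The gap between committed and used memory can be observed from a process. The following is a minimal sketch, assuming Linux (it reads `/proc/self/statm`, and falls back to `None` elsewhere). Linux has no separate reserve and commit calls the way Windows does, so an anonymous `mmap` stands in for committed memory here. The names `resident_bytes` and `touch_pages` and the 16 MB size are made up for the illustration.

```python
import mmap

PAGE = mmap.PAGESIZE          # page size reported by the OS (often 4096)
SIZE = 16 * 1024 * 1024       # 16 MB, an arbitrary size for the demo


def resident_bytes():
    """Return this process's resident set size in bytes, or None if
    /proc/self/statm is not available (it is Linux specific)."""
    try:
        with open("/proc/self/statm") as f:
            resident_pages = int(f.read().split()[1])
    except (OSError, IndexError, ValueError):
        return None
    return resident_pages * PAGE


def touch_pages(region, length):
    """Write one byte into every page of `region` and return the page count."""
    touched = 0
    for offset in range(0, length, PAGE):
        region[offset] = 1    # the first write to a page is what makes it "used"
        touched += 1
    return touched


if __name__ == "__main__":
    before = resident_bytes()
    region = mmap.mmap(-1, SIZE)       # anonymous mapping: address space only
    after_map = resident_bytes()
    pages = touch_pages(region, SIZE)
    after_touch = resident_bytes()
    print("pages touched:", pages)
    print("resident before / after map / after touch:",
          before, after_map, after_touch)
    region.close()
```

On a typical Linux machine the resident size should barely move after the mapping is created and grow by roughly the mapping size once every page has been written, though the exact numbers depend on the kernel and its settings.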

Memory mapped files – a section of the virtual address space that uses a particular file as its backing store.

Shared memory – a named piece of memory that may be mapped into more than a single process.

All of these interact with one another in non-trivial ways, so it can sometimes be hard to figure out what is going on.

The interesting case happens when the amount of memory we want to access is higher than the amount of physical RAM on the machine. At this point, the operating system needs to start juggling things around and actually make decisions.

Reserving virtual memory is mostly a very cheap operation. It is useful when you will want some contiguous memory space but don’t need all of it right now. On 32-bit systems, the address space is quite constrained, so a reservation can fail, but on 64-bit systems you typically have enough address space that you don’t have to worry about it.

Committing virtual memory is where we start getting into interesting issues. We ask the operating system to ensure that we can access this memory, and it will typically say yes. But in order to make that commitment, the OS needs to look at its global state. How many other commitments has it made? In general, the amount of memory commitments that the OS can safely make is limited to the size of the RAM plus the size of the swap. Windows will simply refuse to commit more (but it can dynamically increase the size of the swap as load grows), while Linux, with its default overcommit settings, will happily ignore the limit and rely on the fact that applications rarely make use of all the memory they commit.

So committed memory is counted against the limit, but it isn’t memory that is actually in use. Only when a process accesses the memory will the OS allocate it. Until then, it is just a ledger entry.
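The ledger idea can be made concrete with a toy model. This is only a sketch of the accounting described above, not a real operating system API: the `CommitLedger` class, its `strict` flag, and the unit-less sizes are all hypothetical, and real kernels use more involved rules.

```python
class CommitLedger:
    """Toy model of commit accounting. Hypothetical, not a real OS API."""

    def __init__(self, ram, swap, strict=True):
        self.limit = ram + swap    # the "safe" limit from the text
        self.strict = strict       # True: refuse past the limit; False: overcommit
        self.committed = 0         # memory promised to processes
        self.used = 0              # memory actually backed by pages

    def commit(self, size):
        """Record a promise. Returns False if a strict ledger refuses it."""
        if self.strict and self.committed + size > self.limit:
            return False
        self.committed += size
        return True

    def touch(self, size):
        """A process starts using `size` units of already committed memory."""
        if self.used + size > self.committed:
            raise ValueError("touching memory that was never committed")
        if self.used + size > self.limit:
            raise MemoryError("out of RAM and swap")
        self.used += size


if __name__ == "__main__":
    strict = CommitLedger(ram=8, swap=2, strict=True)
    print(strict.commit(6), strict.commit(6))    # True False
    relaxed = CommitLedger(ram=8, swap=2, strict=False)
    print(relaxed.commit(6), relaxed.commit(6))  # True True
    print(relaxed.committed, relaxed.used)       # 12 0
```

The strict ledger refuses the second request up front, which is the Windows-style behavior. The relaxed one accepts both and only runs into trouble later, if the processes actually touch more than RAM plus swap can hold.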

But the memory on your machine is not just stuff that processes allocated. There is a bunch of other stuff that may make use of the physical memory. There are I/O devices, for example, which we’ll ignore because they don’t matter for us at this point.

Of much more interest to us at this point is the notion of memory mapped files. These are most certainly memory resident, but they aren’t counted against the commit size of the system. Why is that? Because when we use a memory mapped file, by definition, we are also supplying a file that will be the backing store for this memory. That, in turn, means that we don’t need to worry about where we’ll put this memory if we need to evict some of it for other purposes, because we have the actual file.
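To see a file acting as the backing store, here is a minimal sketch using Python's standard `mmap` module. The helper name `write_through_mapping` and the temporary file are assumptions made for the example; the point is only that a plain memory write ends up in the file.

```python
import mmap
import os
import tempfile


def write_through_mapping(path, payload):
    """Map `path` into memory, overwrite its start with `payload`, flush it,
    and return the bytes read back through ordinary file I/O."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), 0) as view:   # length 0 maps the whole file
            view[:len(payload)] = payload        # a plain memory write
            view.flush()                         # ask the OS to write dirty pages back
    with open(path, "rb") as f:
        return f.read(len(payload))


if __name__ == "__main__":
    fd, path = tempfile.mkstemp()
    try:
        os.write(fd, b"\0" * mmap.PAGESIZE)      # the file needs some size to be mapped
        os.close(fd)
        print(write_through_mapping(path, b"hello"))
    finally:
        os.remove(path)
```

Because the pages can always be written back to (or re-read from) the file, the OS is free to evict them without needing any swap space for them.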

All of this, of course, revolves around the issue of what will actually reside in physical memory. And that leads us to another very important term:

Active working set – The portion of the process memory that resides in physical RAM. Some portions of the process memory may have been paged out to disk (if the system is overloaded) or not loaded yet (if the process has just mapped a file and hasn’t accessed its contents). The actual term refers to the amount of memory that the process has recently been using. Under load, the working set may be larger than the amount of physical memory available, leading to thrashing. The OS will keep evicting pages to the page file and then loading them again, in a vicious cycle that typically kills the machine.

Now that we know all these terms, let’s take a look at what RavenDB reports in one such case:

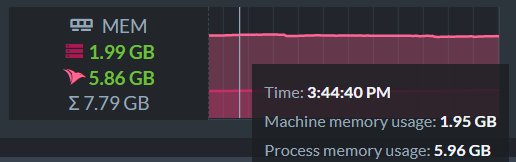

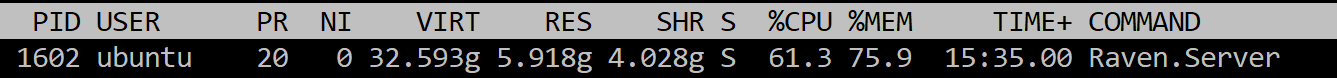

The total system memory is 8GB (about 200MB are reserved for the hardware). RavenDB is using 5.96GB and the machine’s entire memory usage is 1.95GB. How can a single process on the machine use more memory than the entire machine?

The reason for that is that we aren’t always talking about the same thing. Here is the output of pertinent memory information from this machine (cat /proc/meminfo).

You can see that we have a total memory of 8GB, but only 140MB are free. In active use we have 2.2GB and a lot of stuff in inactive.

There is also the MemAvailable field, which says that we have 6.2GB available. But what does this mean? It is a guesstimate of how much memory we can start using without starting to swap. Taking the values from top, it might be easier to understand:

There are about 6GB of cached data, but what is it caching? The answer is that RavenDB is making use of memory mapped files, so we gave the system extra swap space, so to speak. Here is what this looks like when looking at the RavenDB process:

In other words, large parts of our working set are composed of memory mapped files, and we don’t want to count that against the memory actually in use in the system. It is very common for us to operate with almost no free memory, because that memory is being used by the memory mapped files, and the OS knows that it can just reclaim this memory if new demands come in.
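If you want to pull out these numbers yourself, the following sketch reads the same kind of information from `/proc`. It assumes Linux, and it assumes a kernel recent enough to expose `RssAnon`, `RssFile` and `RssShmem` in `/proc/self/status`; on other systems it simply prints nothing. The helper names `parse_kb_fields` and `read_fields` are made up for the example.

```python
def parse_kb_fields(text):
    """Parse 'Name:   1234 kB' lines into a dict of name -> bytes.
    Lines that do not carry a kB value are skipped."""
    fields = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and len(parts) == 2 and parts[1] == "kB" and parts[0].isdigit():
            fields[name.strip()] = int(parts[0]) * 1024
    return fields


def read_fields(path, wanted):
    """Return the wanted fields from a /proc file, or {} if it cannot be read."""
    try:
        with open(path) as f:
            fields = parse_kb_fields(f.read())
    except OSError:
        return {}
    return {name: fields[name] for name in wanted if name in fields}


if __name__ == "__main__":
    # System-wide view: free memory versus memory that could be reclaimed.
    system = read_fields("/proc/meminfo",
                         ["MemTotal", "MemFree", "MemAvailable", "Cached"])
    # Per-process view: resident memory split by what backs it.
    process = read_fields("/proc/self/status",
                          ["VmRSS", "RssAnon", "RssFile", "RssShmem"])
    for name, value in {**system, **process}.items():
        print(f"{name:>12}: {value / 2**20:10.1f} MiB")
```

For a process that leans heavily on memory mapped files, `RssFile` would be expected to make up most of `VmRSS`, which is the file-backed, reclaimable part of the working set discussed above.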

Comments

You really should consider writing an operating system, or at least make a linux-based distro. You can call it RhinocerOS. Or perhaps guide others to write one.

Quintonn, That is what Unikernels are for. :-)
