Reviewing ResinPart IV

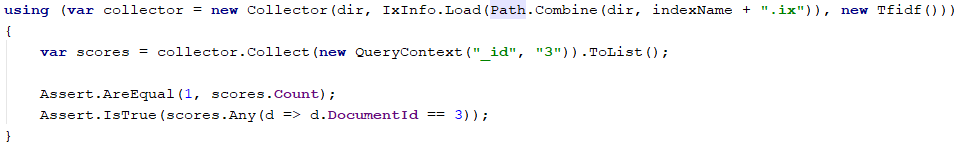

In the previous part, I looked at UpsertTransaction in Resin and speculated about how the queries work. In this one, I’m going to try to figure out how queries work. Our starting point is this:

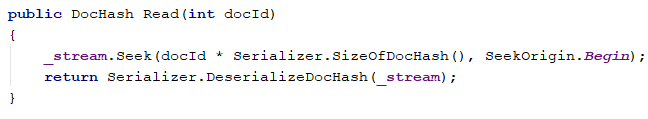

We start from the index header, and we’ll traverse down from there. One one the first things that happen in the Collector is the creation of the DocHashReader, whose sole purpose is to… read a document hash. It is doing it like this:

The problem is that there is really no need to write all this code. It would be simple to use:

It does the exact same thing, but with a lot less work all around.

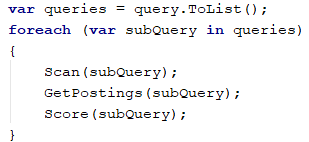

The core of the Collect method is:

For our purposes, we are running just a single query, so no need to worry about sub queries at this time. Looking at the Scan method, the first thing it does is to open the tri file. It looks like I missed a bunch of stuff there.

The field name hash is the one used in the key, not the name itself. That means that you aren’t limited to just stuff that is safe to use on the file system. There is also a “.six” file that I’ve not seen before, it is related to tries, and I’m skipping it for now because I want to have a separate post about them.

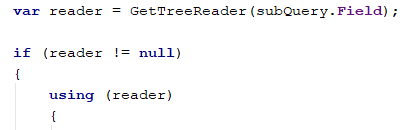

It is used like this:

The problem I have is that this means that the GetTreeReader will open a bunch of files, then immediately close them. That is going to be a lot of system calls that are being generated, which can probably be saved with some effort.

The really interesting bit is here:

This is where the magic happens. This is the core for actually searching over the tries and figuring out what values we actually have there.

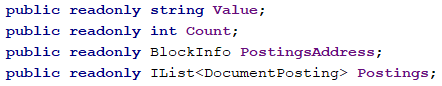

The result of this is a List<(string Field, Word Word)>. And Word contains:

Reminder, the Postings is actually the list of all the documents that contain this value in this field, and the number of times that this value appear in the document.

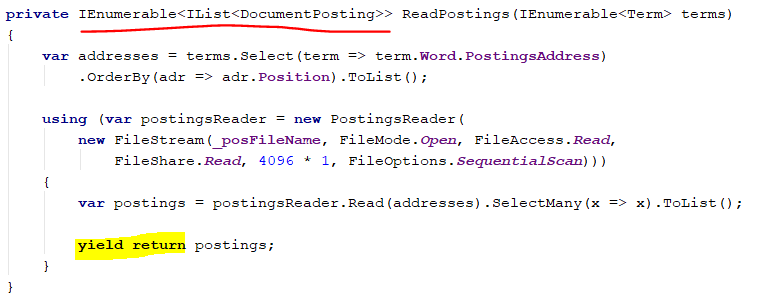

The next method is GetPostings, which starts by reading them:

The problem I have here is that this method looks like it has been refactored half way. It can only return a single list, and again, there is the over use of Linq operations and their allocations.

As an aside on code formatting, in many places in the code so far, I have chosen to minify the code without changing its meaning, because there is such a high overhead to the differences. I’m doing this fairly automatically, because it help me read and understand. Here is a before and after example, which was drastic enough to make me realize I’m doing this.

|

|

|

Functionally, those two are doing the same, and I fine the after option much more readable.

The Sum method here is pretty horrible, in the sense that it has high complexity, luckily, it is never called with more then one list, so that cost is hidden.

A fun exercise would be to compute the actual complexity with real inputs. I just looked at it and went “this gotta be expensive” then figured out that the code only ever call it with a single list, so I skipped it.

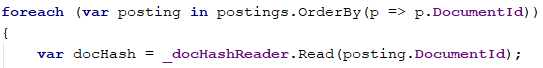

After getting the posting, we need to score the query. This is where we see the usage of the document hash. They are used to go from the document id to check if the document has been deleted. The actual scoring is Tf-Idf, so pretty standard and not interesting here.

It does bugs me to see things like this:

Sorting can be very expensive, and I’m pretty sure that it is not actually needed here, and it would improve performance quite impressively to remove it.

Okay, now we are almost done with the query, all that remains is investigating this line:

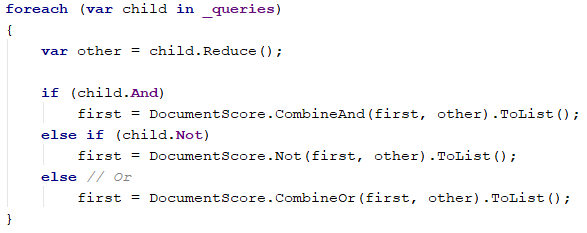

The unbounded result set is annoying, but I gave up that fight, I’m afraid. Let us see what Reduce does. In complex queries, I expect that it would merge all the subqueries and do filtering / intersection/etc.

And it does just that, which is great. I do wonder if scoring the results could be pushed after the query reducing, because that would reduce the amount of work that needs to be done, but that is a small optimizations, probably.

Okay, that is enough for this post. I now have a pretty good understanding on how queries actually work. Next, I’m going to look at some other pieces of the code that I haven’t looked at, then focus of the trie.

Comments

To be honest, I prefer a programming style with a lots of IF-statements because it is easier to make breakpoints and using a profiling tool, you can easily see when something unexpected happened.

Re: the sorting by documentId - I'd imagine it's doing that so that (in concert with the Read method you already shortened) it's trying to access the file sequentially/forwards. Whether that's an optimization, and whether it's worth doing here I'm not sure.

Comment preview