Reviewing ResinPart I

Resin is a “Cross-platform document database and search engine with query language, API and CLI”. It is written in C#, and while I admit that reading C# code isn’t as challenging as diving into a new language, a project that has a completely new approach to a subject that is near and dear to my heart is always welcome. It is also small, coming at about 6,500 lines of code, so that make for quick reading.

I’m reviewing commit ddbffff88995226fa52236f6dd6af4a48c833f7a.

As usual, I’m going to start reading the code in alphabetical file order, and then jump around as it make sense. The very first file I run into is Tests/AnalyzerTests where we find the following:

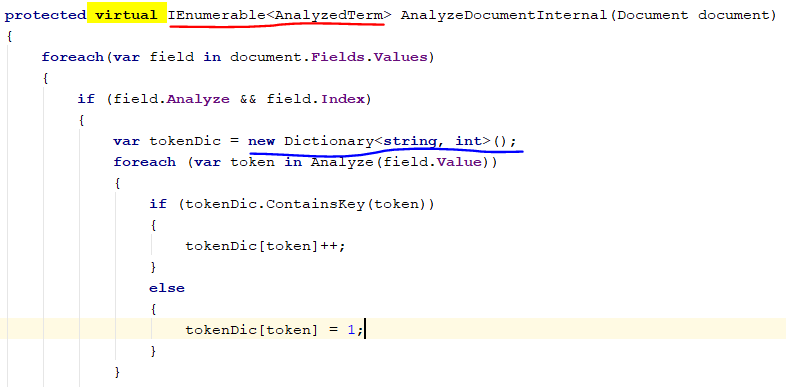

This is really interesting, primarily because of what it tells me. Analyzers are pretty much only used for full text search, such as Lucene or Noise. Jumping into the analyzer, we see:

This tell me quite a few things. To start with, this is a young project. The first commit is less then 18 months ago and I’m judging it with the same eye I use to looking at our own code. This code needs to be improved, for several reasons.

First, we have a virtual method call here, probably intended to be an extension point down the line. Currently, it isn’t used, and we pay the virtual call cost for no reason. Next we have the return value. IEnumerable is great, but this method is using yield, which means that we’ll have a new instance created per document. For the same reason, the tokenDic is also problematic. This one is created per field’s value, which is going to cost.

One of the first thing you want to have when you start worrying about performance is controlling your allocations. Reducing allocations in this case, by reusing the dictionary instance, or avoiding the yield would help. Lucene did a lot of stuff right in that regard, and it ensures that you can reuse instances wherever possible (almost always), since that can dramatically improve performance.

Other than this, we can also see that we have Analyze and Index features, for now I’m going to assume that they are identical to Lucene until proven otherwise. This was the analyzer, but what is going on with the tokenizer? Usually that is a lot more low level.

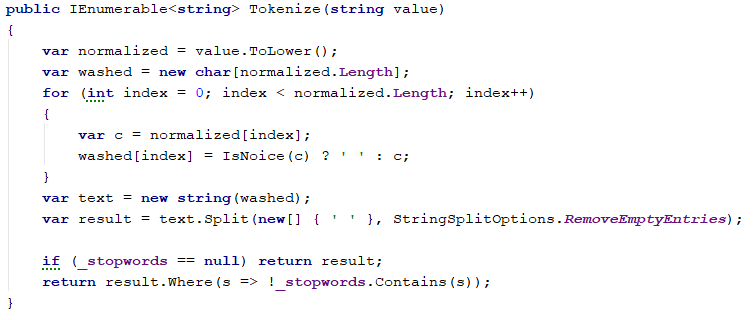

The core of the tokenizer is this method (I prettified it a bit to make it fit better on screen):

As far as I can tell so far, most of the effort in the codebase has gone into the data structures used, not to police allocations or get absolute performance. However, even so this would be one of the first places I would look at whenever performance work would start. (To be fair, speaking with the author of this code, I know there hasn’t been any profiling / benchmarking on the code).

This code is going to be at the heart of any indexing, and for each value, it is going to:

- Allocate another string with the lowered case value.

- Allocate a character buffer of the same size as the string.

- Process that character buffer.

- Allocate another string from that buffer.

- Split that string.

- Use a lambda on each of the parts and evaluate that against the stopwords.

That is going to have a huge amount of allocations / computation that can be saved. Without changing anything external to this function, we can write the following:

This will do the same, but at a greatly reduced allocation cost. A better alternative here would be to change the design. Instead of having to allocate a new list, send a buffer and don’t deal with strings directly, instead, deal with a spans. Until we .NET Core 2.0 is out, I’m going to skip spans and just use direct tokens, like so:

There are a few important things here. First, the code now don’t do any string allocations, instead, it is operating on the string characters directly. We have the IsStopword method that is now more complex, because it needs to do the check without allocating a string and while being efficient about it. How it left as an exercise for the reader, but it shouldn’t be too hard.

One thing that might not be obvious is that tokens list that we get as an argument. The idea here is that the caller code can reuse this list (and memory) between calls, but that would require a major change in the code.

In general, not operating on strings at all would be a great thing indeed. We can work with direct character buffers, which will allow us to be mutable. Again, spans would probably fit right into this and be of great help.

That is enough for now, I know we just started, but I’ll continue this in the next post.

Comments

We have the IsStopword method that is now more complex, because it needs to do the check without allocating a string and while being efficient about it.

Given the following restrictions:

- a method with the signature bool IsStopWord(string value, int offset, int len)

- a list of stopwords as a IEnumerable of string

- new string alloc not allowed

... I see no clear path towards a solid equals method

If stopwords were a IEnumerable of char arrays we could do SequenceEqual.

Did you think about this kind of optimizations when you first build RavenDB (even did you have all the knowledge for seing it) or you just applied the "premature optimization is a root of all devil" and optimized your codebase with the time ?

Marcus, Here you go:

Remi, The first version of RavenDB did a LOT of things in a highly unoptimized manner. I was focused on getting things sort of working, I didn't even know what I'm supposed to look at compare to what I'm seeing today.

How would you design the signature of the method with spans, considering their stack-only limitations?

That compare API call you make, I hadn't seen it before. I think I understand now why you would prefer to work with char buffers.

Svick, I haven't had the time to play properly with spans. The stack only nature is one of their advantages, but it does complicate things. Might be something like:

bool MoveNext(string data, ref Span<string> token);And the idea is that you send an empty span the first time, then we use the token span as the state to know where to start on the next call.

Dont you need to increase start in better-optimizer.cs (line 13)?

Markus, Probably, if this was real code I would be running unit tests to verify it.

C# uses callvirt for all instance methods:

Comment preview