The crash at the Unicode text

So a customer of ours has been putting RavenDB 4.0 through its paces. They have a large database with some pretty nasty indexes, and they reported that when importing their existing data, they managed to crash RavenDB. The problem was probably some interaction between indexing and documents, but it reproduced pretty consistently.

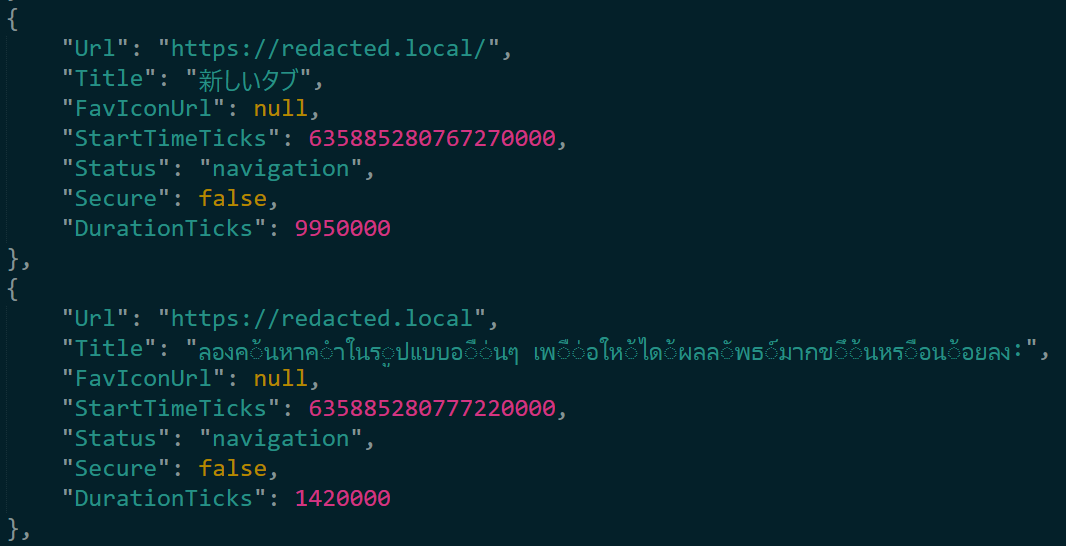

That was lucky, because I was able to narrow down the problem to the following document.

Literally just a week before getting this bug report we found a bug with multi-byte Unicode characters (we were counting them in chars, but using that count to index into a byte array), so that was our very first suspicion. In fact, while reviewing the relevant portions of the code, we were able to identify another location with a similar problem, and resolve it.
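To make that class of bug concrete, here is a minimal Python sketch. It is not RavenDB's code; the sample string and the variable names are made up for illustration. It only shows what happens when a length measured in characters is used as an offset into the UTF-8 bytes.

```python
# Illustrative only: a char count used as a byte length.
text = "naïve café"                 # made-up sample with two 2-byte characters

char_count = len(text)              # length in characters (code points)
utf8 = text.encode("utf-8")
byte_count = len(utf8)              # length in bytes, larger than char_count here

# The bug: treating the char count as if it were the byte length.
truncated = utf8[:char_count]       # silently drops the tail of the string

print(char_count, byte_count)
print(truncated.decode("utf-8", errors="replace"))
```

With pure ASCII text the two counts agree, which is why this kind of mistake can hide until a document with multi-byte characters shows up.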

That didn’t solve the problem, however. I kept banging my head against the Unicode issue, until I finally just gave up and went to tracing the code very carefully. And then I found it.

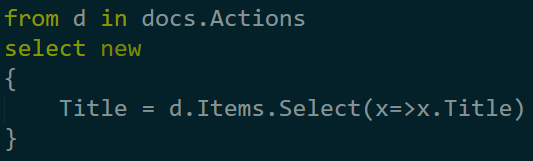

The underlying issue was that the data here is part of an array inside a document, and we had an index that looked like this:

The idea is that we gather all the titles from the items in the action, and allow searching on those. And somehow, the title from the second entry above was always pointing to invalid memory.

It took a bunch more investigation before I found out the root cause.

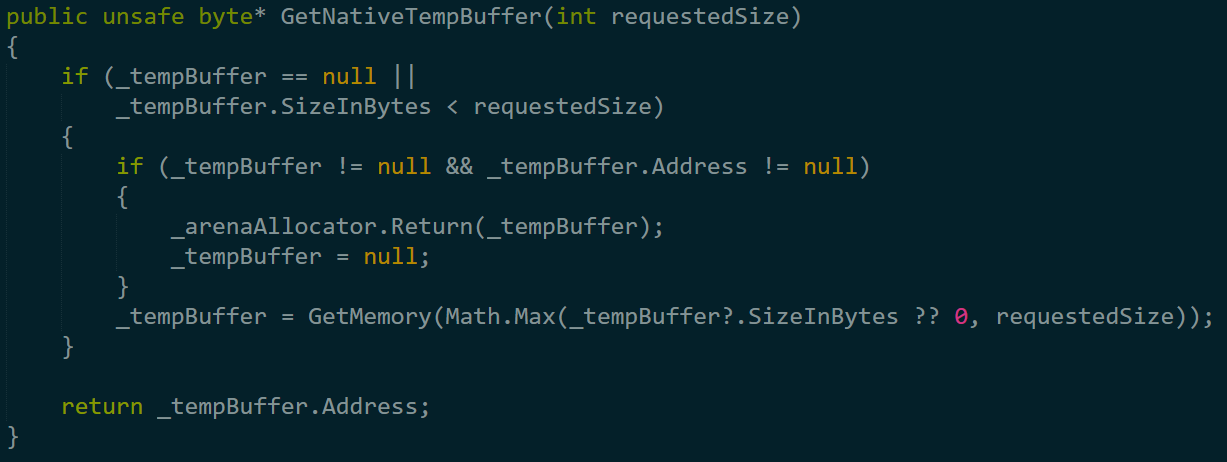

Here is where we found the problem:

But it is going to take a bit of explaining first. The second title is pretty long. Encoded as UTF-8 it is 176 bytes, which is long enough for the blittable format to try to compress it, and it was indeed successfully compressed to a smaller size.
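The "compress only if it is long enough, and keep the result only if it is actually smaller" idea can be sketched roughly like this. This is an assumption-laden illustration, not the blittable format itself: the threshold value, the function names, and the use of `zlib` are all stand-ins I picked for the example.

```python
import zlib
from typing import Tuple

COMPRESSION_THRESHOLD = 128  # hypothetical cutoff, not the real blittable value


def maybe_compress(raw: bytes, threshold: int = COMPRESSION_THRESHOLD) -> Tuple[bool, bytes]:
    """Return (is_compressed, stored_bytes) for a string value."""
    if len(raw) < threshold:
        return False, raw            # too short to be worth trying
    packed = zlib.compress(raw)
    if len(packed) >= len(raw):
        return False, raw            # compression did not help, keep the original
    return True, packed


def read_value(is_compressed: bool, stored: bytes) -> bytes:
    """Readers must decompress before they can look at the value."""
    return zlib.decompress(stored) if is_compressed else stored


short_flag, short_stored = maybe_compress(b"short title")
long_title = ("Étude " * 30).encode("utf-8")   # made-up long, repetitive value
long_flag, long_stored = maybe_compress(long_title)
```

The important consequence for the rest of this story is in `read_value`: a compressed property cannot be read in place, so the decompressed bytes have to live in some buffer.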

So far, so good, and a pretty awesome feature that we have. But the problem was that when we index a document, we need to access its properties, and if a property is compressed, we need to decompress it, and that means that we need to decompress it somewhere. At the time, using the native temp buffer seemed perfect. After all, it was exactly the kind of thing that it was meant for.

But in this case, a bit further down the document we had another compressed field, one that was even larger, so we again asked for a temp buffer. But since this is a temp buffer, and the expectation is that its usage will be short lived, we returned the old buffer and allocated a new one. However, we still held a reference to the old buffer, and when we tried to index that title, it spat out garbage (because the memory was reused) or just crashed, depending on how strictly we had configured the memory protection mechanism.
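Here is a small Python simulation of that failure mode. It is only a sketch: `TempBuffer` and `decompress_into` are hypothetical names, and Python cannot really touch freed memory, so "the memory was reused" is modeled as every request handing out a view over the same backing storage.

```python
class TempBuffer:
    """Hypothetical temp buffer: each request reuses the same memory."""

    def __init__(self, capacity: int) -> None:
        self._memory = bytearray(capacity)

    def get(self, size: int) -> memoryview:
        if size > len(self._memory):
            raise ValueError("request larger than the temp buffer")
        # Every caller gets a view over the SAME backing bytes.
        return memoryview(self._memory)[:size]


def decompress_into(buf: memoryview, data: bytes) -> memoryview:
    """Stand-in for decompression: copy the bytes into the temp buffer."""
    buf[:len(data)] = data
    return buf[:len(data)]


temp = TempBuffer(64)

# First compressed field: we keep a reference to the decompressed title.
title = decompress_into(temp.get(16), b"first title")

# A later, larger compressed field asks for the temp buffer again.
body = decompress_into(temp.get(32), b"a much longer second field")

# The old reference now shows whatever the second request wrote.
stale = bytes(title)
print(stale)
```

In native code the same pattern reads freed or recycled memory, which is why the result is either garbage or a crash rather than a tidy wrong string.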

It was actually something that we had already figured out and fixed, but we hadn’t yet merged that branch, so it was quite annoying when we found the root cause.

We’ll also be removing the temp buffer concept entirely. Instead, we’ll have a buffer that you can check out and return, and not something that can vanish behind your back.
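A rough sketch of what a check out and return style buffer could look like, again in Python and again with made-up names (`BufferPool`, `checkout`, `give_back`); the actual design in RavenDB may well differ. The point is that a buffer stays yours until you explicitly hand it back.

```python
from typing import List


class BufferPool:
    """Hypothetical pool: a buffer belongs to the caller until it is returned."""

    def __init__(self) -> None:
        self._free: List[bytearray] = []

    def checkout(self, size: int) -> bytearray:
        # Reuse a returned buffer if one is big enough, otherwise allocate.
        for i, buf in enumerate(self._free):
            if len(buf) >= size:
                return self._free.pop(i)
        return bytearray(size)

    def give_back(self, buf: bytearray) -> None:
        # Only now may the memory be handed to someone else.
        self._free.append(buf)


pool = BufferPool()

first = b"first title"
title_buf = pool.checkout(16)
title_buf[:len(first)] = first

second = b"a much longer second field"
body_buf = pool.checkout(32)          # does not disturb title_buf
body_buf[:len(second)] = second

title_still_valid = bytes(title_buf[:len(first)])

# Explicit returns: after this point the caller must not touch the buffers.
pool.give_back(title_buf)
pool.give_back(body_buf)
```

The second checkout cannot invalidate the first, because nothing is recycled until `give_back` is called.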

But I have to say, that bug was certainly misleading in the extreme. I was sure it had to do with Unicode handling.
