Performance analysis: Simple indexes

I outlined the scenario in my previous post, and I wanted to focus on each scenario one at a time. We’ll start with the following simple map index:

In RavenDB 4.0, we have done a lot of work to make this scenario as fast as possible. In fact, this has been the focus of a lot of architectural optimizations. When running a simple index, we keep very few things in managed memory, and even those are relatively transient. Most of our data is in memory-mapped files. That means no high GC cost, because we have very few objects getting pushed to Gen1/Gen2. Mostly, this is telling Lucene “open wide, please” and shoving as much data inside as we can.

So we are seeing much better performance overall. But that isn’t to say that we don’t profile (you always do). So here are the results of profiling a big index run:

And this is funny, really funny. What you are seeing there is that about 50% of our runtime is going into those two functions.

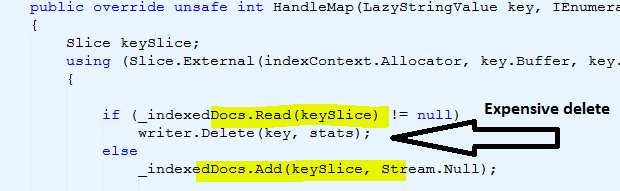

What you don’t know is why they are here. Here is the actual code for this:

The problem is that writer.Delete() can be very expensive, and in the common case of indexing new documents there is nothing to delete. So instead of calling it every time, we first check whether we have previously indexed this document, and only then do we delete it.
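The check-before-delete idea can be sketched roughly as follows. This is a minimal Python illustration, not RavenDB's actual code: `FakeWriter`, `index_document` and the `indexed_docs` set are hypothetical stand-ins for the Lucene writer and for whatever records which documents were already indexed.

```python
class FakeWriter:
    """Hypothetical stand-in for an index writer whose delete is expensive."""

    def __init__(self):
        self.delete_calls = 0
        self.entries = {}

    def delete(self, doc_id):
        # In a real index this is the costly operation we want to avoid.
        self.delete_calls += 1
        self.entries.pop(doc_id, None)

    def add(self, doc_id, fields):
        self.entries[doc_id] = fields


def index_document(writer, indexed_docs, doc_id, fields):
    """Delete the old entry only if this document was indexed before."""
    if doc_id in indexed_docs:
        writer.delete(doc_id)
    else:
        indexed_docs.add(doc_id)
    writer.add(doc_id, fields)


writer = FakeWriter()
indexed_docs = set()
index_document(writer, indexed_docs, "users/1", {"name": "a"})
index_document(writer, indexed_docs, "users/2", {"name": "b"})
index_document(writer, indexed_docs, "users/1", {"name": "c"})  # re-index
```

In this sketch only the third call pays for a delete; the two new documents go straight to `add`.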

As it turns out, those hugely costly calls are still a very big perf improvement, but we’ll probably replace them with a bloom filter that can do the same job, but with a lot less cost.
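For readers who haven't met one, here is a minimal bloom filter sketch in Python. It is only an illustration of the data structure, under assumed parameters (`size_bits`, `num_hashes`, and SHA-256 based hashing are arbitrary choices made here), and says nothing about how RavenDB would implement it.

```python
import hashlib


class BloomFilter:
    """Minimal bloom filter: no false negatives, some false positives."""

    def __init__(self, size_bits=1 << 16, num_hashes=4):
        # size_bits is assumed to be a multiple of 8.
        self.size_bits = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(size_bits // 8)

    def _positions(self, key):
        # Derive several bit positions from one digest (double hashing).
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "little")
        h2 = int.from_bytes(digest[8:16], "little") | 1
        for i in range(self.num_hashes):
            yield (h1 + i * h2) % self.size_bits

    def add(self, key):
        for pos in self._positions(key):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, key):
        return all(self.bits[pos // 8] & (1 << (pos % 8))
                   for pos in self._positions(key))


seen = BloomFilter()
seen.add("users/1")
```

A `False` answer from `might_contain` means the document was definitely never added, so the delete can be skipped. A `True` answer may be a false positive, in which case the delete is still issued and is merely wasted work.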

That said, notice that the runtime cost of those two functions together is 0.4 ms under profiler. So while I expect a bloom filter to be much better, we’ll certainly need to double check that, and again, only the profiler can tell.


![image_thumb[3] image_thumb[3]](https://ayende.com/blog/Images/Open-Live-Writer/Performance-analysis-Indexing-costs-in.0_8BB4/image_thumb[3]_thumb_1.png)

Comments

Can you do a deferred delete (deleteLater) and put the object in a delete queue? Later you can do a bulk delete. You are using more memory but might save some time. It requires that "bulk delete" is faster than n*delete. Moreover there is an issue about cache locality. Maybe it is better to reuse hot memory blocks by deleting one by one.

I guess from your description that "writer.Delete()" is more time consuming compared to "_indexedDocs.Read()".

Unless your OS isn't in english, isn't the time taking under 400k ms (i.e. 400 seconds) and not 0.4ms? Even if you add the time and divide by number of calls you still get (131,931 + 85,458)/ 269,910 = 0.805 so it's not clear where you're getting "the runtime cost of those two functions together is 0.4 ms under profiler". Did you mean each individual function is 0.4?

@philippecp you have to add the "Add" and "Read" so essentially you do (131931 + 85458) / (2 * 269910) = 0.402

Carsten, Afraid that there isn't a good way to do delete after the fact, and since we need to delete the old version before we add the new one, that wouldn't do us any good, anyway.
