Performance analysisThe cost of StackOverflow indexes

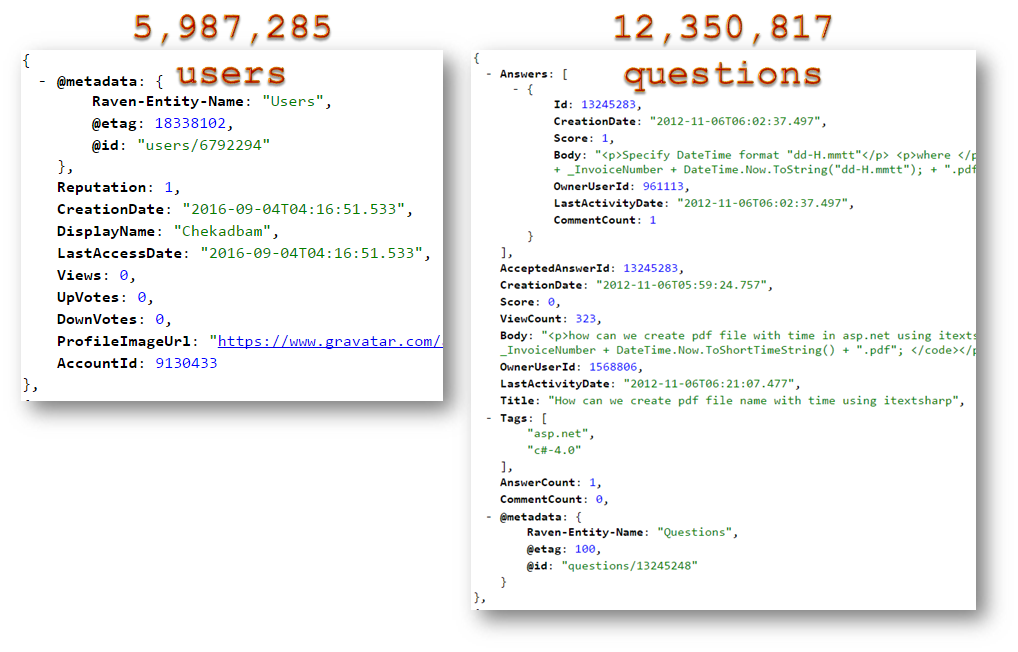

In this post, we are going to look at the relevant costs we have when testing out different indexes on the stack overflow data dump. In this case, we have the following two collections, and sample documents from each.

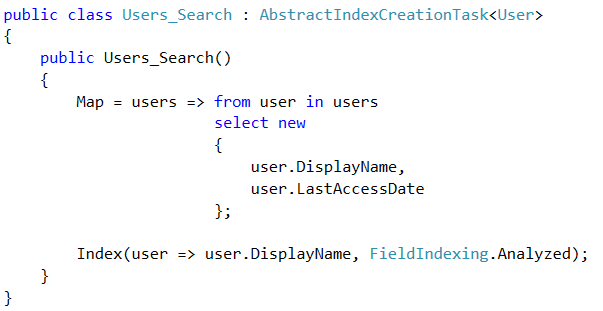

The first index we’ll look at is a simple full text index, simply mapping the users and asking to search over their data.

This index is pretty simple, both in how it works and what it would cause RavenDB to do. We do a simple scan over all the Users documents, and just index those two properties. Nothing much to see here, let us move on.

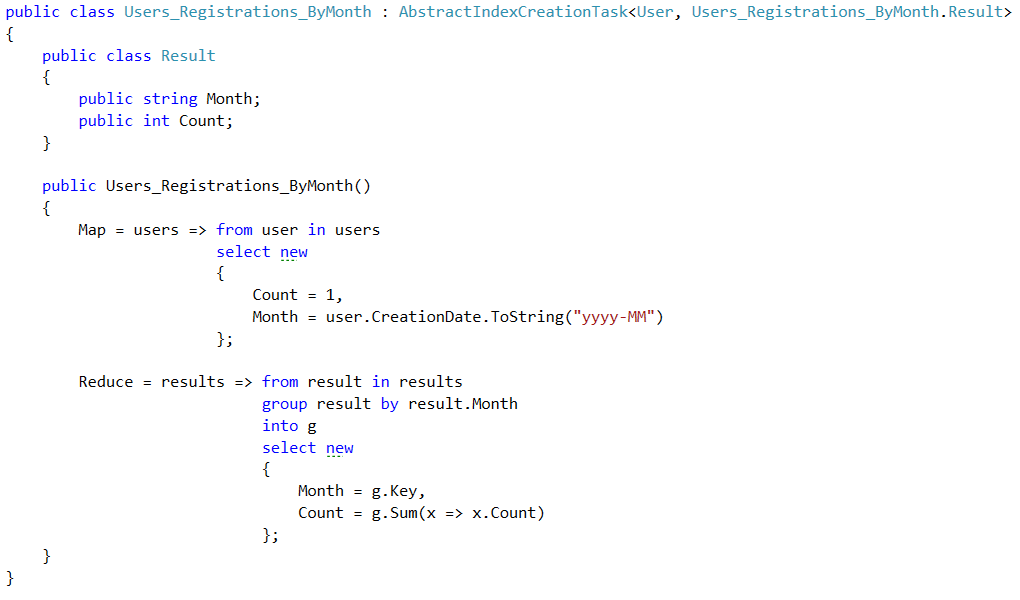

Here we have a map/reduce index, over users, to see how many signups StackOverflow had per month.

This gets more interesting as a performance analysis goes. While a map only index just needs to spill everything to Lucene, and let it do its work (more on that later), a map/reduce index has to keep track of quite a lot of internal state. And as it runs out, the costs associated with the index vary according to what it does, and the access pattern it has.

In this case, we’re going to be scanning the users in roughly the order they were inserted into the database, and that would match pretty closely to the aggregation by the registration month. That means that this is likely to be a pretty cheap index, since we scan the data and it is mostly going to be naturally grouped along the same lines that we are going to group it. It means that the working set we’ll need for this index is going to be fairly small. Once we have passed by a particular month, we won’t be accessing it again.

The number of reduce keys (the distinct number of values that we group by) is also going to be fairly small, in the order of 100 keys or so.

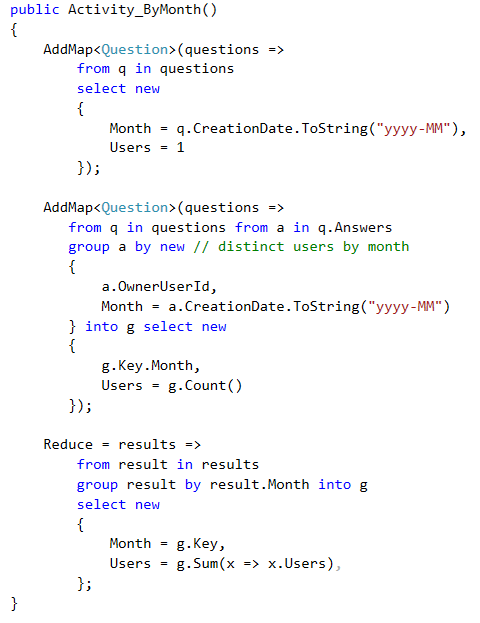

Now, let us move to a more complex index:

This one is aggregating information over questions twice, once for the questions, and once for the answers. In terms of sheer amount of data it works on, it is pretty big. However, in terms of actual work that is done, we still somewhat benefit so significantly from the ordering of the data. This is the case since while questions and answers are usually temporally close to one another, that isn’t always the case. That said, for the vast majority of cases, we’re likely to see quite a bit of locality, which will help the index. There is also the fact that we still have very few reduce key, only about 100 or so, which also helps.

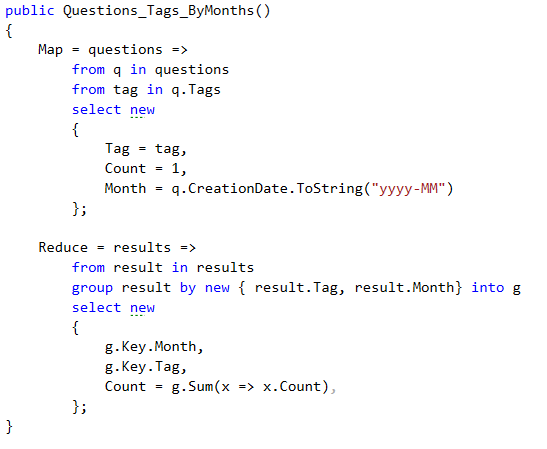

And now we are talking about putting the db through its paces. The problem here is that we are scanning all the indexes, and outputting an entry for each tag. There are 46,564 tags, and in total this index outputs 37,122,139 entries. However, there are just 3,364 tags that have more than a thousand questions, and they are responsible for 32,831,961 of the questions, so while there is a significant long tail, it is pretty small, in terms of actual number of questions.

Note that we are still scanning in a manner consistent with the index, so we’ll typically go over a set of questions that all belong to the same month. And each month will have roughly 3 million questions (obviously less the further back in time we go). So complex, but not too badly so. The number of reduce keys will be in the hundreds of thousands, but the number of entries per reduce key will be relatively small.

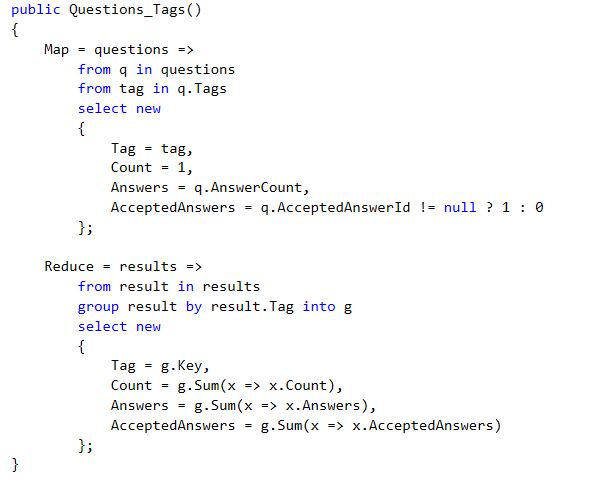

This one, however, is much more problematic:

On the face of it, it looks like it would be a simpler index than the previous two, but it lacks a very important property, it isn’t actually limited by anything related to the scanning order we have. What this means is that whenever we will scan the data for this index, we are going to find a lot of tags, so each batch will have to touch a lot of reduce keys, and they are going to be big reduce keys (with lots of entries), so that means that we’ll need to re-reduce large number of large reduce keys often.

Effectively, this index is forcing us to scatter values all over the place, then aggregate all the information about each of those values. It is likely to be one of the worst scenarios for us to work with. Now that we have the groundwork laid out, we can talk about how we are actually going to approach performance optimizations here.

More posts in "Performance analysis" series:

- (04 Oct 2016) Simple indexes

- (03 Oct 2016) The cost of StackOverflow indexes

Comments

this is very interesting, this gives a very good baseline to compare with other dbs (mongo,elk, and even other relational dbs such as mssql, mysql and postgres). this actually a good benchmark to compare with other raven versions (3,3.5,4) in terms of load time, index time and query time. can't wait for the results :)

It would be interesting to know actual times, db size. I've conducted similar benchmark using linqdb (https://github.com/ren85/linqdb_benchmark), I wonder how it compares.

Ren, That is very interesting, but note that you are comparing something that is very different. LinqDB creates indexes for all properties, and then you are querying along those indexes to figure out the answers. What RavenDB is doing is computing map/reduce values and building the final answers so it can both:

The goals and means are very different.

Those are still pretty impressive numbers.

Comment preview