Long running async and memory fragmentation

We have been working on performance a lot lately, but performance isn’t just a matter of how fast you can do something; it is also a matter of how many resources you use while doing it. One of the things we noticed was that we were using more memory than we would like, even after we had freed the memory we were using. Digging into the memory usage, we found that the problem was fragmentation inside the managed heap.

More to the point, this isn’t large object heap fragmentation, but fragmentation in the standard heap. The underlying reason is that we are issuing a lot of outstanding async I/O requests, especially to serve things like the Changes() API, to wait for incoming HTTP requests, etc.

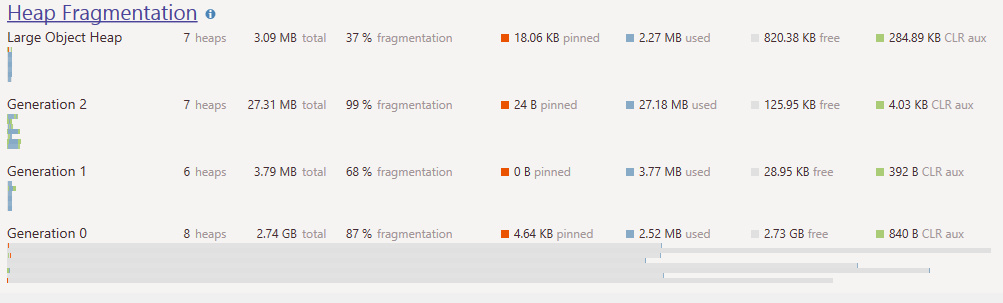

Here is what this looks like inside dotProfiler.

As you can see, we are actually using almost no memory, but heap fragmentation is killing us in terms of memory usage.

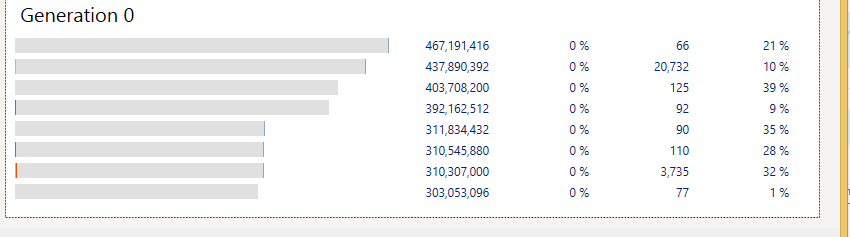

Looking deeper, we see:

We suspect that the issue is that we have pinned instances that we sent to async I/O, and that matches what we have found elsewhere about this issue, but we aren’t really sure how to deal with it.

Ideas are more than welcome.

Comments

Do you control the network reads or is that hidden in an HTTP library? If you control the reads you can issue 1 byte reads from a big pre-allocated byte array.

Tobi, This is all done by OWIN, I'm afraid

Can you control the OWIN buffer sizes? You could increase them above the LOH limit to at least force the fragmentation out of the normal heaps... In any case it's an OWIN quality of implementation issue that they might be interested in fixing. They can, the techniques to do that are well-known.

Tobi, That is something that we are currently looking at, yes. The major problem is that we can't really identify which pieces are actually causing the fragmentation, or where the code responsible is being called from.

Do you know in which part of the OWIN stack this is happening? I assume you are using Microsoft.Owin and its ilk. Which host are you using? SystemWeb?

Providing a memory dump would help, so it can be checked in Windbg. To find out how you got into this state, ETW allocation profiling with PerfView on a Win 8.1 machine would tell you what was going on. With PerfView you usually have a history of 1-2 minutes, so you should capture the data when you suspect that you are hitting this situation.

Ryan, I suspect it is happening on Web Sockets and on the async operations that wait for a connection. We are using MS.Owin and System.Web, yes.

Alois, We have a dump (and a nice way to repro). But I don't have a good way to go from an instance to knowing whether it is pinned or who pinned it.

Yes, it really looks like your pinned objects fill gen0. To view the pinned objects you can use either Windbg or PerfView. I would suggest PerfView, as it shows you which objects are pinned and their likely origins.

In .NET you cannot do any IO (sync or async) that writes to or reads from a managed memory buffer without pinning; otherwise the memory will get thrashed as the GC moves objects around.

Even memory copy operations between managed and native memory will pin the managed buffer for the duration. Pinned handles are created even when the managed buffer is in the LOH.

The fact that the GC cannot compact past pinned handles looks like a severe limitation for IO-heavy applications.

If you want to find where pinned handles are created, you only need to look for IO.

The only way to limit the fragmentation is with strict control over the managed memory buffers used for IO, either by using buffer pools or by allocating them in the LOH (as long as LOH compaction is not triggered manually, which is possible in .NET 4.5.1+).
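To make the pinning described above concrete, here is a minimal sketch of what a pin looks like from managed code. `PinningSketch` and its method names are made up for illustration; `GCHandle`, `GCHandleType.Pinned`, and `GC.GetGeneration` are the real framework APIs. It approximates what the runtime does around a native I/O call and is not the code OWIN or HttpListener actually runs.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical helper, for illustration only.
public static class PinningSketch
{
    // Pins the buffer for the duration of the callback. While the handle is
    // allocated, the GC cannot move the array, so a compacting collection
    // has to work around it.
    public static T WithPinned<T>(byte[] buffer, Func<IntPtr, T> body)
    {
        GCHandle handle = GCHandle.Alloc(buffer, GCHandleType.Pinned);
        try
        {
            // The address stays valid only until Free() is called.
            return body(handle.AddrOfPinnedObject());
        }
        finally
        {
            handle.Free();
        }
    }

    // Arrays of 85,000 bytes or more are allocated on the large object heap,
    // and the GC reports LOH objects as belonging to the oldest generation.
    // Note that a small object that survived enough collections reports the
    // same value, so this is only meaningful for a freshly allocated array.
    public static bool IsReportedAsOldestGeneration(byte[] buffer)
    {
        return GC.GetGeneration(buffer) == GC.MaxGeneration;
    }
}
```

A short-lived pin like this is cheap. The trouble in the post comes from pins that stay in place for as long as an async operation is outstanding, on buffers scattered through the regular heap.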

First, which "async" I/O operations are you using? There are two kinds.

The first kind is the "BeginSend", "BeginReceive", etc. variety hanging off of System.Net.Sockets.Socket. This model causes a lot of allocations under the hood, since it allocates an IAsyncResult, a wait handle, an Overlapped object, a delegate for the callback, etc. per call.

The second model is the "SendAsync", "ReceiveAsync", etc. variety hanging off of System.Net.Sockets.Socket. This model mitigates some of the issues with the previous model by taking a SocketAsyncEventArgs object in each call. This is optimized for high-throughput scenarios in that you allocate a big pool of SocketAsyncEventArgs objects up front, use them for a call, "clear" out the state set on them, and then return them to the pool when you're done, so they can be reused. There are far fewer allocations in this model, which would certainly help with fragmentation.

Both models end up using System.Threading.Overlapped objects, and there's no way around this. It is the managed equivalent of the Win32 OVERLAPPED structure that is used in all async calls, so it's required. In both cases, the Overlapped object (well, really, its internal OverlappedData object) needs to be pinned, in addition to the buffer that you're reading into/writing from obviously. That said, if you go look at how this is all implemented, it becomes a bit more clear how you can use them more optimally.

Again, the "Begin/End" approach allocates these per call, and also pins/unpins them per call. The "Async" approach allocates the overlapped and pins the buffer ONCE and then doesn't unpin the buffer until you CHANGE the buffer set on the SocketAsyncEventArgs object. So, if you change the buffer used by the SocketAsyncEventArgs for each call, you'll end up re-allocating the Overlapped objects. Conversely, if you keep the same buffer, you'll avoid the Overlapped allocations, but you'll have the buffers pinned longer.
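A minimal sketch of the pooling approach described above, assuming you control the socket code (which is not the case when OWIN owns it). `SocketAsyncEventArgsPool` is a hypothetical helper, not a framework type; `SetBuffer(byte[], int, int)`, `UserToken`, `AcceptSocket`, and `RemoteEndPoint` are real members of `SocketAsyncEventArgs`. Each pooled instance keeps the same buffer slice for its whole lifetime, so the buffer is never swapped between calls.

```csharp
using System;
using System.Collections.Concurrent;
using System.Net.Sockets;

// Hypothetical helper, for illustration only.
public sealed class SocketAsyncEventArgsPool
{
    private readonly ConcurrentStack<SocketAsyncEventArgs> _free =
        new ConcurrentStack<SocketAsyncEventArgs>();

    public SocketAsyncEventArgsPool(int count, int bufferSize)
    {
        if (count <= 0) throw new ArgumentOutOfRangeException("count");
        if (bufferSize <= 0) throw new ArgumentOutOfRangeException("bufferSize");

        // One backing array shared by every pooled instance. It lands on the
        // LOH when count * bufferSize is 85,000 bytes or more.
        byte[] slab = new byte[checked(count * bufferSize)];

        for (int i = 0; i < count; i++)
        {
            var args = new SocketAsyncEventArgs();
            // Each instance gets its own non-overlapping slice of the slab.
            args.SetBuffer(slab, i * bufferSize, bufferSize);
            _free.Push(args);
        }
    }

    // Returns false when the pool is exhausted; the caller decides whether
    // to wait, reject the connection, or allocate outside the pool.
    public bool TryRent(out SocketAsyncEventArgs args)
    {
        return _free.TryPop(out args);
    }

    public void Return(SocketAsyncEventArgs args)
    {
        if (args == null) throw new ArgumentNullException("args");

        // Clear per-call state but deliberately leave the buffer alone.
        args.UserToken = null;
        args.AcceptSocket = null;
        args.RemoteEndPoint = null;
        _free.Push(args);
    }
}
```

The trade-off is the one the comment names: the slab stays pinned for as long as operations are outstanding, which is why it helps to make it one large array rather than many small ones.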

One final way to address that is by doing what a commenter above suggested: allocate your buffers on the LOH. Usually you want to avoid this, but since the LOH isn't compacted (unless you ask for it, as of .NET 4.5.1), it's FAR less painful to pin things there than it is on the other heaps, because pinning doesn't get in the way during GC when no compaction takes place. In addition, if you allocate LARGE buffers and then use smaller "chunks" out of each buffer, then fragmentation shouldn't be an issue at all, since you'll just have one large, immovable buffer in the LOH. SocketAsyncEventArgs supports this too: rather than calling SetBuffer, which takes a byte[], allocate a LOH-sized byte[], split it up into ArraySegment<byte> chunks, and then set the BufferList property on the SocketAsyncEventArgs object to a single-item ArraySegment<byte>[] array.
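The chunking idea above could look roughly like this. `SlabBufferPool` and `CreateArgs` are hypothetical names for this sketch and are not the API of OWIN, EventStore's BufferManager, or .NET itself; `ArraySegment<byte>` and `SocketAsyncEventArgs.BufferList` are the real framework members the comment refers to.

```csharp
using System;
using System.Collections.Concurrent;
using System.Net.Sockets;

// Hypothetical helper, for illustration only.
public sealed class SlabBufferPool
{
    private readonly byte[] _slab;
    private readonly int _chunkSize;
    private readonly ConcurrentStack<ArraySegment<byte>> _free =
        new ConcurrentStack<ArraySegment<byte>>();

    public SlabBufferPool(int chunkSize, int chunkCount)
    {
        if (chunkSize <= 0) throw new ArgumentOutOfRangeException("chunkSize");
        if (chunkCount <= 0) throw new ArgumentOutOfRangeException("chunkCount");

        _chunkSize = chunkSize;
        // A single array of 85,000 bytes or more is allocated on the LOH.
        _slab = new byte[checked(chunkSize * chunkCount)];

        // Push in reverse so the chunk at offset 0 is handed out first.
        for (int i = chunkCount - 1; i >= 0; i--)
        {
            _free.Push(new ArraySegment<byte>(_slab, i * chunkSize, chunkSize));
        }
    }

    // Returns false when every chunk is in use.
    public bool TryRent(out ArraySegment<byte> chunk)
    {
        return _free.TryPop(out chunk);
    }

    public void Return(ArraySegment<byte> chunk)
    {
        // Reject buffers that were not carved out of this slab.
        if (!ReferenceEquals(chunk.Array, _slab) || chunk.Count != _chunkSize)
        {
            throw new ArgumentException("Chunk does not belong to this pool.", "chunk");
        }
        _free.Push(chunk);
    }

    // Attaches a rented chunk through BufferList, as the comment suggests.
    public static SocketAsyncEventArgs CreateArgs(ArraySegment<byte> chunk)
    {
        var args = new SocketAsyncEventArgs();
        args.BufferList = new[] { chunk };
        return args;
    }
}
```

With, say, `new SlabBufferPool(1024, 128)` the backing array is 128 KB, so every chunk handed out is a slice of one large array. This sketch does no double-return detection, which a production pool would want.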

Someone, We are using whatever it is that OWIN is using under the covers. At a guess, I would say that this is the BeginSend variety, since that is much easier to map to Tasks than the other mode. Thanks, we'll investigate that.

Yes, this is solvable; you are hitting the perfect GC storm. WebSockets are medium-lifetime objects, generally ending up in Gen2, and they have internal buffers which are GC-pinned for the entire lifetime of the socket. If they are used in high volume with any churn (disconnects/connects), then 50%+ of the app's time will be spent in GC, especially if using OWIN, which is raw on HttpListener.

To get around this you need to allocate the WebSocket internal buffers up front and object-pool them. You can initialise the OWIN WebSockets using this added method: https://katanaproject.codeplex.com/workitem/182

If you are using .NET < 4.5.2 you will need to allocate lots of little buffers due to this bug https://connect.microsoft.com/VisualStudio/feedback/details/812310/bug-websockets-can-only-use-0-offset-internal-buffer

If you are using 4.5.2+, this bug has been fixed and you can allocate one large buffer (> 85K) up front so that it is immediately placed on the LOH, where the GC doesn't have to keep checking it, and then hand out pooled offsets and lengths into it.

You should find that the performance you can get out of WebSockets after doing this is quite impressive, and that they stop affecting the GC.

To find out who pinned your objects you can use PerfView, which has (surprise) an analysis view for "Pinning at GC Time Stacks". You need to enable the Kernel Base, .NET, and .NET Sample Alloc checkboxes, and in the Additional Provider box you need to add clrPrivate.

Only with the clrPrivate provider do you get a call stack for every object at pinning time.

You can spend days guessing, or you can spend minutes measuring and one hour checking out the call stacks.

@Ayende, ClrMD is an awesome library if you really want to drill down in your dump file or live process to find the root cause of this fragmentation. You can enumerate the pinned objects and find what is referencing them easily.

https://github.com/Microsoft/dotnetsamples/tree/master/Microsoft.Diagnostics.Runtime/CLRMD

Here is an extension over ClrMD I made to use it in LINQPad: https://github.com/JeffCyr/ClrMD.Extensions

Jeff, I know about ClrMD, but your library looks awesome, much easier than working directly with it.

It's your WebSocket internal buffers which are pinned, as they use the Winsock API. The trick is to either allocate them up front at the start of the heap, do some manual GCs to get them into Gen2, or put them in the LOH and use ArraySegment as offsets into the large buffer.

When a WebSocket closes, return its buffer to your object pool and reuse it.

EventStore uses a BufferManager that manages larger array segments to prevent fragmentation.

https://github.com/EventStore/EventStore/blob/dev/src/EventStore.BufferManagement/BufferManager.cs

Thomas, Yes, but the problem is just getting the right buffer to the async calls.
