Troubleshooting, when F5 debugging can’t help you

You might have noticed that we have been doing a lot of work on the operational side of things, to make sure that we give you as good a story as possible with regard to the care & feeding of RavenDB. This post isn’t about that. This post is about your applications and systems, and how you are going to react when !@)(*#!@(* happens.

In particular, the question is what do you do when this happens?

This situation can crop up in many disguises. For example, you might be seeing high memory usage in production, or growing CPU usage over time, or request times going up, or any of a hundred and one different production issues that make for a hell of a night (somehow, they almost always happen at nighttime).

Here is how people usually think about it.

The first thing to do is to understand what is going on. About the hardest thing to handle in these situations is when we have an issue (high memory, high CPU, etc.) and no idea why. Usually all the effort is spent just figuring out what and why. The problem with this troubleshooting process is that it is very easy to jump to conclusions and form an utterly wrong hypothesis. Then you have to go through the rest of the steps before you realize it isn’t right.

So the first thing that we need to do is gather information. And this post is primarily about the various ways that you can do that. In RavenDB, we have actually spent a lot of time exposing information to the outside world, so we’ll have an easier time figuring out what is going on. But I’m going to assume that you don’t have that.

The end-all tool for this kind of error is WinDBG. This is the low-level tool that gives you access to pretty much anything you could want. It is also very archaic and not very friendly at all. The good thing about it is that you can load a dump into it. A dump is a capture of the process state at a particular point in time. It gives you the ability to see the entire memory contents and all the threads. It is an essential tool, but also the last one I want to use, because it is pretty hard to use. Dump files can be very big; dumps of multiple GB are very common. That is because they contain the full memory of the process. There are also mini dumps, which are easier to work with, but don’t contain the full memory, so you can inspect the threads, but not the data.

The .NET Memory Profiler is another great tool for figuring things out. It isn’t usually so good for production analysis, because it uses the Profiler API to figure things out, but it has a wonderful feature of loading dump files (ironically, it can’t handle very large dump files because of memory issues) and gives you a much nicer view of what is going on there.


For high CPU situations, I like to know what is actually going on, and looking at the stack traces is a great way to do that. WinDBG can help here (take a few mini dumps a few seconds apart), but again, that isn’t so nice to use.

Stack Dump is a tool that takes away a lot of the pain of having to deal with that, because it just outputs all the threads’ information. We have used it successfully in the past to figure out what is going on.

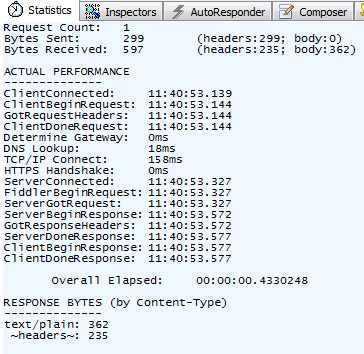
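The idea behind taking several stack snapshots a few seconds apart is that code which is burning CPU keeps showing up at the top of the stack, while a single snapshot can easily mislead you. WinDBG and Stack Dump are the tools for a .NET process; purely as a language-neutral illustration of the same sampling technique, here is a minimal Python sketch built on the CPython-specific `sys._current_frames()`. The `sample_stacks` function and its parameters are made up for this example.

```python
import collections
import sys
import threading
import time
import traceback


def sample_stacks(samples=5, interval=0.5, depth=3):
    """Snapshot every thread's stack `samples` times, `interval` seconds apart,
    and count how often each (thread name, innermost frames) pair shows up."""
    counts = collections.Counter()
    me = threading.get_ident()
    for i in range(samples):
        names = {t.ident: t.name for t in threading.enumerate()}
        for ident, frame in sys._current_frames().items():
            if ident == me:
                continue  # don't sample the thread doing the sampling
            stack = traceback.extract_stack(frame)
            # Keep only the innermost `depth` function names (root-first order).
            top = tuple(fs.name for fs in stack[-depth:])
            counts[(names.get(ident, str(ident)), top)] += 1
        if i < samples - 1:
            time.sleep(interval)
    return counts


if __name__ == "__main__":
    def busy():
        end = time.time() + 2
        while time.time() < end:
            sum(range(1000))

    worker = threading.Thread(target=busy, name="busy-worker")
    worker.start()
    for (name, frames), hits in sample_stacks(samples=4, interval=0.2).most_common(3):
        print(hits, name, " > ".join(frames))
    worker.join()
```

The stacks that dominate the counts are the ones worth reading first.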

For general performance issues (“requests are slow”), we need to figure out where the slowness actually is. We have had reports that run the gamut from “things are slow, client machine is loaded” to “things are slow, the network QoS settings throttle us”. I like to start by using Fiddler to figure those things out. In particular, the statistics window is very helpful.

The obvious things to check are bytes sent & bytes received. We have had a few cases where a customer was actually sending 100s of MB in either or both directions, and was surprised it took some time. If those values are fine, you want to look at the actual performance breakdown. In particular, look at things like TCP/IP connect time, the time from the client sending the request to the server starting to receive it, etc.
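Fiddler shows these numbers for you. If you want a rough feel for the same split outside of Fiddler (connecting, waiting for the first byte, reading the rest), it can be measured by hand. This is a minimal Python sketch, assuming plain HTTP (no TLS, no proxy) and a server that closes the connection after responding; `time_http_get` is a hypothetical helper, not part of any tool mentioned here.

```python
import socket
import time


def time_http_get(host, port, path="/"):
    """Hypothetical helper: time one plain-HTTP GET and split the elapsed time
    roughly the way a proxy's statistics view does."""
    request = (f"GET {path} HTTP/1.1\r\n"
               f"Host: {host}\r\n"
               "Connection: close\r\n\r\n").encode("ascii")
    start = time.perf_counter()
    # Includes name resolution and the TCP handshake.
    sock = socket.create_connection((host, port), timeout=10)
    connected = time.perf_counter()
    first_byte = None
    received = 0
    with sock:
        sock.sendall(request)
        while True:
            chunk = sock.recv(65536)
            if not chunk:          # server closed the connection: response done
                break
            if first_byte is None:
                first_byte = time.perf_counter()
            received += len(chunk)
    done = time.perf_counter()
    if first_byte is None:         # empty response
        first_byte = done
    return {
        "connect_ms": (connected - start) * 1000,
        # Sending the request plus server processing until the first byte arrives.
        "first_byte_ms": (first_byte - connected) * 1000,
        "total_ms": (done - start) * 1000,
        "bytes_sent": len(request),
        "bytes_received": received,   # status line + headers + body
    }
```

Calling something like `time_http_get("localhost", 8080, "/some/path")` (host, port and path are placeholders) returns the breakdown. A large `first_byte_ms` with a small `connect_ms` points at the server or whatever sits in front of it; a large `connect_ms` points at the network or name resolution; large byte counts point back at the "sending 100s of MB" case.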

If you find the problem is actually at the network layer, you might not be able to handle it immediately. You might need to go a level or two lower and look at the actual TCP traffic. This is where something like Wireshark comes into play; it is useful for figuring out whether you have specific errors at that level (for example, a bad connection that causes a lot of packet loss will hurt performance, but things will still work).

Other tools that are very important include Resource Monitor, Process Explorer and Process Monitor. Those give you a lot of information about what your application is actually doing.

Once you have all of that information, you can form a hypothesis and try to test it.

If you own the application in question, the best way to improve your chances of figuring out what is going on is to add logging. Lots & lots of logging. In production, having the logs to support what is going on is crucial. I usually have several levels of logging. First, there is the traffic in and out of my system. Next, there are the actual system operations, especially anything that happens in the background. Finally, there are the debug/trace endpoints that expose internal state and allow you to tweak various things at runtime.
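As an illustration of keeping those layers separate, here is a minimal Python sketch using the standard `logging` module: one named logger per layer, a wrapper that records traffic in and out, an example background operation, and a small function that a debug endpoint could call to change verbosity at runtime. The `myapp.*` logger names and all the helper functions are hypothetical.

```python
import logging

# Hypothetical logger names, one per layer of logging.
traffic_log = logging.getLogger("myapp.traffic")       # requests in and out
ops_log = logging.getLogger("myapp.operations")        # background work


def handle_request(method, path, handler):
    """Run `handler` (which returns the response body as bytes) and log the
    request and response at the traffic layer."""
    traffic_log.info("-> %s %s", method, path)
    try:
        body = handler()
    except Exception:
        traffic_log.exception("!! %s %s failed", method, path)
        raise
    traffic_log.info("<- %s %s (%d bytes)", method, path, len(body))
    return body


def nightly_cleanup(items):
    """Example background operation, logged at the operations layer."""
    ops_log.info("cleanup started, %d items", len(items))
    removed = [item for item in items if item.startswith("tmp")]
    ops_log.debug("removing %r", removed)
    ops_log.info("cleanup finished, removed %d", len(removed))
    return len(removed)


def set_layer_level(layer, level_name):
    """What a debug/trace endpoint might call to raise or lower the verbosity
    of one layer at runtime, without restarting the process."""
    logging.getLogger(f"myapp.{layer}").setLevel(level_name.upper())


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO,
                        format="%(asctime)s %(name)s %(levelname)s %(message)s")
    handle_request("GET", "/docs/1", lambda: b'{"name": "example"}')
    nightly_cleanup(["tmp1", "keep", "tmp2"])
    set_layer_level("operations", "debug")   # the DEBUG line now shows up too
    nightly_cleanup(["tmp3"])
```

Because each layer has its own logger, you can turn the background operations up to DEBUG during an incident while leaving the (usually much noisier) traffic log alone.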

Having a good working knowledge of how to properly utilize the above-mentioned tools is very important, and should be considered much more useful than learning a new API or a language feature.

Comments

You have listed many useful tools, but I think they are much more useful to you than your customers - users of RavenDB. I mean, having a stack dump will not tell me much if I don't know application internals or I'm not a developer. And besides, when things go bad and the application is already dead it's too late for testing hypotheses - this is not a scientific method (unless you're a biologist dissecting dead frogs). The key, imho, to making informed decisions and developing more "scientific" method of performance management, is having a high quality archive of key application and system performance metrics that will allow you to see the changes over time and perform analyses of what's the impact of various events on application behavior. You don't need the stack dump to discover that you'll run out of memory in 2 hours or that your application performance drops when database backup is in progress. So I'd start my tool list with monitoring and time-series analysis tools such as Cacti, Nagios, RRDTool, etc.
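To make the "run out of memory in 2 hours" example concrete: given archived memory samples, a least-squares line gives a rough estimate of the time until a limit is hit. This is a minimal Python sketch, assuming growth is roughly linear over the sampled window (a steady leak often is; a cache that levels off is not); `hours_until_limit` is a hypothetical helper, not a feature of the monitoring tools named above.

```python
def hours_until_limit(samples, limit_mb):
    """Hypothetical helper. `samples` is a list of (hours, memory_mb) pairs in
    time order. Fits a least-squares line and returns how many hours after the
    last sample the trend reaches `limit_mb`, or None if memory isn't growing."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_m = sum(m for _, m in samples) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in samples)
    if var_t == 0:
        raise ValueError("samples must cover a time span")
    slope = sum((t - mean_t) * (m - mean_m) for t, m in samples) / var_t  # MB/hour
    if slope <= 0:
        return None
    intercept = mean_m - slope * mean_t
    crossing = (limit_mb - intercept) / slope   # hour at which the line hits the limit
    return max(0.0, crossing - samples[-1][0])


# Memory grows by 500MB/hour; with a 3500MB limit the trend hits it
# 2 hours after the last sample.
print(hours_until_limit([(0, 1000), (1, 1500), (2, 2000), (3, 2500)], 3500))  # 2.0
```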

Rafal, Note that this isn't actually a post about RavenDB. And this isn't about how you find that you have a problem. It is what to do when you find out that you have a problem and how to resolve it.

The statement "Usually all the effort is spent just figuring out what and why" is quite valid and the first thing to do is figure out what the problem is. Unfortunately we sometimes fall into the trap of frantically searching for a solution to the problem, before finding the cause of the problem.

Ok, this applies to all applications as well; RavenDB is not an exception here. My experience is a little different from yours: usually crashes happen in the middle of the day, during the busiest working hours (but I'm doing business software), and most attempts to find the problem by debugging the production systems did not work. Mostly because the priority in such a situation wasn't finding the cause of the problem; the priority was to bring the application back to life no matter how. First the administrators are notified about the problem, and they can do many things to handle the situation before calling developers; for example, they can restart the web server. And then it's too late for any memory or thread stack dumps. Basically, you're left with nothing if you don't have another data source to look at. This is why I'm not enthusiastic about debugging in production.

A few years ago I spent quite some time getting to know WinDBG, and I've used it extensively for troubleshooting all sorts of problems. I can honestly say that it has really opened my eyes to the internals of the CLR. Whether it is your code or a 3rd party's, I've quite easily found bottlenecks in systems and been able to work around them.

To be honest, you won't pick up WinDBG in an hour. But it is extremely valuable and I have been placed on a few clients just to troubleshoot issues with a crash dump and WinDBG alone - with no context :-/

Tess Ferrandez did an excellent set of tutorials for beginners to get started with WinDBG (http://blogs.msdn.com/b/tess/).

@Rafal - I come across this concern a lot.

A tool like DebugDiag (http://www.microsoft.com/en-gb/download/details.aspx?id=42933) can be set up to monitor a process and capture a dump when certain conditions occur, including when it crashes. You can also snapshot it at certain intervals; for example, if memory exceeds 5GB, take a dump, and then keep taking dumps for every additional 1GB. Once you have much more confidence with something like WinDBG, these setup criteria can really help you dive straight in.

DebugDiag can also run some pre-defined analysis for you. Tracing DataTables (which are just a huge structure of pointers) by hand is extremely tedious. DebugDiag knows how to navigate the pointer structure for you. All scripts are basically hitting WinDBG's core DLLs for you.
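The dump-trigger rule described above (first dump past 5GB, then another for every additional 1GB) boils down to a small piece of state. This is a hypothetical Python sketch of that decision logic only; it is not DebugDiag's configuration format or API, and `DumpTrigger` is a made-up name.

```python
GB = 1024 ** 3


class DumpTrigger:
    """Hypothetical helper: say when to capture a dump, first when memory
    reaches `first` bytes, then each time it crosses another `step` bytes."""

    def __init__(self, first=5 * GB, step=1 * GB):
        self.next_threshold = first
        self.step = step

    def should_dump(self, memory_bytes):
        if memory_bytes < self.next_threshold:
            return False
        # Move the threshold past the current usage in whole steps, so a sudden
        # jump across several thresholds produces a single dump, not a burst.
        while self.next_threshold <= memory_bytes:
            self.next_threshold += self.step
        return True


if __name__ == "__main__":
    trigger = DumpTrigger()
    for gb in [4, 5, 5.5, 6, 6.2, 8.5, 9]:
        print(gb, trigger.should_dump(int(gb * GB)))
```

With the readings above, dumps would be taken at 5, 6, 8.5 and 9GB; the jump from 6.2 to 8.5GB crosses two thresholds but yields one dump.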

Apologies,

Tess Ferrandez's blog post specific to WinDBG is here (http://blogs.msdn.com/b/tess/archive/2008/02/04/net-debugging-demos-information-and-setup-instructions.aspx).

She moves around a bit at Microsoft, but considering these tutorials are from 2008, they are still valid ... for the most part .. :)

Our main pain has come from intermittent issues, where the client insists there is a problem but cannot reproduce the exact scenario. While I'm here, does anyone have something better than Splunk for IIS web logs? We have found it to be a resource hog.

@peter.

Have you tried [Logstash with ES and Kibana](http://www.elasticsearch.org/overview/)?

Before trying to find the what and why, I prefer to find a way to reproduce the problem quickly and in a deterministic way. There's nothing worse than trying to debug a problem which happens intermittently.

Two MS perf tools I commonly use which provide better "over time" analysis (rather than a dump file's "point in time" perspective) are:

PerfView (http://www.microsoft.com/en-au/download/details.aspx?id=28567) Tutorials (http://channel9.msdn.com/Series/PerfView-Tutorial)

And

Windows Performance Toolkit - the successor of XPerf (http://www.microsoft.com/en-eg/download/details.aspx?id=39982) Tutorials ep 40-50 (http://channel9.msdn.com/Shows/Defrag-Tools)

Both can generate lots of disk IO (as in they can log hundreds of MB of data), but are usable in production environments with no installation required. And the graphing / pivoting / filtering functionality of the WPT is nothing short of amazing.

Windows Performance Toolkit is the best tool for the job here - specifically, Windows Performance Recorder and Windows Performance Analyzer. WPR can be run in production safely (only adds about 5% overhead) for short bursts, and then WPA used to analyze the results offline. If you want to see where you are spending CPU time, you can generate flamegraphs using Bruce Dawson's port of flamegraph http://randomascii.wordpress.com/2013/03/26/summarizing-xperf-cpu-usage-with-flame-graphs/
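For reference, flame graph tools in the style of Brendan Gregg's `flamegraph.pl` consume "collapsed" stacks: one line per unique stack, frames joined with semicolons from root to leaf, followed by a space and the number of samples. Here is a minimal Python sketch of producing that format from already-sampled stacks; `fold_stacks` is a hypothetical helper, and extracting the stacks from a WPR trace is a separate step not shown here.

```python
from collections import Counter


def fold_stacks(samples):
    """Hypothetical helper. `samples` is an iterable of stacks, each a list of
    function names ordered root-first. Returns 'root;child;leaf count' lines,
    one per unique stack, sorted for stable output."""
    counts = Counter(";".join(stack) for stack in samples)
    return [f"{stack} {n}" for stack, n in sorted(counts.items())]


if __name__ == "__main__":
    sampled = [
        ["main", "handle_request", "parse_json"],
        ["main", "handle_request", "parse_json"],
        ["main", "gc"],
    ]
    print("\n".join(fold_stacks(sampled)))
```

Written to a file, those lines can be passed to `flamegraph.pl`, which renders an SVG where the width of each box is proportional to its sample count.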

PerfView is the most underestimated tool. It can help you troubleshoot high GC issues (90% of time spent in GC?) by looking at the allocation call stacks. Besides this, it can also load memory dumps, which makes it an invaluable tool for troubleshooting high managed memory issues. For remote diagnostics, it is valuable to be able to take a memory dump on the customer machine and let PerfView process it into a .gcDump file, which contains the managed object graph. These files are tiny and can be sent much faster over the network for deeper analysis at headquarters.
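PerfView is a .NET/ETW tool, so there is no direct equivalent to show here. As a loose analogue of grouping memory by the call stack that allocated it, Python's standard `tracemalloc` module can group the memory still alive at snapshot time by traceback. This is a minimal sketch; `top_allocation_sites` is a hypothetical helper, and unlike PerfView's allocation view it only sees allocations that have not been freed yet.

```python
import tracemalloc


def top_allocation_sites(func, limit=3, frames=10):
    """Hypothetical helper: run `func` with tracemalloc enabled and return its
    result plus the `limit` call stacks holding the most live memory after it."""
    tracemalloc.start(frames)          # keep up to `frames` frames per allocation
    try:
        result = func()
        snapshot = tracemalloc.take_snapshot()
    finally:
        tracemalloc.stop()
    stats = snapshot.statistics("traceback")   # group live blocks by call stack
    return result, stats[:limit]


if __name__ == "__main__":
    def build_cache():
        return {i: str(i) * 20 for i in range(100_000)}

    cache, top = top_allocation_sites(build_cache)
    for stat in top:
        print(f"{stat.size / 1024:.0f} KiB in {stat.count} blocks")
        for line in stat.traceback.format():
            print("   ", line)
```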

@Russ: You can generate flame graphs in a more configurable way with TraceEvent: http://geekswithblogs.net/akraus1/archive/2013/06/10/153104.aspx But you should be aware that flame graphs, although nice, will not be the solution to your perf problems. They only give you a different view, in which you can potentially find some specific CPU issues faster than without them.

Came here to point out how incredibly informative Tess Ferrandez's blog is for WinDbg and the Channel9 Defrag Tools series for pretty much everything else you mentioned and Dominic and Murray beat me to it.

Visual Studio includes many tools to help with diagnosing issues in production. The talk “Diagnosing Issues in Production Environments with Visual Studio 2013” TechEd 2014 (http://channel9.msdn.com/Events/TechEd/NorthAmerica/2014/DEV-B366#fbid=) gives a good overview of the available tools. Specifically remote debugging with Visual Studio (@5:32), using Visual Studio’s standalone profiler to collect reports in production (analysis is then done back in Visual Studio--@23:09), using Application Insights and/or IntelliTrace to diagnose errors, performance (@35:00), and memory issues (@48:07), and dump debugging in Visual Studio (@58:52).

If you have feedback on the capabilities of Visual Studio please contact us at vsdbgfb@microsoft.com
