The Lucene formula: TF & IDF

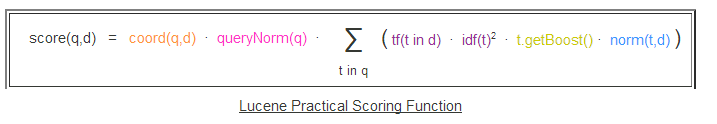

The basis of how Lucene work is tf–idf, short for term frequency–inverse document frequency. You can read about it here. It is pretty complex, but it is explained in detailed in the Lucene docs. It all fall down to:

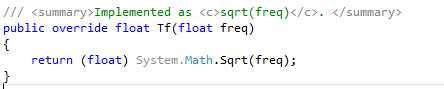

For now, I want to look at the tf() function. The default implementation is in DefaultSimilarity.Tf, and is defined as:

That isn’t really helpful, however. Not without knowing what freq is. And I’m pretty sure that Sqrt isn’t cheap, which probably explains this:

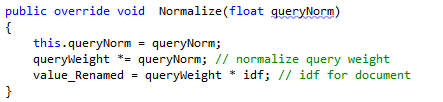

So it caches the score function, and it appears that the tf() is purely based on the count, not on anything else. Before I’ll go and find out what is done with the score cache, let’s look at the weight value. This is calculated here:

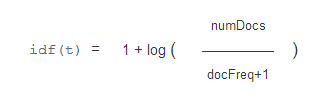

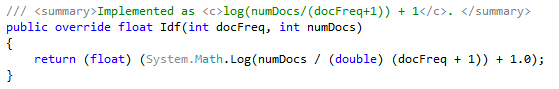

And idf stands for inverse document frequency. That is defined by default to be:

And the actual implementation as:

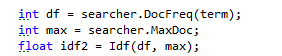

And the caller for that is:

So, we get the document doc frequency of a term, that is, in how many documents this term shows, and the number of all the documents, and that is how we calculate the idf.

So far, so good. But how about that doc frequency? This is one of the things that we store in the terms info file. But how does that get calculated?

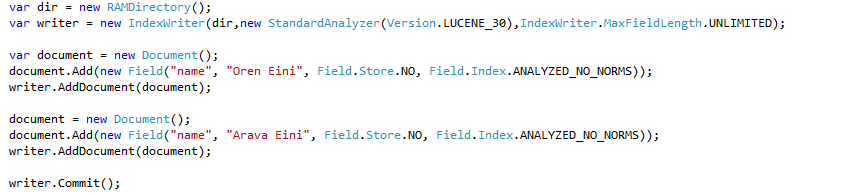

I decided to test this with:

This should generate 3 terms, two with a frequency of 1 and one with a frequency of 2. Now, to figure out how this is calculated. That part is a real mess.

During indexing, there is a manually maintained hash table that contains information about each unique term, and when we move from one document to another, we write the number of times each term appeared in the document. For fun, this is written to what I think is an in memory buffer for safe keeping, but it is really hard to follow.

Anyway, I now know enough about how that works for simple cases, where everything happens in a single segment. Now let us look what happens when we use multiple segments. It is actually quite trivial. We just need to sum the term frequency each term across all segments. This gets more interesting when we involve deletes. Because of the way Lucene handle deletes, it can’t really handle this scenario, and deleting a document do not remove its frequency counts for the terms that it had. That is the case until the index does a merge, and fix everything that way.

So now I have a pretty good idea about how it handled the overall term frequency. Note that this just gives you the number of times this term has been seen across all documents. What about another important quality, the number of times this term appears in a specific document? Those are stored in the frq file, and they are accessible during queries. This is then used in conjunction with the overall term frequency to generate the boost factor per result.

Comments

as a long time user of lucene.net this is actually very interesting. i've never had reason to "go down into the basement" and see whats happening since its always worked fine from up top, but for completeness its nice to see what exactly a library or piece of code you've included in your project is actually doing, thanks for doing this

Great post, always interesting to understand how the code is working behind the scenes.

Bit off topic but do you know what the current state of Lucene.Net development is?

Experiencing some issues in the latest version using geospatial search, from what I understand I'll need to use the older 2.9.4 version to get this up and running.

I've checked the JIRA and can't see any recent activity.

Steve, You need to check the mailing list for that.

Thanks Oren, will do.

Are you still activly contributing?

Steve, To Lucene? I'm not contributing to that.

Indeed. Looks like it could do with a few more comitters.

I expect at some point you might need to work lucene in order for Raven to make use of it's new features?

Comment preview