The Lucene disk format

I realized lately that I wanted to know a lot more about exactly how Lucene is storing data on disk. Oh, I know the general stuff about segments and files, etc. But I wanted to know the actual bits & bytes. So I started tracing into Lucene and trying to figure out what it is doing.

And, by the way, the only thing that the Lucene.NET codebase is missing is this sign:

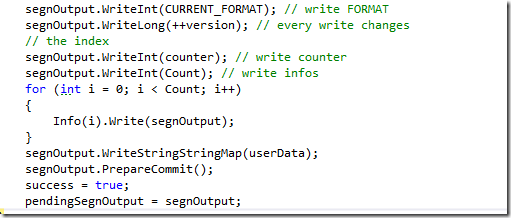

At any rate, this is how Lucene writes the segment file. Note that this is done in a CRC32-checksummed file:

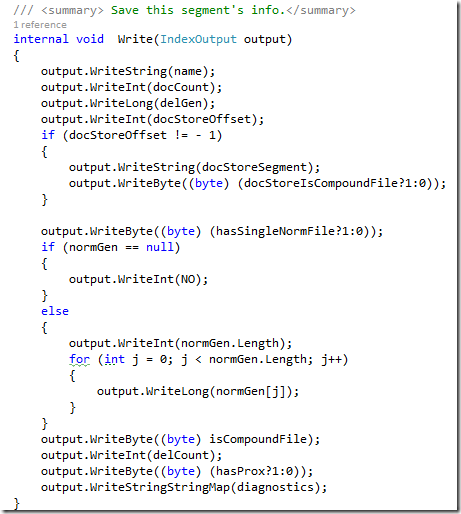
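To make the checksum idea concrete, here is a minimal Python sketch of a writer that keeps a running CRC32 over everything it writes and appends it at the end. This is not Lucene's code: the `ChecksummedWriter` and `verify` names are made up for illustration, and the 8-byte big-endian trailer is an assumption of this sketch.

```python
import io
import struct
import zlib


class ChecksummedWriter:
    """Wraps a binary stream and keeps a running CRC32 of every byte written."""

    def __init__(self, stream):
        self._stream = stream
        self._crc = 0

    def write(self, data: bytes) -> None:
        # zlib.crc32 accepts the previous value, so the checksum can be
        # updated incrementally as data is written.
        self._crc = zlib.crc32(data, self._crc)
        self._stream.write(data)

    def finish(self) -> None:
        # Assumed layout: the checksum goes last, as a big-endian 64-bit integer.
        self._stream.write(struct.pack(">q", self._crc))


def verify(blob: bytes) -> bool:
    """Recompute the CRC32 of the payload and compare it with the trailer."""
    payload, trailer = blob[:-8], blob[-8:]
    (stored,) = struct.unpack(">q", trailer)
    return zlib.crc32(payload) == stored


buf = io.BytesIO()
writer = ChecksummedWriter(buf)
writer.write(b"segments")
writer.write(struct.pack(">i", 10))
writer.finish()
```

A reader that gets a mismatch from `verify` knows the file is damaged, although the checksum does not say where.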

And the info write method is:

Today, I would probably use a JSON file for something like that (bonus point, you know if it is corrupted and it is human readable), but this code was written in 2001, so that explains it.
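As a rough idea of what I mean, here is a small Python sketch. The field names (`version`, `segments`, `name`, `doc_count`) are invented for the example and are not what Lucene actually stores. Note that a JSON parser only catches structural damage such as truncation; a flipped digit inside a number would still parse, so this is not a replacement for a checksum.

```python
import json


def dump_segments(version, segments):
    """Serialize hypothetical segment metadata as human-readable JSON."""
    return json.dumps({"version": version, "segments": segments}, indent=2)


def load_segments(text):
    """Return the parsed metadata, or None if the text is not valid JSON."""
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        # A truncated or structurally mangled file ends up here.
        return None


text = dump_segments(3, [{"name": "_0", "doc_count": 10}])
```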

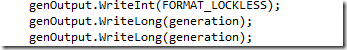

This is the format of a segment file, and the segments.gen file is generated using:

Moving on to actually writing data, I created ten Lucene documents and wrote them. Then I just debugged through the code to see what would happen. It started by creating the _0.fdx and _0.fdt files. The .fdt is for the stored field data, and the .fdx is for the field index.

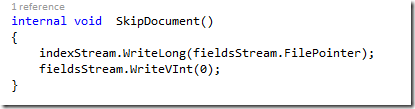

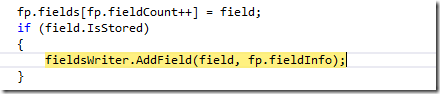

Both of those files are used when writing the stored fields. This is the empty operation, writing an unstored field.

This is how fields are actually stored:

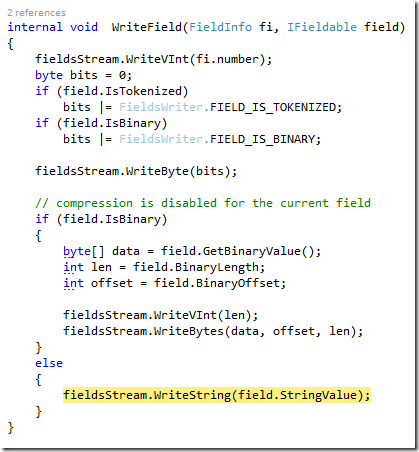

And then it ends up in:

Note that this particular data goes in the fdt file, while the fdx appears to be a quick way to go from a known document id to the relevant position in the fdt file.
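The relationship between the two files can be sketched in a few lines of Python. This is a simplification under my own assumptions: the index holds one fixed-width 64-bit offset per document, and each data record is a made-up "4-byte length plus UTF-8 text" layout rather than Lucene's real field encoding. Any file headers are left out.

```python
import io
import struct

POINTER_SIZE = 8  # one fixed-width 64-bit offset per document in the index


def write_documents(docs, fdt, fdx):
    """Append each document to the data stream and its offset to the index."""
    for text in docs:
        # Record where this document starts in the data file.
        fdx.write(struct.pack(">q", fdt.tell()))
        data = text.encode("utf-8")
        # Hypothetical record layout: length prefix, then the bytes.
        fdt.write(struct.pack(">i", len(data)))
        fdt.write(data)


def read_document(doc_id, fdt, fdx):
    """Jump straight to a document using its id, without scanning the data."""
    fdx.seek(doc_id * POINTER_SIZE)
    (offset,) = struct.unpack(">q", fdx.read(POINTER_SIZE))
    fdt.seek(offset)
    (length,) = struct.unpack(">i", fdt.read(4))
    return fdt.read(length).decode("utf-8")


fdt, fdx = io.BytesIO(), io.BytesIO()
docs = [f"document number {i} " * (i + 1) for i in range(10)]
write_documents(docs, fdt, fdx)
```

Because every index entry has the same width, finding document 7 is a single seek to byte `7 * 8` in the index followed by a single seek in the data file, no matter how large the earlier documents were.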

As I was going through the code, I did some searches and found a very detailed explanation of the actual file format in the docs. That is really nice and quite informative. However, just seeing how the “let us take the documents and make them searchable” part works is quite interesting. Lucene has a lot of chains of responsibility going through it, and it is also quite interesting to see the design choices that were made.

Unfortunately, Lucene is very much wedded to its file format, and making changes to it isn’t going to be possible, which is a shame, since it impacts quite a lot of the way Lucene works in general.

Comments

Lucene 4, in Java, has a pluggable postings format.

Anyway, I don't agree with you about using JSON. There are situations where even the integer compression algorithm it uses makes all the difference in the world. I have a 10TB Lucene index that would not be possible if it was stored in JSON.

Juan, I wasn't talking about the actual data files. I'm talking about the segments file. There is very little need to try to save space there, and making it more human readable is a major plus.
