RavenDB Map/Reduce optimizations

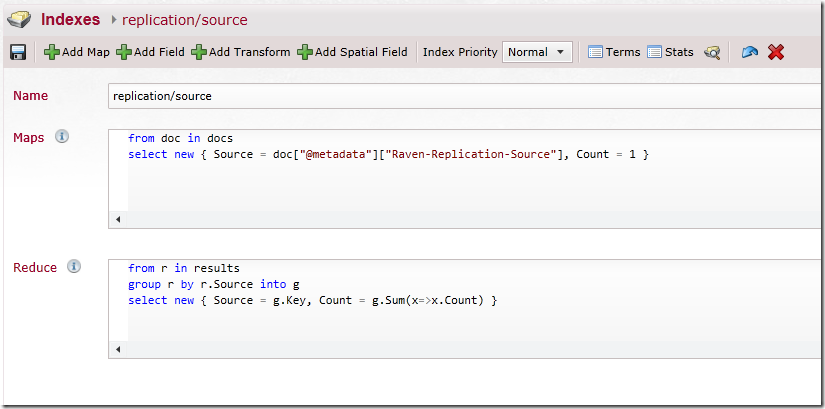

So I was diagnosing a customer problem, which required me to write the following:

This is working on a data set of about half a million records.

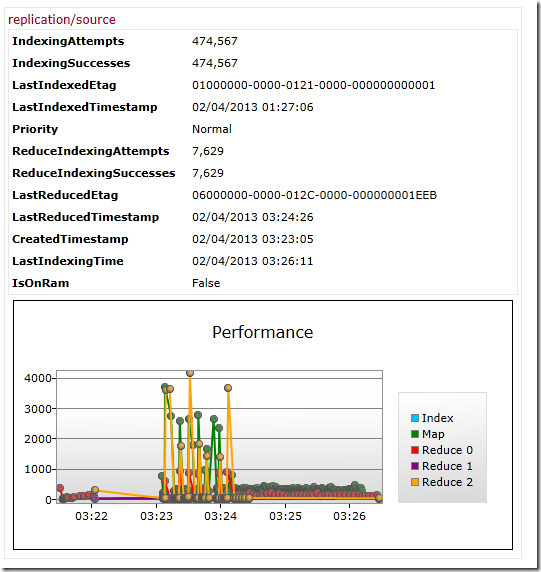

I took a peek at the stats and I saw this:

You can ignore everything before 03:23; that is the previous index run. I reset the index to make sure that I had a clean test.

What you can see is that we start out by mapping and reducing values, and initially this is quite expensive. But very quickly we recognize that we are reducing a single value, so we switch to a more efficient strategy, and the cost suddenly drops to almost nothing. In fact, the entire process took about 3 minutes from start to finish, and we very quickly reached the point where our bottleneck was actually the maps pushing data our way.

That is pretty cool.
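The strategy switch described above can be illustrated with a small sketch. This is not RavenDB's actual implementation (which the post doesn't show); it is a hypothetical reducer that picks a strategy per reduce key: a single value is passed through untouched, a small group is reduced in one call, and a large group is reduced in fixed-size buckets whose partial results are then reduced again. The names `reduce_by_key` and `bucket_size`, and the default of 1024, are assumptions for illustration. The sketch also assumes the reduce function is re-reducible (reducing partial results gives the same answer as reducing everything at once) and that reducing a single value returns that value unchanged.

```python
from collections import defaultdict
from typing import Callable, Dict, Hashable, Iterable, List, Tuple, TypeVar

V = TypeVar("V")


def reduce_by_key(
    mapped: Iterable[Tuple[Hashable, V]],
    reduce_fn: Callable[[List[V]], V],
    bucket_size: int = 1024,  # hypothetical threshold, not a RavenDB setting
) -> Tuple[Dict[Hashable, V], Dict[str, int]]:
    """Hypothetical per-key strategy selection for one map/reduce pass.

    Assumes reduce_fn is re-reducible and that reducing a single value
    returns that value unchanged.
    """
    if bucket_size < 2:
        # With buckets of size 1 the multi-step loop would never shrink.
        raise ValueError("bucket_size must be at least 2")

    # Group the map output by reduce key.
    groups: Dict[Hashable, List[V]] = defaultdict(list)
    for key, value in mapped:
        groups[key].append(value)

    results: Dict[Hashable, V] = {}
    stats = {"passthrough": 0, "single_step": 0, "multi_step": 0}
    for key, values in groups.items():
        if len(values) == 1:
            # Cheapest path: a single value has nothing to combine with.
            results[key] = values[0]
            stats["passthrough"] += 1
        elif len(values) <= bucket_size:
            # Small group: one reduce call over all of its values.
            results[key] = reduce_fn(values)
            stats["single_step"] += 1
        else:
            # Large group: reduce fixed-size buckets, then reduce the partial
            # results, repeating until at most one bucket's worth remains.
            level = values
            while len(level) > bucket_size:
                level = [
                    reduce_fn(level[i : i + bucket_size])
                    for i in range(0, len(level), bucket_size)
                ]
            results[key] = reduce_fn(level)
            stats["multi_step"] += 1
    return results, stats
```

With `sum` as the reduce function and the default bucket size, 3,000 entries under one key are reduced in two levels (three partial sums, then one final sum), while a key with a single entry never calls the reduce function at all.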

Comments

I was looking at the graph first and thought, "Holy crap, that's a lot of work." Then I saw that it was done in only a second, and realized you weren't actually pointing out a problem, but some kind of trickery you do. Then I read the post. :) Good work, team.

Awesome...

Sometimes you remind me of Hannibal Smith from the A-Team:

It's somewhat similar to your trademark "it just works".
