Rob’s Sprint: The cost of getting data from LevelDB

We are currently investigating the use of LevelDB as a storage engine in RavenDB. Two of the things we feel very strongly about are transactions (LevelDB doesn’t have them) and performance (by a different definition than the one usually bandied about).

LevelDB does have atomicity, and the rest of ACID (consistency, isolation, durability) can be built on top of that without too much complexity (we have already done so, in fact). But we ran into an issue when looking at read performance. I am not sure whether this is unique to us, but in our scenario we typically deal with relatively large values; documents of several MB are quite common. That makes us very sensitive to memory allocations. It doesn’t help that we have very little control over the Large Object Heap, so it was with great interest that we looked at how LevelDB does things.

The actual code makes a lot of sense (more on that later; I will probably do a big review of it). But one thing really didn’t make any sense to us: reading a value by key.

We started out using LevelDB Sharp:

```csharp
Database.Get("users/1");
```

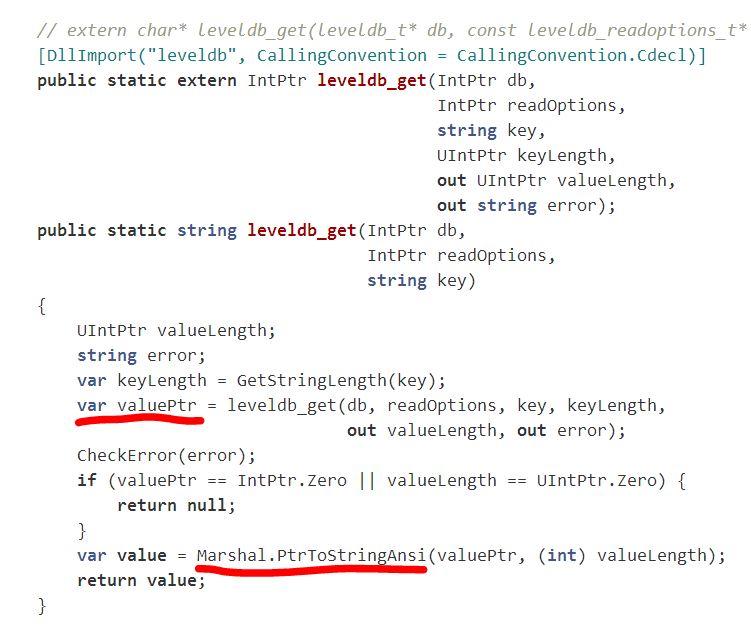

This in turn result in the following getting called:

A few things to note here, all from the point of view of someone who deals with very large values:

- `valuePtr` is never released, even though it was allocated by us.

- We copy the value from `valuePtr` into a string, resulting in two copies of the data and twice the memory usage.

- There is no way to get only part of the data.

- There is no way to get binary data (for example, encrypted values).

- This is going to put a lot of pressure on the Large Object Heap.
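The binary-data and ownership bullets are easy to see in C terms. The sketch below uses two hypothetical helpers (`c_string_view_len`, `copy_value`) and is not a description of how LevelDB Sharp actually converts the value. Any interface that treats the value as a NUL-terminated string loses everything after the first zero byte, which encrypted or compressed payloads of several MB will almost certainly contain, while a copy driven by an explicit length keeps every byte. Whoever receives a `malloc`'d buffer owns it and must release it; for buffers returned by `leveldb_get`, current versions of LevelDB's C API provide `leveldb_free` for exactly this.

```c
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: how many bytes a NUL-terminated string interface
   would see, i.e. everything before the first zero byte. */
static size_t c_string_view_len(const char *buf, size_t len) {
    const char *nul = memchr(buf, '\0', len);
    return nul != NULL ? (size_t)(nul - buf) : len;
}

/* Hypothetical helper: copies exactly len bytes into a new buffer.
   The caller owns the result and must release it with free(). */
static char *copy_value(const char *src, size_t len) {
    char *dst = malloc(len > 0 ? len : 1);
    if (dst != NULL && len > 0) {
        memcpy(dst, src, len);
    }
    return dst;
}
```

For a value such as `{'a', 'b', '\0', 'c', 'd', 'e'}`, the string view stops after 2 bytes while the length-based copy keeps all 6. Applied to the first bullet, the fix is simply to release `valuePtr` once its contents have been copied out.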

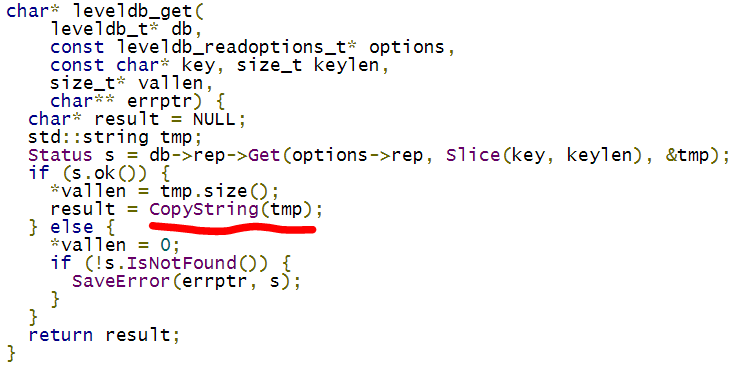

But wait, it actually gets better. Let us look at the LevelDB method that get called:

So we are actually copying the data multiple times now. For fun, the `db->rep->Get()` call also copies the data. And that is pretty much where we stopped looking.
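To put numbers on the copy chain, here is a small bookkeeping model in C. It is illustrative only, not LevelDB or LevelDB Sharp code, and `copy_stats`, `counted_copy` and `model_get_path` are hypothetical names. It assumes the path described above: one copy into the `std::string` that `db->rep->Get()` fills, one into the `malloc`'d buffer that `leveldb_get` returns (LevelDB's `c.cc` builds it with a `CopyString` helper), and one into the string built by the C# wrapper. As a simplification the managed copy is counted at the same byte size, although a .NET string stores UTF-16 and can be larger.

```c
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Illustrative bookkeeping for the read path; models copies only. */
typedef struct {
    size_t copies;      /* full copies of the value made so far */
    size_t live_bytes;  /* bytes currently held by those copies */
    size_t peak_bytes;  /* high-water mark of live_bytes */
} copy_stats;

static void *counted_copy(copy_stats *st, const void *src, size_t len) {
    void *dst = malloc(len ? len : 1);
    if (dst == NULL) {
        return NULL;
    }
    memcpy(dst, src, len);
    st->copies++;
    st->live_bytes += len;
    if (st->live_bytes > st->peak_bytes) {
        st->peak_bytes = st->live_bytes;
    }
    return dst;
}

static void counted_free(copy_stats *st, void *p, size_t len) {
    free(p);
    st->live_bytes -= len;
}

/* Models: stored bytes -> std::string filled by db->rep->Get()
   -> malloc'd buffer returned by leveldb_get -> the wrapper's string.
   Returns 0 on success, -1 if an allocation fails. */
static int model_get_path(const void *stored, size_t len, copy_stats *st) {
    memset(st, 0, sizeof *st);

    void *tmp = counted_copy(st, stored, len);        /* copy 1: inside Get() */
    if (tmp == NULL) {
        return -1;
    }
    void *value_ptr = counted_copy(st, tmp, len);     /* copy 2: leveldb_get's result */
    counted_free(st, tmp, len);                       /* tmp dies when leveldb_get returns */
    if (value_ptr == NULL) {
        return -1;
    }
    void *managed = counted_copy(st, value_ptr, len); /* copy 3: the wrapper's string */
    /* The wrapper never frees valuePtr; the model frees it here only so
       that the model itself does not leak. */
    counted_free(st, value_ptr, len);
    if (managed == NULL) {
        return -1;
    }
    counted_free(st, managed, len);
    return 0;
}
```

For a hypothetical 4 MB document the model reports three full copies with at most two of them (8 MB) alive at once, and that is before accounting for the wrapper leaking `valuePtr` on every call.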

We are actually going to need to write a new C API and export it so that we can use it from our C# code. Fun, or not.
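A minimal sketch of what a range-reading entry point in such a C API could look like, assuming the native side already holds a pointer and length for the value (`value_view`). Every name here is hypothetical; none of them are LevelDB functions. One plausible source for the view is an iterator positioned on the key: LevelDB's C API exposes `leveldb_iter_value`, which returns a pointer into the iterator's current entry (valid only until the iterator moves) rather than a fresh `malloc`'d copy, but whether that fits RavenDB's needs is an assumption. The caller supplies the destination buffer, so nothing has to be freed across the boundary, bytes are copied verbatim, and a large value can be read partially or streamed through a small fixed buffer.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical: a borrowed, read-only view of a value held by the native
   side. The view does not own the bytes. */
typedef struct {
    const unsigned char *data;
    size_t len;
} value_view;

/* Hypothetical range read: copies up to dst_cap bytes, starting at offset,
   into the caller-owned dst. Zero bytes are preserved. Returns the number
   of bytes copied (0 once offset reaches the end of the value). */
static size_t value_read_range(value_view v, size_t offset, void *dst, size_t dst_cap) {
    if (offset >= v.len || dst_cap == 0) {
        return 0;
    }
    size_t n = v.len - offset;
    if (n > dst_cap) {
        n = dst_cap;
    }
    memcpy(dst, v.data + offset, n);
    return n;
}

/* Callback that receives each chunk; returning nonzero stops the stream. */
typedef int (*chunk_sink)(const void *chunk, size_t len, void *ctx);

/* Streams the value through one caller-supplied buffer of cap bytes, so the
   caller never needs a single allocation as large as the value.
   Returns the number of bytes handed to the sink. */
static size_t value_stream(value_view v, void *buf, size_t cap, chunk_sink sink, void *ctx) {
    size_t offset = 0;
    size_t n;
    while ((n = value_read_range(v, offset, buf, cap)) > 0) {
        offset += n;
        if (sink(buf, n, ctx) != 0) {
            break;
        }
    }
    return offset;
}

/* Example sink: only tallies the bytes it receives. */
static int count_sink(const void *chunk, size_t len, void *ctx) {
    (void)chunk;
    *(size_t *)ctx += len;
    return 0;
}
```

Because `value_stream` reuses the caller's buffer, the extra memory on the calling side is bounded by `cap` rather than by the document size; a managed buffer below the 85,000-byte threshold also stays off the Large Object Heap.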

More posts in "Rob’s Sprint" series:

- (08 Mar 2013) The cost of getting data from LevelDB

- (07 Mar 2013) Result Transformers

- (06 Mar 2013) Query optimizer jumped a grade

- (05 Mar 2013) Faster index creation

- (04 Mar 2013) Indexes and the death of temporary indexes

- (28 Feb 2013) Idly indexing

Comments

Reading the limitations list, do you really think it would be a good match for RavenDB?

Specifically:

Only a single process (possibly multi-threaded) can access a particular database at a time.

@Rei - AFAIK Esent (as currently used by RavenDB) is also accessible from one process at a time.

@James: yep, it does seem so "... The database file cannot be shared between multiple processes simultaneously..."

source: http://blogs.msdn.com/b/windowssdk/archive/2008/10/23/esent-extensible-storage-engine-api-in-the-windows-sdk.aspx

LevelDB handles concurrency across threads for most operations, which is what is required.

Why write a new API? Why not contribute changes to the existing projects instead?

Matt, it's a rabbit hole when you only need 10% of the functionality and would have to do 100% of the work to get that 10% into the other projects.

Especially the LevelDB C API: do you really think they're going to take pull requests for this?

Here's something from the LevelDB benchmarks:

LevelDB doesn't perform as well with large values of 100,000 bytes each. This is because LevelDB writes keys and values at least twice: first time to the transaction log, and second time (during a compaction) to a sorted file. With larger values, LevelDB's per-operation efficiency is swamped by the cost of extra copies of large values.

JDice, sure, but that is something you are going to have in any ACID database with large values.

Instead of going the platform invoke way, work with Managed C++ and IJW ("It Just Works") interop. Not only will it be easier to integrate, it will also perform better.

Ido, The problem is that managed C++ won't work with Mono.

Gotcha. Take a look at this solution; it looks interesting: http://tirania.org/blog/archive/2011/Dec-19.html
