Why TCP is evil and HTTP is king

Nitpicker corner: If you tell me that HTTP is built on TCP I’ll agree, then point out that this is completely irrelevant to the discussion.

I got asked why RavenDB uses HTTP for transport, instead of TCP. Surely binary TCP would be more efficient to work with, right?

Well, the answer is complex, but it boils down to this:

Huh? What does Fiddler have to do with RavenDB's transport mechanism?

Quite a lot, actually. Using HTTP enables us to do a lot of amazing things, but the most important thing it allows us to do?

It is freaking easy to debug and work with.

- It has awesome tools like Fiddler that are easy to use and understand.

- We can piggyback on things like IIS for hosting easily.

- We can test out scaling with ease using off-the-shelf tools.

- We can use hackable URLs to demo how things work at the wire level.

In short, HTTP is human readable.
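To make "human readable" concrete, here is a minimal Python sketch of the bytes a document fetch puts on the wire. The `/docs/users/1` path and the `localhost:8080` host are hypothetical placeholders, not taken from RavenDB's documentation; the point is only that every byte is plain text you can read in Fiddler, or type by hand into a telnet session.

```python
def build_get_request(path: str, host: str) -> bytes:
    """Build a minimal HTTP/1.1 GET request as the raw bytes sent over the socket."""
    lines = [
        f"GET {path} HTTP/1.1",     # request line: method, target, version
        f"Host: {host}",            # the one header every HTTP/1.1 request must carry
        "Accept: application/json",
        "",                         # an empty line ends the header section...
        "",                         # ...so the request ends with CRLF CRLF
    ]
    return "\r\n".join(lines).encode("ascii")


if __name__ == "__main__":
    # Hypothetical document URL; substitute whatever the server actually exposes.
    raw = build_get_request("/docs/users/1", "localhost:8080")
    print(raw.decode("ascii"))
```

Because the URL is the whole request, "hacking" it in a browser address bar is enough to demo what the server does.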

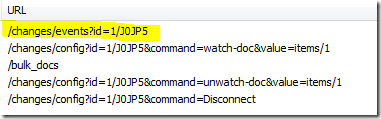

For that matter, I just remapped the Changes API solely for the purpose of making it Fiddler-friendly.

This comes back again to building pro software: making sure that your clients and your support team can diagnose things easily.

Compare this:

To this:

There is an entire world of difference in the quality of support you can give with each of the two.

And that is why RavenDB is using HTTP, because going with TCP would mean writing a lot of our own tools and making things harder all around.

Comments

You should look two rows down in Wireshark, on "GET / HTTP/1.1" - that'll give you the complete, readable, HTTP request.

Never mind, I'm just tired :)

I will also add: HTTP is stateless, TCP is stateful. That is why HTTP can scale so well.

There should really be a lot more emphasis on the words "...making sure that your clients and your support team can diagnose things easily."

I feel compelled to tell you that HTTP is built on TCP. :-)

Omer Mor, I agree, and this is completely irrelevant to this discussion.

@Omer It is not. Current implementations use TCP, but you can use any underlying transport as long as you can simulate request and response. The HTTP spec is transport-agnostic.

For example, you can have an implementation that uses files and simulates request and response using matching file names.
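A toy sketch of that file-based exchange, to show that the HTTP message format itself does not care how the bytes travel. Everything here (the exchange directory, the `.request`/`.response` suffixes, the echo-style handler) is a hypothetical illustration; the messages themselves are ordinary HTTP/1.1 text.

```python
import tempfile
from pathlib import Path


def send_via_files(exchange_dir: Path, request_id: str, raw_request: bytes) -> Path:
    """Client side: 'send' a request by writing it to <request_id>.request."""
    path = exchange_dir / f"{request_id}.request"
    path.write_bytes(raw_request)
    return path


def serve_pending(exchange_dir: Path) -> int:
    """Server side: answer every <id>.request with a matching <id>.response file."""
    handled = 0
    for req_path in sorted(exchange_dir.glob("*.request")):
        # Parse just the request line: "METHOD target HTTP/x.y".
        request_line = req_path.read_bytes().split(b"\r\n", 1)[0].decode("ascii")
        method, target, version = request_line.split(" ")
        body = f"you asked for {method} {target}".encode("ascii")
        response = (
            f"{version} 200 OK\r\n"
            "Content-Type: text/plain\r\n"
            f"Content-Length: {len(body)}\r\n"
            "\r\n"
        ).encode("ascii") + body
        req_path.with_suffix(".response").write_bytes(response)  # matching file name
        req_path.unlink()  # the request has been consumed
        handled += 1
    return handled


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        exchange = Path(d)
        send_via_files(exchange, "42", b"GET /docs/users/1 HTTP/1.1\r\nHost: files\r\n\r\n")
        serve_pending(exchange)
        print((exchange / "42.response").read_bytes().decode("ascii"))
```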

Indeed. Between ease of debugging (been a fan of Fiddler for a long, long time now) and load balancing, HTTP as a transport is a no-brainer for nearly all uses.

This doesn't mean TCP is evil, it just means HTTP is far more supportable and a better choice. With TCP you have to write your own protocol on top of it, whereas HTTP is already defined and has lots of tools available and makes more sense.

@Ryan...I was going to say the same thing. It's largely a matter of having readily available and easy to use tools instead of having to build your own (and support/document/train users on them). To me, this looks a lot like a good example of the "unix philosophy" working well.

Unless you use HTTP specifics in the server layer itself, you could always have multiple endpoints listening on both HTTP and TCP: the real client-server talk would go over TCP, while debugging and demoing could use HTTP.
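A minimal sketch of what "multiple endpoints" could look like when the server logic knows nothing about the wire: one core operation and two thin adapters. The in-memory `STORE`, the `/docs/` path and the 4-byte length-prefixed binary format are all hypothetical, chosen only for illustration; the adapters work on raw bytes so the idea can be shown without opening sockets.

```python
import json
import struct
from typing import Optional

# Hypothetical in-memory store standing in for the database.
STORE = {"users/1": {"Name": "Example"}}


def get_document(doc_id: str) -> Optional[dict]:
    """Transport-agnostic core operation: knows nothing about HTTP or framing."""
    return STORE.get(doc_id)


def http_adapter(raw_request: bytes) -> bytes:
    """Expose the core via a (very) minimal HTTP/1.1 request-line parser."""
    request_line = raw_request.split(b"\r\n", 1)[0].decode("ascii")
    method, path, _version = request_line.split(" ")
    prefix = "/docs/"
    if method != "GET" or not path.startswith(prefix):
        return b"HTTP/1.1 400 Bad Request\r\nContent-Length: 0\r\n\r\n"
    doc = get_document(path[len(prefix):])
    if doc is None:
        return b"HTTP/1.1 404 Not Found\r\nContent-Length: 0\r\n\r\n"
    body = json.dumps(doc).encode("utf-8")
    head = (
        "HTTP/1.1 200 OK\r\n"
        "Content-Type: application/json\r\n"
        f"Content-Length: {len(body)}\r\n\r\n"
    )
    return head.encode("ascii") + body


def tcp_adapter(frame: bytes) -> bytes:
    """Expose the same core via a hypothetical binary format:
    request  = 4-byte big-endian length + UTF-8 document id
    response = 4-byte big-endian length + UTF-8 JSON (length 0 means not found)."""
    (length,) = struct.unpack(">I", frame[:4])
    doc_id = frame[4:4 + length].decode("utf-8")
    doc = get_document(doc_id)
    payload = b"" if doc is None else json.dumps(doc).encode("utf-8")
    return struct.pack(">I", len(payload)) + payload
```

Both adapters return the same JSON for the same document, which is exactly why the next question, what the binary endpoint actually buys you, is the right one to ask.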

But the real question here is: what will you gain? Not much, I say.

The overhead of HTTP over TCP is not very large to begin with: if you keep headers to the minimum required, it is negligible, if not nonexistent. And you already use keep-alive and HTTP streaming in the system, so even the reconnect overhead can be mitigated, while HTTP's stateless semantics help a lot with scalability and HA.
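One way to put a number on that overhead is to measure the bytes a minimal request spends on its request line and headers, next to the payload. This is a sketch with a hypothetical endpoint and document; real clients usually send more headers (User-Agent, Accept-Encoding and so on), so treat the result as a lower bound rather than a benchmark.

```python
import json


def build_put_request(path: str, host: str, document: dict) -> tuple[bytes, int]:
    """Return (raw request bytes, bytes spent on request line + headers)."""
    body = json.dumps(document).encode("utf-8")
    head = (
        f"PUT {path} HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "Content-Type: application/json\r\n"
        f"Content-Length: {len(body)}\r\n"
        "\r\n"                      # end of headers
    ).encode("ascii")
    return head + body, len(head)


if __name__ == "__main__":
    doc = {"Name": "Example", "Tags": ["http", "tcp"]}   # hypothetical document
    raw, overhead = build_put_request("/docs/users/1", "localhost:8080", doc)
    print(f"total={len(raw)} bytes, header overhead={overhead} bytes")
```

The header cost is roughly fixed per request, so it shrinks relative to the payload as documents grow, and with keep-alive no extra TCP handshake is paid per request.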

Bottom line: HTTP is certainly the right way to go here, especially since, at the bottom of it all, the underlying storage is disk, and IO costs will make the HTTP overhead (if it exists at all) a non-issue. Only in systems that write to memory does binary-over-TCP make sense and might be worth the extra effort (e.g. Memcache, Gigaspaces, MongoDB, etc.).

Yes, but can HTTP perform as well as TCP? I guess if performance is adequate enough, then next you consider other things like debugging in your case. :)

Why restrict yourself to HTTP or TCP? Why not use WCF and let it handle the transport for you? Then you can let the consumer decide what transport to use. Default it to HTTP, of course.

While I completely understand what you're getting at Ayende - I think the point is rather moot. It's akin to saying "Machine Code is Evil and High Level Languages are King".... One ultimately boils down to the other, the high level abstraction was always intended to be easier. Let the abstraction layer generate the low level TCP/Machine Code - it's nothing new...

Pretty broad comment to make, and impossible to defend. In the context of the question (why does RavenDB use HTTP) it is one thing; in the context of any other particular application on the planet, it might not be. I have worked on a number of applications with high-throughput requirements. In all cases, HTTP ground to a halt and TCP (via WCF, as mentioned above) handled the communication with plenty more 'pipe' available to scale up. Moral of the story: don't make broad statements that everyone knows are unfounded and downright untrue.

Magellings, Small test scenarios such as Google and Amazon suggest that it might be possible to scale HTTP.

Stephen, Seriously?! You really want to bring WCF into the discussion?

Sure, here is why I don't use it. WCF is so complex, it dwarfs anything that you use next to it. I have no desire or intention to spend days or weeks configuring things and trying to figure out why something that should work doesn't.

I don't pretend to know RavenDB's needs when implementing a transport. I'm sure it's far more complex than anything I would use or need.

But I've had a different experience with WCF and have found it fairly intuitive to use, and I love how configurable it's been. The only complex thing I've run into is implementing forms authentication through it.

I was mentioning WCF because it would give users of RavenDB more choices in how they can communicate with RavenDB.

Would you consider offering both the HTTP implementation you have and WCF (it might be double work, though)? Just a thought.

Stephen, It would be five times the work, and a really complex one at that. Just as a hint, 304 support is key for many RavenDB scenarios, doing that with WCF is awkward.
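For readers wondering what "304 support" involves at the protocol level: the client sends back the ETag it cached in an `If-None-Match` header, and the server answers `304 Not Modified` with an empty body when nothing has changed. The sketch below shows only that exchange; deriving the ETag from a SHA-256 of the document is a hypothetical scheme (a database would more likely use its own version counter), headers are assumed to arrive in a plain dict with exact-case keys, and weak `W/` validators are not handled.

```python
import hashlib
import json


def make_etag(document: dict) -> str:
    """Hypothetical strong ETag: a short hash of the document's canonical JSON."""
    canonical = json.dumps(document, sort_keys=True).encode("utf-8")
    return '"' + hashlib.sha256(canonical).hexdigest()[:16] + '"'


def conditional_get(request_headers: dict, document: dict) -> tuple[int, dict, bytes]:
    """Return (status, headers, body) for a GET that honours If-None-Match."""
    etag = make_etag(document)
    client_tags = [t.strip() for t in request_headers.get("If-None-Match", "").split(",")]
    if etag in client_tags or "*" in client_tags:
        # The client's cached copy is current: confirm the ETag, send no body.
        return 304, {"ETag": etag}, b""
    body = json.dumps(document).encode("utf-8")
    headers = {
        "ETag": etag,
        "Content-Type": "application/json",
        "Content-Length": str(len(body)),
    }
    return 200, headers, body
```

Usage: the first GET returns 200 plus an ETag; repeating it with `{"If-None-Match": etag}` returns 304 and no body until the document changes.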

You should be able to enhance Wireshark to read binary structures from TCP sniffing/tcpdump recordings. But this is a bit more complex and not as out-of-the-box as Fiddler.

Never use hackable URLs. You can just about get away with them for testing, but they should not be allowed in production code. Not only can you use them for experimenting, but so can your customers, black hat hackers, etc. I have applications that I have (legitimately) written to interface with backend databases that exploit the fact that the website doesn't distinguish between GET and POST requests, so the URLs are hackable. Anyone who has done more than 5 minutes of HTML/HTTP can emulate a POST or a GET request, but it will stop rank amateurs. Also, having the query string in the URL fills up the browser history; that is an inconvenience for people using your site, but an absolute disaster for them when they try to go back to some other site because your transactions have pushed their half-remembered URL for that other site out of the history stack.

j5c, Seriously?!?! You really rely on this sort of "security" ?

The point about testing / scalability / hosting is well-made. I'm not sure the human-readable bit is so good, though.

HTTP and TCP are fundamentally different things. TCP is a transport protocol, HTTP is an application protocol. You can't use TCP as the protocol for your application - you will always need to put another application-layer protocol on top of it. If you're using "binary TCP" then you have to have some sort of agreement at each end about what the "binary" payload of the TCP packet means, and there you've defined an application-layer protocol. The fact of the matter is that you do use "binary TCP" as your application protocol. You don't have to, but I'd bet my oldest son that you do. HTTP requires a transport, and for pretty nearly every situation in existence that transport is TCP.

So the correct reason why you don't use "binary TCP" as your application protocol is that you can't - it's not an application protocol.
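The commenter's point is that "binary TCP" always implies an application protocol of your own. Even the smallest one must decide where one message ends and the next begins, because TCP delivers a byte stream, not messages: one `recv()` may return half a frame or several. The 4-byte big-endian length prefix below is one common choice, shown as a hypothetical format, not RavenDB's or anyone else's actual wire protocol. It is the kind of parsing HTTP's `Content-Length` and tools like Fiddler already do for you.

```python
import struct

HEADER = struct.Struct(">I")  # 4-byte big-endian unsigned length prefix


def encode_frame(payload: bytes) -> bytes:
    """Prefix the payload with its length so the receiver knows where it ends."""
    return HEADER.pack(len(payload)) + payload


class FrameDecoder:
    """Reassemble length-prefixed frames from arbitrarily split TCP reads."""

    def __init__(self) -> None:
        self._buffer = bytearray()

    def feed(self, chunk: bytes) -> list[bytes]:
        """Add newly received bytes; return every frame that is now complete."""
        self._buffer.extend(chunk)
        frames = []
        while len(self._buffer) >= HEADER.size:
            (length,) = HEADER.unpack_from(self._buffer, 0)
            end = HEADER.size + length
            if len(self._buffer) < end:
                break  # the rest of this frame has not arrived yet
            frames.append(bytes(self._buffer[HEADER.size:end]))
            del self._buffer[:end]
        return frames


if __name__ == "__main__":
    stream = encode_frame(b"hello") + encode_frame(b"world")
    decoder = FrameDecoder()
    # Simulate TCP delivering the stream in awkward 3-byte pieces.
    for i in range(0, len(stream), 3):
        for frame in decoder.feed(stream[i:i + 3]):
            print(frame)
```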

The trade-off between HTTP and a custom application protocol is another matter, and if HTTP works for you then you've probably made the right choice for all the reasons you've stated. I would point out that it's pretty easy to add custom protocol parsers to Wireshark that will make your custom binary protocol at least as readable as your HTTP sessions (I support reverse-engineered drivers for several application protocols and use this technique to great advantage). But your points about hosting, testability and scalability are all well made. You can of course provide all those tools for your own protocol too, but if they're ready-made then you're on to a winner.

Correction: Should have written: 'The fact of the matter is that you do use "binary TCP" in your application. You don't have to...'

It actually sounds more like an advert for 'look how wonderful Fiddler is'. Just because something doesn't suit your scenario doesn't make it evil. You make a good point about how it suits you, but TCP has much wider uses and, performance-wise, many more opportunities than HTTP, IMHO. Also, depending on your development platform, I would want my application to be transport-independent and use a layer to match the code to the transport. I use IP over InfiniBand between my desktop and server, but using InfiniBand directly would be much faster.

Tom, did you see the nitpicker text above the post?

I'm sorry, but I have to say this is a somewhat stupid comparison... It's just like saying "Why Assembly is evil and C++ is king". Of course C++ (HTTP) is king: it sits at a higher layer and is human readable.

Let me launch another discussion: "Why TDM Frames are evil and TCP is king!"

5 minutes of my life I'm never gonna get back...

PG, Please send me your details and I'll refund your money for this blog.

I'm missing two aspects that nobody talks about! 1. Security - How easy is it to use Fiddler to interfere with the valid communication and crash the system? If it is easy for you to read, it is also easy for a spoiler to spoil; 2. Stability - How easy is it to launch DSA on HTTP? Since HTTP is stateless and mostly anonymous, you can do a lot of mischief which is easily prevented in TCP. So in conclusion, HTTP is for sissies and TCP for pros!!!

I am afraid this article is a great case of comparing apples and oranges. Each of the different layers of the ISO stack has specific functions. A much more interesting question is why HTTP vs XML. Both of these reside at the same layer.

1) Security - does NOT rely on obscurity. 2) You are aware that HTTP is built on TCP, right? Any HTTP attack is also a valid TCP attack.

1) Security has nothing to do with obscurity but with how easy is it to manipulate data and still be valid (A small reminder would be SQL injections in web sites). 2) Though the underlying transport protocol is TCP the HTTP server ACCEPTS any junk request coming from anyone as opposed to a properly defined TCP server (Listener) that will drop invalid requests (Causing a WAIT_TIMEOUT on the client) making it much more difficult to attack.

AS, 1) TCP is just as easy to manipulate. 2) So does TCP. The easiest thing in the world is to send a single byte per second, thus keeping the server busy. By the time you have enough info to know something is wrong, you are already down.
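The trickle attack described here targets the TCP connection, so the usual defence is the same whatever protocol rides on top: give the peer a fixed total time budget to deliver its request, instead of a per-read timeout that each new byte resets. A minimal sketch for an HTTP-style header block follows; the 8 KB cap and the deadline values are arbitrary assumptions, and the same loop would apply to a custom binary handshake.

```python
import socket
import time


def read_headers_with_deadline(sock: socket.socket, deadline_s: float,
                               max_bytes: int = 8192) -> bytes:
    """Read until the blank line ending an HTTP header block, within a total deadline."""
    start = time.monotonic()
    buf = b""
    while b"\r\n\r\n" not in buf:
        remaining = deadline_s - (time.monotonic() - start)
        if remaining <= 0:
            raise TimeoutError("header deadline exceeded")
        sock.settimeout(remaining)  # shrinks with every read, so trickling doesn't help
        try:
            chunk = sock.recv(1024)
        except socket.timeout:
            raise TimeoutError("header deadline exceeded") from None
        if not chunk:
            raise ConnectionError("peer closed before headers were complete")
        buf += chunk
        if len(buf) > max_bytes:
            raise ValueError("header block too large")
    return buf


if __name__ == "__main__":
    server_side, client_side = socket.socketpair()
    client_side.sendall(b"G")  # a client that sends one byte and then stalls
    try:
        read_headers_with_deadline(server_side, deadline_s=0.5)
    except TimeoutError as exc:
        print("dropped slow client:", exc)
    finally:
        server_side.close()
        client_side.close()
```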

You're talking about different layers of the OSI model - you cannot compare them as one sits on top of the other. You are talking about writing a custom protocol - this then sits at the same layer as HTTP... on top of TCP !!

To some extent, this seems like a nonsensical comparison. TCP and HTTP live in different layers of the OSI model, and serve different purposes.

Asking which one is better is akin to asking "what is better - the tire, or the specific rubber composition that the tire is made out of?"

That said, TCP is just as debuggable as HTTP. Wireshark gives you everything you need to know to decode layers 2, 3, 4.

And using HTTP as a transport (basically a layer 3 protocol) is actually causing just as many problems as it solves. For one, it's a firewall concern - you can't just block a port to fend off a large share of attacks; you have to do far more CPU-intensive deep analysis to figure out what is or is not legitimate.

HTTP is also far more verbose, and thus bandwidth-consuming, than TCP.

That said, in this example, HTTP is actually the better choice. Applications communicating using an application-layer protocol? Nothing wrong with that!

This article is silly. It's a rehash of the same old "human readable is better than binary for a protocol" argument.

So yes a human readable protocol sitting on top of TCP (HTTP) is much easier to debug than some fictitious binary protocol.

And yes, it is usually easier to reuse someone else's already written and debugged code than 'rolling your own'.

Yawn...

How about using OData as the transport? That would offer developers a number of libraries in several languages with which to connect to the database. It uses HTTP as well, to boot.

Mike, And what would it offer us as the developers? Except additional complexity? How do you express "backup this database" in OData? How do you express "patch this set of documents" ?

I prefer to load my data on an external drive and Volkswagen bus it to the destination. Or FedEx it.

Only way to guarantee sufficient bandwidth.

I thought April first was four months ago. You guys are kidding, aren't you?

Are you guys serious? The old saying "When people suspect you don't know anything, don't confirm their suspicions by opening your mouth" comes to mind.

AP, I'm with you mate, at least this wasted 5min saved me from wasting more time on RavenDB, if this is how they make decisions ...

The irony, of course, is that HTTP is so limiting that various parties are hard at work trying to build a new version of it that will handle the modern web (e.g., SPDY), and the WHATWG has devised an entire new "lower level" protocol on top of HTTP to get around HTTP's stateless nature: Web Sockets.

If your traffic is sporadic and always client-initiated, then sure, HTTP is very debuggable compared to raw TCP. And if you want server-sent events, or lots of traffic that doesn't destroy your server with wasted overhead and zillions of created and destroyed TCP connections (which every HTTP request uses), then you use web sockets on top of HTTP and... get right back to where you were with raw TCP, but with a more complex setup process and no ability to differentiate at the network level between application traffic and web traffic.

Right tool for the job. Implementing SMTP over HTTP (had HTTP existed at the time), for instance, would be horribly slow and stupid, and all you'd get is another layer of indirection, since SMTP itself wouldn't be any less convoluted. It would just have an extra wrapper layer in it.

TCP is not evil. It's a particular level of abstraction, and a very useful one for what it's good for. Tunneling your application protocol through another application protocol (HTTP) has pros and cons, figure out which fit your use case and be happy. Sometimes it works.

Absolutism like this is just flame bait, but you forgot the most important part of getting visit-based ads on your site to generate revenue. :-)

Larry, SPDY is interesting, sure, but it is highly targeted at the web page scenario beyond anything else. Web Sockets are there to answer a drastically different problem set (TCP communication to firewalled sites).

RavenDB is a database server; it is pretty much ALWAYS responding to client requests. Traffic is anything but sporadic. I think you are missing the part about Keep-Alive, which resolves the issue of creating/destroying TCP connections.
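A self-contained way to see Keep-Alive at work, using only Python's standard library: a throwaway local server echoes back the client's address, and several requests made through one `http.client.HTTPConnection` all report the same client port, meaning they shared a single TCP connection. The handler and helper names are hypothetical; the two details that matter are `protocol_version = "HTTP/1.1"` and sending `Content-Length`, so the server can keep the connection open.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class EchoPeerHandler(BaseHTTPRequestHandler):
    # HTTP/1.1 makes persistent (keep-alive) connections the default.
    protocol_version = "HTTP/1.1"

    def do_GET(self):
        # Reply with the client's host:port; the same port means the same TCP connection.
        body = f"{self.client_address[0]}:{self.client_address[1]}".encode("ascii")
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))  # lets the connection stay open
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def fetch_peers(n_requests: int) -> list[str]:
    """Make n GET requests over one client connection; return what the server saw."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), EchoPeerHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_address[1])
        peers = []
        for _ in range(n_requests):
            conn.request("GET", "/")
            resp = conn.getresponse()
            peers.append(resp.read().decode("ascii"))  # read fully before reusing the socket
        conn.close()
        return peers
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    peers = fetch_peers(5)
    print("distinct TCP connections:", len(set(peers)))
```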

Server Sent Events are actually really easy to work with using HTTP. I created one in half a day's work. And it still contains all of the features that make HTTP great.
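Part of why Server-Sent Events are quick to build is that the wire format is just text over a long-lived HTTP response with `Content-Type: text/event-stream`: each message is a set of `field: value` lines (`id`, `event`, `data`) terminated by a blank line, and multi-line data is split across several `data:` lines. A minimal formatter sketch follows; the `DocumentChange` event name and the JSON payload are hypothetical examples, not the actual schema of RavenDB's Changes API.

```python
import json
from typing import Optional


def format_sse(data: str, event: Optional[str] = None,
               event_id: Optional[str] = None) -> str:
    """Serialize one Server-Sent Events message."""
    lines = []
    if event_id is not None:
        lines.append(f"id: {event_id}")      # lets the client resume via Last-Event-ID
    if event is not None:
        lines.append(f"event: {event}")      # named event type for the client to dispatch on
    for part in data.split("\n"):
        lines.append(f"data: {part}")        # one data line per line of payload
    return "\n".join(lines) + "\n\n"         # a blank line ends the message


if __name__ == "__main__":
    change = {"Id": "users/1", "Type": "Put"}  # hypothetical change notification
    print(format_sse(json.dumps(change), event="DocumentChange"), end="")
```

Because every message is readable text, the stream shows up in Fiddler like any other response.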

SMTP in particular is actually very well suited to HTTP transport layer, since it is all request / response.

POP3, in contrast, requires a stateful connection (to know when to RSET the read flags) and would be a PITA to build over HTTP.

Also, I don't think that the ads ever paid even the cost of hosting this site.

I'd like to introduce another discussion:

A starfighter aircraft versus aviation fuel - which is better?

An equally pointless discussion - I don't know why you all wasted your time on this.

I like your guts for moving to HTTP. HTTP is a proven communication mechanism, and everyone is learning to rely on it more for real-time communication. The statements about security seem a little overprotective to me, as real-time secure communications are already happening (think of all the cloud providers your DB sits on that communicate via REST). You won't have the option to restrict certain access per port, but that being said, if you had both a TCP and an HTTP option, what percentage of devs do you think would use HTTP over TCP? I would rather spend more time in Fiddler than in Wireshark, and more importantly, I can see a lot more utilities coming out for supporting HTTP that detail my operations more effectively. Cheers

"SMTP in particular is actually very well suited to HTTP transport layer, since it is all request / response."

Except that HTTP isn't a transport layer. It's an application layer. You're talking about layering another application layer on top of an application layer.

Which is fine. There's nothing inherently wrong with that, and the entire field of "web services" is based on doing exactly that (for mixed reasons of variable validity). It may be a good call in your case, I'm not sure.

My point is that "TCP bad, HTTP good" is a brain-dead over-simplification that ignores the actual architecture in place, the trade-offs involved in either direction, and a dozen other considerations that you are brushing aside. And like any such absolutist statement, if followed absolutely will backfire sooner rather than later and leave you with a broken and non-functional architecture.

My concern is with the ignorant devs who will read this post and think that they should always do everything over HTTP, and then code up something that destroys their company because they used the wrong tool for the job, because you just told them "TCP bad, HTTP good".

The reality of architectural trade-offs is always more complex than that.
