Implications of design decisions: Read Striping

When using RavenDB replication, you have the option to do something that is called “Read Striping”. Instead of using replication only for High Availability and Disaster Recovery, we can also spread our reads among all the replicating servers.

The question then becomes, how would you select which server to use for which read request?

The obvious answer is to do something like this:

Request Number % Count of Servers = current server index.

This is simple to understand, easy to implement and oh so horribly wrong in a subtle way that it is scary.

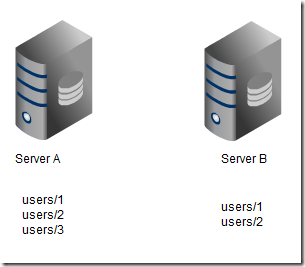

Let us look at the following scenario, shall we?

- Session #1 loads users/3 from Server A

- Session #1 then queries Server B (asking for non-stale results)

- The users/3 document hasn’t been replicated to Server B yet, so Server B sends back a reply: “here are my (non-stale) results”

- Session #1 assumes that users/3 must be in the results, since they are non stale, and blows up as a result.

We want to avoid such scenarios.

Werner Vogels, Amazon’s CTO, has the following consistency definitions:

- Causal consistency. If process A has communicated to process B that it has updated a data item, a subsequent access by process B will return the updated value and a write is guaranteed to supersede the earlier write. Access by process C that has no causal relationship to process A is subject to the normal eventual consistency rules.

- Read-your-writes consistency. This is an important model where process A after it has updated a data item always accesses the updated value and never will see an older value. This is a special case of the causal consistency model.

- Session consistency. This is a practical version of the previous model, where a process accesses the storage system in the context of a session. As long as the session exists, the system guarantees read-your-writes consistency. If the session terminates because of certain failure scenarios a new session needs to be created, and the guarantees do not overlap the sessions.

- Monotonic read consistency. If a process has seen a particular value for the object any subsequent accesses will never return any previous values.

- Monotonic write consistency. In this case the system guarantees to serialize the writes by the same process. Systems that do not guarantee this level of consistency are notoriously hard to program.

What is the consistency model offered by this?

Request Number % Count of Servers = current server index.

As it turns out, it pretty much resolves to “none”. Because each request may be redirected to a different server, there are no consistency guarantees that we can make. That can make reasoning about the system really hard.
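To make the failure concrete, here is a minimal sketch of per-request striping in Python. It is a toy simulation, not RavenDB's client code: the `RequestStripedClient` class, the `replicas` dictionary, and the document contents are all hypothetical stand-ins for two servers where users/3 has not yet been replicated from A to B.

```python
class RequestStripedClient:
    """Hypothetical client that picks a server per request (the naive formula)."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.request_number = 0

    def next_server(self):
        # Request Number % Count of Servers = current server index
        server = self.servers[self.request_number % len(self.servers)]
        self.request_number += 1
        return server


# Toy replicas (an assumption for illustration): users/3 exists on A,
# but replication to B has not happened yet.
replicas = {
    "A": {"users/3": {"name": "example"}},
    "B": {},
}

client = RequestStripedClient(["A", "B"])

# Request 0: the session loads users/3, and the request lands on Server A.
first_server = client.next_server()
loaded = replicas[first_server].get("users/3")

# Request 1: the same session runs a query, but this request lands on Server B.
second_server = client.next_server()
query_results = list(replicas[second_server].keys())

# The session has already seen users/3, yet the "non-stale" results omit it.
missing_from_query = loaded is not None and "users/3" not in query_results
```

In this sketch `missing_from_query` ends up true: two consecutive requests from the same session hit different servers, so the second one contradicts what the first one returned.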

Instead, RavenDB chose to go another route, and we use the following formula to calculate which server we will use.

Session Number % Count of Servers = current server index.

By using a session, rather than a request, counter to decide which server we will use when spreading reads, we ensure that all of the requests made within a single session are always going to the same server, ensuring we have session consistency. Within the scope of a single session, we can rely on that consistency, instead of the chaos of no consistency at all.
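The session-based formula can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than RavenDB's actual implementation: `StripedStore`, `Session`, and `open_session` are hypothetical names, and the session number is assumed to be a simple counter incremented each time a session is opened.

```python
import itertools


class Session:
    """Hypothetical session that is pinned to one server for its whole lifetime."""

    def __init__(self, server):
        self.server = server

    def read(self, key):
        # Every read in this session goes to the same server;
        # here we just report which server would handle it.
        return self.server


class StripedStore:
    """Hypothetical store that stripes reads per session, not per request."""

    def __init__(self, servers):
        self.servers = list(servers)
        self._session_counter = itertools.count()  # assumed: one increment per session

    def open_session(self):
        # Session Number % Count of Servers = current server index
        session_number = next(self._session_counter)
        return Session(self.servers[session_number % len(self.servers)])


store = StripedStore(["A", "B", "C"])

session1 = store.open_session()
session2 = store.open_session()

# All requests within one session hit the same server...
session1_servers = [session1.read("users/3"), session1.read("some query")]
# ...while different sessions are still spread across the servers.
session2_servers = [session2.read("users/3"), session2.read("some query")]
```

Reads are still spread across all the servers, but the unit of distribution is the session, so whatever a session has already seen on its server stays visible for the rest of that session.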

It is funny how such a small change can have such profound implications.

Comments

Simple solutions are always the best. Where does the session number come from? Is that similar to a connection ID? What about using a website to update/read data, i.e. could a load balancer affect your session number by spreading your access over two web servers?

Simon, the session id comes from a simple int32 that gets incremented on every session. It is not a connection id.

Writes always go to the master, and the way RavenDB works, you do all the reads up front, then do a single write.

But if you have a session per HTTP request, then the next request from the same user is quite likely to go to another server, isn't it? And maybe there will be no 'kaboom', but the user will be quite surprised when he doesn't see the changes he has just made...

The Session is an object that you create in code. It lives until you dispose of it. Therefore, you have control over the duration of your "consistency".

Rafal,

That would only be an issue if the user spent less time on the screen than it took for replication to occur. And even so, usually it isn't a big deal. We don't always see our comments on the blog post right after we make them.

"Systems that do not guarantee this level of consistency are notoriously hard to program."

I am sure that should say "Systems that guarantee this level of consistency are notoriously hard to program."?

OK, I read up a little on Monotonic writes and what the sentence means is that programming against systems without this guarantee is notoriously difficult.

Joao, not everyone is doing blogs ;) This 'session consistency' is a very weak guarantee for web applications when subsequent requests are very likely to be served from different replicas. Also, this approach requires bidirectional replication with very low latency, which will likely cause problems. 'User session' consistency would be a much better guarantee (the same database handling all requests from a user/web client). For example, some load balancers use the client IP address to direct that client's requests to the same web server.

Rafal, you have control over that as a user: you can decide whether to pass the same replication base whenever you are serving the same user.
