RavenDB, .NET memory management and variable size obese documents

We just got a support issue from a customer regarding out-of-control memory usage of RavenDB during indexing. That was very surprising, because a few months ago I spent a few extremely intense weeks building RavenDB's auto tuning support, precisely to make sure that this wouldn’t happen.

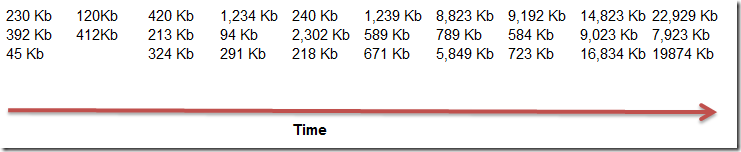

Luckily, the customer was able to provide us with a way to reproduce things locally. And that is where things get interesting. Here are a few fun facts. Looking at the timeline of the documents, it was something like this:

Note that the database actually had several hundred thousand documents. I am showing you this merely to give you some idea of the relative sizes.

As it turned out, this particular mix of document sizes over time is quite unhealthy for RavenDB during indexing. Why?

RavenDB has a batch size: the number of documents that will be indexed in a particular batch. This is used to balance throughput and latency in RavenDB. The bigger the batch, the higher the latency, but also the higher the throughput.

Along with the actual number of documents to index, we also need to balance things like CPU and memory usage. RavenDB assumes that the cost of processing a batch of documents is roughly proportional to the number of documents in it.

In other words, if we just used 1 GB to index 512 documents, we would probably use roughly 2 GB to index the next 1,024 documents. This is a perfectly reasonable assumption to make, but it hides another, implicit assumption: that documents are roughly the same size across the entire data set. This matters because otherwise, you get the following situation:

- Index 512 documents – 1 GB consumed, there are more docs, there is more than 2 GB of available memory, double batch size.

- Index 1,024 documents – 2.1 GB consumed, there are more docs, there is more than 4 GB of available memory, double batch size.

- Index 2,048 documents – 3 GB consumed, there are more docs, there is enough memory, double batch size.

- Index 4,096 documents – and here we get to the obese documents!
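To make the failure mode concrete, here is a small Python simulation of a count-based heuristic like the one described above. It is a hypothetical sketch, not RavenDB's actual implementation: `next_batch_size` and `simulate` are made-up names, each document's memory cost is modelled as a fixed number of MB, and available memory is assumed to stay constant.

```python
# Hypothetical sketch of a count-based batch-size heuristic (not RavenDB's code).
# Each document's memory cost is modelled as a plain number of MB.

def next_batch_size(batch_size, used_mb, available_mb):
    """Double the batch if twice the last batch's memory cost still fits."""
    # Assumes cost scales with document count only: 2x docs -> 2x memory.
    projected_mb = used_mb * 2
    if projected_mb < available_mb:
        return batch_size * 2
    # Only after a batch has already blown the budget do we back off.
    return max(batch_size // 2, 1)


def simulate(doc_sizes_mb, available_mb, start=512):
    """Index documents in order; return (docs in batch, MB used) per batch."""
    batch_size, pos, history = start, 0, []
    while pos < len(doc_sizes_mb):
        batch = doc_sizes_mb[pos:pos + batch_size]
        used_mb = sum(batch)
        history.append((len(batch), used_mb))
        pos += len(batch)
        batch_size = next_batch_size(batch_size, used_mb, available_mb)
    return history


if __name__ == "__main__":
    # 3,584 "normal" documents costing 2 MB each, then 4,096 obese ones at 20 MB.
    docs = [2] * 3584 + [20] * 4096
    for count, used in simulate(docs, available_mb=16384):
        print(f"{count:>5} docs -> {used:>6} MB")
```

With a 16 GB budget, the first three batches (512, 1,024 and 2,048 documents) use 1, 2 and 4 GB and each looks safe to double. The fourth batch of 4,096 documents lands on the obese ones and uses 81,920 MB, five times the budget, before the heuristic gets any chance to shrink the batch.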

By the time we get to the obese documents, we have already increased our batch size significantly, so we are actually trying to read a LOT of documents, and suddenly a lot of them are very big.

That caused RavenDB to try to consume more and more memory. Now, if it HAD enough memory to do so, it would detect that it is using too much memory and drop back. But the way this dataset is structured, by the time we get there, we are trying to load tens of thousands of documents, many of them in the multi-megabyte range.

This was pretty hard to fix, not because of the actual issue, but because just reproducing it was tough, since we had other issues just getting the data in. For example, if you tried to import this dataset with a batch size greater than 128, you would also get failures: several extremely large documents would happen to fall within a single batch, resulting in an error saving them to the database.

The end result of this issue is that we now take the actual physical size of documents into account in many more places inside RavenDB, and this error has been eradicated. We also have much nicer output for the smuggler tool.
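As an illustration of what taking physical size into account can mean for batching, here is a minimal Python sketch. The `batch_by_size` helper and its `max_count` / `max_bytes` parameters are hypothetical, not RavenDB's or the smuggler's actual API; it simply closes a batch when either the document count or the accumulated size would exceed its limit.

```python
# Hypothetical sketch: split documents into batches capped by both
# document count and total size (not RavenDB's actual code).

def batch_by_size(doc_sizes, max_count, max_bytes):
    """Return batches as lists of document indexes."""
    batches, current, current_bytes = [], [], 0
    for i, size in enumerate(doc_sizes):
        # Close the current batch if adding this document would exceed
        # the count limit or the size budget.
        if current and (len(current) >= max_count
                        or current_bytes + size > max_bytes):
            batches.append(current)
            current, current_bytes = [], 0
        # A document larger than max_bytes still gets a batch of its own,
        # so the loop always makes progress.
        current.append(i)
        current_bytes += size
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    # Sizes in MB: a few small documents, then two obese ones.
    sizes = [1, 1, 1, 50, 50, 1]
    print(batch_by_size(sizes, max_count=128, max_bytes=60))
```

With a 60 MB budget, the two 50 MB documents end up in different batches even though the count limit of 128 alone would have put all six documents together. The count limit stays as a second guard for the case of very many tiny documents.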


On a somewhat related note: RavenDB and obese documents.

RavenDB doesn’t actually have a maximum document size. In contrast to other document databases, which have a hard limit of 8 or 16 MB, you can have a document as big as you want*. That doesn’t mean you should work with obese documents. Documents that are multiple megabytes in size tend to be… awkward to work with, and they generally don’t respect the most important aspect of document modeling in RavenDB: follow the transaction boundary. What does it mean that obese documents are awkward to work with?

Just that: it is awkward. Serialization time is proportional to the document size, as is retrieval time from the server, and of course, the actual memory usage on both server and client is affected by the size of the documents. It is often easier to work with many smaller documents than with a few obese ones.

* Well, to be truthful, we do have a hard limit, it is somewhere just short of the 2 GB mark, but we don’t consider this realistic.

Comments

In what build of RavenDB was this fix made?

Patrik, 2030

2030? Isn’t 960 the last one? https://github.com/ravendb/ravendb/tree/build-960

Andres, That is an unstable build that we are working on for 1.2

Oh, and what happens to conservative people who prefer stable versions? Is it still broken? I have problems with smuggler too...

Andres, this affects only the heuristics. For the stable version, you need to configure the batch size to a low value.
