Geo Location & Spatial Searches with RavenDB–Part I–Setup

One of the things that we are putting into the new version of the RavenDB Website is some smarts about courses. We want to be able to figure out where a visitor is coming from, and show him relevant courses near him.

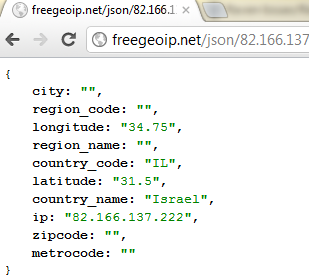

This is a fairly standard feature, I guess. But I think we solved this in an interesting fashion. First, we had to figure out how to know where a visitor is coming from. This is done via geo location. There are plenty of geo location API, such as:

The problem is that a service like that is limited to ~1000 queries per hour, and we didn’t want that. Other option include paid services, again, with either hard limits on the number of queries per hour or an open credit line. Neither option was very interesting to us.

Instead, we thought we would try something out. Max Mind is offering a database that can be used for geo location purposes. They have two options, a free database and a paid option. I took the free version for a spin.

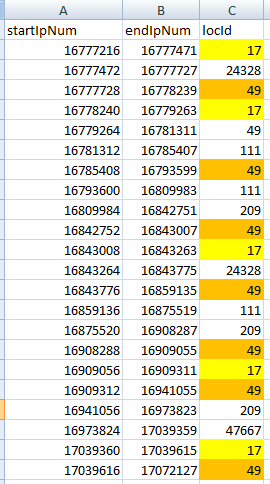

Here is the GoeLiteCity-Blocks information:

Note that we have repeated rows per location, which allows us to map multiple ranges for each location.

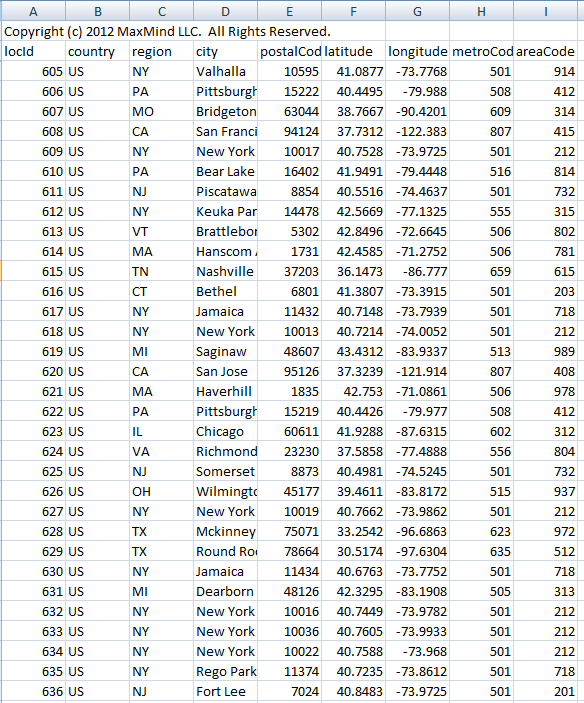

And finally, we have GoLiteCity-Location, which map a location id to an actual location:

Anyone who used a relational database should be familiar with the data format, right?

I found it really interesting that MaxMind state on their site:

Note that queries made against the CSV data imported into a SQL database can take up to a few seconds. If performance is an issue, the binary format is much faster, and can handle thousands of lookups per second.

They actually have a custom binary format to allow faster queries, but I think that I can do something about this. In the next post in this series, I’ll talk about how to import this data into RavenDB and the model concerns that we have with it.

Comments

Before you start showing a RavenDB solution, could you explain what exactly is the problem? - what queries do you need to run - which queries can take up to few seconds? - sql databases usually store the data in binary format, so how is their custom binary format different/better? - what about indexing the data in SQL database? - its only 168 k rows - could you elaborate on how to make queries so slow?

Rafal, I want to locate a location based on ip range. I don't know what sort of perf issues they had with RDBMS, I am just reporting exactly what they had there.

Heh interesting :)

I did something like this in 2009 : )

IPAddressExtensions - extending the System.Net.IPAddress class http://ipaddressextensions.codeplex.com/

eg. using System.Net; using WorldDomination.Net;

string userHostIpAddress = "203.1.2.3"; IPAddress ipAddress; if (IPAddress.TryParse(userHostIpAddress, out ipAddress)) { string country = ipAddress.Country(); // return value: UNITED STATES string iso3166TwoLetterCode = ipAddress.Iso3166TwoLetterCode(); // return value: US }

I grabbed a csv file similar to what you did Ayende. Embedded it into the dll and off you go :)

This was a cheap way to find the county of a person who hits the website without having to rely on 3rd party api's.

Of course, the data is now old (that was 2009!). But i did make it extensible so instead of using the embedded zipped file, u can provide your own (if it's in the correct csv format).

@Rafal: My usage was to redirect or display a website, for the country the connecting person, is guessed to be in (via their connecting IP).

:)

Caveat: this was back in 2009 .. where i sucked even more at coding than I do now .. so please be kind @ the nasty code. Even i'm scared to look at it :P

the problem with the data imported into mysql, it's that if you use the suggested query you must make a query over 2 fields with 2 open ranges, and that it's expensive.

You could optimize the query as said in http://odkq.com/geolitecity or even or even using mysql spatial indexes (rtrees) as said in http://blog.jcole.us/2007/11/24/on-efficiently-geo-referencing-ips-with-maxmind-geoip-and-mysql-gis/ I suppose that the ayende solution it's very similar, taking in acount that they added spatial support to lucene.net

Why the binnary format is faster? because when you optimize just for a one kind of query, you can optimize the format for being efficient for just that. Maxmind have a open source c# client in their website, so if you are curius you cand download the code and check the specifics.

For IP lookup - a clustered index on startIpNum and pick first row greater than or equal to visitor ip...

@Rafal: Simply putting an index on both of the two columns start and end will not help. You have to query it this way: SELECT ... FROM .. WHERE MyIP >= Start AND MyIP <= END... The server then has a resultset for the first condition and a resultset for the second and has to merge it. In the worst situation both resultsets have the size NumRows / 2 which have to be compared.... An article in the current msdn-magazin covers exactly this problem: http://msdn.microsoft.com/en-us/magazine/jj133823.aspx

One solution: use a database with native support for spatial data types and spatial indexes... like SQL08, Oracle, MySQL, SQLLite, etc.

I wouldn't be surprised if someone has a script to map the geo script into native data types.

@TomCollins Yes, I thougth about that and about an algorithm that would make the search faster, but failed to find something that would be faster/more efficient using Lucene instead of SQL db. Hopefully Ayende will show us some clever tricks...

IP addresses are allocated in blocks, so an obvious optimization would be to index based on the first two octets. One /16 might be split among several ISPs, I grant, and some ranges will be split across multiple /16s, so you'll need to split them.

Not as simple and clever as Ajai's solution, though.

You may have missed the C# library they already have (that leverage's the binary format).

See http://www.maxmind.com/app/csharp

@Rafal see http://www.mhaller.de/archives/156-Spatial-search-with-Lucene.html for 1 example of how to do this more efficiently with Lucene

Comment preview