I spent 4 hours talking about the different No SQL approaches, you can find the video here.

Column family databases are probably most known because of Google’s BigTable implementation. The are very similar on the surface to relational database, but they are actually quite different beast. Some of the difference is storing data by rows (relational) vs. storing data by columns (column family databases). But a lot of the difference is conceptual in nature. You can’t apply the same sort of solutions that you used in a relational form to a column database.

That is because column databases are not relational, for that matter, they don’t even have what a RDBMS advocate would recognize as tables.

Nitpicker corner: this post is about the concept, I am going to ignore actual implementation details where they don’t illustrate the actual concepts.

Note: If you want more information, I highly recommend this post, explaining about data modeling in a column database.

The following concepts are critical to understand how column databases work:

- Column family

- Super columns

- Column

Columns and super columns in a column database are spare, meaning that they take exactly 0 bytes if they don’t have a value in them. Column families are the nearest thing that we have for a table, since they are about the only thing that you need to define upfront. Unlike a table, however, the only thing that you define in a column family is the name and the key sort options (there is no schema).

Personally, I think that column family databases are probably the best proof of leaky abstractions. Just about everything in CFDB (as I’ll call them from now on) is based around the idea of exposing the actual physical model to the users so they can make efficient use of that.

- Column families – A column family is how the data is stored on the disk. All the data in a single column family will sit in the same file (actually, set of files, but that is close enough). A column family can contain super columns or columns.

- A super column is a dictionary, it is a column that contains other columns (but not other super columns).

- A column is a tuple of name, value and timestamp (I’ll ignore the timestamp and treat it as a key/value pair from now on).

It is important to understand that when schema design in a CFDB is of outmost importance, if you don’t build your schema right, you literally can’t get the data out. CFDB usually offer one of two forms of queries, by key or by key range. This make sense, since a CFDB is meant to be distributed, and the key determine where the actual physical data would be located. This is because the data is stored based on the sort order of the column family, and you have no real way of changing the sorting (except choosing between ascending or descending).

The sort order, unlike in a relational database, isn’t affected by the columns values, but by the column names.

Let assume that in the Users column family, in the row “@ayende”, we have the column “name” set to “Ayende Rahine” and the column “location” set to “Israel”. The CFDB will physically sort them like this in the Users column family file:

@ayende/location = “Israel” @ayende/name = “Ayende Rahien”

This is because the sort “location” is lower than “name”. If we had a super column involved, for example, in the Friends column family, and the user “@ayende” had two friends, they would be physically stored like this in the Friends column family file:

@ayende/friends/arava= 945 @ayende/friends/rose = 14

Remember that, this property is quite important to understanding how things work in a CFDB. Let us imagine the twitter model, as our example. We need to store: users and tweets. We define three column families:

- Users – sorted by UTF8

- Tweets – sorted by Sequential Guid

- UsersTweets – super column family, sorted by Sequential Guid

Let us create the user (a note about the notation: I am using named parameters to denote column’s name & value here. The key parameter is the row key, and the column family is Users):

cfdb.Users.Insert(key: “@ayende”, name: “Ayende Rahine”, location: “Israel”, profession: “Wizard”);

You can see a visualization of how below. Note that this doesn’t look at all like how we would typically visualize a row in a relational database.

Now let us create a tweet:

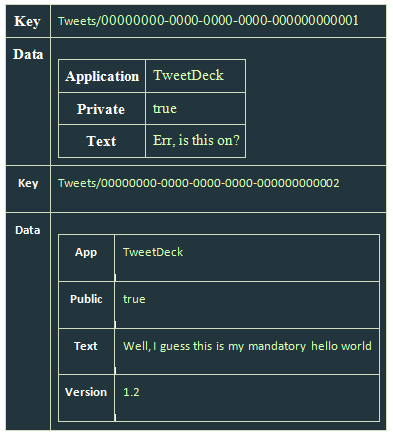

var firstTweetKey = “Tweets/” + SequentialGuid.Create(); cfdb.Tweets.Insert(key: firstTweetKey, application: “TweekDeck”, text: “Err, is this on?”, private: true); var secondTweetKey = “Tweets/” + SequentialGuid.Create(); cfdb.Tweets.Insert(key: secondTweetKey, app: “Twhirl”, version: “1.2”, text: “Well, I guess this is my mandatory hello world”, public: true);

And here is how it actually looks:

There are several things to notice here:

- In this case, the key doesn’t matter, but it does matter that it is sequential, because that will allow us to sort of it later.

- Both rows have different data columns on them.

- We don’t actually have any way to associate a user to a tweet.

That last bears some talking about. In a relational database, we would define a column called UserId, and that would give us the ability to link back to the user. Moreover, a relational will allow us to query the tweets by the user id, letting us get the user’s tweets. A CFDB doesn’t give us this option, there is no way to query by column value. For that matter, there is no way to query by column (which is a familiar trick if you are using something like Lucene).

Instead, the only thing that a CFDB gives us is a query by key. In order to answer that question, we need the UsersTweets column family:

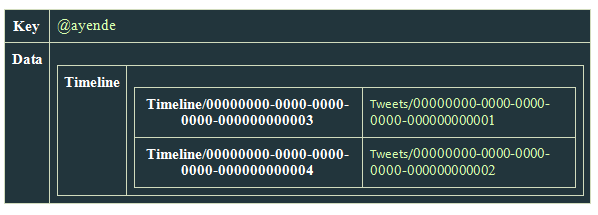

cfdb.UsersTweets.Insert(key: “@ayende”, timeline: { SequentialGuid.Create(): firstTweetKey } );

cfdb.UsersTweets.Insert(key: “@ayende”, timeline: { SequentialGuid.Create(): secondTweetKey } );

On the CFDB, it looks like this:

And now we need more explanation about the notation. Here we insert into the UsersTweets column family, to the row with the key: “@ayende”, to the super column timeline two columns, the name of each column is a sequential guid, which means that we can sort by it. What this actually does is create a single row with a single super column, holding two columns, where each column name is a guid, and the value of each column is the key of a row in the Tweets table.

Question: Couldn’t we create a super column in the Users’ column family to store the relationship? Well, yes, we could, but a column family can contain either columns or super columns, it cannot contain both.

Now, in order to get tweets for a user, we need to execute:

var tweetIds =

cfdb.UsersTweets.Get(“@ayende”)

.Fetch(“timeline”)

.Take(25)

.OrderByDescending()

.Select(x=>x.Value);

var tweets = cfdb.Tweets.Get(tweetIds);

In essence, we execute two queries, one on the UsersTweets column family, requesting the columns & values in the “timeline” super column in the row keyed “@ayende”, then execute another query against the Tweets column family to get the actual tweets.

Because the data is sorted by the column name, and because we choose to sort in descending order, we get the last 25 tweets for this user.

What would happen if I wanted to show the last 25 tweets overall (for the public timeline)? Well, that is actually very easy, all I need to do is to query the Tweets column family for tweets, ordering them by descending key order.

Nitpicker corner: No, there is not such API for a CFDB for .NET that I know of, I made it up so it would be easier to discuss the topic.

Why is a CFDB so limiting?

You might have noticed how many times I noted differences between RDBMS and a CFDB. I think that it is the CFDB that is the hardest to understand, since it is so close, on the surface to the relational model. But it seems to suffer from so many limitations. No joins, no real querying capability (except by primary key), nothing like the richness that we get from a relational database. Hell, Sqlite or Access gives me more than that. Why is it so limited?

The answer is quite simple. A CFDB is designed to run on a large number of machines, and store huge amount of information. You literally cannot store that amount of data in a relational database, and even multi-machine relational databases, such as Oracle RAC will fall over and die very rapidly on the size of data and queries that a typical CFDB is handling easily.

Do you remember that I noted that CFDB is really all about removing abstractions? CFDB is what happens when you take a database, strip everything away that make it hard to run in on a cluster and see what happens.

The reason that CFDB don’t provide joins is that joins require you to be able to scan the entire data set. That requires either someplace that has a view of the whole database (resulting in a bottleneck and a single point of failure) or actually executing a query over all machines in the cluster. Since that number can be pretty high, we want to avoid that.

CFDB don’t provide a way to query by column or value because that would necessitate either an index of the entire data set (or just in a single column family) which in again, not practical, or running the query on all machines, which is not possible. By limiting queries to just by key, CFDB ensure that they know exactly what node a query can run on. It means that each query is running on a small set of data, making them much cheaper.

It requires a drastically different mode of thinking, and while I don’t have practical experience with CFDB, I would imagine that migrations using them are… unpleasant affairs, but they are one of the ways to get really high scalability out of your data storage.

Waiting expectantly to the commenters who would say that relational databases are the BOMB and that I have no idea what I am talking about and that I should read Codd and that no one really need to use this sort of stuff except maybe Google and even then only because Google has no idea how RDBMS work (except maybe the team that worked on AdWords).

During the course of this series, I got a lot of people declaring: “But you can do that with RDMBS if you use XYZ”. The problem inherit in this statement is that it ignore the fact that if you really want to, you can use a hammer to put screws, it isn’t nice or efficient, but you can do it.

I run across this Dare Obasanjo’s post that explains the actual problem in beautiful terms:

What tends to happen once you’ve built a partitioned/sharded SQL database architecture is that you tend to notice that you’ve given up most of the features of an ACID relational database. You give up the advantages of the relationships by eschewing foreign keys, triggers and joins since these are prohibitively expensive to run across multiple databases. Denormalizing the data means that you give up on Atomicity, Consistency and Isolation when updating or retrieving results. And the end all you have left is that your data is Durable (i.e. it is persistently stored) which isn’t much better than you get from a dumb file system. Well, actually you also get to use SQL as your programming model which is nicer than performing direct file I/O operations.

It is unsurprising that after being at this point for years, some people in our industry have wondered whether it doesn’t make more sense to use data stores that are optimized for the usage patterns of large scale websites instead of gloriously misusing relational databases. A good example of the tradeoffs is the blog post from the Digg team on why they switched to Cassandra. The database was already sharded which made performing joins to calculate results of queries such as “which of my friends Dugg this item?” to be infeasible. So instead they had to perform two reads from SQL (all Diggs on an item and all of the user’s friends) then perform the intersection operation on the PHP front end code.

I can’t use most of the relational database traditional advantages anyway. Not the moment that I step out of a single machine boundary. At that point, I have to re-evaluate what I am doing to see if it make sense to have to deal with the traditional relation database disadvantages. In many cases, the answer is no, and a solution that fit the problem better can be found.

This is where the NoSQL databases started. I think that once they gotten mature enough, they have advantages for smaller scale solutions, but that is a problem for another day.

Yesterday I talked about graph databases, outlining what they are and how they work. One of the interesting things about this series is that in many cases, I am posing a question (to myself), trying to answer it, then go and find out what other people do.

When thinking about scaling scenarios for a graph database, I had the following scenario in mind, a graph of nodes that is spread across multiple servers, where each member in the graph may reside on any machine in the system. The following diagram demonstrate what I am thinking about, each rectangle represent a different machine in the system:

Why is this important?

A single machine solution is obviously a barrier to scaling (and safety, but that is another concern. In a graph database, having relations between the node is the point, that makes sharding a bit more complicated, because unless you store the entire graph on a single machine, you are forced to query across machine boundaries. And you can’t store a graph in a single machine, for the simple reason that it is unlikely that you can limit a graph to be that small. Think about the implications of Six Degrees of Separation for graph databases and it will be clear what the problem is. In real world graphs, everyone is connected to everyone.

The problem with breaking the entire graph across machines is that now it is much more expensive to do graph traversals. The following query, which previous run on a single machine:

new GraphDatabaseQuery { SourceNode = ayende, MaxDepth = 3, RelationsToFollow = new[]{"As Known As", "Family", "Friend", "Romantic", "Ex"}, Where = node => node.Location == ayende.Location, SearchOrder = SearchOrder.BreadthFirst }.Execute();

Now need to touch 3 different machines. Worse, it isn’t the number of machines that impacts that, but the spread of graph nodes across machines in the system.

After spending some time thinking about it, I came to the conclusion that I can’t envision any general way to solve the problem. Oh, I can think of several ways of reduce the problem:

- Batching cross machine queries so we only perform them at the close of each breadth first step.

- Storing multiple levels of associations (So “users/ayende” would store its relations but also “users/ayende”’s relation and “users/arik”’s relations).

The solution most likely to be successful is limiting the depth of cross machine node searches. In many cases, that is acceptable, I think. If we put the depth limit on 3, we can still give pretty good answers in a reasonable time frame. But the only way this can be made to work is with good batching support.

The algorithm may look like:

public IEnumerable<Node> DistributedTraverse(Node sourceNode, int depth, string relationToFollow, Func<Node, filter> predicate) { if(depth == 0) // feeling defensive yield break; var related = GetRelatedNodes(sourceNode.ShardName, relationToFollow, predicate); foreach(var result in related) yield return result; if(depth == 1) // don't even bother asking down the line { yield break; } foreach(var relationsByShard in related.GroupBy(x=>x.ShardName)) { var shard = GetShardProxy(relationsByShard.Key); var results = shard.BatchedQuery(sourceNodes: relationsByShard.ToArray(), depth - 1,relationToFollow, predicate); foreach(var result in results) yield return result; } }

This give us a maximum amount of (depth * number_of_machines_in_cluster) – depth remote calls: With a depth of 3 and 3 machines in the cluster, we would have a max of 6 calls.

With that theory out of our heads, let us examine how real world Graph DBs tried to resolve this issue…

Neo4j (which seems to be pretty much the default for Graph DBs) doesn’t handle this currently, there are some hints that they intend to offer cluster wide replication, but nothing about design or implementation details. Neo4j does offer write-master/read-slaves approach for scaling out, which is really nice, but even that approach is limited at one point, and in this post, I am focusing on what happen when you go beyond that point.

FlockDB (which is what is used by twitter) does include, as part of its design goals: “horizontal scaling including replication”. However, FlockDB isn’t trying to solve the problem outlined above, indeed, graph traversal is a non goal for it. FlockDB is more about finding one level of relations very fast than anything else.

In summary, I believe that while you can shard a graph database, it place a very lot limit on the type of graph walking queries you can make. Now, just to give you an idea, Neo4j, for example, appears to be able to routinely handle billions on nodes on a single machines, so you might no need to scale higher than that..

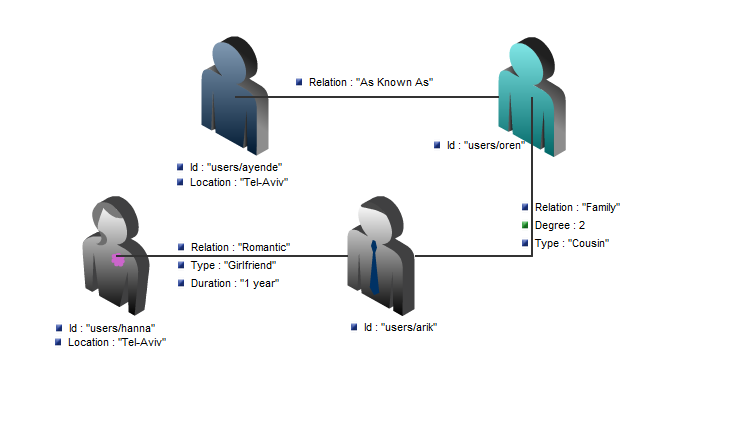

After a short break, let us continue the discussion. Think about a graph database as a document database, with a special type of documents, relations. A simple example is a social network:

There are four documents and three relations in this example. Relations in a graph database are more than just a pointer. A relation can be unidirectional or bidirectional, but more importantly, a relation is typed, I may be associated to you in several ways, you may be a client, family or my alter ego. And the relation itself can carry information. In the case of the relation document in the example above, we simply record the type of the association.

And that is about it, mostly. Once you figured out that graph database can be seen as document databases with a special document type, you are pretty much done.

Except that graph database has one additional quality that make them very useful. They allow you to perform graph operations. The most basic graph operation is traversal. For example, let us say that I want to know who of my friends is in town so I can go and have a drink. That is pretty easy to do, right? But what about indirect friends? Using a graph database, I can define the following query:

new GraphDatabaseQuery { SourceNode = ayende, MaxDepth = 3, RelationsToFollow = new[]{"As Known As", "Family", "Friend", "Romantic", "Ex"}, Where = node => node.Location == ayende.Location, SearchOrder = SearchOrder.BreadthFirst }.Execute();

I can execute more complex queries, filtering on the relation properties, considering weights, etc.

Graph databases are commonly used to solve network problems. In fact, most social networking sites use some form of a graph database to do things like “You might know…”.

Because graph databases are intentionally design to make sure that graph traversal is cheap, they also provide other operations that tend to be very expensive without it. For example, Shortest Path between two nodes. That turn out to be frequently useful when you want to do things like: “Who can recommend me to this company’s CTO so they would hire me”.

One of the things that I get ask frequently about No SQL is how to handle migrations. First, I want to clarify something very important:

Migrations sucks.

It doesn’t matter what you are doing, the moment that you need to be forward / backward compatible, or just update existing data to a new format, you are going to run into a lot of unpleasant situations. Chief of which is that what you thought was true about your data isn’t, and probably never was.

But leaving that aside, in the RDBMS world, we have three major steps when we migrate from one database version to another, non destructive update to the schema (add columns / tables), update the data (or move it to its new location), destructive schema update (delete columns / tables, introduce indexes, etc). With a document database, the situation is much the same, while we don’t have a mandatory schema, we do have the data in a certain shape.

I am going to use three examples, which hopefully demonstrate enough about migrations to give you the basic idea.

- Renaming a column – Posts.Text should be renamed to Posts.Content

- Update column value – Update tax rate in all orders after 2010-01-01

- Change many-to-one association to many-to-many association – Posts.Category to Posts Categories

Renaming a column

Using SQL, we can do this using:

-- non destructive update alter table Posts add Content nvarchar(max) null -- update the data update Posts set Content = Text -- destructive schema update alter table Posts drop column Text alter table Posts alter column Content nvarchar(max) not null

Using a Document Database, we run the following Linq query on the server (this is effectively an update):

from doc in docs where doc.type == "post" select new { type = doc.type, content = doc.text, // rename a column posted_at = doc.posted_at }

Update column value

In SQL, it is pretty easy:

-- update the data update Orders set Tax = 0.16 where OrderDate > '2010-01-01'

And using an update Linq query:

from doc in docs where doc.type == "order" && doc.order_date > new DateTime(2010,1,1) select new { tax = 0.16, order_date = doc.order_date, order_lines = doc.order_lines }

Change many-to-one association to many-to-many association

Using SQL, it is pretty complex (especially when you add the FKs):

create table CategoriesPosts (PostId int, CategoryId int) insert into CategoriesPosts (PostId, CategoryId) select Id, CategoryId from Posts alter table Posts drop column CategoryId

And using a doc db:

from doc in docs where doc.type == "post" select new { type = doc.type, content = doc.content, posted_at = doc.posted_at, categories = new [] { doc.category } }

It is pretty much the same pattern over and over again :-)

So, after telling you the wrong way to go about it, I intend to show the right way to design the data model using a document database. So let’s be about it.

As a reminder, those are the scenarios that I want to deal with:

- Main page: show list of blogs

- Main page: show list of recent posts

- Main page: show list of recent comments

- Main page: show tag cloud for posts

- Main page: show categories

- Post page: show post and all comments

- Post page: add comment to post

- Tag page: show all posts for tag

- Categories page: show all posts for category

And here is a sample data that we are going to work with (again, using C# because any other representation pretty much dictate the storage format).

var user = new User("ayende"); var blog = new Blog("Ayende @ Rahien", user) { Tags = {".NET", "Architecture", "Databases" } }; var categoryRaven = new Category("Raven"); var categoryNoSQL = new Category("NoSQL"); var post = new Post(blog, "RavenDB", "... content ...") { Categories = { categoryRaven, categoryNoSQL }, Tags = {"RavenDB", "Announcements" } }; var comment = new Comment(post, "Great news"); PersistAll(user, blog, categoryRaven, categoryNoSQL, post, comment);

When approaching a document database model design, I like to think in aggregates. What entities in the model above are aggregates? User and Blog, obviously, and Post as well. Each of those has a right to exist in an independent manner. But nothing else has a right to exist on its own. Tags are obviously Value Objects, and the only usage I can see for categories is to use them for indexing the data. Comments, as well, aren’t really meaningful outside their post. Given that decision, I decided on the following format:

That gives me a much smaller model, and it make things like pulling a post and its comments (a very common operation) extremely cheap. Let us see how we can handle each scenario using this model…

Main page: show list of blogs

This is simple, using a commonly used index:

var blogs = docDb.Query<Blog>("DocumentsByType", "type:blog");

Main page: show list of recent posts

var posts = docDb.Query<Post>("PostsByTime", orderBy:"-posted_at");

This is using an index for posts by time:

from doc in docs where doc.type == "post" select new {doc.posted_at}

Main page: show list of recent comments

This is more interesting, because we don’t have the concept of comments as a separate thing. We handle this using an index that extract the values from the post document:

from doc in docs where doc.type == "post" from comment in doc.comments select new {comment.posted_at, comment.content }

And the query itself:

var recentComments = docDb.Query<Comment>("CommentsByTime", orderBy:"-posted_at");

Main page: show tag cloud for posts

And now, we have another interesting challenge, we need to do aggregation on top of the tags. This is done using Map/Reduce operation. It sounds frightening, but it is pretty easy, just two linq queries:

from doc in docs where doc.type == "post" select tag in doc.tags select new { tag, count = 1} from result in results group result by result.tag into g select new {tag = g.Key, count = g.Sum(x=>x.count) }

And given that, we can now execute a simple query on top of that:

var tagCloud = docDb.Query<TagAndCount>("TagCloud");

Main page: show categories

This is pretty much the same thing as tag cloud, because we want to extract the categories from the posts, and get the number of posts in each category.

from doc in docs where doc.type == "post" select category in doc.categories select new { category, count = 1} from result in results group result by result.category into g select new {category= g.Key, count = g.Sum(x=>x.count) }

And given that, we can now execute a simple query on top of that:

var categories = docDb.Query<CategoryAndCount>("CategoriesCount");

Post page: show post and all comments

Here we see how easy it is to manage object graphs, all we need to do is pull the post document, and we get everything we need to render the post page:

var post = docDb.Get<Post>("posts/1");

Post page: add comment to post

Remember, a comment cannot live outside a post, so adding a comment involves getting the post, adding the comment and saving:

var post = docDb.Get<Post>("posts/1"); post.Comments.Add(new Comment(...)); docDb.SaveChanges();

Note that this uses the Unit of Work pattern, the Doc DB keep track of the post entity and will persist it to the database when SaveChanges() is called.

Tag page: show all posts for tag

Again, we have a two step approach, define the index, and then query on it. It is pretty simple:

from doc in docs where doc.type == "post" from tag in doc.tags select new { tag }

And the query itself is:

var posts = docDb.Query<Post>("PostsByTag", "tag:Raven");

Categories page: show all posts for category

This is the same as all posts for tag, so I’ll skip it.

There are a few things to notice about this approach.

- A good modeling technique is to think about Aggregates in the DDD sense, an Aggregate and all its associations are stored as a single document.

- If we need to query on an entity encapsulated in an Aggregate, we can extract that information using an index. That extraction, however, doesn’t change the way that way that we access the data.

- We can create “virtual entities”, such as Tags or Categories, which doesn’t really exists, but are generated by the indexes we define. Those are usually things that are only useful for users to search / organize the aggregates on.

- Schema doesn’t really matter, because we either search by key, or on well known indexes, which massage the data to the format that we expect it to be.

Thoughts?

I am going to demonstrate the design of the data model in a document database for a typical blog application.

The following is my default sample data model, showing a very simple blog:

The absolutely wrong approach with a document database is to try to take the relational model and apply it on a document level. This is especially wrong because for a while, it might actually work. Let us say that we want to store the following:

var user = new User("ayende"); var blog = new Blog("Ayende @ Rahien", user) { Tags = {".NET", "Architecture", "Databases" } }; var categoryRaven = new Category("Raven"); var categoryNoSQL = new Category("NoSQL"); var post = new Post(blog, "RavenDB", "... content ...") { Categories = { categoryRaven, categoryNoSQL }, Tags = {"RavenDB", "Announcements" } }; var comment = new Comment(post, "Great news"); PersistAll(user, blog, categoryRaven, categoryNoSQL, post, comment);

Interestingly enough, I need to use code to represent the data without tying it to a particular storage format.

The wrong approach to store the data would be to store each object as its own document, similar to the way we would store each object as its own row in a relational database. That wrong approach would look like this:

I know that I am repeating myself here, but I have seen people miss the point before. Do NOT try to model a document database in this way.

The main reason that this is wrong is that a document database has no real support for doing joins, unions or any of the things that make such a model work effectively in a relational model.

The main reason that this is wrong is that a document database has no real support for doing joins, unions or any of the things that make such a model work effectively in a relational model.

Let us try to analyze the scenarios where we need this data, okay?

- Main page: show list of blogs

- Main page: show list of recent posts

- Main page: show list of recent comments

- Main page: show tag cloud for posts

- Main page: show categories

- Post page: show post and all comments

- Post page: add comment to post

- Tag page: show all posts for tag

- Categories page: show all posts for category

I am going to analyze each of those scenarios using SQL (and the above model) and the current (and bad, smelly, nasty) document database model. I’ll have another post showing how to correctly model this in a document database, this post is about how not to do it.

Main page: show list of blogs

Using SQL, this is pretty easy:

select * from blogs

Using DocDB, this is easy, we are using a built-in index to query for documents by their type:

docDb.Query<Blog>("DocumentsByType", query:"type:blog");

Main page: show list of recent posts

Using SQL, this is pretty easy:

select * from posts order by PostedAt desc

Using DocDB, we need to define our own index function, to allow use to sort on it. That is painless, even if I say so myself:

from doc in docs where doc.type == "post" select new {doc.posted_at}

And now we can query this using:

docDb.Query<Post>("Posts", orderBy:"-posted_at");

Main page: show list of recent comments

This is exactly the same as recent posts, so I’ll skip it.

Main page: show tag cloud for posts

Here the SQL grows interesting:

select Name, COUNT(*) as TagCount from tags where ItemType = 'Posts' group by Name

And with the document database we need to write a map/reduce index (“Did you just told me to go @%$# myself?”)

from doc in docs where doc.type == "posts" from tag in doc.tags select new { tag, count = 1 } from result in results group result by result.tag into g select new { tag = g.Key, count = g.Sum(x=>x.count) }

And now that we have the index, we can get the values from it using:

var tagCloud = new TagCloud(); var tagIds = docDb.Query<TagAndCount>("TagsCloud", orderBy:"+count"); foreach(var tagId in tagIds) { var tag = docDb.Get<Tag>(tagId.Tag); tagCloud.Add(tag.Name, tagId.Count); }

Now this is ugly on many levels. First, we have the fairly complex index. Second, we have to merge the data ourselves at the client side. Third, we have to perform a SELECT N+1.

Now this is ugly on many levels. First, we have the fairly complex index. Second, we have to merge the data ourselves at the client side. Third, we have to perform a SELECT N+1.

Yuck doesn’t being to cover it. There are actually ways to handle this more nicely, by making a multi get request, but I’ll not bother.

Main page: show categories

Exactly the same as show blogs, so I’ll skip it.

Post page: show post and all comments

Using stupid SQL:

select * from Posts where Id = 1 select * from Comments where PostId = 1

A more efficient method would be to use a join:

select * from Posts join Comments on Posts.Id = Comments.Id where Posts.Id = 1

With the doc db, we can do:

var post = docDb.Get<Post>(1); var comments = docDb.Query<Comment>("CommentsByPost", query:"post_id:1", orderBy:"+posted_at");

Which, of course, require us to define the comments by post index:

from doc in docs where doc.type == "comment" select new{doc.post_id, doc.posted_at}

Note that we have to make two calls here, because a document database has no notion of joins.

Post page: add comment to post

In SQL, it is a straightforward insert:

insert into comments (PostId, ... ) values(1, ...)

And with a document database, you can use:

docDb.Store(new Comment{ PostId = 1, ... });

docDb.SaveChanges();

Nothing much to look at here, using this flawed model.

Tag page: show all posts for tag

Tag page: show all posts for tag

Using sql, that is slightly complex, because tags may be associated with blogs or with posts, so we need to do:

select * from Posts where Id in ( select ItemId from tags where ItemType = 'Posts' and TagId = 1 )

Using a document database:

var posts = docDb.Query<Post>("PostsByTag", query:"tag:tags/1");

With the following index:

from doc in docs where doc.type == "post" from tag in doc.tags select new {tag}

Categories page: show all posts for category

This is exactly like tags, so I’ll skip it.

As you’ve seen, by copying the relational model, we have created just the same sort of environment that we already had with RDBMS, but now we are faced with the problem that a document database can’t do things that a relational database can. In my eyes, what we have done is a net lose. Oh, we may gain some small benefit by being schemaless, but that isn’t really that beneficial in compared to the amount of effort that we have to go to by trying to be relational on a non relational database.

I described what a document database is, but I haven’t touched about why you would want to use it.

The major benefit, of course, is that you are dealing with documents. There is little or no impedance mismatch between DTOs and documents. That means that storing data in the document database is usually significantly easier than when using an RDBMS for most non trivial scenarios.

It is usually quite painful to design a good physical data model for an RDBMS, because the way the data is laid out in the database and the way that we think about it in our application are drastically different. Moreover, RDBMS has this little thing called Schemas. And modifying a schema can be a painful thing indeed.

Sidebar: One of the most common problems that I find when reviewing a project is that the first step (or one of them) was to build the Entity Relations Diagram, thereby sinking a large time/effort commitment into it before the project really starts and real world usage tells us what we actually need.

The schemaless nature of a document database means that we don’t have to worry about the shape of the data we are using, we can just serialize things into and out of the database. It helps that the commonly used format (JSON) is both human readable and easily managed by tools.

A document database doesn’t support relations, which means that each document is independent. That makes it much easier to shard the database than it would be in a relational database, because we don’t need to either store all relations on the same shard or support distributed joins.

Finally, I like to think about document databases as a natural candidate for DDD and DDDish (DDD-like?) applications. When using a relational database, we are instructed to think in terms of Aggregates and always go through an aggregate. The problem with that is that it tends to produce very bad performance in many instances, as we need to traverse the aggregate associations, or specialized knowledge in each context. With a document database, aggregates are quite natural, and highly performant, they are just the same document, after all.

I’ll post more about this issue tomorrow.