I got a great comment on my previous post about using Map/Reduce indexes in RavenDB for event sourcing. The question was how to handle time-sensitive or ordered events in this manner. The simple answer is that you can’t: RavenDB intentionally doesn’t expose anything about the ordering of the documents to the index. In fact, given the distributed nature of RavenDB, even the notion of ordering documents by time becomes really hard.

But before we close the question as “cannot do that by design”, let’s see why we would want to do something like that. Sometimes this really is just the developer wanting to do things the way they are used to, and there is no need to actually enforce the ordering of documents. But in other cases, you want this because there is a business meaning behind the order of these events. In those cases, however, you need to handle several things that are a lot more complex than they appear, because you may be informed of an event long after it actually happened, and you need to handle that.

Our example for this post is going to be mortgage payments. This is a good example of a system where time matters. If you don’t make your payments on time, that matters. So let’s see how we can model this as an event-based system, shall we?

A mortgage goes through several stages, but the only two that are of interest for us right now are:

- Approval – when the terms of the loan are set (how much money, what is the collateral, the APR, etc).

- Withdrawal – when money is actually withdrawn, which may happen in installments.

Depending on the terms of the mortgage, we need to compute how much money should be paid on a monthly basis. This depends on a lot of factors. For example, if the principal is tied to some baseline, changes to the baseline will change the amount of the principal. Other factors include whether only some of the amount was withdrawn, whether there are late fees, balloon payments, etc. Because of that, on a monthly basis, we are going to run a computation for the expected amount due for the next month.

And, obviously, we have the actual payments that are being made.

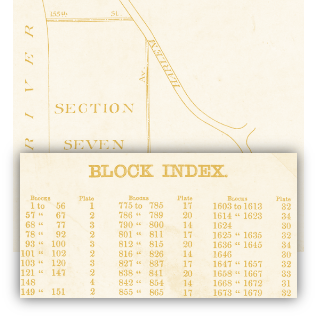

Here is what the (highly simplified) structure looks like:

This includes all the details about the mortgage, how much was approved, the APR, etc.

The following is what the expected amount to be paid looks like:

And here we have the actual payment:

All pretty much bare bones, but sufficient to explain what is going on here.
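To make the three kinds of documents concrete, here is a minimal sketch of what they might look like as plain JavaScript objects. Every field name and value below (`approvedAmount`, `withdrawals`, `interest`, `principal`, and so on) is my own assumption for illustration and is not taken from the actual data set. The `monthKey` helper is likewise hypothetical; it just shows how expected and actual payments can be lined up by month.

```javascript
// Hypothetical document shapes. Every field name and value below is
// illustrative only and is not taken from the original data set.
const mortgage = {
  id: "mortgages/1-A",
  approvedAmount: 200000,
  apr: 4.5,
  collateral: "house",
  // Assumption: withdrawals are stored on the mortgage, one per installment.
  withdrawals: [
    { date: "2018-01-05", amount: 100000 },
    { date: "2018-03-01", amount: 100000 }
  ]
};

// The expected amount due for a given month, split into interest and principal.
const expectedPayment = {
  mortgageId: "mortgages/1-A",
  date: "2018-02-01",
  interest: 400,
  principal: 600
};

// An actual payment that was made.
const mortgagePayment = {
  mortgageId: "mortgages/1-A",
  date: "2018-02-03",
  amount: 1000
};

// Month bucket ("YYYY-MM") used to line up expected and actual payments.
function monthKey(isoDate) {
  return isoDate.substring(0, 7);
}
```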

With that in place, let’s see how we can actually make use of it, shall we?

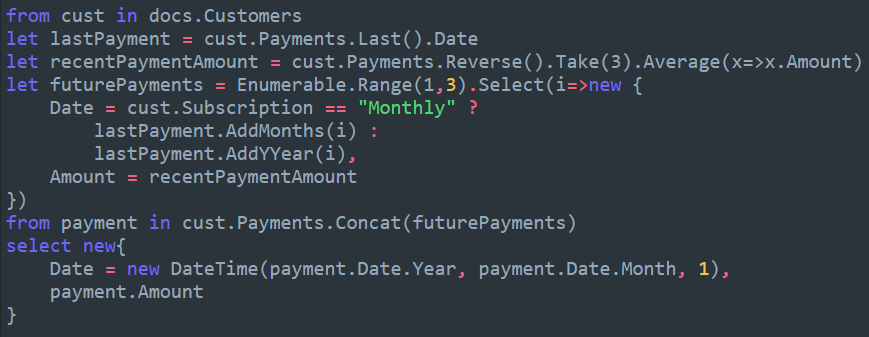

Here are the expected payments:

Here are the mortgage payments:

The first thing we want to do is to aggregate the relevant operations on a monthly basis, since this is how mortgages usually work. I’m going to use a map-reduce index to do so, and as usual in this series of posts, we’ll use JavaScript indexes to do the deed.

Unlike previous examples, we now have real business logic in the index, specifically fund allocation for partial payments. If the amount of money paid is less than the expected amount, we first apply it to the interest, and only then to the principal.
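The allocation rule can be sketched in plain JavaScript that runs outside RavenDB. This is not the actual index definition; the document fields (`mortgageId`, `date`, `interest`, `principal`, `amount`) and all function names are assumptions made for illustration. In a real JavaScript index, the map and reduce functions would be registered through RavenDB's `map(...)` and `groupBy(...).aggregate(...)` calls, and the map output would need to carry the same fields as the reduce output.

```javascript
// Map step: project both event types into one common shape.
// Field names on the source documents are hypothetical.
function mapExpectedPayment(doc) {
  return {
    mortgageId: doc.mortgageId,
    month: doc.date.substring(0, 7), // "YYYY-MM"
    interestDue: doc.interest,
    principalDue: doc.principal,
    paid: 0
  };
}

function mapMortgagePayment(doc) {
  return {
    mortgageId: doc.mortgageId,
    month: doc.date.substring(0, 7),
    interestDue: 0,
    principalDue: 0,
    paid: doc.amount
  };
}

// Reduce step for a single (mortgageId, month) group.
// Only the summed fields are carried forward; the allocation is always
// recomputed from those sums, so reducing already-reduced entries gives
// the same answer.
function reduceMonth(entries) {
  const sum = (field) => entries.reduce((acc, e) => acc + e[field], 0);
  const interestDue = sum("interestDue");
  const principalDue = sum("principalDue");
  const paid = sum("paid");

  // Partial payments go to the interest first, and only then to the principal.
  const interestPaid = Math.min(paid, interestDue);
  const principalPaid = Math.min(paid - interestPaid, principalDue);

  return {
    mortgageId: entries[0].mortgageId,
    month: entries[0].month,
    interestDue,
    principalDue,
    paid,
    interestPaid,
    principalPaid,
    outstanding: interestDue + principalDue - interestPaid - principalPaid
  };
}

// Groups the mapped entries by mortgage and month, then reduces each group.
function monthlyStatus(expectedPayments, mortgagePayments) {
  const mapped = [
    ...expectedPayments.map(mapExpectedPayment),
    ...mortgagePayments.map(mapMortgagePayment)
  ];
  const groups = new Map();
  for (const entry of mapped) {
    const key = entry.mortgageId + "|" + entry.month;
    if (!groups.has(key)) groups.set(key, []);
    groups.get(key).push(entry);
  }
  return [...groups.values()].map(reduceMonth);
}
```

With 400 of interest and 600 of principal due in a month, a payment of 700 covers all of the interest and 300 of the principal, leaving 300 outstanding. Note that nothing here depends on the order in which the documents arrive, which is what makes this a good fit for an index.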

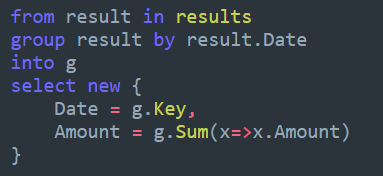

Here are the results of this index:

You can clearly see the mistakes that were made in the payments. In March, the amount due for the loan increased (another installment was taken from the mortgage), but the payments were made on the old amount.

We aren’t done yet, though. So far we have the status of the mortgage on a monthly basis, but we want to have a global view of the mortgage. In order to do that, we need to take a few steps. First, we need to define an Output Collection for the index, which will allow us to further process the results of this index.

In order to compute the current status of the mortgage, we aggregate both the mortgage status over time and the amount paid by the bank for the mortgage, so we have the following index:
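As a rough sketch of that second aggregation, again in plain JavaScript rather than as an actual index definition: the monthly status entries and the withdrawals are projected into one shape, summed per mortgage, and the status is derived from the sums. The field names, the `"Current"` status value, and the rule that any shortfall marks the loan as `"PastDue"` are my assumptions for illustration.

```javascript
// Map step for the monthly status documents (hypothetical field names,
// assumed to come from the first index's output collection).
function mapMonthlyStatus(doc) {
  return {
    mortgageId: doc.mortgageId,
    withdrawn: 0,
    due: doc.interestDue + doc.principalDue,
    paid: doc.paid
  };
}

// Map step for the money the bank paid out on the mortgage.
function mapWithdrawal(mortgageId, withdrawal) {
  return { mortgageId: mortgageId, withdrawn: withdrawal.amount, due: 0, paid: 0 };
}

// Reduce step: sum everything per mortgage, then derive the status.
// The status is recomputed from the sums on every call, so it stays
// correct when a later payment arrives.
function reduceOverall(entries) {
  const sum = (field) => entries.reduce((acc, e) => acc + e[field], 0);
  const withdrawn = sum("withdrawn");
  const due = sum("due");
  const paid = sum("paid");
  const pastDueAmount = Math.max(0, due - paid);
  return {
    mortgageId: entries[0].mortgageId,
    withdrawn,
    due,
    paid,
    pastDueAmount,
    status: pastDueAmount > 0 ? "PastDue" : "Current"
  };
}

// Combines both sources for a single mortgage.
function overallStatus(mortgage, monthlyStatuses) {
  const mapped = [
    ...mortgage.withdrawals.map((w) => mapWithdrawal(mortgage.id, w)),
    ...monthlyStatuses.map(mapMonthlyStatus)
  ];
  return reduceOverall(mapped);
}
```

This simplified version nets all months against each other, so an overpayment in one month offsets a shortfall in another. A real system would likely need something stricter.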

Which gives us the following output:

As you can see, we have a PastDue marker on the loan. At this point, we can make another payment on the mortgage to cover the missing amount, like so:

This will update the monthly mortgage status and then the overall status. Of course, in a real system (I mentioned this is highly simplified, right?) we’ll need to take into account payments made at one time but applied to a different period (which we can handle with an AppliedTo property), and a lot of the actual core logic isn’t in indexes. Please don’t do mortgage logic in RavenDB indexes; that stuff deserves its own handling, in your own code. And most certainly don’t do that in JavaScript. The idea behind this post is to explore how we can handle nontrivial event projection using RavenDB. The example was chosen because I assume most people will be familiar with it and it wasn’t immediately obvious how to go about actually solving it.
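For the AppliedTo idea, one possible approach is to pick the month bucket from that property when it is present and fall back to the payment date otherwise. The `appliedTo` and `date` field names and the `effectiveMonth` helper are hypothetical; this is only a sketch of the grouping key, not of a full index.

```javascript
// Returns the "YYYY-MM" bucket a payment should count toward.
// Assumption: `appliedTo`, when set, is an ISO date in the target month.
function effectiveMonth(payment) {
  const date = payment.appliedTo || payment.date;
  return date.substring(0, 7);
}

// A late payment made in April that should count toward March.
const latePayment = { date: "2018-04-10", amount: 1000, appliedTo: "2018-03-01" };
console.log(effectiveMonth(latePayment));
```

Because the bucket comes from the document itself rather than from when the document arrived, a payment recorded long after the fact still lands in the right month.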

If you want to play with this, you can import the following file (Settings > Import Data) to get the documents and index definitions.