The bug started as pretty much all others. “We have a problem when replicating from a Linux machine to a Windows machine, I’m seeing some funny values there”. This didn’t raise any alarm bells, after all, that was the point of checking what was going on in a mixed mode cluster. We didn’t expect any issues, but it wasn’t surprising that they happened.

The bug started as pretty much all others. “We have a problem when replicating from a Linux machine to a Windows machine, I’m seeing some funny values there”. This didn’t raise any alarm bells, after all, that was the point of checking what was going on in a mixed mode cluster. We didn’t expect any issues, but it wasn’t surprising that they happened.

The bug in question showed up as an invalid database id in some documents. In particular, it meant that we might have node A, node B and node C in the cluster, and running a particular scenario suddenly started also reporting node Ω, node Σ and other fun stuff like that.

And so the investigation began. We were able to reproduce this error once we put enough load on the cluster (typically around the 20th million document write or so), and it was never consistent.

We looked at how we save the data to disk, we looked at how we ready it, we scanned all the incoming and outgoing data. We sniffed raw TCP sockets and we looked at everything from the threading model to random corruption of data on the wire to our own code reading the data to manual review of the TCP code in the Linux kernel.

The later might require some explanation, it turned out that setting TCP_NODELAY on Linux would make the issue go away. That only made things a lot harder to figure out. What was worse, this corruption only ever happened in this particular location, never anywhere else. It was maddening, and about three people worked on this particular issue for over a week with the sole result being: “We know where it roughly happening, but no idea why or how”.

That in itself was a very valuable thing to have, and along the way we were able to fix a bunch of other stuff that was found under this level of scrutiny. But the original problem persisted, quite annoyingly.

Eventually, we tracked it down to this method:

We were there before, and we looked at the code, and it looked fine. Except that it wasn’t. In particular, there is a problem when the range we want to move is overlapped with the range we want to move it to.

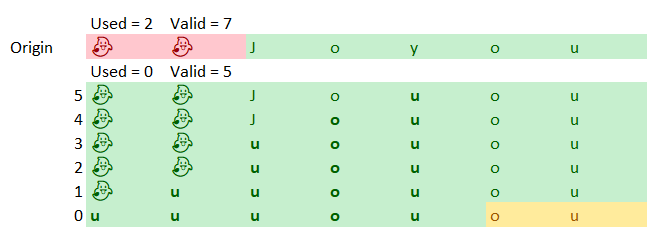

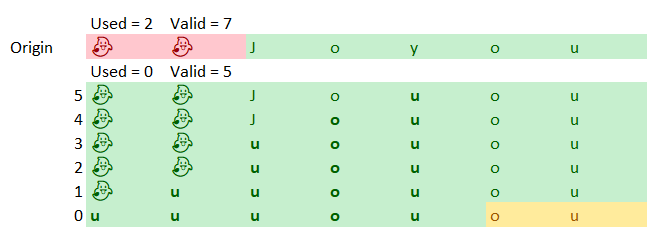

For example, consider that we have a buffer of 32KB, and we read from the network 7 bytes. We then consumed 2 of those bytes. In the image below, you can see that as the Origin, with the consumed bytes shown as ghosts.

What we need to do now is to move the “Joyou” to the beginning of the buffer, but note that we need to move it from 2 – 7 to 0 – 5, which are overlapping ranges. The issue is that we want to be able to fully read “Joyous”, which require us to do some work to make sure that we can do that. This ReadExactly piece of code was written with the knowledge that at most it will be called with 16 bytes to read, and the buffer size is 32KB, so there was an implicit assumption that those ranges can’t overlap.

when they do… Well, you can see in the image how the data is changed with each iteration of the loop. The end result is that we have corrupted our buffer and mess everything up. The Linux TCP stack had no issue, it was all in our code. The problem is that while it is rare, it is perfectly fine to fragment the data you send into multiple packets, each with very small length. The reason why TCP_NODELAY “fixed” the issue was that it probably didn’t trigger the multiple small buffers one after another in that particular scenario. It is also worth noting that we tracked this down to specific load pattern that would cause the sender to split packets in this way to generate this error condition.

That didn’t actually fix anything, since it could still happen, but I traced the code, and I think that this happened with more regularity since we hit the buffer just right to send a value over the buffer size in just the wrong way. The fix for this, by the way, is to avoid the manual buffer copying and to use memove(), which is safe to use for overlapped ranges.

That leave us with the question, why did it take us so long to find this out? For that matter, how could this error surface only in this particular case? There is nothing really special with the database id, and this particular method is called a lot by the code.

Figuring this out took even more time, basically, this bug was hidden by the way our code validate the incoming stream. We don’t trust data from the network, and we run it through a set of validations to ensure that it is safe to consume. When this error happened in the normal course of things, higher level code would typically detect that as corruption and close the connection. The other side would retry and since this is timing dependent, it will very likely be able to proceed. The issue with database ids is that they are opaque binary values (they are guids, so no structure at all that is meaningful for the application). That means that only when we got this particular issue on that particular field (and other field at all) will we be able to pass validation and actually get the error.

The fix was annoyingly simply given the amount of time we spent finding it, but we have been able to root out a significant bug as a result of the real world tests we run.

We are running a lot of tests right now on RavenDB, in all sort of interesting configurations. Some of the more interesting results came from testing wildly heterogeneous systems. Put a node on a fast Windows machine, connect it to a couple of Raspberry PIs, a cheap Windows tablet over WiFi and a slow Linux machine and see how that kind of cluster is handling high load.

We are running a lot of tests right now on RavenDB, in all sort of interesting configurations. Some of the more interesting results came from testing wildly heterogeneous systems. Put a node on a fast Windows machine, connect it to a couple of Raspberry PIs, a cheap Windows tablet over WiFi and a slow Linux machine and see how that kind of cluster is handling high load.