A major design goal for RavenDB is that it should be easy and convenient to use. A major constraint is that it must be secure. As you can imagine, those two quite often work against one another. Security is often anything but easy to use, and it is rarely convenient.

Previously, we used Windows Authentication and OAuth to secure access to RavenDB. That works and has been deployed in the wild for quite some time. It is also a major pain whenever there is an issue. If the connection to the domain controller drops, we might have authentication delays of many seconds, and trying to debug Active Directory issues in production deployments can be… a bit of a pain, in the same way that an audit by the IRS that starts with a SWAT team bashing down your door is mildly annoying. OAuth, on the other hand, is much better, since it is under our control, and we can figure out exactly what is going on with it if need be.

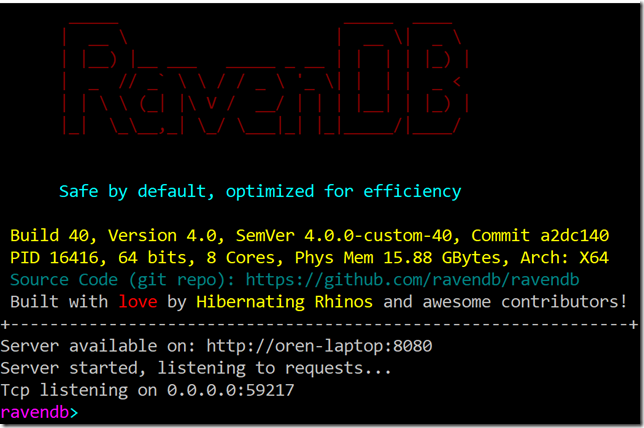

Since RavenDB 4.0 runs on Windows, Linux & Mac, we decided to drop the Windows Authentication support and just use OAuth. The problem is that if we choose to support HTTP, we have to rely on extremely complex protocols that attempt to secure authentication over plain text, but don’t usually deliver good results and are typically a pain to debug and support. Or, we can use HTTPS and just let SSL/TLS handle it all for us. A good example of the difference can be seen in OAuth 1.0 vs OAuth 2.0.

When we built RavenDB 1.0, roughly around 2009, the operating environment was quite different. In 2017, not using HTTPS is pretty much a sin unto itself. As we started security modeling for RavenDB 4.0, it became obvious that we couldn’t really support any security on top of HTTP without effectively having to implement most of the properties of HTTPS ourselves. I’m many things, but I’m not a security expert, not by a long shot. Given the chance to implement my own security protocol, I would gladly do that, for a toy project or a weekend hackfest. But there is no way I would trust my own security in production against serious attacks. That pretty much led us to the realization that we have to require HTTPS for anything that requires security.

That includes running inside the organization, exposed to the public internet, running inside the cloud or in a shared datacenter, etc. Pretty much, unless you have HTTPS, there is no real point in talking about security. Given that, it meant that we could shift our baseline approach to security. If we are always going to require HTTPS for security, it means that we are operating in an environment that is much nicer for us to apply security.

Now, you can choose to run HTTP only, and avoid the need for certificate management, etc. However, at that point, you aren’t running a secure system, or you are already running it in a trusted and secured environment. In that case, we want to be clear that there isn’t any point in trying to apply a security policy (such as who can access what). If you are using HTTP, any network sniffer can capture the access tokens and pretend to be whomever they want.

With HTTPS required, we now move to the realm of having the admin take care of the certificates, securing them, renewal, etc. That is the part where it isn’t as easy or convenient as we could wish for. However, once we had that as a baseline, it opened an interesting path for security. Instead of relying on our own solution, we can use the built-in one and use x509 certificates from the client for authentication. This has the advantage that it is widely supported, standardized and secure. It is a bit less convenient than just a password, but the advantage is that any security system already in place knows how to deal with, store, authorize and manage access to certificates.

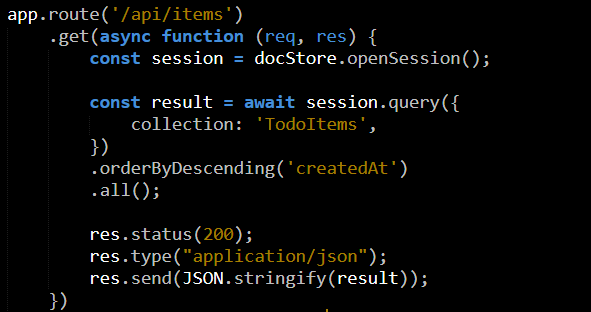

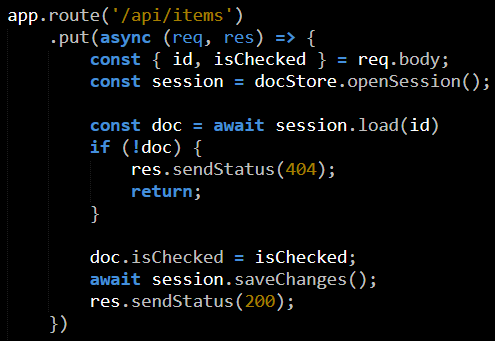

The idea is that you can go to RavenDB and either register or generate an x509 certificate. An administrator can then assign permissions to that certificate (such as which databases it is allowed to access). From that point on, a client (RavenDB, browser, curl, etc) can connect to RavenDB and just issue REST requests. There is no need to do anything else for the system to work. Contrast that with how you would typically have to deal with authentication using OAuth, by sending the token, keeping it fresh manually, etc.
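To make the "just issue REST requests" part concrete, here is a minimal Python sketch of the client side using only the standard library. It is an illustration under assumptions, not the RavenDB client: the function names `make_client_context` and `get` are made up for this example, and the host, port and path you pass in depend entirely on your own deployment.

```python
import http.client
import ssl
from typing import Optional


def make_client_context(cert_file: str, key_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a TLS context that presents a client certificate in the handshake."""
    # The default context still verifies the server's certificate and host name.
    context = ssl.create_default_context()
    # key_file may be None when the private key is stored in the same PEM file.
    context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return context


def get(host: str, port: int, path: str, context: ssl.SSLContext) -> bytes:
    """Issue a plain GET request; no token header is attached or refreshed."""
    connection = http.client.HTTPSConnection(host, port, context=context)
    try:
        connection.request("GET", path)
        return connection.getresponse().read()
    finally:
        connection.close()
```

The point of the sketch is what is missing: there is no login call, no token to store and no refresh logic. The certificate is presented once, as part of the TLS handshake, and every request on that connection is authenticated by it.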

Using x509 also has the distinct advantage that it is widely trusted. We intend to provide this level of security to all editions of RavenDB (so the Community Edition will also be able to use it).

A nice accidental feature of this decision is that we are going to be able to apply authentication at the connection level, and connection pooling means that we are likely going to have connections live for a long time. That means that we only need to pay the authentication cost once per connection, instead of once per request, as with OAuth.

To simplify matters, we’ll likely just use the client certificates for authenticating the client, so we’ll not care if they are from a trusted root, etc. We’ll just require that the admin register each valid certificate with the cluster so it will be recognized. If you need to stop using a certificate, you can delete its registration or generate a new certificate to take its place. On the client side, it means that the DocumentStore will expose an X509Certificate property that you can set (or the equivalent in other clients). That means that you can use your own policies on the client to determine how to store the certificate.
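The registration model described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not RavenDB's implementation: identifying a certificate by the SHA-256 hash of its bytes is an assumption made for the example, and `CertificateRegistry` and its method names are hypothetical.

```python
import hashlib


def thumbprint(certificate_der: bytes) -> str:
    """Identify a certificate by the SHA-256 hash of its DER-encoded bytes."""
    return hashlib.sha256(certificate_der).hexdigest()


class CertificateRegistry:
    """Tracks which client certificates are registered and what they may access."""

    def __init__(self) -> None:
        self._databases_by_thumbprint = {}

    def register(self, certificate_der: bytes, databases) -> None:
        self._databases_by_thumbprint[thumbprint(certificate_der)] = set(databases)

    def unregister(self, certificate_der: bytes) -> None:
        self._databases_by_thumbprint.pop(thumbprint(certificate_der), None)

    def replace(self, old_der: bytes, new_der: bytes) -> None:
        """Swap in a new certificate, carrying over the old one's permissions."""
        databases = self._databases_by_thumbprint.pop(thumbprint(old_der))
        self._databases_by_thumbprint[thumbprint(new_der)] = databases

    def is_allowed(self, certificate_der: bytes, database: str) -> bool:
        # No chain validation: only explicitly registered certificates pass.
        allowed = self._databases_by_thumbprint.get(thumbprint(certificate_der), set())
        return database in allowed
```

Note that nothing here looks at the issuer. A certificate signed by a well-known root is rejected if it was never registered, and a self-signed one is accepted once the admin has registered it.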

On the server side, by the way, we’ll expose an extension point that will allow you to retrieve the certificate using your own policies, for example if you are using Azure Key Vault, HashiCorp Vault or even your own HSM. This is done by invoking a process you specify, so you can write your own scripts / mini programs and apply whatever logic you need. This creates a clean separation between RavenDB and the secret store in use.
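As a rough illustration of the "invoke a process you specify" idea, here is a small Python sketch. The contract it assumes (the command writes the certificate bytes to stdout and signals failure with a non-zero exit code) is an assumption made for this example, and `load_certificate` is a hypothetical name, not a RavenDB API.

```python
import subprocess
from typing import Sequence


def load_certificate(command: Sequence[str], timeout_seconds: float = 30.0) -> bytes:
    """Run the configured command and return whatever it writes to stdout."""
    result = subprocess.run(
        command,
        capture_output=True,      # collect stdout and stderr instead of inheriting them
        timeout=timeout_seconds,  # do not hang forever on an unreachable secret store
        check=True,               # a non-zero exit code raises CalledProcessError
    )
    if not result.stdout:
        raise ValueError("certificate command produced no output")
    return result.stdout
```

The caller never learns where the certificate came from. The command could be a script that talks to a vault, an HSM tool, or a one-liner that prints a file, which is exactly the separation between the database and the secret store that the design is after.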

Authentication between servers is also done using SSL and certificates. We expect that we’ll commonly have all the servers running the same wildcard certificate, in which case they will obviously trust each other. Alternatively, you can also specify additional certificates that will be treated as servers. This is useful when you are running with a separate certificate for each server, but it is also a critical part of certificate rotation. When your certificate is about to expire, the admin will register the new certificate as trusted, and then start replacing the certificates of each of the nodes in turn. This allows us to run with both old and new certificates concurrently during this process.
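The rotation procedure can be modeled as a short Python simulation. This is a toy sketch, with certificates represented as plain strings and a hypothetical `Cluster` class. The final step, dropping trust in the old certificate, is an assumption added for completeness and is not described in the text.

```python
class Cluster:
    """Toy model: each node presents one certificate; the cluster trusts a set of them."""

    def __init__(self, nodes, certificate: str) -> None:
        self.trusted = {certificate}
        self.node_certificates = {node: certificate for node in nodes}

    def all_nodes_trusted(self) -> bool:
        return all(cert in self.trusted for cert in self.node_certificates.values())


def rotate(cluster: Cluster, old: str, new: str) -> None:
    # Step 1: trust the new certificate before any node presents it.
    cluster.trusted.add(new)
    # Step 2: replace node certificates one at a time; old and new coexist here.
    for node in list(cluster.node_certificates):
        cluster.node_certificates[node] = new
        if not cluster.all_nodes_trusted():
            raise RuntimeError(f"node {node} lost trust during rotation")
    # Step 3 (assumption, not stated in the text): stop trusting the old one.
    cluster.trusted.discard(old)
```

The order matters. If a node started presenting the new certificate before it was registered as trusted, the other nodes would reject it, which is why registration comes first and the two certificates overlap for the duration of the rollout.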

We considered relying on some properties of the certificate itself, but it seemed like an error-prone process. It is better to have the admin explicitly state, for both client and server certificates, which ones we should actually trust, and at what level.

I would really appreciate any commentary you have about this feature, in terms of ease of use, acceptability and, obviously, its security.