A user reported a bug to our support team. When running on macOS, they were unable to authenticate against a remote RavenDB instance.

That was strange, since we support running on macOS, both as a client and as a server. We have had some issues around platform-specific behavior, but it does work, so what could the issue be?

RavenDB uses X.509 certificates for authentication. That ensures mutual authentication of client and server, and it secures the communication from any prying eyes. But on that particular system, it just did not work. The RavenDB server was reachable, but when attempting to access it, we weren’t able to authenticate. When using the browser, we didn’t get the “Choose the certificate” dialog either. That was really strange. Digging deeper, we verified that the certificate was set up properly in the keychain. We also tested Firefox, which has a separate store for certificates, but nothing worked.
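To make the mechanism concrete, here is a minimal Python sketch of the client side of a mutually authenticated TLS connection. It is not RavenDB's client code; the function name `build_client_context` and the idea of passing a single PEM file (certificate plus private key) are assumptions made for illustration.

```python
import ssl
from typing import Optional


def build_client_context(client_pem_path: Optional[str] = None) -> ssl.SSLContext:
    """Build a TLS context for a client that may present its own certificate.

    client_pem_path is a hypothetical path to a PEM file holding both the
    client certificate and its private key.
    """
    # The default context verifies the server's certificate chain and host name.
    context = ssl.create_default_context()
    if client_pem_path is not None:
        # The private key stays on the client. It is used to prove ownership
        # of the certificate when the server asks for one during the handshake.
        context.load_cert_chain(certfile=client_pem_path)
    return context
```

The important detail is that the client only presents its certificate when the server requests one during the handshake, and proving ownership requires the private key.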

Then we tested using curl, and were able to properly access and authenticate to the server. So something was really strange here. Testing from a different machine, we observed no issues.

The user mentioned that they had recently moved to Catalina, which is known to have some changes in how it processes certificates. None of those changes applied to our scenario, however.

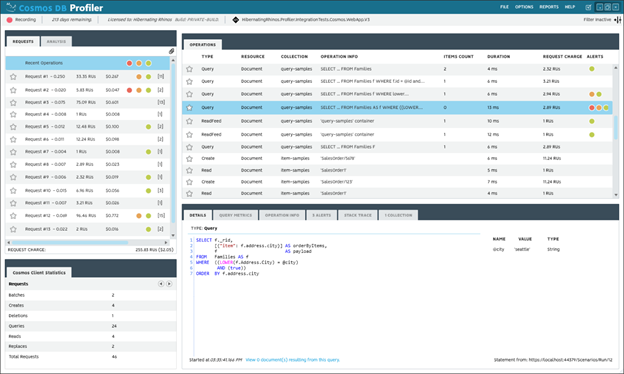

Eventually, we started comparing network traces and then we found something really interesting. Take a look at this:

That was an interesting discovery. The user had an antivirus installed, and the AV installed a root CA and then set up a proxy to direct all traffic through the AV. Because it added a root CA, it was able to sniff all the traffic on the machine.

However, with a client certificate, that model doesn’t work. The proxy would need to have the private key of the certificate to be able to authenticate to the remote system, which it obviously does not have. It silently stripped the request for a client certificate, which meant that as far as RavenDB was concerned, we saw no client certificate in the request, so we rightfully rejected it.

I found it interesting that we were actually able to use curl. I assume that Avast didn’t set up the proxy in a way that would include curl.

The solution was simple: exclude RavenDB from the inspected addresses. That immediately fixed the problem.

I spent some time trying to figure out if there was a good way for us to detect this automatically. Sadly, there is no way to tell from the client side which certificate was actually used for the connection. If there was, we could compare it to the expected result and alert on that.
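For what it's worth, the comparison itself is easy to sketch once the check runs in a standalone script rather than inside a browser page, since a script's TLS layer does expose the certificate it received. The following Python sketch is only an illustration under that assumption. The function names are made up for this example, and the expected fingerprint would have to be recorded ahead of time from a machine that is known not to be intercepted.

```python
import hashlib
import ssl


def sha256_fingerprint(der_cert: bytes) -> str:
    """Return the SHA-256 fingerprint of a DER-encoded certificate as lowercase hex."""
    return hashlib.sha256(der_cert).hexdigest()


def fetch_server_certificate(host: str, port: int = 443) -> bytes:
    """Fetch whatever certificate is presented for host:port, DER-encoded.

    No validation is done here. The point is to see what this machine is
    actually being shown, whether it comes from the server or from a proxy.
    """
    pem = ssl.get_server_certificate((host, port))
    return ssl.PEM_cert_to_DER_cert(pem)


def is_expected_certificate(der_cert: bytes, expected_fingerprint: str) -> bool:
    """Compare a certificate against a previously recorded fingerprint.

    Accepts the fingerprint with or without colons, in either letter case.
    """
    normalized = expected_fingerprint.replace(":", "").lower()
    return sha256_fingerprint(der_cert) == normalized
```

A manual check would then be something like `is_expected_certificate(fetch_server_certificate("ravendb.example.com"), recorded_fingerprint)`, where both the host name and `recorded_fingerprint` are placeholders. A mismatch would suggest that something between the client and the server is re-signing the traffic. As the curl experience shows, though, a proxy may not intercept every process on the machine, so a clean result from a script would not prove that the browser is seeing the same certificate.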