I said that I would post about it, so here is the high-level design for a generic implementation of natural-language-looking parsing. Let us explore the problem scenario first. We want to be able to build this language, without having to build a full-blown language from scratch:

open http://www.ayende.com/

click on link to Blog

click on link to first post

enter comment with name Ayende Rahien and email foo@example.org and url http://www.ayende.com/Blog/

enter comment text This is an awesome post.

click on submit

comment with This is an awesome post should appear on page

And to prove that we are not focusing on a single language, let us try this one as well:

when account balance is 500$ and withdrawal is made of 400$ we should get a low funds alert

when account balance is 500$ and withdrawal is made of 501$ we should deny the transaction

when weekly international charge is at 3,500$ and max weekly international charge is of 5,000$ and new charge arrives for amount 2,230$ we should deny the transaction

I think that those are divergent enough to show that the solution is a generic one.

And now, to the solution. Each type of language is going to have its own DSL engine, which knows how to deal with the particular dialect that we are using. The default parsing is a three-step solution. First, split the text into sentences. Then, split each sentence into tokens by whitespace. Finally, for each statement, search for the appropriate statement resolver, which is a class that knows how to deal with it. The statement resolver's methods are then called to process the statement.
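To make the three steps concrete, here is a minimal sketch in Python. It is not the code from the repository: the names (`split_statements`, `tokenize`, `find_resolver`, `OpenResolver`, `ClickOnResolver`) are made up for illustration, and it assumes one statement per line and that a resolver is selected by matching the leading words of the statement.

```python
def split_statements(text):
    """Step 1: split the text into statements (assumed: one per non-empty line)."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def tokenize(statement):
    """Step 2: split a statement into tokens by whitespace."""
    return statement.split()


class OpenResolver:
    """Hypothetical resolver for statements starting with 'open'."""
    prefix = ("open",)

    def execute(self, args):
        return ("open", args[0])


class ClickOnResolver:
    """Hypothetical resolver for statements starting with 'click on'."""
    prefix = ("click", "on")

    def execute(self, args):
        return ("click", " ".join(args))


def find_resolver(tokens, resolvers):
    """Step 3: find the resolver whose prefix matches; the longest prefix wins."""
    for resolver in sorted(resolvers, key=lambda r: len(r.prefix), reverse=True):
        n = len(resolver.prefix)
        if tuple(t.lower() for t in tokens[:n]) == resolver.prefix:
            return resolver, tokens[n:]
    raise ValueError("no resolver for statement: " + " ".join(tokens))


def run(text, resolvers):
    """Run every statement through its resolver and collect the results."""
    results = []
    for statement in split_statements(text):
        resolver, args = find_resolver(tokenize(statement), resolvers)
        results.append(resolver.execute(args))
    return results
```

Calling `run("open http://www.ayende.com/\n\nclick on submit", [OpenResolver(), ClickOnResolver()])` hands each line to the matching resolver in order. A statement that no resolver recognizes raises an error, which is exactly the rigidity discussed later in the post.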

There are two key principles in the design. The first is turning something like 'click on link' into an invocation of the ClickOnLink statement resolver. The second is lazy parameter evaluation.
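Both ideas can be sketched in a few lines of Python. This is only one possible reading, not the actual engine: it assumes the resolver is found by PascalCasing the longest leading run of words that matches a known class name, and that "lazy" means the remaining tokens are wrapped and only parsed when the resolver asks for the value. `Lazy`, `resolve`, and the `execute` method are hypothetical names.

```python
class Lazy:
    """Wraps raw tokens; the value is only parsed when the resolver calls it."""

    def __init__(self, tokens, parse):
        self._tokens = tokens
        self._parse = parse
        self.evaluated = False

    def __call__(self):
        self.evaluated = True
        return self._parse(self._tokens)


class ClickOnLink:
    """Plain class; found by convention from the words 'click on link'."""

    def execute(self, target):
        return "clicking link: " + target()


class Click:
    """Fallback resolver for a bare 'click'."""

    def execute(self, target):
        return "clicking: " + target()


# Registry keyed by class name, so 'click on link' -> 'ClickOnLink'.
RESOLVERS = {cls.__name__: cls for cls in (ClickOnLink, Click)}


def resolver_name(tokens):
    """Turn ['click', 'on', 'link'] into 'ClickOnLink'."""
    return "".join(t.capitalize() for t in tokens)


def resolve(statement):
    """Try the longest leading run of words first, then shorter ones."""
    tokens = statement.split()
    for n in range(len(tokens), 0, -1):
        cls = RESOLVERS.get(resolver_name(tokens[:n]))
        if cls is not None:
            # The rest of the statement becomes a lazily evaluated parameter.
            return cls(), Lazy(tokens[n:], lambda toks: " ".join(toks))
    raise LookupError("no resolver for: " + statement)
```

With this sketch, `resolve("click on link to Blog")` picks `ClickOnLink` rather than `Click`, and the parameter is not parsed until `execute` calls it.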

This is going to be interesting, the time right now is 19:38, and I am going to start implementing this.

It is now 22:04, and I finished the first language.

Working on the second now. It is 22:10 and I am done with the second one.

What did I do?

I took the text we had and turned it into executable commands. Now, this isn't very flexible. If you change the way a statement is structured, it will fail, which comes back to why natural language is a bad choice here. Still, it has quite a bit of flexibility in it.

You can get the code for this, including tests, here: https://rhino-tools.svn.sourceforge.net/svnroot/rhino-tools/experiments/natrual-language

But let us talk for a bit about how this is implemented. I'll show the bank example, because it is easier.

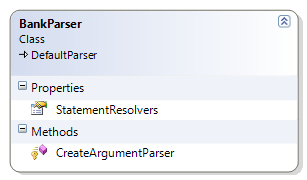

We start by defining the BankParser, which looks like this:

The bank parser merely defines what the statement resolvers are, and any special parsers that are needed (in this case, we need to handle dollar values).

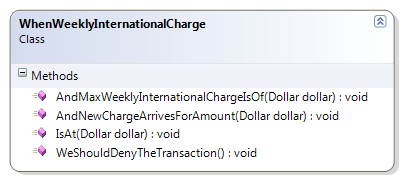

A statement parser is trivial:

And yes, those are pure POCO classes.
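For readers who cannot see the images, here is a rough Python sketch of the same shape: a parser that only lists its resolvers and a special parser for dollar values, plus resolvers that are plain classes. It is an illustration under assumptions, not a port of the real code. The class and method names, the phrase table, and the rule that each clause ends in a dollar amount are all invented for this example.

```python
import re
from decimal import Decimal

# Special value parser: '500$' or '3,500$' -> Decimal. Anything else -> None.
DOLLARS = re.compile(r"^\d[\d,]*\$$")


def parse_dollars(token):
    if not DOLLARS.match(token):
        return None
    return Decimal(token.rstrip("$").replace(",", ""))


class Scenario:
    """Plain data holder that the resolvers fill in."""

    def __init__(self):
        self.balance = Decimal(0)
        self.withdrawal = Decimal(0)


class AccountBalanceIs:
    """Plain class, no base class or framework dependency."""

    def apply(self, scenario, amount):
        scenario.balance = amount


class WithdrawalIsMadeOf:
    def apply(self, scenario, amount):
        scenario.withdrawal = amount


class BankParser:
    """Declares the resolvers; the parsing rules below are assumptions."""

    resolvers = {
        "account balance is": AccountBalanceIs,
        "withdrawal is made of": WithdrawalIsMadeOf,
    }

    def parse(self, sentence):
        conditions, expectation = sentence.split(" we should ", 1)
        if conditions.startswith("when "):
            conditions = conditions[len("when "):]
        scenario = Scenario()
        for clause in conditions.split(" and "):
            *words, last = clause.split()
            amount = parse_dollars(last)
            if amount is None:
                raise ValueError("expected a dollar value, got: " + last)
            self.resolvers[" ".join(words)]().apply(scenario, amount)
        return scenario, expectation
```

Feeding it the first bank sentence from the top of the post fills in the balance and the withdrawal and returns the expectation text ("get a low funds alert") for a later step to check.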

The whole idea here was that I can implement some smarts into the default engine about how it recognizes methods and resolves parameters. I will admit that overloading caused some issues, but I think that this is a pretty simple implementation.

It also does a good job in demonstrating the problems in such a language. Go ahead and try to build operator precedence into it. Or implement an if statement. You really can't, not without introducing a lot more structure into it. And that would turn it into yet another programming language.

What about the tooling? Intellisense and syntax highlighting?

Well, since we have the structure of the code, and we know the conventions, you shouldn't have a problem taking my previous posts about this and translating them directly into supporting this.

And yes, I can create a language with this in a few minutes, as BankParser has proven.