Analyzing a performance problem – Is a prisoner dangerous?

Recently I run into a performance problem in an application that I was reviewing, and I thought that it would make a great post. Since I can’t use the actual application model, I decided that I am tired of using the same old online shop model and turned to the one domain in which I am a domain expert. Prisons.

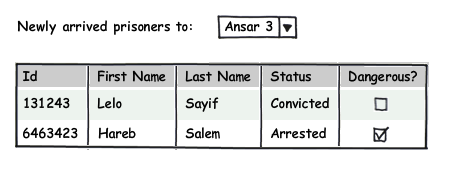

Let us imagine that this is part of the Prison 10’s* Dashboard. It looks pretty simple, right?

Let us talk about this as SQL, ignoring all layers in the middle. We can express this as:

SELECT TOP 10 Prisoner.Id, Prisoner.FirstName, Prisoner.LastName, Prisoner.Status FROM Prisoner JOIN Imprisonment on Prisoner.Id = Imprisonment.Prisoner WHERE Imprisonment.Prion = 6 AND Imprisonment.IsCurrent = 1 ORDER BY Imprisonment.ArrivalDate DESC

Seems simple enough, right? But notice that we don’t have Prisoner.Dangerous column on the result. That is because there is no such column in the table. So what does our good developer do? He goes to the domain expert and ask him where he is supposed to get the data from. The domain expert answer is that this is not an attribute of the prisoner itself, it is a decision based on many factors, any of which can flag a prisoner as a dangerous. He then gives the developer a list of the few common ones:

- If the prisoner is not convicted

- If the prisoner has a disciplinary notice in the last 2 months

- If the prisoner has more than one disciplinary notice in the last 6 months

- If the prisoner is charged with violent crime

- If the prisoner is currently in withdrawal

- If the prisoner request for vacation was denied in the last 3 months

As you can see, the list goes on (and on, and on). Our developer starts working on the problem. Since he is working on a small local database, he is starting out with a spike of everything as in memory filters. It is beautifully Object Oriented, and it looks like this:

public class NotConvicted : IPrisonerDangerousEvaluator { public bool Eval(Prisoner p) { return p.Status != PrisonerStatus.Convicted; } } public class DisciplinaryNoticeInLast2Months : IPrisonerDangerousEvaluator { public bool Eval(Prisoner p) { return p.DisciplinaryNotices .Any(x=>x.Date > DateTime.Today.AddMonths(-2)); } } public class MorethanOneDisciplinaryNoticeInLast6Months : IPrisonerDangerousEvaluator { public bool Eval(Prisoner p) { return p.DisciplinaryNotices .Where(x=>x.Date > DateTime.Today.AddMonths(-6)) .Count() > 1; } } public class ChargedWithViolentCrime : IPrisonerDangerousEvaluator { public bool Eval(Prisoner p) { return p.Charges .Any(x=>x.ChargeStatus.IsViolent); } } public class CurrentlyInDrugWithdrawal : IPrisonerDangerousEvaluator { public bool Eval(Prisoner p) { return p.MedicalRecord .Withdrawals .Any(x=>x.IsCurrent); } } public class VacationRequestDeniedInLast3Months : IPrisonerDangerousEvaluator { public bool Eval(Prisoner p) { return p.Requests .Where(x=>x.RequestType == RequestType.Vacation && x.Date > DateTime.Today.AddMonths(-3)) .Any(x=>x.Status.Approved == false); } }

Now, this works, and from OO perspective, it works just great. From a performance perspective, this is horrible. Let us do the math of how many queries this is going to generate, oaky?

- Get all prisoners – 1

- For each prisoner – N(Prisoners)

- NotConvicted – 0 (load data in the prisoner entity, which was already loaded)

- DisciplinaryNoticeInLast2Months – 1 (load DisciplinaryNotices)

- MorethanOneDisciplinaryNoticeInLast6Months – 0 (DisciplinaryNotices already loaded)

- ChargedWithViolentCrime – 1 (load Charges)

- CurrentlyInDrugWithdrawal – 2 (load Medical Record, load Withdrawals)

- VacationRequestDeniedInLast3Months – 2 (load Requests, load Status)

This isn’t SELECT N+1 ! This is so much worse. What we have is actually: SELECT 1 + N(1+1+2+2)

Or, in other words, assuming we want to display the first ten inmates, showing that little grid up above is going to result in 41 queries!

This actually show us several important worst practices:

- In memory filtering should be avoided if possible.

- Trying to ignore the realities of data access is going to bite you, hard.

- There is a good reason for the call for aggregate roots and the avoidance of highly connected object graphs.

So, what is the obvious approach? We can change the way that we load the data. Instead of trying to go through the object model, we can query the data directly from the database. It is going to look something like this:

SELECT TOP 10 Prisoner.Id, Prisoner.FirstName, Prisoner.LastName, Prisoner.Status, ( SELECT 1 WHERE Prisoner.Status != 'Convicted' OR EXISTS (SELECT 1 FROM DisciplinaryNotices WHERE DisciplinaryNotices.Prisoner = Prisoner.Id AND DisciplinaryNotices.Date > getdate()-60) OR 1 < (SELECT COUNT(*) FROM DisciplinaryNotices WHERE DisciplinaryNotices.Prisoner = Prisoner.Id AND DisciplinaryNotices.Date > getdate()-180) OR EXISTS (SELECT 1 FROM Charges WHERE Charges.Prisoner = Prsioner.Id AND Charges.IsViolent = 1) OR EXISTS (SELECT 1 FROM MedicalRecords JOIN Withdrawals ON MedicalRecords.Id = Withdrawal.Record WHERE MedicalRecords.Prisoner = Prisoner.Id AND Withdrawal.IsCurrent = 1) OR EXISTS (SELECT 1 FROM Requests JOIN RequestsStatus ON Requests.Id = RequestsStatus.Request WHERE Requests.Prisnoer = Prisoner.Id AND Request.Date > getdate() - 90 AND RequestsStatus.Approved = 0 AND Request.Type = 'Vacation') ) as Dangerous FROM Prisoner JOIN Imprisonment on Prisoner.Id = Imprisonment.Prisoner WHERE Imprisonment.Prion = 6 AND Imprisonment.IsCurrent = 1 ORDER BY Imprisonment.ArrivalDate DESC

From the point of view of database access, we are in a much better position, we now have only a single query to execute. For that matter, we can change the IPrisonerDangerousEval to perform their evaluation in the database, retaining the nice OO model that we have. It would look something like this:

public class NotConvicted : IPrisonerDangerousEvaluator { public void AddSelectionCriteria(DetachedCriteria crit) { return crit.Add(Restrictions.NotEq("Status", PrisonerStatus.Convicted)); } } public class DisciplinaryNoticeInLast2Months : IPrisonerDangerousEvaluator { public void AddSelectionCriteria(DetachedCriteria crit) { return crit.CreateCriteria("DisciplinaryNotices) .Add(Restrictions.Gt("Date", DateTime.Today.AddMonths(-2))); } }

Note, doing something like this require a fairly interesting infrastructure, to make sure that the results are consistent and each rule isn’t stepping on its other toes.

Problem solved?

Not… really.

Take a look at the SQL that we have above. This query is hitting 8 tables, and please remember that I explicitly stated that I kept the number of things that make a prisoner dangerous limited, there are about forty rules relating to this, not half a dozen. But even with just half a dozen, trying to optimize the above query is going to be… problematic.

This is where we figure out something really interesting. In most systems, the number of reads far outweigh the number of writes. The most basic optimization that we can do is move work from the read phase of the application to the write phase, since it is going to execute so much less often.

It seems like it would be a very simple solution for the application to execute the rules on save and set the IsDangerous property, right? Well, it would, except that there are so many different places in the application that can need this. Remember, there are a lot of rules, and just about anything can change that. So we would need to execute the rules whenever we change something in the application.

Here, again, we see the importance of aggregates, because instead of spreading that logic all over the system, we can define Prisoner as the aggregate, and force all changes to it to occur through the entity. When saving a prisoner, then we can execute this.

However, there is another aspect, this can cause an unacceptable performance for saving, since we expect the number of rules to grow and only become more complex over time. This looks like a good opportunity to to shift work to a background process. There is also the matter that we have to process those rules against every day, to make sure that we reset any rules that depend on time.

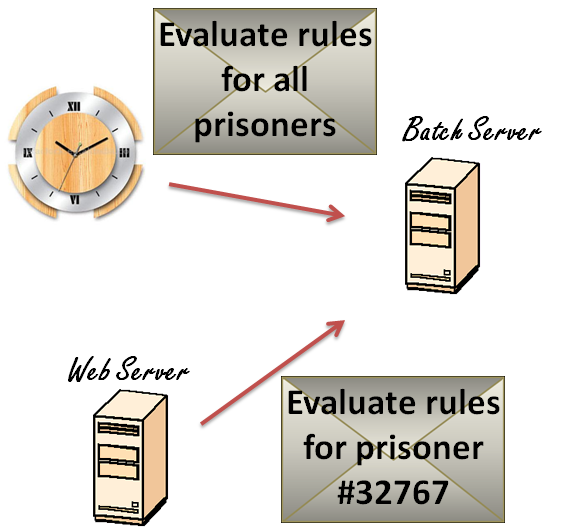

I would build such a system using the following structure:

The web server would send a notification to the batch processing server whenever a prisoner aggregate root is updated. The batch server will then process all rules for the prisoner. Once a day, the batch server will re-evaluate the rules for all prisoners. This solves the performance problem that we have when updating a prisoner. It introduce a new one, a problem of consistency. Now, there is going to be some delay between updating a prisoner and updating the IsDangerous status. In practice, I would expect the window of inconsistency to be be in the order of the time it takes to process all the rules. I would be extremely surprised if it was more than a second, and probably a lot less.

In the duration, we can show in the UI that the prisoner’s danger status in unknown. The UI can then query the application periodically until the danger status became known again using Ajax. From the user experience point of view, there would be almost no change, but in cases where the evaluation takes long enough for the user to notice, it will be made explicit what is going on.

Yes, it is a more complex solution, but it is also one that neatly avoids all the complications that we have run into during this post.

* I am sorry, but this post is going to be choke full of jokes that only I will probably understand.

Comments

Do you mean like

"If the prisoner request for vacation was denied in the last 3 months"?

Prisoners can't request vacation!

Ba dump crash!

Could you underline all the jokes (a beginner mode)?

Hopefully one day we'll be able to put make all those criteria into Linq Expressions working against IQeuryable collections on the Prisoner object, combine them and push them against the database as a one off. If we're worried about the db performance, some smart DBA can write some indexed views to stop all that joining thats going on.

@Harry, I'd rather bet on relational databases being replaced with schema-less document databases with built-in offline indexing

"Trying to ignore the realities of data access is going to bite you, hard."

Amen, +1.

J,

IN most places, prisoners can request vacations

Harry,

Yes, we can do that, but it gets very complicated, and the queries are too wide.

We could have a reporting model in memory that will be updated at every modification of our entity, something like a table in memory with colums PrisonerID and Dangerous. So now we don't need to run a process all the time and we have already calculated our attributes.

@Rafal - then you'd be pretty glad you'd written your queries in a non-persistence specific method like LINQ :)

@Ayende - which bit is the really complicated bit? do you mean writing the linq provider or combining the expressions or optimising the db?

Leonard,

What happens when the server restart?

Harry,

The logic of the queries themselves can get complicated.

Often beyond the ability to optimize them for reads

Surely the idea about saving the status on write isn't that slow, considering later on you said you didn't think the background process would even take a second to calculate the value.. surely you'd calculate the value at save time AND have the batch process do the time dependent checks every 'whatever' to update the value if needed.

This is a good post that really highlights how developers can really overreach using ORMs and IQueryable. I've seen some true monsters get spit out of both NHibernate and Linq2Sql.

There is something about writing huge sql statements by hand that forces a developer to actually think about the performance implications of a 8 way join.

@John, Really? also personally I'd want to see if the batch process could be triggered by something that can apply the same business rules the saving process would run.. ie, I'd want to trigger batch updates from my .net domain via nhibernate (or whatever).. rather than having to add logic to my .net domain and then make sure I translate that back to a 'native' sql executor.. especially considering I could technically change db product without my .net domain falling on its ass (nhib config changes at most hopefully).. but then I'd probably have to translate the entire native sql to another sql product.

Another solution is to create a table that stores the IsDangerous column values. The IsDangerous column would be updated via triggers whenever any data it depends on gets updated. (I blogged about this approach: igoro.com/.../precomputed-view-a-cool-and-usefu....)

All the triggers could be a pain to maintain, and apparently you can use an indexed view for similar effect.

@Igor

You still need to run daily batch to update time dependent values like "If the prisoner has more than one disciplinary notice in the last 6 months" because 6 months can pass.

Nice walkthrough of a practical solution to a common problem.

Oh and Stephen, I'll Maim That Tune in one...

Rik

"There is no database"

-- Oren Eini, January 2009

"Trying to ignore the realities of data access is going to bite you, hard."

-- Oren Eini, June 2009

@Stephen, I wasn't clear in my comment. No, I do not advocate writing large sql by hand. I just know from experience that ORMs make complex queries deceivingly easy to write.

Being a junior dba who has been on the receiving end of some true monster queries I just wish developers sometimes realized that a dba can't work miracles.

Ah of course, very true..

@rik, hah good eye! ;)

@Alex

In that case, you could change the type of the precomputed column from "bool" to "datetime". It would represent the date until when the prisoner should be considered dangerous.

@Igor

Are you going to have different DateTimes for every time dependant Reason that a prisoner should be considered dangerous?

i.e.

A prisoner should be considered dangerous for 1 week after Suspensions.

A prisoner needs to be considered Dangerous for 10 days after Getting meds?

Prisoner gets 1 week supension. so is dangerous for next 2 weeks. Prisoner takes Meds. Dangerous still 2 weeks out.

Suspension ended early after 2 days. How does Suspension table know to change Date to Medication Date instead of it's date?? All triggers need to know about each other? Or one monster trigger recreating all domain rules in it? With Ayende saying more than 40 rules and that they are dynamic(changing), that seems pretty fragile.

Erik L

@Erik

All good points - I should clarify.

(1) - (3): The precomputed date would be the Max over all those dates.

(4): One good approach is to have a single stored procedure, UpdateIsDangerousRecord. Then, each trigger becomes trivial: it simply calls that stored procedure, passing in the appropriate prisoner ID. Now, we only need to recompute one IsDangerous value on each update, instead of recomputing all IsDangerous values on each read.

The implementation is fairly straightforward, and the performance should be acceptable for many realistic workloads.

Another way to implement a similar solution without triggers is to use an indexed view.

(And by the way, this approach does work in practice. I used it to implement various scoreboards for the online game I develop - http://robozzle.com/. Some scoreboards would otherwise take 60+ seconds to display.)

Really nice walkthrough. Background evaluation is really the best approach here.

But I feel DisciplinaryNoticeInLast2Months :

bool Eval(Prisoner p) {

return p.DisciplinaryNotices.Any(x=>x.Date > DateTime.Today.AddMonths(-2));

}

I'd replace such methods to Prisoner or service methods implementing LINQ query extensions, if I had them in DO (i.e. we described them, but don't have this implemented for now: code.google.com/.../detail?id=93 ). In this case they'd be inlined into the SQL query. Do you consider implementing something like this for NHibernate?

@Igor

"But notice that we don’t have Prisoner.Dangerous column on the result. That is because there is no such column in the table. So what does our good developer do?"

So the problem is to resolve this without a new table, because we don't have access to database, I think.

I would probably go with triggers + daily job for the date dependant rules.

The less business logic in your database / SQL, the better.

For two reasons :

Architectural reason : In the command part, you evaluate the business logic in code, not in the database. Stay consistant.

Performance reason : You usually update data far less often than you query it. If the result of a query cannot change between updates, compute it and store it on updates ! You can then query it at will for free. And there is no more business logic on the query part.

So no triggers for me. No linq or NH for me. No big Sql script for me. It's even simpler this way.

I follow you on this one Ayende.

We have just embarked on a vaguely-similar project at work, and this post has answered a lot of questions for me about NHibernate, specifications, complex querying and eventual consistency. Thank you!

Any particular reason that the prisoner names are all Arab names?!

K,

They don't have Arab names, the names are Hebrew words.

Alex,

That violates PI in a pretty significant ways.

It also still leaves you with the complexity of the queries and the performance hit that you are going to get.

I didn't say this must be done right in Prisoner - I wrote "Prisoner or service methods", and if you love PI, you should obviously use the second way.

Concerning performance: obviously, this may bring a dramatic improvement only if after all substitutions you get a query with the lower complexity. In exactly this case this is obviously not true.

But there is another benefit of such an approach: you're getting more human readable queries. UDFs and views solve nearly the same problem, but in SQL rather than LINQ. You example is just a good illistration of this: I could write e.g.:

from prisoner in Prisoner

where prisoner.ChargedWithViolentCrime

select prisoner

and get a result by a single query.

Btw, I even could automatically cache the result of such call. An example with caching should look like (ok, lef me forget about PI here):

public class Prisoner {

...

public bool ChargedWithViolentCrime {

}

[Translator("ChargedWithViolentCrime", MemberType.PropertyGetter)]

private static Expression(of Func(of Prisoner,bool)) TranslateChargedWithViolentCrime

{

}

...

}

So the effect of this example:

Query result is cached for 1 day. Cache key varies by all query parameters by default ("this" in this case)

Query uses ChargedWithViolentCrime getter, for which LINQ translator is provided. Obviously it, as well as ChargedWithViolentCrime method itself can be implemented externally.

Opinions?

Alex, there are 40 such things, of varying complexity, they change often (based on intelligence work) and are of critical importance to the app.

Do you really think that execute hundreds of queries would be a viable option here?

Why can't the UI force an eager load (via context) of the data it needs? Is that bad from a design standpoint?

Joao,

There is too much data, in too many tables

Well, obviously everything depends on a particular case. As I've mentioned, exactly here I'd prefer background recalculation of persistent property value. But in many other cases I'd prefer this approach.

E.g. I could implement Article.PublishedVersion and ArticleVersion.IsPublished by this way, and use both properties in queries. ArticleVersion.IsPublished is true when some simple condition is satisfied for it, e.g. its publication date is greater than now and it's the latest version of it.

Benefits:

Simpler queries

Normally result can easily be cached in BLL for e.g. 1 minute.

And... Actually I didn't understood the part about executing hundreds of queries. In above example a single query will be executed once per day for a particular Prisoner.Key on accessing the property; if this property (ChargedWithViolentCrime) will be used in queries, it also won't lead to any additional ones.

How many prisoners do you need to manage at the time?

What about fetching all and them go with the beautifully Object Oriented approach.

from prisoners

inner join disciplinarynotices

inner join charges

inner join medicalrecord

inner join withdrawals

inner join request

inner join status

8-table join?, The problem is not hitting 8 tables, give me more details about the volume of data.

Alex,

You are going to have a LOT of properties like that.

I won't touch the caching issue, but generally I don't like that, it violates the single state principle.

You can do this with NHibernate using query only formulas.

JoserFR,

Do you have any idea what this will look like with the resulting Cartesian product?

Concerning 8-table join - obviously, this won't work ;)

Did I say this? Actually these are your words ;)

That's obvious: use of caching normally implies this. And all depends on the costs & real business case here - e.g. if getting precise value is important, caching won't work. But in case with article versions it's normally ok to get cached value.

Really? I.e. mapping of calculated values is pretty obvious feature, but:

Can I do the same with methods? Like GetActiveVersionFor(DateTime time)?

Can I cache such a value separately - e.g. with similar expiration policy?

How many times do you need to see all the prisoner dangerous property?

Correct me If I wrong; there will be various tipically places where the application will need to dangerous property:

1-You need to know if a prisoner is dangerous.

2-You need to display Prisoners with certain characteristics.

3-You need to display ALL the Prisoners in a PAGINATED grid.

4-You need to process all the Prisoners of the database ....

5-You need all the dangerous or you need all the quiet.

For 1,2,3 is ok because the query is limited. The database will pick the better execution plan and if you don't like it, probabillly you could change (I never need to change one).

4 is supposed to be a long time task...

5 is the WORSE case.

Alex,

I am saying that, yes, for the problem that we have here, we have lots of props to check.

About calculated methods, no, NH won't do that. If you need that, go and write query, that would be a much cleaner solution

JoseFR,

An 8 table join is going to produce a horrendous Cartesian product.

Let us say that I want to show the first 20 prisoners.

Using this:

from prisoners

inner join disciplinarynotices -- most dangerous inmates have 2 - 5

inner join charges -- most dangerous inmates have 3

inner join medicalrecord -- most dangerous inmates have at least 10

inner join withdrawals -- most dangerous inmates have at least one

inner join request -- most dangerous inmates have 4

inner join status -- most dangerous inmates have 6

This means that to load 20 prisoners data, you have to load 43,200 rows.

Ok, now I see the problem (hehe).

One more question Ayende... Will be redundant to say that is a dangerous property?

How about this implementation (kind of Alex's way except queries are separated from entities):

public interface IPrisonerDangerousEvaluator

{

<func<prisoner,>

}

public class DisciplinaryNoticeInLast2Months

{

<func<prisoner,>

}

.. and so on. The expressions would be translated to SQL by a LINQ provider, there is still an OO model, queries are separated from entities, LINQ is used as a primary query language instead of Criteria so queries are strongly-typed...but still no better than the 8 joins right?

And I can't even imagine how hard it is to write the LINQ provider.

Suiden,

You missed the part of having tens of them?

Sorry, some browser issue with my post. The return type of method Eval() is

Expression < Func < Prisoner, bool > >

My solution for this is usually to force load of the lazy evaluated properties for the whole data set.

So instead of having SELECT 1 + N(1+1+2+2) you have select 1 + 1 + 1 + 2 + 2

Ending up with queries like:

SELECT DisciplinaryNotices.Prisoner FROM DisciplinaryNotices

<list)

You end up with a lot of queries

Sure but you can Future them using a two stage approach, and thus only make a single roundtrip to the DB.

Will you get super optimal speed, no. Will you get something that is decent, probably. Will you get the same developer efficiency as the first solution, close to it.

I do agree with you that putting this on the writer side might be a better idea. 40+ rules is just nasty.

Futures are cool, but 40+ queries are still slow no matter how you are doing things

I like the IPrisonerDangerousEvaluator interface, and the way you use it! Is this a more general pattern?

Command pattern

We use a similar pattern in one of the apps I work on, only it's for hospitals and not prisons. There are dozens of complex rules for billing - some of the jargon is "group code," "authorized sessions," "service code," and so on - all of which are assigned based on many other variables of a particular service that may exist in the main Activities table or through a number of other one to many tables.

We have a batch job that runs once a night and goes through all of the (non-invoiced) activities and computes or updates these values, if needed. In the case where a user needs to bill an activity in a one-off fashion, we perform these rules for that specific activity.

This approach has worked well for us given that there are dozens of rules and that several rules change every year (or new ones are added, or old ones removed).

Comment preview