The value of self-contained diagnostics

I’m inordinately fond of the Fallacies of Distributed Computing. These are a set of common (false) assumptions that people make when building distributed systems, to their sorrow.

Today I want to talk about one of those fallacies:

There is one administrator.

I like to add the term competent in there as well.

A pretty significant amount of time in the development of RavenDB was dedicated to addressing that issue. For example, RavenDB has a lot of code and behavior around externalizing metrics, both its own and those of the underlying system.

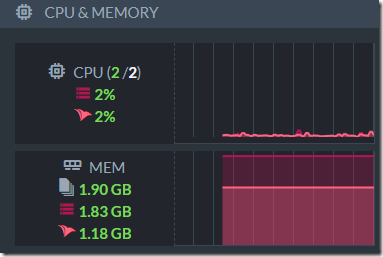

That is a duplication of effort, surely. Let’s consider the simplest stuff, such as CPU, memory and I/O resource utilization. RavenDB makes sure to track those values, plot them in the user interface and expose that to external monitoring systems.

All of those have better metrics sources. You can ask the OS directly about those details, and it will likely give you far better answers (with more details) than RavenDB can.

There have been numerous times where detailed monitoring from the systems that RavenDB runs on was the thing that allowed us to figure out what is going on. Having the underlying hardware tell us in detail about its status is wonderful. Plug that into a monitoring system so you can see trends and I’m overjoyed.

So why did we bother investing all this effort to add support for this to RavenDB? We would rather have the source data, not whatever we expose outside. RavenDB runs on a wide variety of hardware and software systems. By necessity, whatever we can provide is only a partial view.

The answer to that is that we cannot assume that the administrator has set up such monitoring. Nor can we assume that they are able to.

For example, the system may be running on a container in an environment where the people we talk to have no actual access to the host machine to pull production details.

Having a significant investment in a self-contained set of diagnostics means that we aren’t limited to whatever the admin has set up (and has the permissions to view). Instead, we get a consistent experience when digging into issues.
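To make the idea concrete, here is a minimal sketch of what gathering metrics from inside the process can look like. It is written in Python using only the standard library, and it is not RavenDB's actual code. The function name `collect_snapshot`, the `data_dir` parameter, and the dictionary keys are all assumptions made up for illustration.

```python
import os
import shutil
import time


def collect_snapshot(data_dir="."):
    """Gather a few resource metrics from inside the process itself.

    Hypothetical helper: the name and the keys are illustrative only.
    """
    disk = shutil.disk_usage(data_dir)
    snapshot = {
        "timestamp": time.time(),
        "cpu_count": os.cpu_count(),
        # CPU time consumed by this process so far, in seconds.
        "process_cpu_seconds": time.process_time(),
        "disk_total_bytes": disk.total,
        "disk_free_bytes": disk.free,
    }
    # os.getloadavg exists only on Unix-like systems, so it is optional here.
    if hasattr(os, "getloadavg"):
        try:
            snapshot["load_avg_1m"] = os.getloadavg()[0]
        except OSError:
            pass
    return snapshot


if __name__ == "__main__":
    print(collect_snapshot("."))
```

Nothing here needs access to the host or to an external monitoring agent, which is the point: the same snapshot is available whether or not anyone set up monitoring around the process.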

And since we have our own self-contained diagnostics, we can push them out to create a debug package for offline analysis, or even actively take action in response to the state of the system.
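Both uses can be sketched in a few lines. The following Python example is an illustration under stated assumptions, not a description of how RavenDB implements either feature. `build_debug_package`, `choose_action`, the file names inside the archive, the `disk_free_bytes` key, and the 1 GiB threshold are all hypothetical.

```python
import io
import json
import zipfile


def build_debug_package(snapshots, log_lines):
    """Bundle collected metrics and recent log lines into an in-memory zip."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        archive.writestr("metrics.json", json.dumps(snapshots, indent=2))
        archive.writestr("recent.log", "\n".join(log_lines))
    return buffer.getvalue()


def choose_action(snapshot, min_free_bytes=1 << 30):
    """Pick a (hypothetical) reaction to the current state of the system."""
    if snapshot["disk_free_bytes"] < min_free_bytes:
        return "reject_writes"
    return "none"


if __name__ == "__main__":
    snapshot = {"disk_free_bytes": 512 * 1024 * 1024}
    package = build_debug_package([snapshot], ["low disk space detected"])
    print(len(package), "bytes in package; action:", choose_action(snapshot))
```

Because the data is already collected in-process, packaging it for someone to analyze offline and acting on it are both small steps rather than separate integrations.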

If we were relying on external monitoring, we would need to integrate with it, each and every time. The amount of work in such an endeavor is huge, and the quality of the result would vary with each integration.

We build RavenDB to last in production, and part of that is that it needs to be able to survive even outside of the hothouse environment.

Comments

Could you make a NuGet package for other applications?

Andrei,

I'm not sure that I follow you here.

My point here is: you have created special software to monitor the PC/VM/Docker/and so on where RavenDB is installed, because we want to know everything. Could you share this special software as a NuGet package so that I can add it to my project as well? ;-)

Andrei,

I don't think that would be a viable option. The actual process for gathering the information isn't that complex. The key here is aggregating them, pushing to the user, making use of the information to change behavior, etc.

For example, tracking disk performance is critical for a database, but for your application, that is unlikely to be a factor.

I understand that some will be irrelevant. But then, I just ignore the irrelevant ones ;-)
