Building a social media platform without going bankrupt: Part IV–Caching and distribution

In my previous post, we looked at how we process a request for a batch of posts and return the results. The code made no assumptions about where it is running and, aside from specifying whether or not caching is allowed, did no caching work of its own.

Caching matters a lot for performance, to the point where if you aren’t using caching, you are past willful neglect and in the territory of intentional malpractice. The difference can be between needing 18,500 cores to serve a website and needing fewer than 400 to serve a much busier website. If anything, the difference will likely be more pronounced.

Because it is so important, we need to take it into account at the architecture level. Another aspect we have to consider is data distribution. Assuming we want to build a global social media platform, it has to be accessible from multiple locations, which in turn means that we have to consider the fallacies of distributed computing in our system. Locality of reference is another key factor, which means that we have to consider the flow of data in the system.

Let’s assume that we have the following datacenters around the world:

We are using geo routing and the relevant infrastructure to make sure that you’ll always hit the nearest data center to you.

Let’s say that we have a Mr. Beat on our social platform, who is very popular and likes to post controversial messages, such as the need to abolish peppers from your menus. Mr. Beat is located in Australia, so when he posts yet another “peppers have no place in the kitchen” post, the data center in Brisbane is going to be the one to field the request.

A system like that works best if we can avoid any required coordination between the different data centers. As such, we are going to use gossip to share the results among all the data centers. In other words, the post will be written in the Brisbane data center and then replicated to the rest of the data centers. There are several ways that we can implement such a feature.

The simplest way is to replicate all the data to all the data centers. This way, we can always access it from the local store. However, that presents two challenges:

- First, there is latency involved with replicating information across the globe. The Odessa data center may get a request for a post originating in Brisbane. If the network devils decided to have a party, we may not have that particular post yet in Odessa. What do you do then? This is where the format of the post id comes into play. If we can’t find the post in our local storage, we can figure out who owns it (based on the machine id segment) and go ask the owning data center.

- The second problem is that in many cases, the data is purely local. For example, consider this Twitter account. For the most part, everyone who follows it is going to be located nearby. I assume there may be a very small minority of followers who left the area and are still interested in following what is going on, but that is not common. That means that replicating all the information to all the data centers is likely a waste.

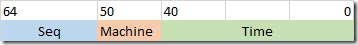

Given these two facts together, we are actually better off using a different model for data distribution and caching. The post id is generated using the following mechanism:

The machine segment in the post id (10 bits long) gives us a good indication of where that post was created. It was originally used only for the purpose of generating unique ids, but we can make far greater use of it. We decide that the data center that created the post is also the one that owns it. In other words, Mr. Beat’s posts are “owned” by the Brisbane data center. The actual posts are held in a key/value store, but there is a difference in how we do lookup by id based on the ownership.
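As a rough illustration of how ownership can be derived from the id, here is a minimal sketch. Only the 10-bit machine segment comes from this post; the rest of the layout (a Snowflake-style id with a 12-bit sequence below the machine bits), the `make_post_id` helper and the mapping from machine ids to data centers are assumptions made for the example.

```python
# Assumed Snowflake-style layout (only the 10-bit machine segment is stated
# in the post; the other widths are illustrative):
#   [ timestamp | 10 bits machine | 12 bits sequence ]
SEQUENCE_BITS = 12
MACHINE_BITS = 10
MACHINE_MASK = (1 << MACHINE_BITS) - 1

# Hypothetical assignment of machine id ranges to data centers.
DATA_CENTER_BY_MACHINE_RANGE = [
    (range(0, 128), "brisbane"),
    (range(128, 256), "odessa"),
]


def make_post_id(timestamp_ms: int, machine: int, sequence: int) -> int:
    """Pack the three segments into one integer id (helper for the example)."""
    return (
        (timestamp_ms << (MACHINE_BITS + SEQUENCE_BITS))
        | (machine << SEQUENCE_BITS)
        | sequence
    )


def machine_id(post_id: int) -> int:
    """Extract the machine segment from a post id."""
    return (post_id >> SEQUENCE_BITS) & MACHINE_MASK


def owner_of(post_id: int) -> str:
    """Return the data center that created, and therefore owns, the post."""
    mid = machine_id(post_id)
    for machines, data_center in DATA_CENTER_BY_MACHINE_RANGE:
        if mid in machines:
            return data_center
    raise LookupError(f"no data center registered for machine id {mid}")
```

With these assumptions, `owner_of(make_post_id(1_611_792_000_000, 5, 7))` resolves to `"brisbane"`, since machine 5 falls in the first range.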

Here is the relevant code:

The idea is that we first check the key/value store in the current data center for the post id. If it isn’t found, we check if we are the owner of the post. If so, the post doesn’t exist or was removed. If it belongs to another data center, we’ll go and fetch it from there. Note that we’ll record a null if needed, so we’ll not need to go and fetch a missing value each time it is requested.

In other words, the first time that an item is requested from the owner data center, we’ll place it in our own key/value store for next time. We do so with an indication to the key/value store that this is a remote value. Remote values may be purged early or be put on a least frequently viewed rotation, etc.
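The lookup flow described above can be sketched roughly as follows. This is not the original code from the post, just a minimal in-memory illustration; `PostStore`, `owner_of` and `fetch_remote` are hypothetical names, and a plain dictionary stands in for the key/value store.

```python
from typing import Callable, Dict, Optional, Set


class PostStore:
    """Toy stand-in for the key/value store of a single data center."""

    def __init__(
        self,
        name: str,
        owner_of: Callable[[int], str],
        fetch_remote: Callable[[str, int], Optional[str]],
    ) -> None:
        self.name = name                  # this data center's name
        self.owner_of = owner_of          # maps a post id to its owner
        self.fetch_remote = fetch_remote  # asks another data center for a post
        self.local: Dict[int, Optional[str]] = {}
        self.remote_ids: Set[int] = set()  # ids cached from other owners

    def get_post(self, post_id: int) -> Optional[str]:
        # 1. Check the local key/value store first. A stored None is a
        #    remembered "missing" answer, so we don't re-fetch it.
        if post_id in self.local:
            return self.local[post_id]

        # 2. If we own the post and don't have it, it doesn't exist
        #    or was removed.
        owner = self.owner_of(post_id)
        if owner == self.name:
            return None

        # 3. Otherwise ask the owner, then keep the answer (even None)
        #    locally, flagged as a remote value so it can be purged early.
        post = self.fetch_remote(owner, post_id)
        self.local[post_id] = post
        self.remote_ids.add(post_id)
        return post
```

The `remote_ids` set is the simplest possible way to carry the "this is a remote value" indication; a real store would more likely keep that flag alongside the value itself.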

There are other things that we need to deal with, of course. Concurrency, for example: if we have multiple concurrent requests for the same missing id, we’ll have multiple chats between data centers to fetch the same value. I don’t think that this is a problem. That is likely to only happen the first time, and then it is cached. We might want to monitor how often remote values are requested and only get them after a certain number of requests, but in all honesty, it probably doesn’t matter.

The key/value store architecture is already likely to cause us to use both disk and memory. We can take advantage of the fact that the remote key isn’t important locally and not persist it to disk right away, or not in a durable format. When we need to remove the value from memory, we can see if it had enough hits to warrant writing locally or not.
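To make the "enough hits to warrant writing locally" idea concrete, here is a small sketch. The `RemoteCache` class, its two dictionaries (standing in for memory and disk) and the threshold of 3 hits are all assumptions for illustration, not a description of any particular key/value store.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class CachedRemotePost:
    body: str
    hits: int = 0  # number of reads served from memory


class RemoteCache:
    """Keeps remote posts in memory; persists only the popular ones on eviction."""

    def __init__(self, persist_threshold: int = 3) -> None:
        self.persist_threshold = persist_threshold  # assumed tuning knob
        self.memory: Dict[int, CachedRemotePost] = {}
        self.disk: Dict[int, str] = {}  # stand-in for durable local storage

    def put(self, post_id: int, body: str) -> None:
        # Remote values go to memory only; nothing is written to disk yet.
        self.memory[post_id] = CachedRemotePost(body)

    def get(self, post_id: int) -> Optional[str]:
        entry = self.memory.get(post_id)
        if entry is not None:
            entry.hits += 1
            return entry.body
        return self.disk.get(post_id)

    def evict(self, post_id: int) -> None:
        # On memory pressure, write the value locally only if it earned it.
        entry = self.memory.pop(post_id, None)
        if entry is not None and entry.hits >= self.persist_threshold:
            self.disk[post_id] = entry.body
```

A rarely read remote post simply vanishes on eviction and is fetched from its owner again if anyone asks for it later.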

The most complex issue, however, is related to the cache itself. We have a strong ownership model, in which the data center that created a post is its owner. What happens when we need to update a post?

Twitter, for example, doesn’t have an Edit feature. That is a great reduction of the complexity we have to deal with, but it isn’t all that simple. An update to the post can also be a delete. For example, let’s say that Mr. Beat posted: “eating steamed Br0ccoli”. Such a violation of community standards cannot stand, even though Mr. Beat cleverly disguised his broccoli tendencies by typo-ing the forbidden term. Mr. Beat is very popular and his posts have likely spread across many data centers. An admin marking the post as deleted also has to deal with the possibility that the post is cached in other locations as well.

We can try to keep track of this, but to be fair, it is easier to simply queue a delete command on all the data centers except the owner. That will ensure that they will remove the cached version and have to re-read it from the owner data center.
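A minimal sketch of that broadcast is shown below. The per-data-center queues are modeled as in-memory deques and the command format `("delete", post_id)` is invented for the example; in practice this would go over whatever messaging layer connects the data centers.

```python
from collections import deque
from typing import Deque, Dict, Iterable, Optional, Tuple

Command = Tuple[str, int]  # hypothetical wire format: (verb, post id)


def queue_invalidation(
    post_id: int,
    owner: str,
    data_centers: Iterable[str],
    queues: Dict[str, Deque[Command]],
) -> None:
    """Queue a delete command on every data center except the owner."""
    for data_center in data_centers:
        if data_center != owner:
            queues[data_center].append(("delete", post_id))


def apply_pending(
    queue: Deque[Command], local_cache: Dict[int, Optional[str]]
) -> None:
    """Drain a data center's queue, dropping cached copies of deleted posts."""
    while queue:
        verb, post_id = queue.popleft()
        if verb == "delete":
            # The next read will miss locally and go back to the owner.
            local_cache.pop(post_id, None)
```

Note that we don't track which data centers actually hold a copy; a delete for a post that was never cached there is simply a no-op.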

Everything that I described so far was about behavior, but we also ought to talk about policies. As part of the work we do when writing a post, we can apply all sorts of interesting policies. For example, we may know that Mr. Beat is popular globally and, as part of writing a post from him, preemptively send that post to all the other data centers. If we have an update to a post, instead of sending a delete command to the rest of the data centers and letting them refresh automatically, we can send the updated post content immediately.

I intentionally don’t want to dig too deeply into those policies. They are important, but they aren’t on the same level as the infrastructure I describe here. They are like the cherry on top, if you like such a thing: they can take something good and make it great. But given that those are policies that can be applied on a per item basis and modified as you go along, there isn’t a reason to start going there yet.

Finally, there is a last aspect to discuss: Expiry.

In most social media, the now is important beyond all else. In other words, you are very unlikely to be seeing posts from two years ago and if you are, it matters a lot less if you have to deal with slightly higher latency. Expiring remote content that is over 3 months old, for example, and not placing that in our local key/value at all can be a great way to handle long tail issues. For that matter, given that old content is rarely accessed, we can also optimize our storage by compressing old posts instead of holding on to them directly.
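As a small sketch of the expiry rule, the check below decides whether a remote post is recent enough to keep in the local key/value store. The 90-day cutoff approximates the "3 months" example above, and the function name and the explicit `created_at` parameter are assumptions for the example.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Roughly the "3 months" from the text; an assumed, tunable value.
MAX_REMOTE_AGE = timedelta(days=90)


def should_cache_remote_post(
    created_at: datetime, now: Optional[datetime] = None
) -> bool:
    """Return True if a remote post is recent enough to store locally."""
    if now is None:
        now = datetime.now(timezone.utc)
    return now - created_at <= MAX_REMOTE_AGE
```

Older posts are still served, they are just fetched from the owning data center each time and pay the extra latency.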

After all of this discussion, I want to point out that you are also likely to want another layer of caching in place. The API calls you make may, in many cases, be good candidates for at least short term caching. In other words, if you put them behind something like Cloudflare, given that we explicitly state what post ids we want, we can set a cache duration of 1 – 3 minutes without needing to worry too much about updates. That can massively reduce the number of requests that we actually have to handle, and it costs a lot less. Under what scenarios would that be useful?

Consider people going to view a popular user, such as Mr. Beat’s page. The list of posts there is going to be the same for anyone, and even a short duration on the cache would massively help our load.
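One way to express such a short cache duration is through the standard HTTP `Cache-Control` response header, which shared caches such as a CDN can honor. The sketch below only builds the header; the function name and the enforced 1 to 3 minute range are assumptions taken from the numbers above, and any CDN-specific configuration is out of scope here.

```python
from typing import Dict


def short_lived_cache_headers(max_age_seconds: int = 120) -> Dict[str, str]:
    """Build response headers allowing shared caches to reuse a response briefly."""
    # Keep the duration within the 1 to 3 minute window discussed in the text.
    if not 60 <= max_age_seconds <= 180:
        raise ValueError("max_age_seconds should be between 60 and 180")
    # "public" lets shared caches store the response; "max-age" bounds how
    # long a stale copy (for example, of a since-deleted post) can be served.
    return {"Cache-Control": f"public, max-age={max_age_seconds}"}
```

The short duration is the trade-off: a deleted or updated post may keep being served for at most that long.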

As you can see, the design of the system assumes caching and actively works to make it possible for you to utilize the cache at multiple levels.

More posts in "Building a social media platform without going bankrupt" series:

- (05 Feb 2021) Part X–Optimizing for whales

- (04 Feb 2021) Part IX–Dealing with the past

- (03 Feb 2021) Part VIII–Tagging and searching

- (02 Feb 2021) Part VII–Counting views, replies and likes

- (01 Feb 2021) Part VI–Dealing with edits and deletions

- (29 Jan 2021) Part V–Handling the timeline

- (28 Jan 2021) Part IV–Caching and distribution

- (27 Jan 2021) Part III–Reading posts

- (26 Jan 2021) Part II–Accepting posts

- (25 Jan 2021) Part I–Laying the numbers

Comments

I realize the image is just for illustration, and not remotely the point of this article, but why would you put two of your datacenters in Greenland and Siberia?

Svick,

Specifically to be far out :-)

Actually, so I would have geo distribution and the icons won't overlap one another
