Designing RavenDB’s debug log system

I have talked before about the roles of logs (for debug statements) in RavenDB. In this post, I want to start talking about the actual concrete design we have of them.

Overall guidelines (in no particular order):

- As little configuration as possible.

- Development / Operational – only two levels we support.

- Idiomatic – should be easy to read the log code and see if it is good or not.

- Builtin understanding of RavenDB mechanics – Scoped to database, etc.

- Fast & Cheap as possible (especially if disabled).

- Memory efficient.

The idea that log configuration should be flexible is a great idea, if you are talking about a generic logging framework. In particular, however, we have just one configuration option, writing to a sized limited text file, that is it. (This isn’t strictly true, we also have an HTTP endpoint(s) that we need to take care of, but that is true enough).

So the log file name format is going to be fixed at:

- 2016-06-26.001.log

- 2016-06-26.002.log

Each of which is going to be roughly 256MB in size.

How this is done? We spin off a single background logging task that has a list of per thread queues, and it is running over all of those queues. Each time we log, we do all of the work of preparing the log entry on the sending thread, and just send the actual buffer to the background logging thread. The idea is that the background logging thread should be focused on just writing to the log file, nothing else. Technically speaking, it would be much better to have a log file per thread, but that would require us to merge them at a later point in time using dedicated tools, and it isn’t really worth it at this point.

Each thread actually have two queues, one that is used to communicate with the background logging thread, and the other to get the buffers it allocated back, for reuse. This allows us to drastically reduce the amount of memory that is being held by logging, and it also gives us a good way to buffer the amount of in flight logging information.

We can process over 400MB/second for logs using this approach, on a laptop, so I’m pretty happy with the performance.

This has some particular behaviors, though. Because we are processing things on a per thread basis, it means that we’ll group all operations from the same thread in the log for a certain duration (by default, 16 messages), even if in practice they are interleaved. This is actually make it easier to read the log, but it make it harder to see when you want to see the interleaving of different operations at the same time (a key aspect of the log in multi threaded environment). You can get the correlation from the log timestamp, of course, but that isn’t the same.

The limit of max 16 operations per thread in the log should limit that, and the amount of cross thread coordination we want to see if likely to be lower in the RavenDB 4.0 codebase, so that is an acceptable compromise.

In terms of the API we have, we define the following:

The idea is that when you create a logger, you specify what is your logger (the BulkInsertHandler, in this case), as well as which database this logger belongs to. This make it easier to read the logs after the fact.

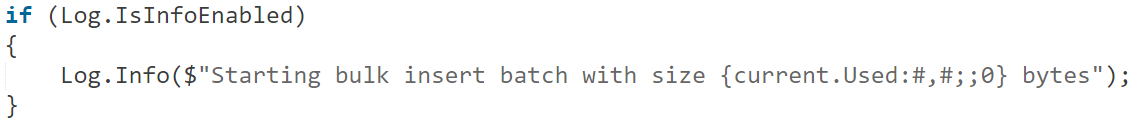

Then there is the usage:

Note that this looks like a regular log usage, but this API is actually very constrained. We have only two log levels:

- Information – The full log

- Operations – Stuff that admins needs to look at

In particular, we don’t have a Warn / Error levels, because they are incredibly hard to get right. What does it means to log an error, if it is handled?

Another thing to note is that this API is actually really primitive, it accepts just a string (relying on the C#’s string interpolation to make it nicer looking), and it assumes that it is always going to be called with a check if the logging is enabled. This enables us to reduce costs when actually logging, because we can skip all those checks and additional API that is there just to save performance when you don’t have the right log level enabled.

In this case, we are forcing the caller to take care of that, making our life much simpler.

Other things that we don’t allow is filtering by logger, hierarchy, etc. We don’t need any of those things, and they cost.

So this is how things stand right now with our logging system.

Comments

You say "In particular, however, we have just one configuration option" so it's not clear from the blog post. Will I be able to configure which directory the logs go into?

Joao, Yes, you can setup: What is the log level, and where do you log to.

Ideally by using a Verbose log (Development in your case) one should be able to see all the units of execution performed as if stepping through the code and using plain English to describe what the application is doing. My favorite analogy: Good logging is like in an Nature TV Show where the Narrator narrates the daily routine of a Lion pack, easy to follow, simple and non scientific ...

I hope the grouping of log lines does not ruin the fluidity of the logs, Logs that are not temporally aligned and mixed on top of that are the worst.

Pop, Yes, the idea is that we aren't going to do that too much, we limit the number of running entries from a single thread to 16, so that isn't too spread out.

So the caller must always check whether info logging is enabled? It is probably useful to write a Fody based extension that rewrites your source code to insert this check. You can then never forget and improves readability.

Sebastiaan, Yes, that is by design. We want to make is explicit when you are paying the cost of logging, and it simplify the design. While I like Fody, I really don't like hidden changes to my code, because that really make it nasty afterward to figure out why certain things happen.

Are you anticipating on opening it up to different type of logger implementations? For example, we would write a log to NewRelic implementation.

M. Schopman, No, it goes directly to file. If you want to get it from there to a 3rd system, they all have ways to watch a file / folder for log files

@Oren: You could develop a roslyn inspection though, which checks if the caller checks for logging enabled.

Sebastiaan, We have that in 3.5, and it works great, but it slows us down somewhat.

Comment preview