RavenDB indexing optimizations, Step III–Skipping the disk altogether

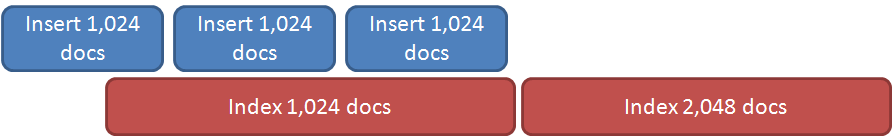

Coming back a bit, before prefetching, we actually had something like this:

With the new prefetching, we can parallelize the indexing and the I/O fetching. That is good, but the obvious optimization is not going to the disk at all. We already have the documents we want in memory, so why not send them directly to the prefetched queue?

As you can see, we didn’t need to even touch the disk to get this working properly. This gives us a really big boost in terms of how fast we can index things. Also note that because we already have those docs in memory, we can still merge separate writes into a single indexing batch, reducing the cost of indexing even further.

![image_thumb[1] image_thumb[1]](http://ayende.com/blog/Images/Windows-Live-Writer/RavenDB-indexing-optimizations-Step-IIIS_CBE4/image_thumb%5B1%5D_thumb.png)
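To make the idea concrete, here is a minimal sketch in Python of what "send them directly to the prefetched queue" could look like. It is not RavenDB's actual code: `DocumentStore`, `Prefetcher`, `put`, `next_batch` and `max_queued` are hypothetical names, and integer etags stand in for whatever ordering the real storage uses. The write still goes to storage first; the in-memory copy is then handed to the indexer, which only reads from disk when the queue doesn't hold the next document it needs.

```python
from collections import deque


class DocumentStore:
    """Toy stand-in for on-disk storage (hypothetical, not RavenDB's API)."""

    def __init__(self):
        self.disk = {}        # etag -> document; pretend this is durable
        self.disk_reads = 0   # counts how often the indexer had to hit "disk"
        self.last_etag = 0

    def write(self, doc):
        self.last_etag += 1
        self.disk[self.last_etag] = doc
        return self.last_etag

    def read_after(self, etag, limit):
        self.disk_reads += 1
        etags = [e for e in sorted(self.disk) if e > etag][:limit]
        return [(e, self.disk[e]) for e in etags]


class Prefetcher:
    """Hands freshly written documents to the indexer without a disk read."""

    def __init__(self, store, max_queued=1024):
        self.store = store
        self.max_queued = max_queued
        self.queue = deque()  # (etag, doc) pairs in write order

    def put(self, doc):
        etag = self.store.write(doc)          # the write to storage still happens
        if len(self.queue) < self.max_queued:
            self.queue.append((etag, doc))    # keep the in-memory copy for indexing
        return etag

    def next_batch(self, last_indexed_etag, limit=128):
        # Drop anything the indexer has already processed.
        while self.queue and self.queue[0][0] <= last_indexed_etag:
            self.queue.popleft()
        # Serve from memory only while the queue continues exactly where
        # the indexer left off; separate writes merge into one batch here.
        batch = []
        expected = last_indexed_etag + 1
        while self.queue and len(batch) < limit and self.queue[0][0] == expected:
            batch.append(self.queue.popleft())
            expected += 1
        if batch:
            return batch
        # Nothing usable in memory (restart, full queue): go back to the disk.
        return self.store.read_after(last_indexed_etag, limit)
```

In this sketch, three separate `put` calls followed by one `next_batch(0)` come back as a single batch with zero disk reads, while a fresh `Prefetcher` over the same store (think: after a restart) gets the same documents by reading them from storage.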

Comments

Interesting

In which build are these indexing optimizations available?

What if there is a power outage while documents in memory (not yet written to disk) are being indexed? Could you run into an issue where a document was indexed, but was lost in the outage before it got a chance to be written to disk?

@PS those documents are also written to disk while/before being indexed. It's just that when the documents come in, they are sent straight to the index queue instead of being written, then read back off the disk, then put in the index queue.

If there was a power outage, a document might be indexed, but I'm pretty sure the startup cleanup would handle that case, because IIRC after a bad shutdown it checks what it was working on to see what finished, and continues from there.

Theo, I recommend using the latest, but they came throughout the RavenDB 2.0 pipeline.

PS, exactly, that is the reason why we have the previous two options. This is an optimization only; it does NOT impact how it operates. Feel free to pull the plug at any time, it will work.
