Data checksums in Voron

Every time that I think about this feature, I am reminded of this song. This is a feature that is only ever going to be used when everything fails. In fact, it isn’t a feature, it is an early warning system whose sole purpose is to tell you when you are screwed.

Checksums in RavenDB (actually, Voron, but for the purpose of discussion, there isn’t much difference) are meant to detect when the hardware has done something bad. We told it to save a particular set of data, and it didn’t do it properly, even though it had been very eager to tell us that this can never happen.

The concept of a checksum is pretty simple: whenever we write a page to disk, we’ll hash it and store the hash in the page. When we read the page from disk, we’ll check whether the hash matches the data that we actually read. If not, there is a serious error. It is important to note that this isn’t related to the way we recover from failures that happen midway through a transaction.
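To make the idea concrete, here is a minimal sketch in Python. It is not Voron’s actual code: the page size, the choice to keep the hash in the first 8 bytes of the page, the use of `blake2b` as a stand-in hash, and the names `seal_page`, `verify_page` and `PageCorruptedError` are all assumptions made for the illustration.

```python
import hashlib

PAGE_SIZE = 4096      # hypothetical page size
CHECKSUM_SIZE = 8     # assumed layout: the first 8 bytes of a page hold the hash


class PageCorruptedError(Exception):
    """Raised when a page does not match the hash stored inside it."""


def page_hash(body: bytes) -> bytes:
    # blake2b is only a stand-in here; any fast hash would do for the sketch.
    return hashlib.blake2b(body, digest_size=CHECKSUM_SIZE).digest()


def seal_page(body: bytes) -> bytes:
    """Build the on-disk image of a page: the hash followed by the body."""
    if len(body) != PAGE_SIZE - CHECKSUM_SIZE:
        raise ValueError("body must fill the page exactly")
    return page_hash(body) + body


def verify_page(page: bytes) -> bytes:
    """Return the page body, or raise if the stored hash does not match."""
    stored, body = page[:CHECKSUM_SIZE], page[CHECKSUM_SIZE:]
    if page_hash(body) != stored:
        raise PageCorruptedError("stored hash does not match the page contents")
    return body
```

Flipping even a single bit anywhere in a sealed page makes `verify_page` raise, which is exactly the “serious error” case described above.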

That is handled by the journal, and the journal is also protected by a checksum, on a per transaction basis. However, this sort of error is both expected and well handled. We know where the data is likely to fail and we know why, and we have the information required (in the journal) to recover from it.
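For contrast, this is roughly what a per transaction checksum in a journal can look like. The record layout (a 4-byte length, an 8-byte hash, then the payload), the `blake2b` hash, and the rule of stopping the replay at the first record that fails validation are assumptions for the sketch, not a description of Voron’s journal format.

```python
import hashlib
import struct

# Assumed record header: payload length, then an 8-byte hash of the payload.
HEADER = struct.Struct("<I8s")


def tx_hash(payload: bytes) -> bytes:
    return hashlib.blake2b(payload, digest_size=8).digest()


def append_tx(journal: bytearray, payload: bytes) -> None:
    """Append one transaction record to an in-memory journal."""
    journal += HEADER.pack(len(payload), tx_hash(payload)) + payload


def recover(journal: bytes) -> list:
    """Return the payloads of all transactions that were written completely."""
    committed, pos = [], 0
    while pos + HEADER.size <= len(journal):
        length, stored = HEADER.unpack_from(journal, pos)
        start = pos + HEADER.size
        payload = bytes(journal[start:start + length])
        if len(payload) != length or tx_hash(payload) != stored:
            break  # torn or partial write: nothing past this point is trusted
        committed.append(payload)
        pos = start + length
    return committed
```

A journal that was cut off in the middle of its last record simply yields every transaction before it. The failure is expected, it is confined to the tail, and everything needed to deal with it is in the journal itself.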

This is different. This is validating that data that we have successfully written to disk, and successfully flushed, is still there in the form that we wrote it. Corruption can happen because the hardware outright lied to us (usually with cheap hardware) or because of some failure (cosmic rays are just one of the many options that you can run into). In particular, if you are running on crappy hardware, this can happen just because of overheating or too much load on the system. As a hint, another name for crappy hardware is a cloud machine.

There are all sorts of ways that this can happen, and the literature makes for very sad reading. In a CERN study, about 900 TB were written in the course of six months, and about 180 MB resulted in errors.

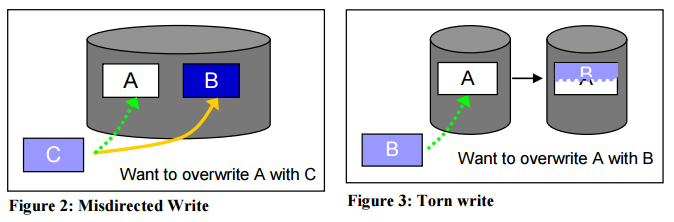

The following images are from a NetApp study, which shows that over a period of 2.5 years, 8.5% of disks had silent data corruption errors. You can assume that those are not cheap off-the-shelf disks. Some of the causes are great reading if you are a fan of mysteries and puzzles, but kind of depressing if you build databases for a living (or rely on databases in general).

Those are just the failures that had interesting images, mind, there are a lot more there. But from the point of view of the poor database, it ends up being the same thing. The hardware lied to me. And there is very little that a database can do to protect itself against such errors.

Actually, that is a lie. There is a lot that a database can do to protect itself. It used to be common to store critical pages in multiple locations on disks (usually making sure that they are physically far away from one another), as a way to reduce the impact of the inevitable data corruption. This way, things like the pages that describe where all the rest of the data in the system reside tend to be safe from most common errors, and you can at least recover a bit.

As you probably guessed, Voron does checksums, but it doesn’t bother to duplicate information. That is already something that is handled by RavenDB itself. Most of the storage systems that deal with data duplication (ZFS has this notion with the copies property, for example) were designed to work primarily on a single node (such as file systems that don’t have distribution capabilities). Given that RavenDB replication already does this kind of work for us, there is no point in duplicating it at the storage layer. Instead, the checksum feature is meant to detect a data corruption error and abort any future work on suspect data.

In a typical cluster, this will generate an error on access, and the node can be taken down and repaired from a replica. This serves as both an early warning system and as a way to make sure that a single data corruption in one location doesn’t “infect” other locations in the database, or worse, across the network.

So now that I have written oh so much about what this feature is, let us talk a bit about what it is actually doing. Typically, a database would validate the checksum whenever it reads the data from disk, and then trust the data in memory for as long as it resides in its buffer pool (it isn’t really safe to do that either, but let us not pull up the research on that, otherwise you’ll be reading the next post on papyrus).

This is simple, easy, and reduces the number of validations you need to do. But Voron doesn’t work in this manner. Instead, Voron maps the entire file into memory and accesses it directly. We don’t have a concept of reading from disk, or a buffer pool to manage. Instead of doing the OS’s work, we assume that it can do what it is supposed to do and concentrate on other things. But it does mean that we don’t control when the data is loaded from disk. Technically speaking, we could have tried to hook into the page fault mechanism and do the checks there, but that is so far outside my comfort zone that it gives me the shivers. “Wanna run my database? Sure, just install this rootkit and we can now operate properly.”

I’m sure that this would be a database administrator’s dream. I mean, sure, I can package that in a container, and then nobody would probably mind, but… the insanity has to stop somewhere.

Another option would be to validate the checksum on every read. That is possible, and quite easy, but it would incur a substantial performance penalty just to ensure that something that shouldn’t happen didn’t happen. Doesn’t seem like a good tradeoff to me.

What we do instead is make the best of it. We keep a bitmap of all the pages in the data file, and we validate each page the first time that we access it (there is a bit of complexity here regarding concurrent access, but we are racing it to success, and at worst we’ll end up validating the page multiple times). Afterward, we know that we don’t need to do that again. Once we have loaded the data into memory even once, we assume that it isn’t going to change beneath our feet. This isn’t an axiom, and there are situations where a page can be loaded from disk, be valid, and then become corrupted on disk. The OS will discard it from memory at some point and then read the corrupt data again, but this is a much rarer circumstance than before.
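The bitmap approach can be sketched as follows. This is an illustration under stated assumptions rather than Voron’s implementation: the class name `LazyValidatingFile`, the page layout (an 8-byte `blake2b` hash at the start of each 4 KB page) and the `validations` counter are all made up for the example. The `data` argument can be anything that supports slicing, such as an `mmap.mmap` object or a `bytearray`.

```python
import hashlib

PAGE_SIZE = 4096      # hypothetical page size
CHECKSUM_SIZE = 8     # assumed layout: the first 8 bytes of a page hold the hash


class PageCorruptedError(Exception):
    pass


def page_hash(body: bytes) -> bytes:
    # blake2b is only a stand-in hash for the sketch.
    return hashlib.blake2b(body, digest_size=CHECKSUM_SIZE).digest()


class LazyValidatingFile:
    """Validates a page's checksum only the first time the page is accessed."""

    def __init__(self, data):
        self._data = data
        page_count = len(data) // PAGE_SIZE
        self._validated = bytearray((page_count + 7) // 8)  # one bit per page
        self.validations = 0  # only here to make the behavior observable

    def _is_validated(self, n: int) -> bool:
        return bool(self._validated[n // 8] & (1 << (n % 8)))

    def get_page(self, n: int) -> bytes:
        start = n * PAGE_SIZE
        page = bytes(self._data[start:start + PAGE_SIZE])
        if not self._is_validated(n):
            self.validations += 1
            if page_hash(page[CHECKSUM_SIZE:]) != page[:CHECKSUM_SIZE]:
                raise PageCorruptedError(f"page {n} failed checksum validation")
            # No lock: two threads may validate the same page, or one update to
            # this byte may be lost. Bits are only ever set, so the worst case
            # is that a page gets validated again later.
            self._validated[n // 8] |= 1 << (n % 8)
        return page
```

Reading the same page twice runs the hash only once. The sketch also shows the tradeoff described above: if a page is damaged after its bit has been set, `get_page` returns the damaged bytes without complaint.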

The fact that we have recently verified that the page is valid is a good indication that it will remain valid, and anything else has too much overhead for us to be able to use (and remember that we also have those replicas for those extremely rare cases).

Independent of this post, I just found this article, which injected errors into the data of multiple databases and examined how they behaved. Fascinating reading.

Comments

Have you subjected RavenDB to Jepsen and this "CORDS" disk error injection tool discussed in the article you linked to?

Alex, Rachis (RavenDB's Raft impl) has passed Jepsen tests, yes. We didn't have the chance to test CORDS yet.
