Lies, Service Level Agreements, Trust and failure modes

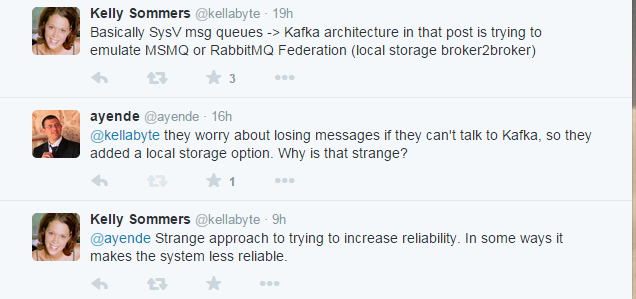

I had a very interesting discussion with Kelly Sommers on Twitter. But 140 characters isn’t really enough to explain things. Also, it is an interesting topic in general.

Kelly disagreed with this post: http://www.shopify.ca/technology/14909841-kafka-producer-pipeline-for-ruby-on-rails

You can read the full discussion here.

The basic premise is that there is a highly reliable distributed queue that is used to process messages, but because they didn’t have operational experience with it, they used a local queue to store the messages before sending them over the network. Kelly seems to think that this decreases reliability. I disagree.

The underlying question is simple: when do you consider it acceptable to lose a message? If returning an error to the client is fine, sure, go ahead and do that if you can’t reach the cluster. But if you are currently processing a 10 million dollar order, that is going to kinda suck, and anything that you can do to reduce the chance of that happening is good. Note the key part of this statement: we can only reduce the chance of this happening, we can’t ensure that it never does.

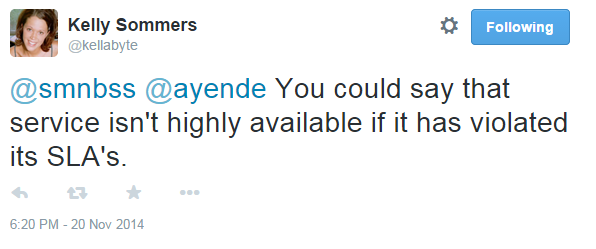

One way to try that is to get a guaranteed SLA from the distributed queue. Once we have that, we can rely on it to work. This seems to be what Kelly is aiming at:

And that would be true, if you could rely on SLAs. Just this week we had a multi hour, multi region Azure outage. In fact, outages, including outages that violate SLAs, are unfortunately common.

In fact, if we look at recent history, we have:

- February 2012 – Azure – incorrect leap year calculation took down multiple regions.

- October 2012 – AWS – memory leak due to misconfiguration took down a single availability zone, API throttling caused other availability zones to be affected.

- December 2012 – AWS – a developer was running against production, and deleted some key data, resulting in Netflix (among others) being unable to stream video.

- August 2013 – Azure – more servers brought online to increase capacity caused a misconfigured network appliance to believe that it was under attack, resulting in Azure Europe going dark.

There are actually more of them, but I think that 5 outages in 2 years is enough to show a pattern.

And note specifically that I’m talking about global outages like the ones above. Most people don’t realize that complex systems operate in a constant mode of partial failure. If you ever read an accident investigation report, you’ll know that there is almost never just a single cause of failure. For example, the road was slippery and the driver was speeding and the ABS system failed and the road barrier foundation had rotted since being installed. Changing even one of those would turn the accident from a fatal crash into a “honey, we need a new car”, or into something that didn’t happen at all.

You can try to rely on the distributed queue in this case, because it has an SLA. And Toyota also promises that your car won’t suddenly accelerate into a wall, but if you had a Toyota Camry in 2010… well, you know…

From my point of view, saving the data locally before sending it over the network makes a lot of sense. In general, the local state of the machine is much more stable than the network. And if there is an internal failure in the machine, it is usually too hosed to do anything about anyway. I might try to write to disk, and then write to the network even if the disk write fails, because I want to do my utmost to not lose the message.

Now, let us consider the possible failure scenarios. I’m starting all of them with the notion that I just got a message for a 10 million dollar order, and I need to send it to the backend for processing.

- We can’t communicate with the distributed queue. That can be because it is down, hopefully that isn’t the case, but from our point of view, if our node became split from the network, this has the same effect. We are writing this down to disk, so when we become available again, we’ll be able to forward the stored message to the distributed queue.

- We can’t communicate with the disk. Maybe it is full, or there is an error, or something like that. We can still talk to the network, so we place the message in the distributed queue, and we go on with our lives.

- We can’t communicate with the disk, we can’t communicate with the network. We can’t keep it in memory (we might overflow the memory), and anyway, if we are out of disk and network, we are probably going to be rebooted soon anyway. SOL, there is nothing else we can do at this point.

Note that the first case assumes that we actually do come back up. If the admin just blew this node away, then the data on that node isn’t coming back, obviously. But since the admin knows that we are storing things locally, s/he will at least try to restore the data from that machine.
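The three scenarios above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code from the Shopify post: `StoreAndForward`, `QueueUnavailable`, the `send_remote` callable and the one-file-per-message spool directory are all hypothetical names and choices made for this example.

```python
import json
import os
import uuid


class QueueUnavailable(Exception):
    """Raised by the (hypothetical) remote queue client when the cluster is unreachable."""


class StoreAndForward:
    def __init__(self, spool_dir, send_remote):
        self.spool_dir = spool_dir
        # send_remote(message) is an assumed callable that raises QueueUnavailable on failure.
        self.send_remote = send_remote
        os.makedirs(spool_dir, exist_ok=True)

    def _write_local(self, message):
        # Write to a temp file, fsync, then rename, so a crash never leaves a half-written message.
        path = os.path.join(self.spool_dir, message["id"] + ".json")
        tmp = path + ".tmp"
        with open(tmp, "w") as f:
            json.dump(message, f)
            f.flush()
            os.fsync(f.fileno())
        os.replace(tmp, path)
        return path

    def submit(self, body):
        message = {"id": str(uuid.uuid4()), "body": body}
        stored = None
        try:
            stored = self._write_local(message)
        except OSError:
            pass  # Scenario 2: the disk failed, but the network may still work.
        try:
            self.send_remote(message)
        except QueueUnavailable:
            if stored is None:
                # Scenario 3: neither disk nor network. Nothing else we can do.
                raise RuntimeError("message lost: neither disk nor queue is reachable")
            return "stored"  # Scenario 1: kept on disk, forwarded later.
        if stored is not None:
            os.remove(stored)  # Delivered, so the local copy is no longer needed.
        return "sent"

    def forward_pending(self):
        # Call this when connectivity returns; stops at the first failure.
        forwarded = 0
        for name in sorted(os.listdir(self.spool_dir)):
            if not name.endswith(".json"):
                continue
            path = os.path.join(self.spool_dir, name)
            with open(path) as f:
                message = json.load(f)
            try:
                self.send_remote(message)
            except QueueUnavailable:
                break
            os.remove(path)
            forwarded += 1
        return forwarded
```

Each message carries a unique `id` because a crash between sending and deleting the local copy would cause the same message to be forwarded twice, so the receiving side has to be able to recognize duplicates.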

We are safer (not safe, but safer than without it). The question is whether this is worth it. If your messages aren’t actually carrying financial information, you can probably just drop a few messages as long as you let the end user know about that, so they can retry. If you really care about each individual message, if it is important enough to go the extra few miles for it, then the store and forward model gives you a measurable level of extra safety.

Comments

Hmm, it could be more complicated:

you are storing msg locally, you are returning "success" to caller, he is thinking "my 10M$ order will be soon processed" - well that could be not true, and "hiding" 10M$ could cost a lot in terms of interests, .....

after storing on local store reply is lost, user retries, gets on another node that has connection to the queue, msg goes forward, and after few hours gets to queue again from the 1st attempt, is transaction performed again ? (OK, this is probably solvable if msgs have some kind of globally unique ids)

Bit of a weird conversation. I mean you're saying "save local then send to 3rd party", she's saying "just send to 3rd party". 90% of the process is identical in both your flows except for the local bit right in the beginning.

There's no way it makes it any less reliable at all. At worst it's just as unreliable, at best it's slightly more reliable.

I agree, I've found a lot of people who think an SLA of 99.9% (as an example) means "the service will be online 99.9% of the time" instead of the reality, which is "if the service is not online 99.9% of the time we will partially refund you". For some time Google went on and offered a 100% uptime SLA, which obviously cannot guarantee absolute perfection (which is not possible): it was just a way to say "look, we are so confident in our systems that, even knowing in advance we will fail sometimes, those times will be so rare that we will refund you no problem". That was basically a PR move by them.
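To put the 99.9% example in concrete terms, here is a small arithmetic sketch of how much downtime such an SLA still permits before any refund is owed. The function name and the 30-day billing period are assumptions for illustration; real SLAs define their own measurement windows.

```python
def allowed_downtime_minutes(sla_percent, period_days):
    """Minutes of downtime a given SLA percentage tolerates over a period."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (100 - sla_percent) / 100


# A 99.9% SLA over a 30-day month tolerates roughly 43 minutes of downtime.
print(round(allowed_downtime_minutes(99.9, 30), 1))
```

A multi hour outage blows well past that budget, which is the point being made: the SLA describes compensation, not behavior.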

If your local copy is susceptible to permadeath (in the case of disk failure, VM recycling, mobile phone being stolen or dropped in the loo), then you're on somewhat shaky ground, and you've given yourself a false sense of security.

I'd rather fail the request if I can't write to a durable store.

You are both correct. It depends on the case and the customer if the extra bit of complexity is acceptable or required. YAGNI and KISS.

Harry, You can't really fail the request. Consider the case where you have done everything successfully, but are unable to talk to the client. From the client point of view, the request didn't succeed. This false negative for failure means that you need to already build facilities for recovery.

Ayende, how about transactions in dbs? Once you commit it but before receiving the ack, the network is down. The transaction is committed anyway. Don't get into Byzantine problem.

Scooletz, That is exactly the same problem, yes.

Sounds to me like a simple case of "[availability] in depth". This is how MSMQ works, it stores the copy locally (in memory or disk, depending upon the configuration) before forwarding to the remote queue. I like the idea of not letting one of the two failures block the other (ie don't let local disk failure prevent the network communication from occurring).

Additionally, you may be given an SLA which guarantees a refund upon loss of service, but even if we ignore the $10 million order it's most likely that no pro-rated refund is going to equal your loss of revenue (unless of course your business plan is to not make more $$ than you spend).

@Scooletz, I had to look up Byzantine problem, but it seems to me that messaging systems are prone to this effect anyhow due to at-least-once guarantees. It seems to me that properly implementing idempotent message handling would mitigate this issue.

Yup...coz $10M transactions are dependent on a single message on a queue, and somebody issuing that command will simply go to a competitor if they get a client error - assuming the client can't transparently retry or refer a number to talk to an actual human being before...erm...taking his $10M order elsewhere.
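A minimal sketch of the idempotent handling mentioned here, assuming every message carries a globally unique `id` as suggested in an earlier comment. `IdempotentHandler` is a hypothetical name, and the in-memory set is a simplification: a real receiver would need to record processed ids in a durable store.

```python
class IdempotentHandler:
    def __init__(self, handle):
        self.handle = handle  # assumed callable that performs the actual work
        self.seen = set()     # assumption: in-memory only; use durable storage in practice

    def receive(self, message):
        # A duplicate delivery (for example, a late forward from a local store) is ignored.
        if message["id"] in self.seen:
            return False
        self.handle(message)
        self.seen.add(message["id"])
        return True


charged = []
handler = IdempotentHandler(charged.append)
order = {"id": "order-1", "body": "charge customer X"}
handler.receive(order)  # processed
handler.receive(order)  # same id again: skipped
print(len(charged))     # the work ran once
```

With this in place, the retry-then-late-forward case produces two deliveries but only one transaction.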

Ashic, Let me rephrase then, you can have a BIG order dependent on a single confirmation message that got lost, resulting in a critical shipment being delayed, resulting in big fines.

Trivial example that happens all the time, international money orders that can randomly take 5 more days because... well, who knows.

Sure, there are all kind of compensating actions that you can take, but that doesn't mean that you want to. Not if you can add something extra to handle things better. Not 100%, but what is?

@Ayende, @Mark Miller One thing is to be unable to tell in some cases whether the service processed the order (use: retry + idempotent receiver), the other thing is to store it on a non-HA machine. If you want to respond to the client with OK, you must provide something better than storing 10$ on the local machine. It's even more unreliable than a cluster.

Scooletz, I'm not suggesting storing it only on the local machine. However, the cluster can be unavailable, in which case you can still get something from the local machine.

If the cluster is unavailable it is better to fail the request than to store it locally and succeed the request.

"You can't really fail the request." - something has gone terribly wrong. The client should wait for a response indicating that the request was successful. Lack of response should be handled as if it were an error response (with proper idempotent requests to correct for silent success). The server should not send a success response unless the message is handled or safely stored for later handling. A single disc is not safely stored.

If the cluster goes down and we instead store on disc to be sent later, we are making a tradeoff: gaining uptime but losing the guarantee that we actually handle a request that the client thinks is handled.
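The client behavior described here (treat a missing response as an error, and retry with an idempotent request) might look roughly like the sketch below. `NoResponse`, `submit_with_retry` and the `send` callable are hypothetical names for this example, and the fixed attempt count is an arbitrary choice.

```python
import uuid


class NoResponse(Exception):
    """Raised by the (hypothetical) transport when no reply arrives in time."""


def submit_with_retry(send, body, attempts=3):
    # The id is generated once and reused on every retry, so a server that
    # deduplicates by id will not process a "silent success" twice.
    request_id = str(uuid.uuid4())
    for _ in range(attempts):
        try:
            return send({"id": request_id, "body": body})
        except NoResponse:
            continue  # lack of response is handled exactly like an error response
    raise NoResponse("no confirmation after %d attempts" % attempts)
```

Whether the server may answer with success after writing only to a local disk is the part under dispute in this thread; the retry logic is the same either way.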

Nate, Take a look at a good example of how you can prevent cascading failures using this type of architecture: http://blog.xamarin.com/xamarin-insights-and-the-azure-outage/

For clarity I'll define reliability as "The server will (eventually) do what it responded that it would do" and uptime as "I can tell the server to do things".

I think Xamarin Insights data collection is a good example of a system in which it makes sense to sacrifice reliability to gain uptime. End users don't care if metrics are recorded, so downtime is likely to be effectively no different from unreliable request handling. The example of a financial transaction is a place where I would prefer reliability over uptime, because an end user certainly will care if their transaction is processed and wants to be notified if it can't be.

The design mentioned in the article gains uptime at the loss of reliability but does not acknowledge that such a tradeoff is being made.

One can argue that it is only losing a little reliability, but it is still losing it.

Nate, Why would you prefer reliability for financial transactions? Nothing else does.

For example, if you write a check, that is the same as doing a disconnected transaction that may eventually be executed. In fact, pretty much any financial operation is built with the assumption that time doesn't matter. The common example is "the check is in the mail", and caring only about when it was posted vs. when it was drawn.

I guess the main point I was trying to get at was that there is a tradeoff. I don't necessarily feel as strongly about what cases the tradeoff is worth making... it is an interesting discussion though.

Let's imagine that I am implementing a web store. I have an asynchronous system which bills customers, I just need to drop a message on a guaranteed queue that says "charge customer X" and eventually I'm confident the bill will be sent. I have another asynchronous system which allows me to fulfill orders by dropping a message on a different queue. I trust that if I put a message on the queue, eventually the appropriate actions are taken. The queue suffers from occasional downtime where I am not able to enqueue messages.

Depending on the order in which I drop these messages on the respective queues I might either fulfill an order which is never billed, or bill an order which is never fulfilled and I'll need to make a decision there (along with some best effort at rollback etc). One thing I do know, though, is that I'd much rather tell the customer I can't sell him something and to please come back later if the queue goes down than to keep a message on a single disk and hope that the queue comes back before the disk fails.

Local message store would certainly not work for a voting system. Such software would be called many names (but certainly not 'reliable') if votes were accepted and not sent to the central database before closing time.

Rafal, How do you think elections work?

ok, bad example - you'd be kicked much harder for not accepting votes at all ;)

Rafal, But that is a really good example. Imagine that this is election day, and the phone to report to the head election office is down. You take the votes on a piece of paper. If someone now came and burned down the building, you would lose all those votes.

Does this mean that you shouldn't take the votes?

Well, recently we had such elections, the software malfunctioned and failed to gather all the data on time - the cleanup procedures took a long time and of course there were accusations about faking the election and some insinuations about additional time necessary for adjusting some numbers here or there... so probably there's no perfect solution, but certainly not being able to vote because of a software error would be much worse than that. Anyway, in such a case I think reliability would require a backup plan that would be a part of the process, there's no option for 'rollback' in case of elections.

I love that the link to "that complex systems operate in a constant mode of partial failure" gives me an HTTP 404 error.

Chris, Works for me

I think this is that referenced article: http://web.mit.edu/2.75/resources/random/How%20Complex%20Systems%20Fail.pdf
