Tweaking xUnit

One of the interesting challenges that we have with RavenDB is the number and duration of our tests.

In particular, we currently have over three thousand tests, and they take hours to run. We do a lot of stuff there (“let us insert a million docs, write a map/reduce index, query on that, then do a mass update, see what happens”, etc.). We also do a lot of stuff that really can’t be emulated easily: if I’m testing replication for a non-existent target, I need to check the actual behavior. Oh, and we’re probably doing silly stuff in there, too.

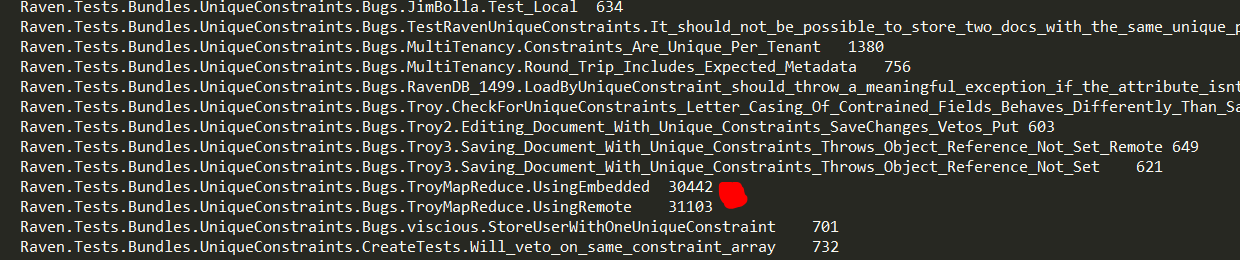

In order to try to increase our feedback cycle times, I made some modifications to xUnit. It is now going to record the test duration of the tests, the results look like that:

You can see that Troy is taking too long. In fact, those tests currently expose a bug that results in a timeout exception, which is why they take so long.

But this is just to demonstrate the issue. The real power here is that we also use this data when deciding how to run the tests: we simply sort them by how long they previously took to run. If we don’t have a record for a test, we give it a run time of -1.
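The ordering rule is simple enough to sketch. The following is a minimal Python illustration, not the actual xUnit modification (which is .NET code); it assumes the recorded durations are available as a mapping from test name to seconds, and the names `order_tests` and `NO_RECORD` are hypothetical.

```python
# Minimal sketch of duration-based test ordering (hypothetical names, not xUnit's API).
# Assumption: recorded durations are a dict of test name -> seconds from earlier runs.
NO_RECORD = -1  # tests without a recorded duration sort before everything else


def order_tests(tests, durations):
    """Return tests sorted by their recorded duration, fastest first.

    Tests missing from `durations` get NO_RECORD (-1), so they run first.
    Python's sort is stable, so ties keep their original discovery order.
    """
    return sorted(tests, key=lambda name: durations.get(name, NO_RECORD))


if __name__ == "__main__":
    durations = {"Troy.Query": 120.0, "Docs.Insert": 0.4, "Index.MapReduce": 3.2}
    tests = ["Troy.Query", "Index.MapReduce", "New.Test", "Docs.Insert"]
    print(order_tests(tests, durations))
    # ['New.Test', 'Docs.Insert', 'Index.MapReduce', 'Troy.Query']
```

Using -1 as the default, rather than a separate "unknown" bucket, lets a single sort key push never-recorded tests to the front.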

This has a bunch of interesting implications:

- The faster tests are going to run first. That means that we’ll get earlier feedback if we broke something.

- New tests (which have never had a chance to run) will run first, and those are where problems are more likely anyway.

- We only record this for passing tests, which means that failed tests will run first as well.
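The third point depends on how the record is kept. One way to get that behavior, sketched below in Python under the assumption that the record is rewritten from scratch after every run and stored as a JSON file, is to keep durations for passing tests only; a test that failed then has no entry and falls back to -1 on the next run. The function names and the file format are hypothetical.

```python
import json

# Hypothetical sketch: persist durations for passing tests only.
# Assumption: the record is rebuilt from scratch after every run, so a test
# that failed has no entry next time and therefore sorts first (as -1).


def record_durations(results):
    """Build the new duration record from (name, passed, seconds) tuples."""
    return {name: seconds for name, passed, seconds in results if passed}


def save_record(path, record):
    with open(path, "w", encoding="utf-8") as f:
        json.dump(record, f, indent=2, sort_keys=True)


def load_record(path):
    try:
        with open(path, encoding="utf-8") as f:
            return json.load(f)
    except FileNotFoundError:
        return {}  # first run: nothing recorded, every test gets -1


if __name__ == "__main__":
    results = [
        ("Docs.Insert", True, 0.4),
        ("Replication.MissingTarget", False, 15.0),  # failed: not recorded
        ("Index.MapReduce", True, 3.2),
    ]
    record = record_durations(results)
    print(record)  # {'Docs.Insert': 0.4, 'Index.MapReduce': 3.2}
    # On the next run the failed test is missing, so it defaults to -1.
    print(record.get("Replication.MissingTarget", -1))  # -1
```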

In addition to that, this will also give us better feedback on which tests are slow, so we can actually give them some attention and see whether they really need to be slow or can be fixed.

Hopefully, we can find a lot of the long-running tests and just split them off into a separate test project, to be run at a later time.

The important thing is, now we have the information to handle this.

Comments

The prioritisation of tests has been discussed many times before, for instance by Michael Feathers. It's a great tool for bigger test suites. The strategy of running recently failed and new tests first is quite good as well: fail as fast as possible, I'd say.

The failed captcha made my comment appear twice, with different time formats. Something is wrong.

We are currently running 11,347 unit tests (xUnit) and 2,117 spec tests (Machine.Specifications) locally before every check-in, and again on the build integration server after checking in.

As far as xUnit reporting goes, you can have it generate XML reports that include the time for each [Fact]. TeamCity also reports the times, and we occasionally go through the list and check whether we can improve the run time of the slow ones.
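Going through such a report can be scripted. The Python sketch below lists the slowest tests in an xUnit XML report; it assumes each `<test>` element carries `name`, `time` (in seconds) and `result` attributes, as in the xUnit.net v2 XML output format. The sample report and the `slowest_tests` function are hypothetical.

```python
import xml.etree.ElementTree as ET

# Sketch: list the slowest tests in an xUnit XML report.
# Assumption: each <test> element has "name", "time" (seconds) and "result"
# attributes, as in the xUnit.net v2 XML output format.


def slowest_tests(xml_text, top=10):
    root = ET.fromstring(xml_text)
    timings = [
        (test.get("name"), float(test.get("time", "0")), test.get("result"))
        for test in root.iter("test")  # iter() finds <test> at any depth
    ]
    timings.sort(key=lambda t: t[1], reverse=True)
    return timings[:top]


if __name__ == "__main__":
    # Hypothetical sample report.
    report = """
<assemblies>
  <assembly name="Sample.Tests.dll">
    <collection name="Sample">
      <test name="Troy.Query" type="Troy" method="Query" time="120.5" result="Fail" />
      <test name="Docs.Insert" type="Docs" method="Insert" time="0.4" result="Pass" />
      <test name="Index.MapReduce" type="Index" method="MapReduce" time="3.2" result="Pass" />
    </collection>
  </assembly>
</assemblies>
""".strip()
    for name, seconds, result in slowest_tests(report, top=2):
        print(f"{seconds:8.2f}s  {result:4}  {name}")
```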

Of course, with this amount of tests, the time it takes to run them becomes an issue, especially since we are trying to do "small commits" and thus check in about 8 times per developer per day. Now imagine if the test suite took 30, 40, or 50 minutes. You would not be doing a lot of development anymore.

So every time we hit an unbearable integration time (more than 3-6 minutes), we invested in improving the build. Some of our most effective measures were:

- Parallel building

- Running the tests for all assemblies in parallel

- Using an in-memory database and creating the NHibernate configuration only once per assembly

- Reflection caching

Anyway, these all boil down to:

- Doing the same things fewer times (for example, once per assembly instead of once per test)

- Doing things in parallel
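Both ideas can be shown together in a small Python sketch: expensive setup is created once per suite instead of once per test, and independent suites run concurrently. `create_config` is a hypothetical stand-in for something like building an NHibernate configuration; threads are used here only for brevity, and for CPU-bound suites separate processes (for example, one test-runner process per assembly) would be the more realistic choice.

```python
from concurrent.futures import ThreadPoolExecutor


def create_config(suite):
    # Hypothetical stand-in for expensive setup (e.g. building an ORM
    # configuration for an in-memory database).
    return {"suite": suite, "db": "in-memory"}


def run_suite(suite, tests):
    """Run all tests of one suite, sharing a single config between them."""
    config = create_config(suite)  # built once per suite, not once per test
    passed = sum(1 for test in tests if test(config))
    return suite, passed, len(tests)


def run_all(suites, workers=4):
    """Run independent suites concurrently; results come back in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(run_suite, name, tests) for name, tests in suites.items()]
        return [f.result() for f in futures]


if __name__ == "__main__":
    def uses_memory_db(config):
        return config["db"] == "in-memory"

    def always_fails(config):
        return False

    suites = {
        "Core.Tests": [uses_memory_db] * 3,
        "Web.Tests": [uses_memory_db, always_fails],
    }
    for suite, passed, total in run_all(suites):
        print(f"{suite}: {passed}/{total} passed")
```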

I think the notion of "fast feedback" by prioritizing is interesting and useful, and it's probably the thing which we will invest in next.

It seems to me (I'm guessing) that you (Oren) are currently not executing the tests (massively) in parallel. Why is that? Is it too costly to deploy the build to multiple (virtual?) machines and then test in parallel?

Just a quick addendum: our build/integration time would be about 40 minutes if we had not continuously invested in improving it.

@bruno And 3-6 minutes before every check-in seems acceptable? There is no way I could put up with that. I can understand doing it on a build server, which shouldn't block developer time, but doing it locally seems like overkill. Do you really need to run all the tests before every commit? What about something like NCrunch?

@Martin The problem with "pre-commit" or "pre-tested commits" is that, if they fail, you either a) need a copy of the code at the version you tried to check in, and fix that one, or b) don't commit, fix the problem within your further-progressed code, and then finish it.

The problem with a) is that it takes quite a while to get a second copy of the "same" source (for us about 2-3 minutes); also consider loading the solution in Visual Studio, restoring NuGet packages, etc.

With b), the commits aren't that small anymore. We've also tried it this way, and you often end up performing badly, because with a failed commit you have to handle two issues at once instead of one.

Since we do commit reviews, we spend the "waiting time" fairly productively. The commit review is not primarily about ensuring the checked-in code lives up to our standards, but even more about spreading information, syncing up with other developers, planning the next step, and so on.

I've had a look at NCrunch and it looks quite interesting. Thanks for the tip. I don't think its main advantage would be executing tests in parallel with each other, but rather executing tests in parallel with development. I think we'll have to give it a try!

This is pretty much the logic that NCrunch uses, afaik.

@Bruno Juchli: I think (because we discussed this in my company and I was assigned the task of analyzing the problem) there is only one "good" solution when using distributed source control (with a centralized one you always have gated check-ins): one branch per commit/feature (created automatically on the server side, preferably via a web UI), push to that branch, and let the server handle merging, merge conflict detection, etc. into a stable branch. Also possible: aggregating commits into single ones (so devs can push "dirty" commits at the end of each day without polluting the commit log on master, and once they use a keyword like "#final", the server aggregates them and rewrites the history), and warning in advance when there are potential merge conflicts with another dev's branch. The key here is that the branch (could also be feature branches).

I'm sure that's the way to go. Unfortunately, someone has to create such a system, and it's a bit harder to rewrite history with Mercurial (which my company uses) than with git, but it is possible thanks to recent advancements. I think the ultimate solution is such a "branch management" system. As is usually the case with dev problems, we lack the proper tools.

OK, this is a little off-topic, but seeing the sheer number of your tests, I have to ask: has anyone experienced OutOfMemory exceptions from automated TFS test runs?

We have over 12 thousand tests in our test suite, of which ~8K are xUnit (+NSubstitute +FluentAssertions) and ~4K are NUnit (+Moq). I have a hard time getting this mix of tests up and running. Filtering out the integration tests would help, too.

Csaba, No, we didn't have any issues related to that.
