No, really for real this time. I opened my mailbox today to find a very interesting message from the NHibernate commit logs:

NH-258 - Custom SQL support (including stored procedures)

It may look fairly innocent comment, but it has quite an impact on several nay-sayers that will clutch stored procedures until you pry them out of their cold dead hands. Also, sometimes Stored Procedures (or raw SQL) are useful :-).

So, armed with nothing but a toothpick and some shoes' polish, I turned to the task of finding out how to use this wonderous new feature. I started on the Hibernate documentation in the subject

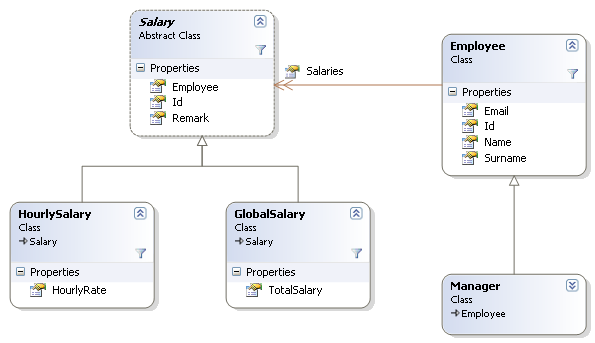

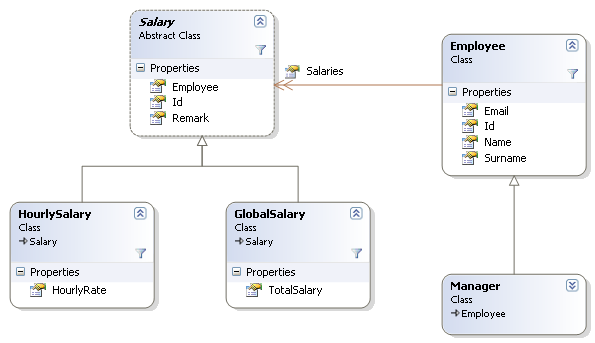

The table model is very simple:

I then create a simple set of stored procedures, all of which were fairly standard, like this one:

ALTER PROCEDURE [dbo].[EmployeesInsert](

@Name nvarchar(50), @Surname nvarchar(50),

@Email nvarchar(50), @Type int, @Id int

)

AS

INSERT INTO [Employees]

(

[Id], [Name], [Surname], [Email], [Type]

)

VALUES

(

@Id, @Name, @Surname, @Email, @Type

)

RETURN @@Error

I have created those procedures via code generations, but I think that you'll agree that most existing stored procedures will have a similar look. I have the following SPs:

· EmployeesInsert

· EmployeesUpdate

· EmployeesDelete

· SalariesInsert

· SalariesUpdate

· SalariesDelete

The only slightly strange thing about these procedures is the position of the ID parameter. While in most SP it is the first parameter, it seems that NHibernate requires it to be the last parameter on the stored procedure. Also note that specifying SET NOCOUNT ON will cause NHibernate to think that it didn't manage to save the row, and throw. The SP must return a count of 1.

So, given those, how am I going to proceed?

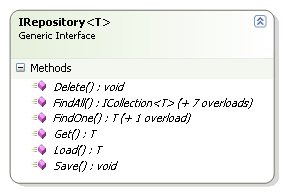

First thing that I should do it to update the XSD schemas, this is so I could get intellisense inside VS.Net when I edit the mapping files. Then, let us create our entites:

This is a fairly simple model to grasp, but hard to implement. I'll not show you everything, but I'll at least put you on the right path. Let us start with mapping the Employee class:

<class name="Employee"

table="Employees"

discriminator-value="1"

lazy="true">

<id name="Id"

unsaved-value="0">

<generator class="assigned"/>

</id>

<discriminator column="Type"/>

<property name="Name"/>

<property name="Surname"/>

<property name="Email"/>

<set name="Salaries"

generic="true">

<key column="EmployeeId"/>

<one-to-many class="Salary"/>

<loader query-ref="allSalariesForEmployee"/>

</set>

<subclass name="Manager"

discriminator-value="2">

<sql-insert>

exec EmployeesInsert ?,?,?,2,?

</sql-insert>

</subclass>

<sql-insert>

exec EmployeesInsert ?,?,?,1,?

</sql-insert>

</class>

This is mostly standard NHibernate mapping, with some interesting additions, notice the generic="true" in the set defination? That means that you can use ISet<T> without using NHibernate.Generics.

Now, for now, let us ignore the <loader> in the set element, and look at the bottom of the code, where we find a <sql-insert> statement, which directs NHibernate to use the text inside when it is inserting an entity to the database. A couple of points about the question marks before we continue:

- The identifier of this entity is always the last one.

- The rest of the fields appear in the order that they were defined in the XML.

- The last two statements are both wrong, in order to know, you need to check the generated SQL that NHibernate generates, and only then you will know. The issue here is that it gets quite complex with inheritance hierarcies, multi column fields, etc.

NHibernate transforms this call to:

exec

EmployeesInsert @p0,@p1,@p2,1,@p3 As I have no real control on the order of the parameters, my SP needs to be changed approprirately (above it appears after the changes). Now, about managers, you noticed that I specified a different statement there? That allows me to pass the different discriminator value (or maybe call ManagersInsert), etc.

Before we get to the set mystery, let us take a look at how I mapped Salary:

<class name="Salary"

discriminator-value="-1">

<id name="Id">

<generator class="assigned"/>

</id>

<discriminator column="Type"/>

<property name="Remark"/>

<many-to-one name="Employee"

class="Employee"

column="EmployeeId"/>

<subclass name="HourlySalary"

discriminator-value="1">

<property name="HourlyRate"

column="ParameterOne"/>

<sql-insert>

exec SalariesInsert ?,?,?,1,?

</sql-insert>

</subclass>

<subclass name="GlobalSalary"

discriminator-value="2">

<property name="TotalSalary"

column="ParameterOne"/>

<sql-insert>

exec SalariesInsert ?,?,?,2,?

</sql-insert>

</subclass>

<sql-insert>

raiserror ('Salary is an abstract type, can''t insert it!',10,1)

</sql-insert>

</class>

This is not very different, isn't it? Notice that because salary is an abstract type, I raise an error if an attempt is made to save it (not really neccecary, since this is not possible, but whatever). Then, each subclass defined each own insert statement.

One thing to notice here is the parameter orderring. It appears that in this case the orderring is in the order defined in the mapping, in reverse hierarchal depth. Which means that the members of the subclass appear first, and then the members of the parent class, and last (but not least) the id.

For reference, here is the SalariesInsert procedure:

ALTER PROCEDURE [dbo].[SalariesInsert]

(

@ParameterOne int, @Remark nvarchar(50),

@EmployeeId int, @Type int, @Id int

)

AS

INSERT INTO [Salaries]

(

[Id], [Type], [ParameterOne], [Remark], [EmployeeId]

)

VALUES

(

@Id, @Type, @ParameterOne, @Remark, @EmployeeId

)

RETURN @@Error

Now, let us check that facinating association between employees and salaries:

<loader query-ref="allSalariesForEmployee"/>

This means that when NHibernate wants to load the employees' salaries, it needs to execute the query (not SP) that is specified. In this case, here is the query:

<sql-query name="allSalariesForEmployee">

<load-collection alias="salaries"

role ="Employee.Salaries"/>

exec [SalariesByEmployeeId] ?

</sql-query>

Note that we need to specify which collection this query resolves. The SalariesByEmployeeId is simply:

ALTER PROCEDURE [dbo].[SalariesByEmployeeId]

(

@EmployeeId int

)

AS

SELECT

[Id], [Type], [ParameterOne], [Remark], [EmployeeId]

FROM [Salaries]

WHERE [EmployeeId] = @EmployeeId

So, that is all that I have to say so far, you can check Hibernate's documentation for all of the details, and check out the latest NHibernate code to play with it.

This is probably not going to help you map directly to pre-existing stored procedures, but it make it that much easier to work in those envrionment where you just can't get the data from the tables (for various reasons, not the least of which is the 160 columns table), or where Stored Procedures are mandated.

I'm very excited by this because I know that there are several places where this can be very helpful. I love PIVOT, and it was annoying not to be able to use it from NHibernate.

The disadvantages - You take away from NHibernate the ability to comprehend the structure of the tables, this mean that it can't do joins, eager fetching, etc. This is extremely important capability that just vanished. Likewise for queries, NHibernate's abilities to query your objects is being severly limited using this method of operation.