Recently, we added a way to track alerts across all the sessions the request. This alert will detect whenever you are making too many database calls in the same request.

But wait, don’t we already have that?

Yes, we do, but that was limited to the scope of one session. there is a very large set of codebases where the usage of OR/Ms is… suboptimal (in other words, they could take the most advantage of the profiler abilities to detect issues and suggest solutions to them), but because of the way they are structured, they weren’t previously detected.

What is the difference between a session and a request?

Note: I am using NHibernate terms here, but naturally this feature is shared among all profiler:

A session is the NHibernate session (or the data/object context in linq to sql / entity framework), and the request is the HTTP request or the WCF operation. If you had code such as the following:

public T GetEntity<T>(int id)

{

using (var session = sessionFactory.OpenSession())

{

return session.Get<T>(id);

}

}

This code is bad, it micro manages the session, it uses too many connections to the database, it … well, you get the point. The problem is that code that uses this code:

public IEnumerable<Friends> GetFriends(int[] friends)

{

var results = new List<Friends>();

foreach(var id in friends)

results.Add(GetEnttiy<Friend>(id));

return results;

}

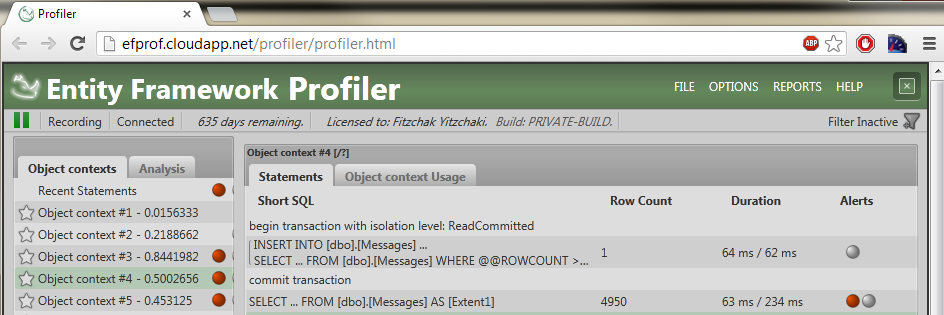

The code above would look like the following in the profiler:

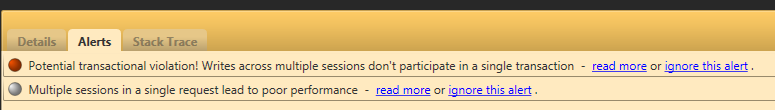

As you can see, each call is in a separate session, and previously, we wouldn’t have been able to detect that you have too many calls (because each call is a separate session).

Now, however, we will alert the user with a too many database calls in the same request alerts.