I just finished spending half a day implementing support for mixed mode authentication in my application. I am putting it here mostly to remind myself how it is done, since it was a pretty involved process.

As usual, the requirement was that most of the users would use Windows Authentication in a single sign-on fashion, and some users would get a login screen.

I am using Forms Authentication, and I want to keep it as simple as possible. After some searching, it seems that most of the advice on the web involves building two sites and transferring credentials between them.

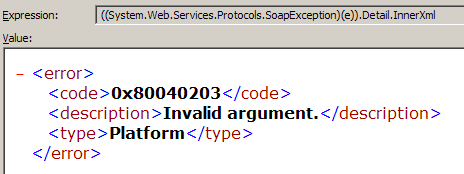

The best places to look are probably this image, which explains how Forms Authentication works, and these two posts by Craig Andera: #1, #2. After reading those, I had a much better picture of what I needed to do.

This requires several steps that must be coordinated with each other for it to work:

- Set up IIS for Anonymous + Integrated security.

- In the web.config, specify forms authentication.

- In the Login Controller, you need to check whether the user is a candidate for Windows authentication. In my case, it is decided according to IP ranges, but your case may be different.

- If the user can use Windows authentication, you need to send back a 401 (Unauthorized) status code, so the browser will respond with the user's Windows credentials.

- Here is where it gets a bit hairy. You can't do it from the login controller, because the FormsAuthenticationModule will intercept the 401 and turn it into a redirect to the login page.

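- You need to create an HTTP module and register it last in the httpModules section. Its EndRequest handler then runs after the FormsAuthenticationModule has had its turn, so it can set the 401 status code safely. I used HttpContext.Items to pass that request from the controller to the module.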

- When the browser repeats the request with its credentials and Windows authentication succeeds, the user name is available in ServerVariables["LOGON_USER"].

- Create a Forms Authentication cookie as usual, and carry on with your life.
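The post doesn't show how the IP-range decision in the login controller is made. One way to do it, assuming the ranges are IPv4 CIDR strings such as `10.0.0.0/8` (the real settings format isn't shown), is to compare the masked network prefixes. `IpRange`, `IsInRange` and `IsInAnyRange` are hypothetical names for this sketch, not part of the original code:

```csharp
using System.Net;
using System.Net.Sockets;

// Hypothetical helper: decides whether a client IP falls in one of the
// configured ranges. Assumes IPv4 ranges in CIDR notation ("10.0.0.0/8").
public static class IpRange
{
    public static bool IsInAnyRange(IPAddress address, string[] cidrRanges)
    {
        foreach (string range in cidrRanges)
        {
            if (IsInRange(address, range))
                return true;
        }
        return false;
    }

    public static bool IsInRange(IPAddress address, string cidrRange)
    {
        string[] parts = cidrRange.Split('/');
        IPAddress network = IPAddress.Parse(parts[0]);
        // A range without a prefix length is treated as a single address.
        int prefixLength = parts.Length > 1 ? int.Parse(parts[1]) : 32;

        // This sketch only handles IPv4; anything else is "not in range".
        if (address.AddressFamily != AddressFamily.InterNetwork ||
            network.AddressFamily != AddressFamily.InterNetwork)
            return false;

        // C# masks uint shift counts to 5 bits, so "<< 32" would act as "<< 0";
        // a /0 prefix therefore needs an explicit all-zero mask.
        uint mask = prefixLength == 0 ? 0u : uint.MaxValue << (32 - prefixLength);
        return (ToUInt32(address) & mask) == (ToUInt32(network) & mask);
    }

    private static uint ToUInt32(IPAddress address)
    {
        // GetAddressBytes returns the bytes in network (big-endian) order.
        byte[] b = address.GetAddressBytes();
        return ((uint)b[0] << 24) | ((uint)b[1] << 16) | ((uint)b[2] << 8) | b[3];
    }
}
```

In the login controller you could then call something like `IpRange.IsInAnyRange(IPAddress.Parse(Request.UserHostAddress), ranges)`. Note that this sketch deliberately returns `false` for IPv6 addresses, so IPv6 clients would never match a range.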

The main issue here, as far as I am concerned, was making sure that I did it in a maintainable way: several disparate actions take place, and all of them are needed to make it work.

It took a while before I could get to grips with what was going on over the wire, so here is the code:

```csharp
private void HandleInternalUsersAndSingleSignOn()
{
    // Semi-internal users and the like are excluded from Windows authentication.
    if (Context.ClientIpIsIn(Settings.Default.ExcludeFromWindowsAuthetication))
        return;

    string logonUser = Context.GetLogonUser();
    if (string.IsNullOrEmpty(logonUser))
    {
        // Internal installation and an empty user means that we have not yet
        // authenticated, so we register a request to send a 401 (Unauthorized)
        // to the client, so it will provide us with its Windows credentials.
        // We have to register a request for the 401, which will be handled by
        // the Add401StatusCodeHttpModule on EndRequest, because we are also
        // using FormsAuthentication and need to bypass its interception of
        // the 401 status code.
        Context.SetContextVaraible(Constants.Request401StatusCode, true);
        Context.EndResponse();
        // Don't fall through to the login with an empty user name if
        // EndResponse() does not abort the request.
        return;
    }

    // Will redirect to the destination page if successful.
    LoginAuthenticatedUser(logonUser);
}
```
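The `Add401StatusCodeHttpModule` referenced in the comments isn't shown. Here is a minimal sketch of what it might look like, assuming the flag is a `bool` stored in `HttpContext.Items` (`RequestKey` is a hypothetical stand-in for `Constants.Request401StatusCode`, whose value isn't shown) and assuming IIS adds the Windows authentication challenge headers to the 401 because Integrated Windows authentication is enabled:

```csharp
using System;
using System.Web;

// Hypothetical sketch of a module that turns a "please send 401" flag,
// left in HttpContext.Items by the login controller, into a real 401.
public class Add401StatusCodeHttpModule : IHttpModule
{
    // Assumed key; stands in for Constants.Request401StatusCode.
    public const string RequestKey = "Request401StatusCode";

    public void Init(HttpApplication application)
    {
        // EndRequest handlers run in module registration order, so registering
        // this module last lets it act after FormsAuthenticationModule.
        application.EndRequest += OnEndRequest;
    }

    private static void OnEndRequest(object sender, EventArgs e)
    {
        HttpApplication application = (HttpApplication)sender;
        ApplyRequestedStatusCode(application.Context);
    }

    // Split out from the event handler so the logic can be exercised on its own.
    public static void ApplyRequestedStatusCode(HttpContext context)
    {
        object requested = context.Items[RequestKey];
        if (requested is bool && (bool)requested)
        {
            // 401 Unauthorized: the browser retries with Windows credentials.
            context.Response.StatusCode = 401;
        }
    }

    public void Dispose()
    {
    }
}
```

If it is registered as the last entry in the `<httpModules>` section, its `EndRequest` handler runs after `FormsAuthenticationModule`'s, so the forms module never sees the 401 and can't turn it into a redirect to the login page.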