Before I get to the entire story, a few things:

- The SignalR team is amazingly helpful.

- SignalR isn’t released yet; it is at version 0.5.

- On top of that, I was using the very latest code, not even the official 0.5 release.

- My use cases are probably far from what SignalR was designed to support.

- A lot of the problems were actually my fault.

One of the features for 1.2 is the changes feature, a way to subscribe to notifications from the databases, so you won’t have to poll for them. Obviously, this sounded like a good candidate for SignalR, so I set out to integrate SignalR into RavenDB.

Now, that ain’t as simple as it sounds.

- SignalR relies on Newtonsoft.Json, which RavenDB also used to use. Version compatibility problems meant that we ended up internalizing this dependency, so we had to resolve that first.

- RavenDB runs both in IIS and in its own (HttpListener based) host. SignalR supports both as well, but makes its own assumptions about how it is hosted.

- We need to minimize connection counts.

- We need to support logic & filtering for events on both server side and client side.
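To make the filtering requirement concrete, here is a minimal sketch of a subscription that the server could evaluate before sending anything, so uninteresting events never cross the wire. The shape of `ChangeNotification`, as well as `Subscription`, `WatchDocument` and `WatchPrefix`, are hypothetical names for illustration rather than RavenDB’s actual API, and the case-insensitive comparison is an assumption.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical notification shape; the real RavenDB type may differ.
public class ChangeNotification
{
    public string Database { get; set; }
    public string DocumentId { get; set; }
}

// Hypothetical subscription: what a single client is interested in.
public class Subscription
{
    private readonly List<Func<ChangeNotification, bool>> filters =
        new List<Func<ChangeNotification, bool>>();

    public Subscription(string database) { Database = database; }

    public string Database { get; }

    // Interested in one specific document (ids compared case-insensitively, an assumption).
    public Subscription WatchDocument(string id)
    {
        filters.Add(n => string.Equals(n.DocumentId, id, StringComparison.OrdinalIgnoreCase));
        return this;
    }

    // Interested in every document whose id starts with the prefix.
    public Subscription WatchPrefix(string prefix)
    {
        filters.Add(n => n.DocumentId != null &&
                         n.DocumentId.StartsWith(prefix, StringComparison.OrdinalIgnoreCase));
        return this;
    }

    // Matches when the notification is from the subscribed database and passes
    // at least one filter; no filters means "everything in this database".
    public bool Matches(ChangeNotification n)
    {
        if (!string.Equals(n.Database, Database, StringComparison.OrdinalIgnoreCase))
            return false;
        return filters.Count == 0 || filters.Any(f => f(n));
    }
}
```

The same `Matches` predicate could also run on the client, which is one way to share filtering logic between both sides.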

The first two problems we solved by brute force. We internalized the SignalR codebase and converted its Newtonsoft.Json usage to RavenDB’s internalized version. Then I modified one of the SignalR hosts so that it integrates with the way RavenDB works.

So far, that was a relatively straightforward process. Then we had to write the integration parts. I posted about the external API yesterday.

My first attempt to write it was something like this:

```csharp
using System;
using System.Threading.Tasks;
// ...plus the (internalized) SignalR and RavenDB namespaces

public class Notifications : PersistentConnection
{
    // Raised when the client disconnects, so the server can unregister this connection.
    public event EventHandler Disposed = delegate { };

    private HttpServer httpServer;
    private string theConnectionId;

    // Pushes a change notification to this connection's client.
    public void Send(ChangeNotification notification)
    {
        Connection.Send(theConnectionId, notification);
    }

    public override void Initialize(IDependencyResolver resolver)
    {
        httpServer = resolver.Resolve<HttpServer>();
        base.Initialize(resolver);
    }

    protected override Task OnConnectedAsync(IRequest request, string connectionId)
    {
        this.theConnectionId = connectionId;

        var db = request.QueryString["database"];
        if (string.IsNullOrEmpty(db))
            throw new ArgumentException("The database query string element is mandatory");

        // We are only registered for events while the client is connected.
        httpServer.RegisterConnection(db, this);
        return base.OnConnectedAsync(request, connectionId);
    }

    protected override Task OnDisconnectAsync(string connectionId)
    {
        Disposed(this, EventArgs.Empty);
        return base.OnDisconnectAsync(connectionId);
    }
}
```

This is the very first attempt. I then added the ability to add items of interest via the connection string, but that is the basic idea.

It worked: I was able to write the feature, and aside from some trouble I had grasping a few concepts, everything was wonderful. We had passing tests, and I moved on to the next step.

Except that… sometimes… those tests failed. Once every so often, and that indicates a race condition.

It took a while to figure out what was going on. Basically, SignalR sometimes uses a long polling transport to send messages. Note that in the code above, we are registered for events only while we are connected. With long polling (and, in general, with persistent connections that may come and go), it is quite common to have periods of time when you aren’t actually connected.

The race condition would happen because of the following sequence of events:

- Connected

- Got a message (with long polling, this causes a disconnect)

- Disconnect

- A message is raised while the client is not connected; the message is gone

- Connected

- No messages for you
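The sequence above can be replayed deterministically. This sketch uses a hypothetical `NaiveBroadcaster` that, like the first attempt, only delivers to connections registered at the moment a message is raised; `ReplayRace` walks through the same steps for a single long polling client and returns what that client actually received.

```csharp
using System.Collections.Generic;

// Hypothetical broadcaster mimicking the first attempt: a message is
// delivered only to connections that are registered right now.
public class NaiveBroadcaster
{
    private readonly HashSet<string> connected = new HashSet<string>();
    private readonly Dictionary<string, List<string>> received =
        new Dictionary<string, List<string>>();

    public void Connect(string connectionId)
    {
        connected.Add(connectionId);
        if (!received.ContainsKey(connectionId))
            received[connectionId] = new List<string>();
    }

    public void Disconnect(string connectionId) => connected.Remove(connectionId);

    public void Raise(string message)
    {
        foreach (var id in connected)
            received[id].Add(message);
    }

    public IReadOnlyList<string> Received(string connectionId) => received[connectionId];

    // Replays the connect / message / disconnect / message / reconnect sequence.
    public static IReadOnlyList<string> ReplayRace()
    {
        var bus = new NaiveBroadcaster();
        bus.Connect("client");
        bus.Raise("users/1 changed");   // delivered; the long poll completes...
        bus.Disconnect("client");       // ...so the client is briefly disconnected
        bus.Raise("users/2 changed");   // raised in the gap: nobody is registered
        bus.Connect("client");          // the next poll arrives
        return bus.Received("client");
    }
}
```

`ReplayRace()` returns only `"users/1 changed"`; the message raised between polls never reaches the client.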

I want to emphasize that this particular issue is all me. I was the one misusing SignalR, and the behavior makes perfect sense.

SignalR actually contains a message bus abstraction for exactly those reasons, and I should have used that. I know that now, but at the time I decided that I was probably using the API at the wrong level, and moved to hubs and groups.
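The idea behind a message bus, as opposed to pushing to whoever happens to be connected, can be sketched with a cursor: every message gets an increasing id, and each poll asks for everything after the last id the client saw. `CursorMessageBus` and `ReadSince` are illustrative names, not SignalR’s actual bus API, and a real bus would also trim old messages instead of keeping them forever.

```csharp
using System.Collections.Generic;
using System.Linq;

// Minimal cursor-based bus: messages are kept with increasing ids, and a
// reader asks for everything newer than the last id it has seen.
public class CursorMessageBus
{
    private readonly object gate = new object();
    private readonly List<KeyValuePair<long, string>> log =
        new List<KeyValuePair<long, string>>();
    private long nextId = 1;

    public long Publish(string message)
    {
        lock (gate)
        {
            var id = nextId++;
            log.Add(new KeyValuePair<long, string>(id, message));
            return id;
        }
    }

    // Returns the messages after lastSeenId and the cursor for the next poll.
    // A client that reconnects with its old cursor gets what it missed.
    public (List<string> Messages, long Cursor) ReadSince(long lastSeenId)
    {
        lock (gate)
        {
            var newer = log.Where(e => e.Key > lastSeenId).ToList();
            var cursor = newer.Count > 0 ? newer[newer.Count - 1].Key : lastSeenId;
            return (newer.Select(e => e.Value).ToList(), cursor);
        }
    }
}
```

With this shape, a client that comes back with its previous cursor receives the message raised while it was between polls, which is exactly the message the first attempt lost.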

In this model, you could connect to the hub, ask to join the group watching a particular document, and voila, we are done. That was the theory, at least. In practice, this was very frustrating. The first major issue was that I just couldn’t get this thing to work.

The relevant code is:

```csharp
return temporaryConnection.Start()
    .ContinueWith(task =>
    {
        task.AssertNotFailed();
        hubConnection = temporaryConnection;
        proxy = hubConnection.CreateProxy("Notifications");
    });
```

Note that I create the proxy after the connection has been established.

That turned out to be the problem: you have to create the proxy first, then call Start(). If you don’t, SignalR will look like it is working fine, but will ignore all hub calls. I had to trace really deep into the SignalR codebase to figure that one out.

My opinion (already communicated to the team) is that starting a hub connection without a proxy is probably an error and should throw.
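The suggested behavior is easy to express. The following is a hypothetical client-side connection, not the SignalR client API, that refuses to start without any proxies and refuses to create a proxy once started. Presumably the client tells the server which hubs it uses when the connection starts, which would explain why a late proxy is silently ignored; treat that as an assumption.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical connection that turns the silent failure into an exception.
// Not the SignalR client API; names are made up for this sketch.
public class GuardedHubConnection
{
    private readonly HashSet<string> proxies =
        new HashSet<string>(StringComparer.OrdinalIgnoreCase);
    private bool started;

    public void CreateProxy(string hubName)
    {
        if (started)
            throw new InvalidOperationException(
                "Proxy '" + hubName + "' was created after Start(); calls on it would be ignored.");
        proxies.Add(hubName);
    }

    public Task Start()
    {
        if (proxies.Count == 0)
            throw new InvalidOperationException(
                "Start() called without any hub proxies; call CreateProxy first.");
        started = true;
        // A real client would negotiate here and announce the hubs in 'proxies'.
        return Task.CompletedTask;
    }
}
```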

Once we had that fixed, things started to work, and the tests ran.

Most of the time, that is. Once in a while, the tests would fail. Again, the issue was a race condition. But this time I wasn’t doing anything wrong; I was using SignalR’s API straight out of the docs. This turned out to be a probable race condition inside InProcessMessageBus: because multiple threads are involved, registering for a group inside SignalR isn’t always visible to the next request.

That was extremely hard to debug.

Next, I decided to do away with hubs. By this time, I had a much better understanding of the way SignalR worked, so I went back to persistent connections and simply implemented the message dispatch in my own code, rather than relying on SignalR groups.
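Doing the dispatch in our own code can look roughly like this minimal sketch, assuming each connection registers a filter and a notification is fanned out to every connection whose filter accepts it. `NotificationDispatcher` is a hypothetical name. Backing it with `ConcurrentDictionary` means a subscription made on one thread is visible to a `Dispatch` that runs after `Subscribe` has returned, which is the property the group registration race above lacked.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

// Hypothetical dispatcher: one filter per connection, thread-safe registration.
public class NotificationDispatcher<T>
{
    private readonly ConcurrentDictionary<string, Func<T, bool>> subscriptions =
        new ConcurrentDictionary<string, Func<T, bool>>();

    // Adds or replaces the filter for a connection.
    public void Subscribe(string connectionId, Func<T, bool> filter) =>
        subscriptions[connectionId] = filter;

    public void Unsubscribe(string connectionId) =>
        subscriptions.TryRemove(connectionId, out _);

    // Returns the connection ids that should receive the notification; the
    // caller hands the message to each connection's transport or queue.
    public List<string> Dispatch(T notification)
    {
        var targets = new List<string>();
        foreach (var pair in subscriptions)
            if (pair.Value(notification))
                targets.Add(pair.Key);
        return targets;
    }
}
```

Because one connection can hold a filter covering many documents, this approach also fits the goal of keeping connection counts low.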

That worked great. The tests even passed more or less consistently.

The problem was that they also crashed the unit testing process, because of leaked exceptions. Here is one such case, in HubDispatcher.OnReceivedAsync():

```csharp
return resultTask
    .ContinueWith(_ => base.OnReceivedAsync(request, connectionId, data))
    .FastUnwrap();
```

Note the “_” parameter, a convention I use as well to denote a parameter that I don’t care about. The problem here is that this parameter is the antecedent task, and if that task failed and nobody observes its exception, you have a major problem: on .NET 4.0, an unobserved task exception is rethrown when the task is finalized, and that will crash your process. In 4.5, such exceptions are ignored by default and can be safely left alone, but RavenDB runs on 4.0.
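One way to avoid dropping the antecedent’s failure is a small continuation helper that checks `IsFaulted` and `IsCanceled` before running the next step, and passes the outcome along through a `TaskCompletionSource`. `Then` is a hypothetical helper name, not the fix that actually went into the code; it only relies on APIs that already exist on .NET 4.0.

```csharp
using System;
using System.Threading.Tasks;

public static class TaskContinuations
{
    // Like ContinueWith(_ => next()).Unwrap(), except that a faulted or
    // canceled antecedent is propagated instead of silently ignored.
    public static Task Then(this Task antecedent, Func<Task> next)
    {
        var tcs = new TaskCompletionSource<object>();
        antecedent.ContinueWith(t =>
        {
            if (t.IsFaulted)
            {
                // Reading t.Exception marks the exception as observed.
                tcs.TrySetException(t.Exception.InnerExceptions);
                return;
            }
            if (t.IsCanceled)
            {
                tcs.TrySetCanceled();
                return;
            }
            try
            {
                next().ContinueWith(n =>
                {
                    if (n.IsFaulted) tcs.TrySetException(n.Exception.InnerExceptions);
                    else if (n.IsCanceled) tcs.TrySetCanceled();
                    else tcs.TrySetResult(null);
                }, TaskContinuationOptions.ExecuteSynchronously);
            }
            catch (Exception ex)
            {
                // next() itself threw before returning a task.
                tcs.TrySetException(ex);
            }
        }, TaskContinuationOptions.ExecuteSynchronously);
        return tcs.Task;
    }
}
```

With it, the snippet above would become `return resultTask.Then(() => base.OnReceivedAsync(request, connectionId, data));`. The returned task still has to be observed by whoever receives it; the helper only makes sure the failure is passed along instead of lost.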

So I found those places and I fixed them.

And then we ran into hangs. Specifically, we had issues with disposing connections, and sometimes with not disposing them, and…

That was the point where I decided to cut SignalR out.

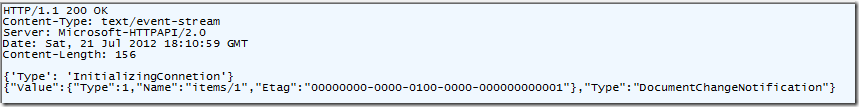

I like the SignalR model, and most of the codebase is really good. But it is just not in the right shape for what I needed. By this time, I already had a pretty good idea of how SignalR operates, and it was a matter of a few hours to get it working without SignalR. RavenDB now sports a streamed endpoint that you can register yourself to, and we have a side channel that you can use to send commands to the server. It might not be as elegant, but it is simpler by a few orders of magnitude, and once we figured that out, we had a full blown working system on our hands. All the tests pass, we have no crashes, yay!
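The details of the replacement are left for a later post, so this is only a rough sketch of the general streamed endpoint idea, under the assumption that each notification is written as one line to a response that stays open and is flushed after every message. `StreamedEndpoint`, `Enqueue`, `Pump` and the newline framing are all hypothetical; in a real host, `output` would be the HttpListener or IIS response stream.

```csharp
using System.Collections.Concurrent;
using System.IO;
using System.Text;
using System.Threading;

// Hypothetical streamed endpoint: notifications are queued by the server and
// written to a long-lived response, one line per notification.
public class StreamedEndpoint
{
    private readonly BlockingCollection<string> pending = new BlockingCollection<string>();

    // Called by whoever raises the event; never blocks on a slow client.
    public void Enqueue(string notification) => pending.Add(notification);

    // No more notifications will be produced (for example, the client went away).
    public void Complete() => pending.CompleteAdding();

    // Runs for the lifetime of the connection, writing and flushing each message.
    public void Pump(Stream output, CancellationToken token)
    {
        using (var writer = new StreamWriter(output, new UTF8Encoding(false), 1024, leaveOpen: true))
        {
            foreach (var notification in pending.GetConsumingEnumerable(token))
            {
                writer.Write(notification);
                writer.Write('\n');
                writer.Flush(); // push each message to the client right away
            }
        }
    }
}
```

Separating `Enqueue` from `Pump` keeps the code that raises events from ever blocking on a slow client, and `Complete` gives disconnects a single, explicit place to end the stream.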

I’ll describe exactly how we did it in a future post.
